CLIPood: Generalizing CLIP to Out-of-Distributions [paper]

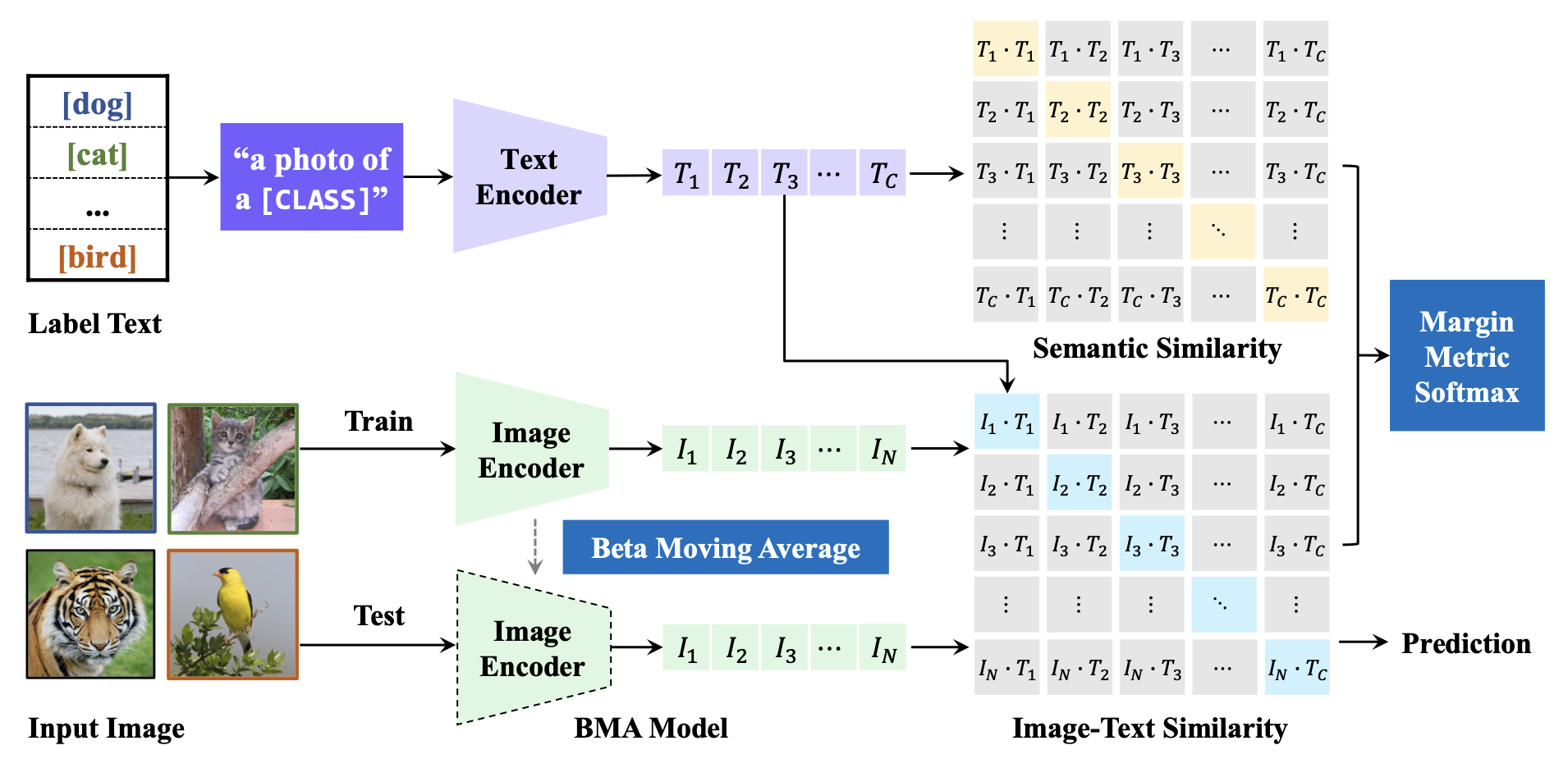

To maintain CLIP's OOD generalizability when adapting CLIP to downstream tasks, we propose CLIPood with the following features:

- A better fine-tuning paradigm that utilizes knowledge in the text modality.

- Margin metric softmax to exploit semantic relations.

- Beta moving average for balancing pre-trained and task-specific knowledge.

- State-of-the-art performance on three OOD settings.

Figure 1. Overview of CLIPood.
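The feature list above only names the Beta moving average, so here is a rough illustration of the idea rather than the repository's implementation. It assumes each checkpoint along training gets a coefficient from a Beta(β, β) density evaluated at its normalized step position, and that the averaged model is the coefficient-weighted mean of all checkpoints. The function names, the `(k + 0.5) / (T + 1)` position formula, and the use of plain Python lists as "parameters" are all assumptions made for this sketch; please check the paper for the exact definition.

```python
import math
from typing import List


def beta_pdf(x: float, a: float, b: float) -> float:
    """Density of the Beta(a, b) distribution at x in (0, 1)."""
    norm = math.gamma(a + b) / (math.gamma(a) * math.gamma(b))
    return norm * x ** (a - 1) * (1 - x) ** (b - 1)


def bma_coefficients(total_steps: int, beta: float) -> List[float]:
    """Unnormalized coefficient for each checkpoint k = 0..total_steps.

    Assumption: checkpoint k sits at position (k + 0.5) / (total_steps + 1).
    """
    return [
        beta_pdf((k + 0.5) / (total_steps + 1), beta, beta)
        for k in range(total_steps + 1)
    ]


def beta_moving_average(checkpoints: List[List[float]], beta: float) -> List[float]:
    """Coefficient-weighted running average of parameter vectors.

    checkpoints[0] plays the role of the pre-trained weights and
    checkpoints[-1] the final fine-tuned weights.
    """
    coeffs = bma_coefficients(len(checkpoints) - 1, beta)
    avg = list(checkpoints[0])
    coeff_sum = coeffs[0]
    for coeff, params in zip(coeffs[1:], checkpoints[1:]):
        coeff_sum += coeff
        w = coeff / coeff_sum  # share of the newest checkpoint in the running mean
        avg = [a + w * (p - a) for a, p in zip(avg, params)]
    return avg
```

With β < 1 the density is U-shaped, so under these assumptions the earliest (pre-trained) and latest (task-specific) checkpoints receive the largest coefficients, which is one way to read "balancing pre-trained and task-specific knowledge".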

Insert `x = x.type(self.conv1.weight.dtype)` between lines 223 and 224 of `CLIP/clip/models.py`. For details about this modification, please refer to this issue.

- Prepare data. Our datasets are built on the DomainBed and CoOp codebases. To use them, clone both repositories, download the data following their instructions, and arrange the folders as:

      CLIPood/
      |-- CoOp/
      |   |-- data/
      |       |-- caltech-101/
      |       |-- eurosat/
      |       |-- ... # other CoOp datasets
      |   |-- ...
      |-- DomainBed/
      |   |-- domainbed/
      |       |-- data/
      |           |-- domain_net/
      |           |-- office_home/
      |           |-- ... # other DomainBed datasets
      |       |-- datasets.py # the file to update next
      |       |-- ...
      |   |-- ...
      |-- ...
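Before moving on, it can help to confirm that the folders landed where the tree above expects them. The following is a minimal sketch, not part of the repository: `EXPECTED_PATHS` and `missing_paths` are hypothetical names, and the list only covers the entries that the tree spells out explicitly (the elided `...` entries are not checked).

```python
from pathlib import Path
from typing import List

# Entries named explicitly in the folder tree, relative to the CLIPood/ root.
EXPECTED_PATHS = [
    "CoOp/data/caltech-101",
    "CoOp/data/eurosat",
    "DomainBed/domainbed/data/domain_net",
    "DomainBed/domainbed/data/office_home",
    "DomainBed/domainbed/datasets.py",
]


def missing_paths(root: str, expected: List[str] = EXPECTED_PATHS) -> List[str]:
    """Return the expected entries that do not exist under `root`."""
    root_path = Path(root)
    return [rel for rel in expected if not (root_path / rel).exists()]


if __name__ == "__main__":
    # Run from inside the CLIPood/ folder.
    missing = missing_paths(".")
    if missing:
        print("Missing entries:")
        for rel in missing:
            print("  " + rel)
    else:
        print("Folder layout looks complete.")
```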

- (Important!) Replace lines 192-208 in the file `DomainBed/domainbed/datasets.py` with the following code:

      transform = transforms.Compose([
          transforms.Resize(224),
          transforms.CenterCrop(224),
          transforms.ToTensor(),
          transforms.Normalize((0.48145466, 0.4578275, 0.40821073), (0.26862954, 0.26130258, 0.27577711)),
      ])

      augment_transform = transforms.Compose([
          transforms.RandomResizedCrop(224, scale=(0.7, 1.0)),
          transforms.RandomHorizontalFlip(),
          transforms.ToTensor(),
          transforms.Normalize((0.48145466, 0.4578275, 0.40821073), (0.26862954, 0.26130258, 0.27577711)),
      ])

This aims to align the data pre-processing with the CLIP model.
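To make the role of the two number triples concrete: `transforms.Normalize(mean, std)` computes `(value - mean) / std` per channel on the `[0, 1]` tensor produced by `ToTensor()`. The sketch below reproduces that arithmetic for a single RGB pixel in plain Python. The names `CLIP_MEAN`, `CLIP_STD`, and `normalize_pixel` are introduced here for illustration only; the constants are copied from the snippet above.

```python
# Per-channel statistics copied from the Normalize call above.
CLIP_MEAN = (0.48145466, 0.4578275, 0.40821073)
CLIP_STD = (0.26862954, 0.26130258, 0.27577711)


def normalize_pixel(rgb, mean=CLIP_MEAN, std=CLIP_STD):
    """Apply (value - mean) / std to each channel of an RGB pixel in [0, 1]."""
    return tuple((v - m) / s for v, m, s in zip(rgb, mean, std))


if __name__ == "__main__":
    # A pixel equal to the channel means maps to zero in every channel.
    print(normalize_pixel(CLIP_MEAN))
    # Pure white and pure black land on opposite sides of zero.
    print(normalize_pixel((1.0, 1.0, 1.0)))
    print(normalize_pixel((0.0, 0.0, 0.0)))
```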

- Train and evaluate the model. We provide all training configs in `scripts.py` to reproduce our main results. You can also tune them or experiment on your own dataset.

We experiment extensively on three OOD settings (domain shift, open class, in the wild). In all three settings, CLIPood achieves remarkable improvements and reaches state-of-the-art performance.

If you find this repo useful, please cite our paper.

    @inproceedings{shu2023CLIPood,
      title={CLIPood: Generalizing CLIP to Out-of-Distributions},
      author={Yang Shu and Xingzhuo Guo and Jialong Wu and Ximei Wang and Jianmin Wang and Mingsheng Long},
      booktitle={International Conference on Machine Learning},
      year={2023}
    }

If you have any questions or want to use the code, please contact gxz23@mails.tsinghua.edu.cn.

We greatly appreciate the following GitHub repos for their valuable code bases or datasets:

https://github.com/thuml/Transfer-Learning-Library