Goal

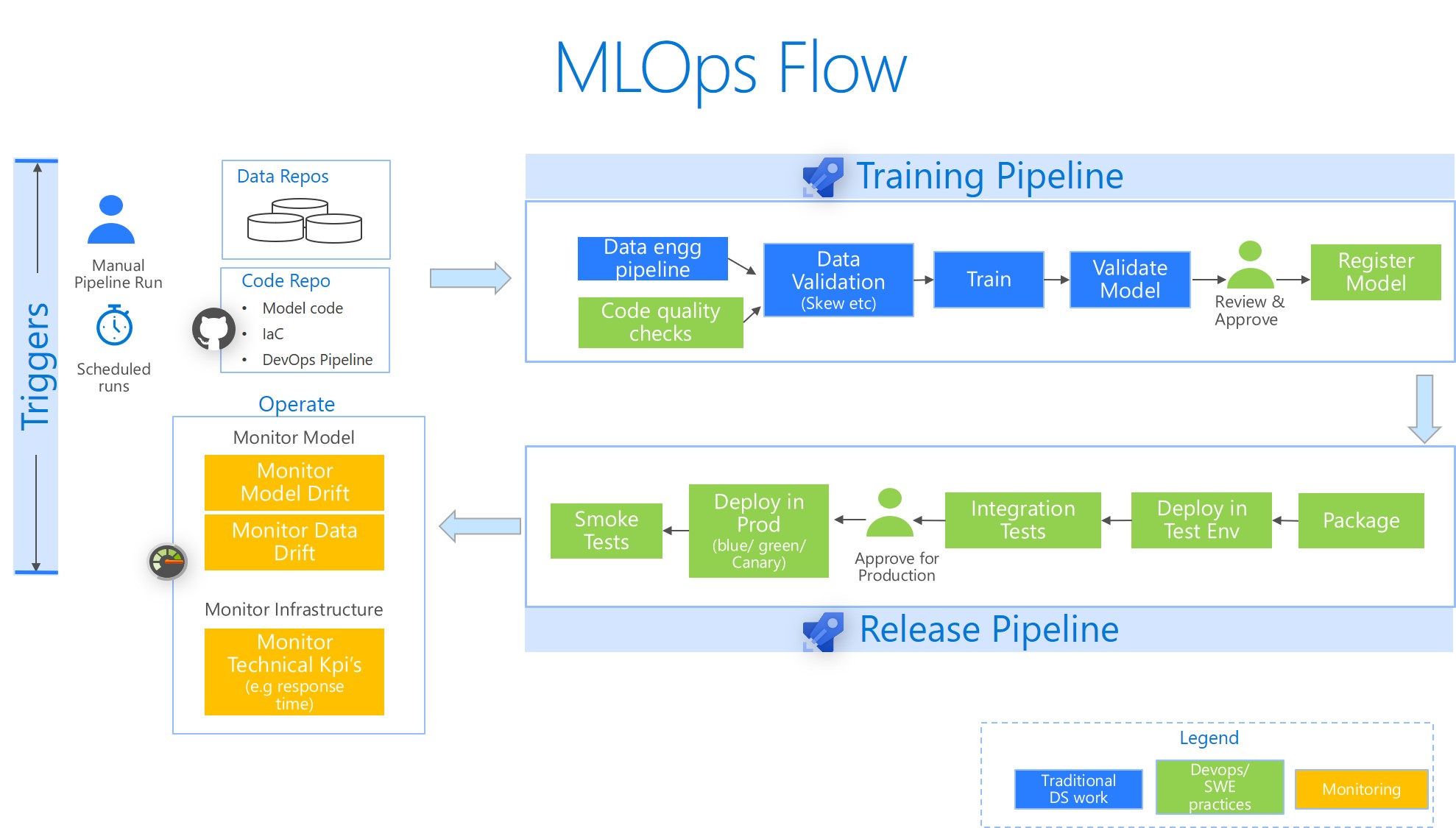

Create a library of modular recipes (parameterized DevOps pipeline templates) that can be composed into custom end-to-end CI/CD pipelines for machine learning.

Why care? To sustain the business benefits of machine learning across an organization, we need discipline, automation, and best practices. Enter MLOps.

Approach

- Minimalistic: The focus is on clean, understandable pipelines and code

- Modular: Atomic recipes that can be referenced and reused (e.g., recipe: Deploy to production after approval)

Status: Project board

Technologies: Azure Machine Learning and Azure DevOps

Technical Aspects

- Fully YAML-based multistage CI/CD pipelines (classic release pipelines in Azure DevOps are not used)

- YAML-based variable templates (no need to configure variable groups through the UI)

- Gated releases (manual approvals)

- CLI-based MLOps: the DevOps pipelines call the Azure ML CLI to interact with the ML platform, which keeps them simple and clean

Get Started

- Understand what we are trying to do (see the notes on our base scenario below)

- Set up the environment

- Run an end-to-end MLOps pipeline

Note: Automated builds on code or asset changes are disabled by setting trigger: none in the pipelines. This avoids triggering accidental builds during your learning phase.

Notes on our Base scenario:

- Directory Structure

  - mlops: contains the DevOps pipelines
    - env_create_pipelines: contains pipelines to provision all the components in the cloud (ML workspace, AKS cluster, etc.)
    - model_pipelines: contains individual pipelines for each of the models
    - recipes: contains parameterized, reusable DevOps pipelines for different scenarios
  - models: directory has the source code for the individual models (training, scoring, etc.)
  - setup: directory contains documentation on usage

- Training: We train a simple LogisticRegression model on the German Credit dataset. We build a scikit-learn pipeline that performs feature engineering, and we export the whole pipeline as the model binary (a .pkl file).

- We use the Azure ML CLI to interact with Azure ML because of its simplicity.
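The training step in the notes above (a scikit-learn pipeline that bundles feature engineering with a LogisticRegression model and is exported as a single pickle file) could look roughly like the sketch below. This is an illustration only, not the repository's actual training script: the column names, the tiny synthetic data frame, the labels, and the model.pkl file name are all assumptions made up for the example.

```python
import os
import pickle
import tempfile

import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Tiny synthetic stand-in for the German Credit data.
# Column names and values are hypothetical, chosen only for illustration.
data = pd.DataFrame({
    "duration_months": [6, 48, 12, 42, 24, 36, 9, 30],
    "credit_amount": [1169, 5951, 2096, 7882, 4870, 9055, 1500, 6200],
    "purpose": ["radio_tv", "radio_tv", "education", "furniture",
                "car", "education", "car", "furniture"],
})
labels = np.array([0, 1, 0, 0, 1, 1, 0, 1])  # made-up labels

numeric_columns = ["duration_months", "credit_amount"]
categorical_columns = ["purpose"]

# Feature engineering: scale numeric columns, one-hot encode categorical ones.
preprocess = ColumnTransformer([
    ("num", StandardScaler(), numeric_columns),
    ("cat", OneHotEncoder(handle_unknown="ignore"), categorical_columns),
])

# Preprocessing and classifier live in one pipeline object,
# so scoring code only needs to load a single artifact.
model = Pipeline([
    ("features", preprocess),
    ("classifier", LogisticRegression(max_iter=1000)),
])
model.fit(data, labels)

# Export the whole fitted pipeline as one binary file.
model_path = os.path.join(tempfile.mkdtemp(), "model.pkl")
with open(model_path, "wb") as f:
    pickle.dump(model, f)

# Scoring side: load the pickle and predict on raw, unprocessed rows.
with open(model_path, "rb") as f:
    restored = pickle.load(f)

predictions = restored.predict(data.head(2))
```

Because the preprocessing steps travel inside the pickled pipeline, the scoring code can pass raw input rows directly to predict without re-implementing the feature engineering.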

Acknowledgements

- The MLOpsPython repo was one of the inspirations for this project. Thanks to its contributors.

- German Credit dataset

Dua, D. and Graff, C. (2019). UCI Machine Learning Repository [http://archive.ics.uci.edu/ml]. Irvine, CA: University of California, School of Information and Computer Science.