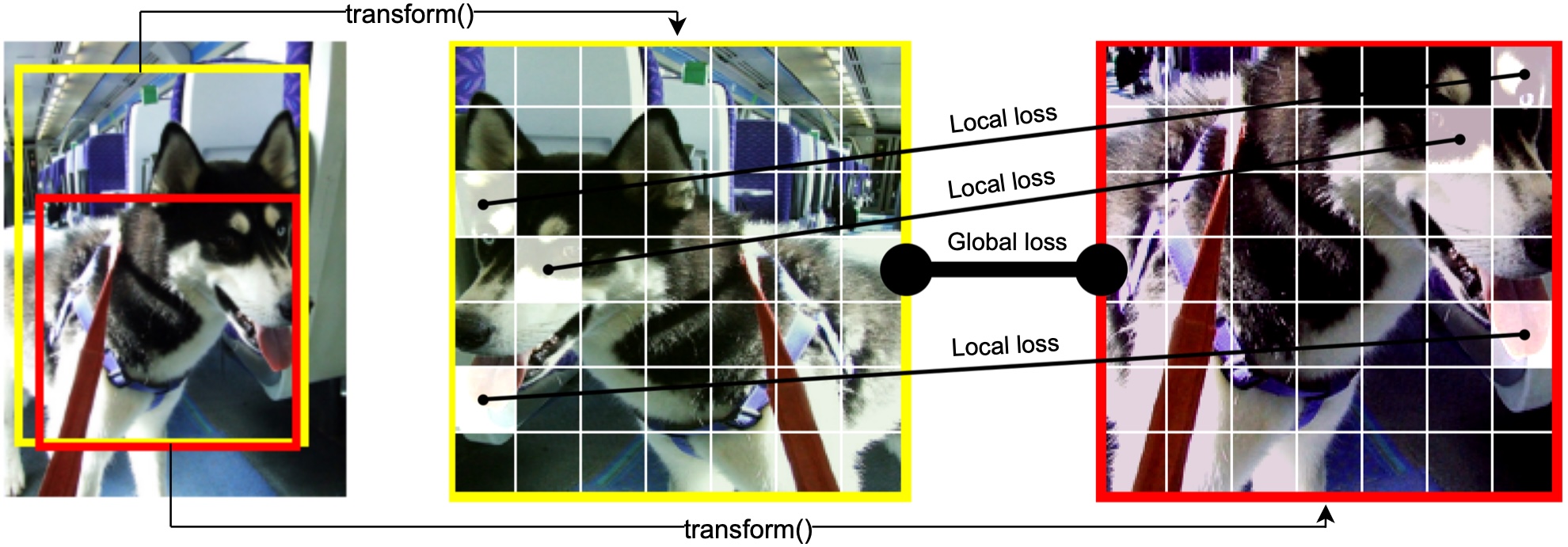

This repo contains the code for the WACV 2023 paper Global-Local Self-Distillation for Visual Representation Learning.

- Install PyTorch with CUDA support (tested with version 1.12.0).
- Install the remaining dependencies:

pip install xmltodict wandb pandas matplotlib yacs timm pyyaml einops

To train a model, run main_global_local_self_distillation.py. The command-line arguments are defined in parser.py.
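The training commands in this README pass booleans as text (`--norm_last_layer false`, `--use_fp16 True`). With `argparse`, a plain `type=bool` would treat any non-empty string, including `false`, as `True`, so such flags need a small converter. The sketch below illustrates the idea on a subset of the flags; the `bool_flag` helper, the `build_parser` function, and all defaults are assumptions made for illustration and are not taken from parser.py.

```python
import argparse


def bool_flag(value):
    """Convert a textual boolean such as 'True' or 'false' (hypothetical helper)."""
    lowered = value.lower()
    if lowered in {"true", "1", "on"}:
        return True
    if lowered in {"false", "0", "off"}:
        return False
    raise argparse.ArgumentTypeError(f"invalid boolean value: {value!r}")


def build_parser():
    # Illustrative subset of the flags used in the training command.
    # Defaults are placeholders, not the repository's actual defaults.
    parser = argparse.ArgumentParser(description="Illustrative subset of training flags")
    parser.add_argument("--arch", type=str, default="swin_tiny")
    parser.add_argument("--batch_size_per_gpu", type=int, default=32)
    parser.add_argument("--epochs", type=int, default=300)
    parser.add_argument("--norm_last_layer", type=bool_flag, default=True)
    parser.add_argument("--use_fp16", type=bool_flag, default=True)
    return parser


# Parse an explicit argument list so the example does not depend on sys.argv.
args = build_parser().parse_args(["--norm_last_layer", "false", "--use_fp16", "True"])
print(args.norm_last_layer, args.use_fp16)
```

Consult parser.py for the authoritative list of arguments, their types, and their defaults.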

Single GPU:

python main_global_local_self_distillation.py --arch swin_tiny --data_path some_path --output_dir some_output_path --batch_size_per_gpu 32 --epochs 300 --teacher_temp 0.07 --warmup_epochs 10 --warmup_teacher_temp_epochs 30 --norm_last_layer false --use_dense_prediction True --cfg experiments/imagenet/swin_tiny/swin_tiny_patch4__window7_224.yaml --use_fp16 True --dense_matching_type distance

Multiple GPUs on one node (8 in this example):

python -m torch.distributed.launch --nproc_per_node=8 main_global_local_self_distillation.py --arch swin_tiny --data_path some_path --output_dir some_output_path --batch_size_per_gpu 32 --epochs 300 --teacher_temp 0.07 --warmup_epochs 10 --warmup_teacher_temp_epochs 30 --norm_last_layer false --use_dense_prediction True --cfg experiments/imagenet/swin_tiny/swin_tiny_patch4__window7_224.yaml --use_fp16 True --dense_matching_type distance

For evaluation, use the k-NN and linear evaluation scripts from EsViT.
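The `--teacher_temp 0.07` and `--warmup_teacher_temp_epochs 30` flags in the training commands suggest a teacher temperature that is warmed up before being held constant. A minimal sketch of such a schedule follows, assuming the linear warm-up used in DINO, from which this code descends. The function name `teacher_temp_schedule` is hypothetical, and the starting value of 0.04 is DINO's default rather than a value confirmed for this repository.

```python
def teacher_temp_schedule(start_temp, final_temp, warmup_epochs, total_epochs):
    """Return one teacher temperature per epoch (hypothetical helper).

    The temperature rises linearly from start_temp to final_temp over the
    first warmup_epochs epochs and stays at final_temp afterwards.
    """
    schedule = []
    for epoch in range(total_epochs):
        if epoch < warmup_epochs - 1:
            # Fraction of the warm-up completed; reaches 1 at epoch warmup_epochs - 1.
            frac = epoch / (warmup_epochs - 1)
            schedule.append(start_temp + frac * (final_temp - start_temp))
        else:
            schedule.append(final_temp)
    return schedule


# Values taken from the training command, except start_temp (assumed).
temps = teacher_temp_schedule(start_temp=0.04, final_temp=0.07,
                              warmup_epochs=30, total_epochs=300)
print(temps[0], temps[29], temps[-1])
```

Whether this repository uses exactly this schedule should be checked against the training script and parser.py.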

If you find our work useful, please consider citing:

@InProceedings{Lebailly_2023_WACV,
    author    = {Lebailly, Tim and Tuytelaars, Tinne},
    title     = {Global-Local Self-Distillation for Visual Representation Learning},
    booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
    month     = {January},
    year      = {2023},
    pages     = {1441-1450}
}

This code is adapted from EsViT, which is in turn adapted from DINO.