Application of machine learning to the Coinbase (GDAX) orderbook using a stacked bidirectional LSTM/GRU model to predict new support and resistance on a 15-minute basis. Currently under heavy development.

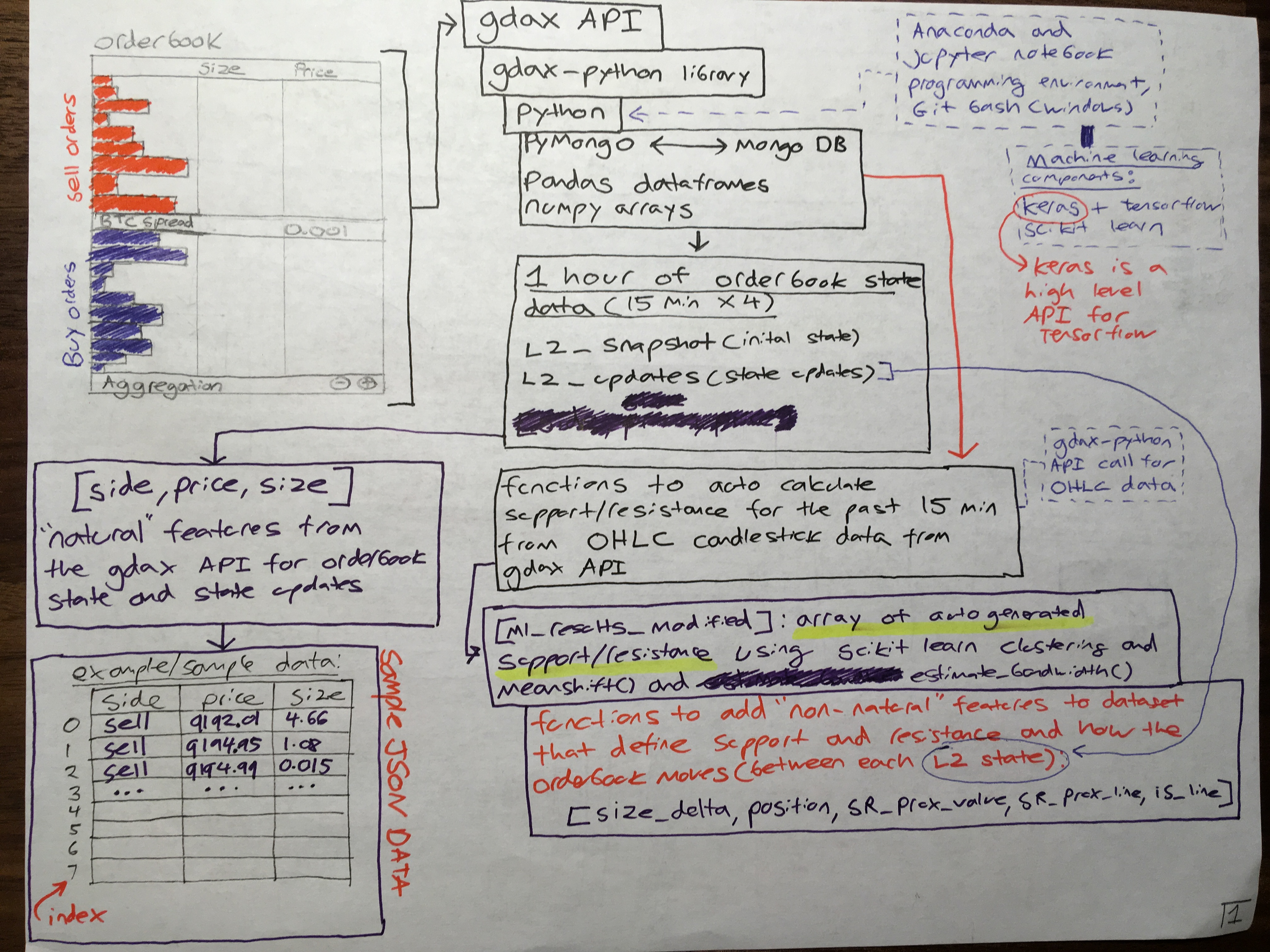

General project API/data structure:

- Anaconda environment strongly recommended

- see requirements.txt for pip, or environment.yml for Anaconda/conda

- Jupyter Notebook

- Python, Pandas, Matplotlib, MongoDB, PyMongo, Git LFS

- Scipy, Numpy, Feather

- Keras, Tensorflow, Scikit-Learn

- Python client for the Coinbase Pro API: [coinbasepro-python](https://github.com/danpaquin/coinbasepro-python)

- CUDA/CUDNN-compatible GPU highly recommended for model training, testing, and predicting

A local GPU was used to greatly accelerate prototyping and building of the ML model(s) for this project, given the size of the dataset and the complexity of the model.

- Requirements to run tensorflow with GPU support

- Nvidia GPU compatible with CUDA Compute Capability 3.0

- Nvidia CUDA 9.0

- Nvidia cuDNN 7.0 (v7.1.2)

- Install cuDNN .dlls in CUDA directory

- Edit environment variables:

- C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v9.0

- pip uninstall tensorflow && pip install --ignore-installed --upgrade tensorflow-gpu

- Default tensorflow install is CPU-only; install CUDA and cuDNN requirements, then uninstall tensorflow and reinstall tensorflow-gpu (pip install --ignore-installed --upgrade tensorflow-gpu)
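After reinstalling, it can help to confirm that TensorFlow actually sees the GPU. The following is a minimal sketch, not part of the project code: `list_gpu_devices` is a hypothetical helper name, and it relies on TensorFlow's `device_lib.list_local_devices()`. If TensorFlow is not installed, it simply reports no GPUs.

```python
def list_gpu_devices():
    """Return the names of GPU devices TensorFlow can see (empty list if none)."""
    try:
        # device_lib lists every device TensorFlow has registered (CPU and GPU)
        from tensorflow.python.client import device_lib
    except ImportError:
        # TensorFlow is not installed in this environment
        return []
    return [d.name for d in device_lib.list_local_devices()
            if d.device_type == "GPU"]


if __name__ == "__main__":
    gpus = list_gpu_devices()
    print("GPUs visible to TensorFlow:", gpus or "none (CPU-only)")
```

An empty result after installing tensorflow-gpu usually points to a CUDA/cuDNN version mismatch or a missing environment variable.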

Latest notebook file(s) with project code:

9_data_pipeline_development.ipynb:

- Development of data pipelines and optimization of data from MongoDB instance to ML model pretraining

- Removal of deprecated packages + base package version upgrade (i.e. Pandas)

- Groundwork for an automation pipeline covering hourly data scraping, cycling, and model training, either through a segregated instance or a live online model

- Usage of in-line markdown cells in-notebook for readability and consistency

- Even further refinement to program structure

- Function scope and structure & function creation for common operations

- Parsing of raw data into 4 separate l2update segments (4 consecutive 15-minute segments)
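The split into four consecutive 15-minute segments can be sketched with the standard library alone. This is an illustration, not the notebook's actual code: `parse_time` and `split_into_segments` are hypothetical names, and the sketch assumes each update carries an ISO 8601 `time` field with fractional seconds, as in Coinbase l2update messages.

```python
from datetime import datetime, timedelta


def parse_time(ts):
    """Parse a timestamp such as '2018-06-01T12:00:03.120Z'."""
    return datetime.strptime(ts, "%Y-%m-%dT%H:%M:%S.%fZ")


def split_into_segments(updates, start, minutes=15, count=4):
    """Group update dicts into `count` consecutive windows of `minutes` each."""
    width = timedelta(minutes=minutes)
    segments = [[] for _ in range(count)]
    for update in updates:
        # Floor division of two timedeltas gives the window index as an int
        index = (parse_time(update["time"]) - start) // width
        if 0 <= index < count:  # drop anything outside the scraped hour
            segments[index].append(update)
    return segments


# Made-up sample data for illustration only
start = parse_time("2018-06-01T12:00:00.000Z")
updates = [
    {"time": "2018-06-01T12:00:03.120Z", "changes": [["buy", "7500.00", "0.5"]]},
    {"time": "2018-06-01T12:16:40.000Z", "changes": [["sell", "7510.00", "0"]]},
    {"time": "2018-06-01T12:59:59.999Z", "changes": [["buy", "7499.50", "1.2"]]},
]
segments = split_into_segments(updates, start)
print([len(s) for s in segments])  # [1, 1, 0, 1]
```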

9a_model_restructure.ipynb:

- Notebook used for further development of model in different formats, and testing of reduced complexity models

- Keras Sequential() Model

- Keras Functional API

- Raw Tensorflow
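For orientation, a reduced-complexity stacked bidirectional LSTM/GRU model in the Keras Sequential() style might look like the sketch below. It is not the project's actual architecture: the layer sizes, the two-value output (assumed here to stand for a support and a resistance level), and the function name `build_stacked_bidirectional_model` are all assumptions, and it uses the `tensorflow.keras` API of TensorFlow 2.x rather than the older release tied to CUDA 9.0.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_stacked_bidirectional_model(timesteps, features, outputs=2):
    """Stack a bidirectional LSTM on a bidirectional GRU, then regress."""
    model = keras.Sequential([
        keras.Input(shape=(timesteps, features)),
        # return_sequences=True passes the full sequence to the next RNN layer
        layers.Bidirectional(layers.LSTM(32, return_sequences=True)),
        # The last recurrent layer returns only its final state
        layers.Bidirectional(layers.GRU(16)),
        # Assumed regression head: one value per predicted level
        layers.Dense(outputs),
    ])
    model.compile(optimizer="adam", loss="mse")
    return model


if __name__ == "__main__":
    build_stacked_bidirectional_model(timesteps=60, features=8).summary()
```

The same layers can be wired with the Functional API by calling each layer on the output of the previous one, which is useful once the model needs multiple inputs or outputs.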

8_program_structure_improvement.ipynb:

- Previous notebook with proof-of-concept output results

- Several function calls via API and multiple packages required are deprecated

- Use as reference for updated development files/notebooks

6_raw_dataset_update.ipynb:

- Notebook file used to scrape/update raw_data in both MongoDB and csv format (1 hour of websocket data from GDAX)

- L2 Snapshot + L2 Updates only, without Match data (the test data includes Match data, which adds significant I/O overhead)
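To show how an L2 snapshot and the subsequent L2 updates fit together, here is a minimal sketch that rebuilds an order book from them. It assumes the Coinbase message layout (snapshot `bids`/`asks` as `[price, size]` pairs, l2update `changes` as `[side, price, size]` triples, where a size of 0 removes the price level). The helper names and sample values are hypothetical and not taken from the notebooks.

```python
from decimal import Decimal


def book_from_snapshot(snapshot):
    """Build {"buy": {price: size}, "sell": {price: size}} from a snapshot."""
    return {
        "buy": {Decimal(p): Decimal(s) for p, s in snapshot["bids"]},
        "sell": {Decimal(p): Decimal(s) for p, s in snapshot["asks"]},
    }


def apply_l2update(book, update):
    """Apply each [side, price, size] change; size 0 deletes the level."""
    for side, price, size in update["changes"]:
        price, size = Decimal(price), Decimal(size)
        if size == 0:
            book[side].pop(price, None)
        else:
            book[side][price] = size
    return book


# Made-up sample messages for illustration only
snapshot = {
    "type": "snapshot",
    "bids": [["7500.00", "0.5"], ["7499.50", "1.0"]],
    "asks": [["7501.00", "0.3"]],
}
update = {
    "type": "l2update",
    "changes": [["buy", "7500.00", "0"], ["sell", "7501.00", "0.8"],
                ["buy", "7499.00", "2.0"]],
}
book = apply_l2update(book_from_snapshot(snapshot), update)
print(max(book["buy"]), min(book["sell"]))  # best bid and best ask
```

Prices and sizes are kept as `Decimal` so that string values from the feed compare exactly instead of picking up floating-point error.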

- 'gdax-python' and 'gdax-ohlc-import' are repositories imported as Git Submodules:

- After cloning the main project repository, the following command is required to ensure that the submodule repository contents are pulled/present:

git submodule update --init --recursive

- The .gitmodules file holds the submodule parameters

- 'model_saved' folder:

- Contains .json and .h5 files for current and previous Tensorflow/Keras models (trained model and model weight export/import)

- 'documentation' folder:

- 'rds_ml_yu.revised.pptx' is a PowerPoint presentation summarizing the key technical components, scope, and limitations of this project.

- 'design_mockup' folder:

- Contains diagrams, drawings, and notes used in the process of model and project design during prototyping, testing, and expansion.

- 'design_explanation' folder:

- Contains 8 pages of detailed explanations and diagrams covering both project and model structure and design.

- 'previous_revisions' folder:

- Contains previous/outdated versions of readme documentation and powerpoint presentations documenting the nature of this project

- 'saved_charts' folder:

- Output of generate_chart() for candlestick chart with visualized autogenerated support and resistance from autoSR()

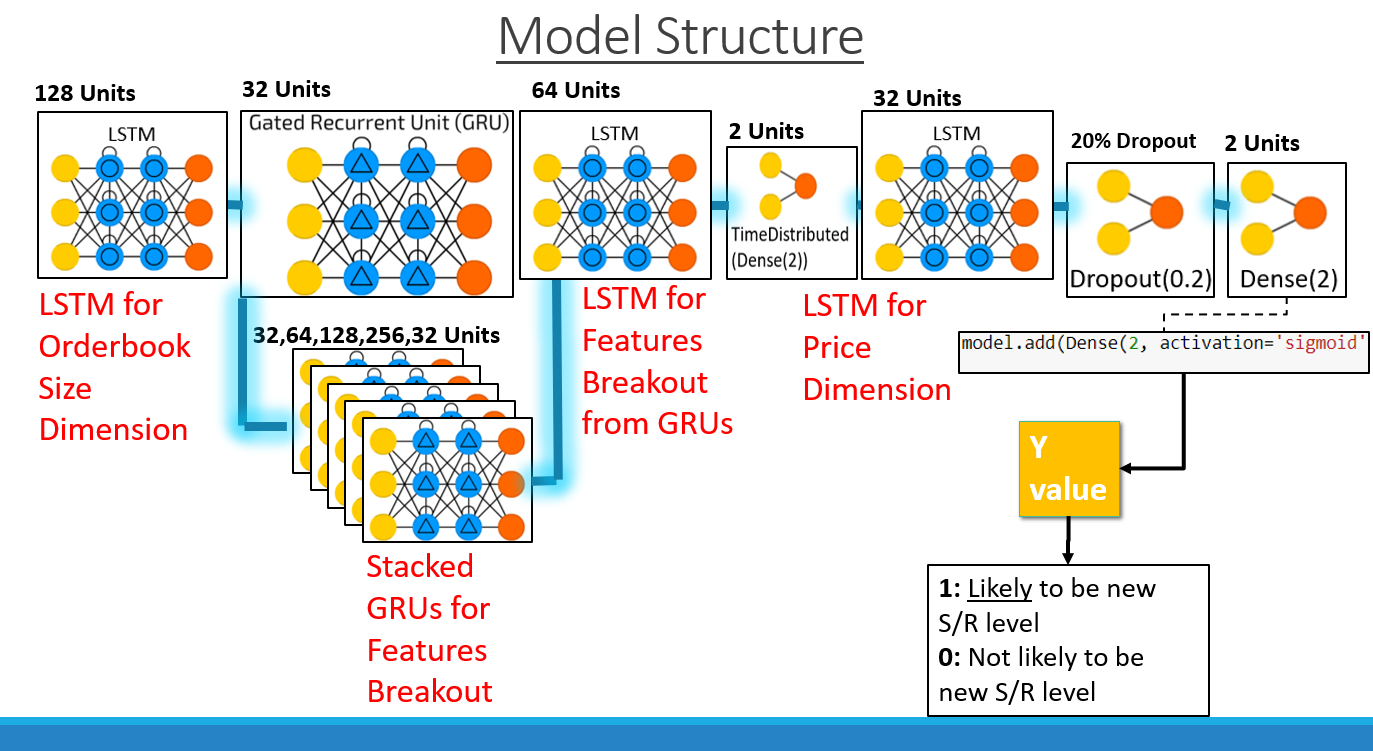

- Screenshot of model layer structure in text format

- Graphviz output of model layer structure

- 'test_data' folder:

- Only has 10 minutes of scraped data for testing, development, and model input prototyping (snapshot + l2 response updates)

- 'raw_data' folder:

- 1 hour of scraped data (snapshot + l2 response updates)

- l2update_15min_1-4: 1 hour of l2 updates split into four 15-minute increments

- mongo_raw.json: 1 hour of scraped data from the gdax-python API websocket in raw mongoDB format

- 'raw_data_10h' folder:

- 10 hours of scraped data:

- l2update_10h, request_log_10h, and snapshot_asks/bids_10h

- 10 hours of scraped data in raw mongoDB export (JSON): mongo_raw_10h.json

- Data in .msg (MessagePack) format is currently being tested as an experimental alternative to .csv for I/O operations
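The raw MongoDB exports listed above (such as mongo_raw_10h.json) can be inspected without a running MongoDB instance. The sketch below is an assumption about the file layout rather than a description of it: it accepts either a single JSON array or one JSON document per line, and the helper names `iter_mongo_export` and `count_by_type` are hypothetical.

```python
import json


def iter_mongo_export(path):
    """Yield documents from a JSON array file or a one-document-per-line file."""
    with open(path, "r", encoding="utf-8") as handle:
        first = handle.read(1)
        handle.seek(0)
        if first == "[":
            # Whole file is one JSON array (loaded into memory at once)
            yield from json.load(handle)
        else:
            # One document per line: stream to keep memory use low
            for line in handle:
                if line.strip():
                    yield json.loads(line)


def count_by_type(documents):
    """Count documents by their "type" field (e.g. snapshot, l2update)."""
    counts = {}
    for doc in documents:
        key = doc.get("type", "unknown")
        counts[key] = counts.get(key, 0) + 1
    return counts
```

For example, `count_by_type(iter_mongo_export("raw_data_10h/mongo_raw_10h.json"))` would give a quick tally of message types, assuming the export sits at that path.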

- 'raw_data_pipeline' folder:

- Contains data in .feather format as part of data pipeline(s) implementation and development

- 'archived_ipynb' folder:

- Contains previous Jupyter Notebook files used in the construction, design, and prototyping of components of this project.

- Jupyter Notebook (.ipynb) files 1-5 & 7

- Each successive notebook was used to construct and test, stage by stage, whether a project of this scope would even be technically possible.

- Successively numbered notebooks generally iterate on and improve the previous notebook files for this project.

- How to Construct Deep Recurrent Neural Networks

- Training and Analysing Deep Recurrent Neural Networks

- Where to Apply Dropout in Recurrent Neural Networks for Handwriting Recognition?

- Dropout improves Recurrent Neural Networks for Handwriting Recognition

- Speech Recognition with Deep Recurrent Neural Networks

- Recurrent Dropout without Memory Loss

- Deep Stacked Bidirectional and Unidirectional LSTM Recurrent Neural Network for Network-wide Traffic Speed Prediction

- gdax-orderbook-ml: BSD-3 Licensed, Copyright (c) 2018 Timothy Yu

- coinbasepro-python: MIT Licensed, Copyright (c) 2017 Daniel Paquin

- autoSR() function adapted from nakulnayyar/SupResGenerator, Copyright (c) 2016 Nakul Nayyar (https://github.com/nakulnayyar/SupResGenerator)