Homework1-MDPs

The lab materials are partially borrowed from UC Berkeley CS294.

Introduction

In this homework, we solve MDPs with finite state and action spaces via value iteration, policy iteration, and tabular Q-learning.
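For orientation, two of these methods are usually written as the updates below. The notation is an assumption of this sketch, not something this README fixes: P(s'|s,a) is the transition probability, R(s,a,s') the reward, γ a discount factor in [0, 1), α a learning rate, and r the reward observed after taking action a in state s and landing in s'. The notebook may use different symbols.

```latex
% Value iteration: one Bellman optimality backup over all states
V_{k+1}(s) = \max_{a} \sum_{s'} P(s' \mid s, a)\,\bigl[ R(s, a, s') + \gamma V_k(s') \bigr]

% Tabular Q-learning: update from a single observed transition (s, a, r, s')
Q(s, a) \leftarrow (1 - \alpha)\, Q(s, a) + \alpha \bigl[ r + \gamma \max_{a'} Q(s', a') \bigr]
```

Policy iteration alternates between evaluating the current policy with the same kind of backup (without the max over actions) and making the policy greedy with respect to the resulting values.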

What is a Markov decision process (MDP)?

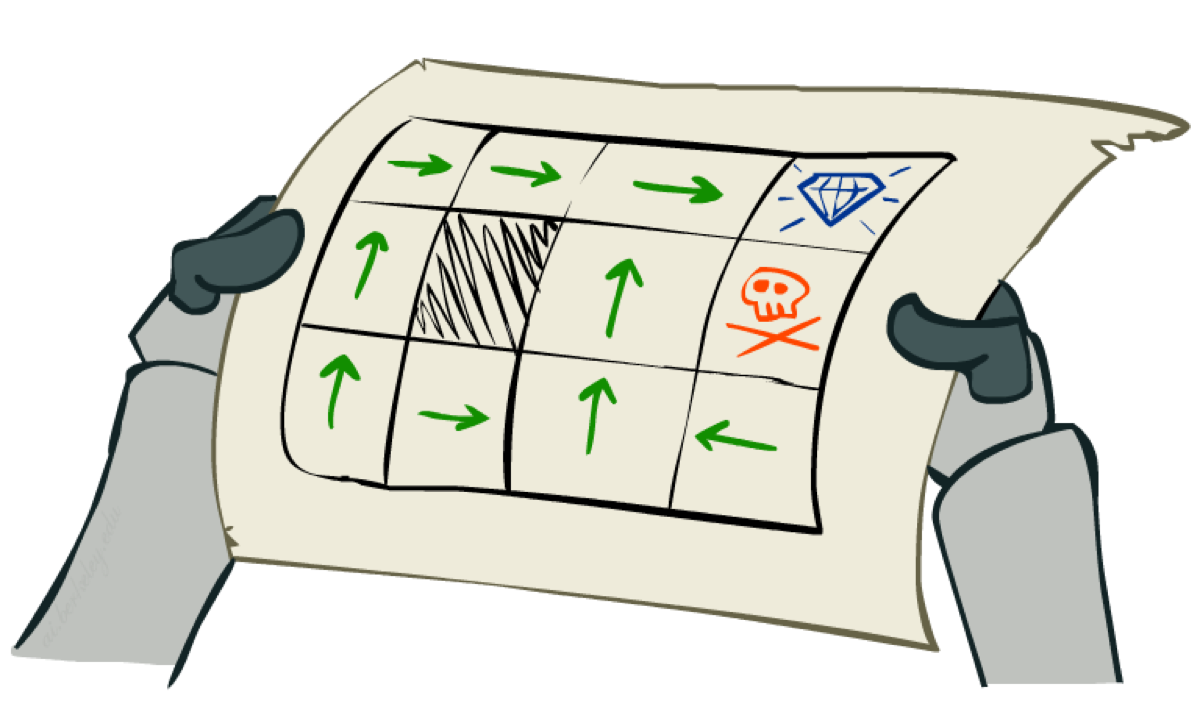

A Markov decision process is a discrete-time stochastic control process. At each time step, the process is in some state s, and the decision maker may choose any action a that is available in state s. The process responds at the next time step by randomly moving into a new state s' and giving the decision maker a corresponding reward R(s,a,s').

Image borrowed from UCB CS188
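The description above can be made concrete with a small sketch. The two-state MDP below is hypothetical (its states, actions, probabilities, and rewards are made up for illustration and are not taken from the homework notebook); it only shows one possible way to store P(s'|s,a) together with R(s,a,s') and to sample a transition.

```python
import random

# Hypothetical two-state MDP, for illustration only.
# transitions[s][a] is a list of (probability, next_state, reward) tuples,
# i.e. P(s' | s, a) stored together with R(s, a, s').
transitions = {
    "cool": {
        "slow": [(1.0, "cool", 1.0)],
        "fast": [(0.5, "cool", 2.0), (0.5, "warm", 2.0)],
    },
    "warm": {
        "slow": [(0.5, "cool", 1.0), (0.5, "warm", 1.0)],
        "fast": [(1.0, "warm", -10.0)],
    },
}


def step(state, action, rng):
    """Sample (next_state, reward) for taking `action` in `state`."""
    u = rng.random()
    cumulative = 0.0
    for prob, next_state, reward in transitions[state][action]:
        cumulative += prob
        if u < cumulative:
            return next_state, reward
    # Guard against floating-point round-off: fall back to the last outcome.
    return next_state, reward


# Roll out a few steps under a uniformly random policy.
rng = random.Random(0)
state = "cool"
total_reward = 0.0
for t in range(5):
    action = rng.choice(sorted(transitions[state]))
    state, reward = step(state, action, rng)
    total_reward += reward
print("final state:", state, "total reward:", total_reward)
```

The next state depends only on the current state and the chosen action, which is the Markov property the name refers to.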

Setup

- Python 3.5.3

- OpenAI gym

- numpy

- matplotlib

- ipython

All the code you need to modify is in Lab2-MDPs.ipynb.

We encourage you to install Anaconda or Miniconda on your laptop to avoid tedious dependency problems.

For lazy people:

conda env create -f environment.yml

source activate cedl

# deactivate when you want to leave the environment

source deactivate cedl
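After activating the environment, a quick check like the one below can confirm that the listed packages are visible to Python. This is only a sketch: the import names in `REQUIRED` are assumed from the package list above, and `missing_packages` is a hypothetical helper, not part of the homework code.

```python
import importlib.util

# Import names assumed for the packages listed in the setup section.
REQUIRED = ["gym", "numpy", "matplotlib", "IPython"]


def missing_packages(names):
    """Return the names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages(REQUIRED)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages were found.")
```

The check only looks up each package without importing it, so it does not verify versions.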

Prerequisites

If you are unfamiliar with NumPy or IPython, you should read the tutorial materials from CS231n.

How to Start

- Start IPython: after you clone this repository and install all the dependencies, start the IPython notebook server from the home directory.

- Open the assignment: open Lab1-MDPs (students).ipynb, which will walk you through completing the assignment.

TODO

- [30%] value iteration

- [30%] policy iteration

- [30%] tabular Q-learning

- [10%] report

- [5%] Bonus: share your code and what you learned on GitHub or your personal blog, such as this

Other

- Deadline: 10/26 23:59, 2017

- Office hours: 2-3 pm in 資電館 711 with Yuan-Hong Liao.

- Contact andrewliao11@gmail.com for bug reports or any questions.

- If you get stuck on the homework, here are some nice materials you can take a look at

😄