NeurIPS 2020: MineRL Competition SQIL Baseline with PFRL

This repository is a SQIL baseline submission example with PFRL, based on the MineRL Rainbow Baseline with PFRL.

For detailed and up-to-date documentation about the competition and the template, see the original template repository.

This repository is a sample "Round 1" submission, i.e., the agents are trained locally.

test.py is the entrypoint script for Round 1.

Please ignore train.py, which will be used in Round 2.

The train/ directory contains the baseline agent's model weight files, trained on MineRLObtainDiamondVectorObf-v0.

List of current baselines

How to Submit

After signing up for the competition, specify your account data in aicrowd.json.

See the official doc for detailed information.

Then you can create a submission by pushing a tag to your repository on https://gitlab.aicrowd.com/. Any pushed tag whose name begins with "submission-" is treated as a submission.

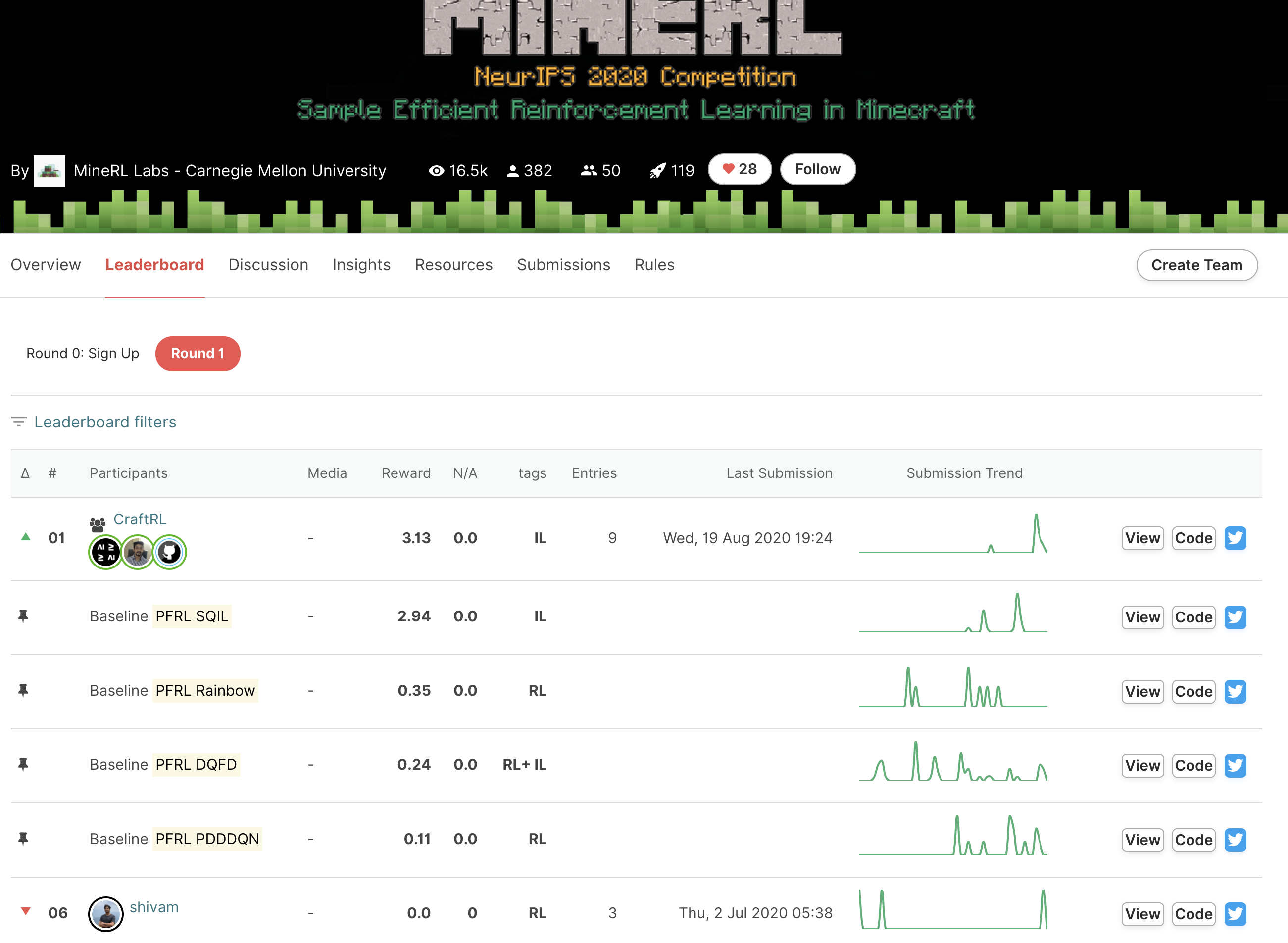

If everything works out correctly, you should be able to see your score on the competition leaderboard.

About the Baseline Algorithm

This baseline consists of three main steps:

- Apply K-means clustering to the action space using the demonstration dataset.

- Calculate cumulative reward boundaries that divide the task into subtasks, so that each subtask contains an equal number of demonstration frames.

- Apply the SQIL algorithm to the discretized action space.
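Step 2 can be illustrated with a short Python sketch. It assumes the cumulative reward at every demonstration frame has been pooled into a single array and that the boundaries are taken at equal-frame quantiles; the helper names reward_boundaries and subtask_index are hypothetical and do not come from mod/sqil.py. How the baseline uses the resulting subtask index afterwards is not shown here.

```python
import numpy as np


def reward_boundaries(cumulative_rewards, n_groups):
    """Return n_groups - 1 thresholds that split frames into equally sized groups.

    cumulative_rewards: 1-D array holding the cumulative reward reached at every
    demonstration frame (all episodes concatenated).
    """
    # Sort all frames by cumulative reward and cut at equal frame counts.
    sorted_rewards = np.sort(np.asarray(cumulative_rewards, dtype=float))
    cut_indices = [len(sorted_rewards) * k // n_groups for k in range(1, n_groups)]
    return sorted_rewards[cut_indices]


def subtask_index(cumulative_reward, boundaries):
    # The subtask is the number of boundaries that are <= the current cumulative reward.
    return int(np.searchsorted(boundaries, cumulative_reward, side="right"))
```

Because cumulative rewards in MineRLObtainDiamond take only a few discrete values, ties mean the groups are in practice only approximately equal in size.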

The K-means implementation used in step 1 is from scikit-learn.
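A minimal sketch of step 1 with scikit-learn's KMeans, assuming the continuous demonstration actions are stacked into a 2-D NumPy array with one row per frame. The helper names are hypothetical: the cluster centers serve as the discrete action set, demonstration actions are mapped to their nearest center, and an index chosen by the agent is mapped back to its center before being sent to the environment.

```python
import numpy as np
from sklearn.cluster import KMeans


def fit_action_clusters(demo_actions, n_clusters, seed=0):
    """Cluster continuous demonstration actions; the centers become the discrete actions."""
    kmeans = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed)
    kmeans.fit(demo_actions)
    return kmeans


def to_discrete(kmeans, action):
    # Map one continuous action to the index of its nearest cluster center.
    return int(kmeans.predict(np.asarray(action, dtype=np.float64).reshape(1, -1))[0])


def to_continuous(kmeans, index):
    # Map a discrete action index back to a continuous action vector.
    return kmeans.cluster_centers_[index]
```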

In this baseline, the agent maintains two sets of action clusters built with different sampling criteria: one is sampled from frames after which the vector observation changes, and the other from the remaining frames.
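The two-cluster variant can be sketched in the same way. This assumes per-frame arrays of the current and next vector observations plus the actions; the function names and cluster-count arguments are hypothetical, and concatenating both sets of centers into one discrete action space is an assumption about how the two clusterings are combined.

```python
import numpy as np
from sklearn.cluster import KMeans


def dual_kmeans_centers(obs, next_obs, actions, n_clusters_changing, n_clusters_other, seed=0):
    """Fit separate K-means models on "vector-changing" and remaining frames.

    obs, next_obs: arrays (T, obs_dim) of the vector observation before/after each frame.
    actions:       array (T, act_dim) of the continuous action taken at each frame.
    Returns the concatenated centers (assumed here to form one discrete action set).
    """
    # A frame counts as "changing" if any element of the next vector observation differs.
    changed = np.any(next_obs != obs, axis=1)
    centers = []
    for group_actions, k in ((actions[changed], n_clusters_changing),
                             (actions[~changed], n_clusters_other)):
        km = KMeans(n_clusters=k, n_init=10, random_state=seed).fit(group_actions)
        centers.append(km.cluster_centers_)
    return np.vstack(centers)


def nearest_center(centers, action):
    # Discretize an action by Euclidean distance to the combined centers.
    return int(np.argmin(np.linalg.norm(centers - action, axis=1)))
```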

SQIL is not included among PFRL's built-in agents; the implementation in this repository is based on PFRL's DQN implementation.
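The core idea of SQIL can be sketched independently of PFRL: demonstration transitions are relabeled with reward 1, the agent's own transitions with reward 0, each minibatch is drawn half from each source, and a temporal-difference target is computed from the relabeled rewards. The buffer layout and helper names below are hypothetical. The original SQIL formulation uses a soft (log-sum-exp) Bellman backup; this sketch uses DQN's hard max instead, and whether the baseline does the same is an assumption.

```python
import numpy as np


def sqil_batch(demo_buffer, agent_buffer, batch_size, rng):
    """Sample a SQIL minibatch: half demonstration (reward 1), half agent (reward 0).

    Each buffer is a list of (obs, action, next_obs, done) tuples; the environment
    reward is ignored and replaced by the SQIL reward appended at the end.
    """
    half = batch_size // 2
    demo_idx = rng.integers(len(demo_buffer), size=half)
    agent_idx = rng.integers(len(agent_buffer), size=batch_size - half)
    batch = [demo_buffer[i] + (1.0,) for i in demo_idx]
    batch += [agent_buffer[i] + (0.0,) for i in agent_idx]
    return batch


def td_targets(batch, next_q_values, gamma):
    """DQN-style target r + gamma * max_a' Q(s', a'), using the relabeled rewards.

    next_q_values: array (batch_size, n_actions) from the target network.
    """
    rewards = np.array([t[-1] for t in batch])
    dones = np.array([t[3] for t in batch], dtype=float)
    return rewards + gamma * (1.0 - dones) * next_q_values.max(axis=1)
```

With a discretized action space (steps 1 and 2), next_q_values simply has one column per cluster center.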

How to Train the Baseline Agent on Your Own

The mod/ directory contains everything you need to train the agent locally:

```sh
pip install numpy scipy scikit-learn pandas tqdm joblib pfrl

# Don't forget to set this!
export MINERL_DATA_ROOT=<directory you want to store demonstration dataset>

python3 mod/sqil.py \
    --gpu 0 --env "MineRLObtainDiamondVectorObf-v0" \
    --outdir result \
    --replay-capacity 300000 --replay-start-size 5000 --target-update-interval 10000 \
    --num-step-return 1 --lr 0.0000625 --adam-eps 0.00015 --frame-stack 4 --frame-skip 4 \
    --gamma 0.99 --batch-accumulator mean --exp-reward-scale 10 --logging-level 20 \
    --steps 4000000 --eval-n-runs 20 --arch dueling --dual-kmeans --kmeans-n-clusters-vc 60 --option-n-groups 10
```

Alternatively, you can call a fixed setting from train.py.

Team

The quick-start kit was authored by Shivam Khandelwal with help from William H. Guss.

The competition is organized by the following team:

- William H. Guss (Carnegie Mellon University)

- Mario Ynocente Castro (Preferred Networks)

- Cayden Codel (Carnegie Mellon University)

- Katja Hofmann (Microsoft Research)

- Brandon Houghton (Carnegie Mellon University)

- Noboru Kuno (Microsoft Research)

- Crissman Loomis (Preferred Networks)

- Keisuke Nakata (Preferred Networks)

- Stephanie Milani (University of Maryland, Baltimore County and Carnegie Mellon University)

- Sharada Mohanty (AIcrowd)

- Diego Perez Liebana (Queen Mary University of London)

- Ruslan Salakhutdinov (Carnegie Mellon University)

- Shinya Shiroshita (Preferred Networks)

- Nicholay Topin (Carnegie Mellon University)

- Avinash Ummadisingu (Preferred Networks)

- Manuela Veloso (Carnegie Mellon University)

- Phillip Wang (Carnegie Mellon University)