This repo is a place where I document my experience as I am learning Kubernetes.

I am using a Mac, so these notes are Mac-specific, although they should be fairly easy to adapt for Windows or Linux.

"Docker Desktop on Mac" includes a single-node Kubernetes cluster that can be used for local experimentation. I believe this is similar to setting up minikube for local development.

kubectl (Kube Control) is a client application for controlling a Kubernetes cluster.

💡 The documentation states that you should use a version of kubectl that is within one minor version of the cluster you are attempting to manage. This requirement often means you need multiple versions of kubectl.
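The "within one minor version" rule can be checked mechanically. The following is a minimal sketch, assuming you already have the client and server version strings (for example from `kubectl version`); `within_one_minor` is a hypothetical helper name and not part of kubectl.

```shell
# Sketch: succeed when two versions share a major version and their
# minor versions differ by at most one.
# within_one_minor is a hypothetical helper, not part of kubectl.
within_one_minor() {
    client=${1#v}                     # strip an optional leading "v"
    server=${2#v}
    client_major=${client%%.*}        # text before the first dot
    server_major=${server%%.*}
    client_rest=${client#*.}          # text after the first dot
    server_rest=${server#*.}
    client_minor=${client_rest%%.*}
    server_minor=${server_rest%%.*}
    [ "$client_major" -eq "$server_major" ] || return 1
    diff=$((client_minor - server_minor))
    [ "${diff#-}" -le 1 ]             # compare the absolute difference
}

# Usage with made-up version strings:
# within_one_minor 1.27.4 1.28.2 && echo "compatible"
```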

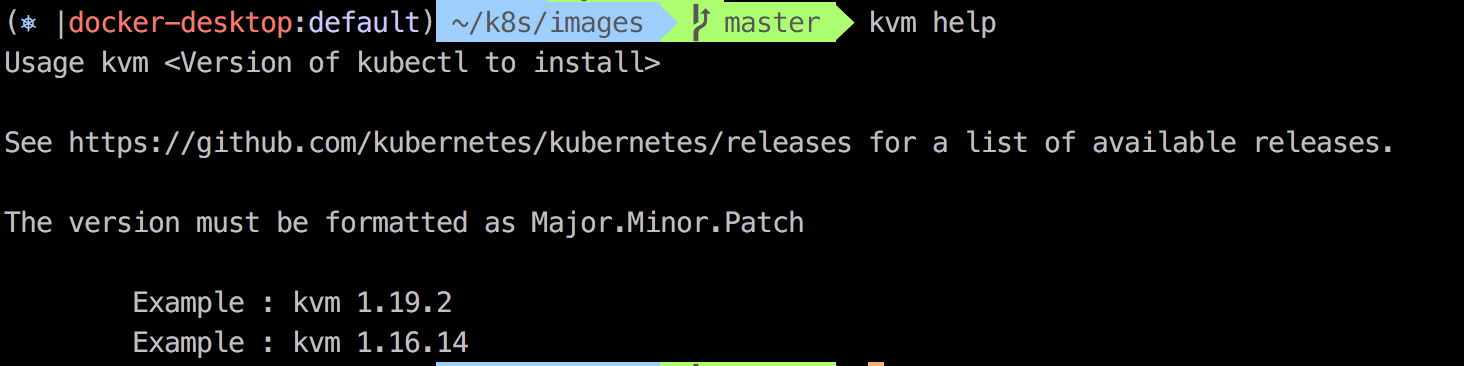

The 'kvm' bash script in this repository can be used to download and switch between different versions of kubectl. The script is Mac-specific but can be easily adapted for Linux or Windows.

To use the kubectl version manager:

- Clone this repo or just copy the file "kvm".
- Change permissions on `kvm` to add execute privileges: `chmod 755 kvm`
- From the root folder of the repo: `ln -s $(pwd)/kvm /usr/local/bin/kvm`
- Assuming `/usr/local/bin` is on your execution path, you can now type `kvm help` to get usage information.
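If `kvm help` is not found after these steps, the usual cause is that /usr/local/bin is not on the path. A small sketch for checking that assumption; `on_path` is a hypothetical helper name.

```shell
# Sketch: succeed if the given directory appears in $PATH.
# on_path is a hypothetical helper name.
on_path() {
    case ":$PATH:" in
        *":$1:"*) return 0 ;;   # directory found between two colons
        *) return 1 ;;
    esac
}

# Usage:
# on_path /usr/local/bin || echo "add /usr/local/bin to your PATH"
```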

❌ The utility requires the version to be formatted as "<Major>.<Minor>.<Patch>" and does not validate whether the version exists. A list of versions can be found here: GitHub release page for Kubernetes.
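Because the script does not validate its input, it can help to check the format before calling it. This is only a sketch of such a check: `valid_version` is a hypothetical helper that is not part of kvm, and it checks the shape of the string, not whether the release exists.

```shell
# Sketch: succeed only for strings shaped like <Major>.<Minor>.<Patch>.
# valid_version is a hypothetical helper, not part of kvm.
valid_version() {
    printf '%s\n' "$1" | grep -Eq '^[0-9]+\.[0-9]+\.[0-9]+$'
}

# Usage:
# valid_version "1.28.3" || echo "expected <Major>.<Minor>.<Patch>"
```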

This is a small utility that displays the current Kubernetes context and namespace (used by kubectl) as part of your command prompt. See the following project for installation instructions.

💡 Once installed, you can use kubeon / kubeoff to toggle the display.

This project lets you change your Kubernetes context via 'kubectx' and your namespace via 'kubens'. See the following project for installation instructions.

You use 'kubectl' to interact with a Kubernetes cluster.

💡 You can use `source <(kubectl completion zsh)` to add command completion for kubectl.
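To keep completion across sessions, the same line can be added to your shell startup file. A minimal sketch, assuming zsh reads ~/.zshrc; `append_once` is a hypothetical helper that avoids adding the line twice.

```shell
# Sketch: append a line to a file only if it is not already present.
# append_once is a hypothetical helper name.
append_once() {
    file=$1
    line=$2
    touch "$file"                                   # create the file if missing
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage (assumes ~/.zshrc is your zsh startup file):
# append_once "$HOME/.zshrc" 'source <(kubectl completion zsh)'
```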

There is an interactive command line tool called k9s that automates many of the tasks you would normally need to do with kubectl. Once you are familiar with kubectl, you should explore the use of this tool to improve your interactions with your Kubernetes cluster.

The tool is well documented and can be installed on a Mac via `brew install derailed/k9s/k9s`.

If your containers need to resolve external services and host names in your private data center, you will have to configure the Docker daemon so that it includes the local DNS server.

This is done by adding dns properties to the daemon.json file, similar to the following:

    {
      "dns": ["<dns IP address>", "8.8.8.8"],
      "dns-search": ["<local domain>"]
    }

💡 The dns-search attribute is used to default the search domain on unqualified hosts.
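After restarting the Docker daemon, one way to confirm the setting is to resolve a name from inside a throwaway container. A rough sketch: `resolve_in_container` is a hypothetical helper, the busybox image is my assumption (any image that ships nslookup would do), and the host name in the usage line is a placeholder.

```shell
# Sketch: run nslookup for a host name inside a temporary container.
# resolve_in_container is a hypothetical helper; busybox is an assumed image.
resolve_in_container() {
    docker run --rm busybox nslookup "$1"
}

# Usage (replace the placeholder with a host from your data center):
# resolve_in_container myhost.example.internal
```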

A Kubernetes cluster that is hosted in the data center will likely need to resolve host names that are local to that environment. This requires changes to the CoreDNS module that Kubernetes uses for host resolution.

You can triage DNS resolution within your cluster by adding the dnsutils pod.

    apiVersion: v1
    kind: Pod
    metadata:
      name: dnsutils
      namespace: default
    spec:
      containers:
      - name: dnsutils
        image: gcr.io/kubernetes-e2e-test-images/dnsutils:1.3
        command:
          - sleep
          - "3600"
        imagePullPolicy: IfNotPresent
      restartPolicy: Always

With the manifest saved as dnsutils.yaml:

    kubectl apply -f dnsutils.yaml      # (1)
    kubectl exec -i -t dnsutils -- sh   # (2)

- (1) Apply the manifest to add the dnsutils pod.
- (2) Execute an interactive shell within the dnsutils pod. Once in the shell, you can use `nslookup` and `ping` to test if resolution is working.
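The same lookup can also be run without opening an interactive shell. A minimal sketch, assuming the dnsutils pod above is running in the default namespace; `cluster_lookup` is a hypothetical helper name.

```shell
# Sketch: run nslookup inside the dnsutils pod for a given name.
# cluster_lookup is a hypothetical helper; it assumes the dnsutils pod
# from this section exists in the current namespace.
cluster_lookup() {
    kubectl exec dnsutils -- nslookup "$1"
}

# Usage:
# cluster_lookup kubernetes.default      # an in-cluster service name
# cluster_lookup config.build.internal   # a host in the data center
```

If the in-cluster name resolves but the data center name does not, the problem is more likely in the forwarding setup than in CoreDNS itself.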

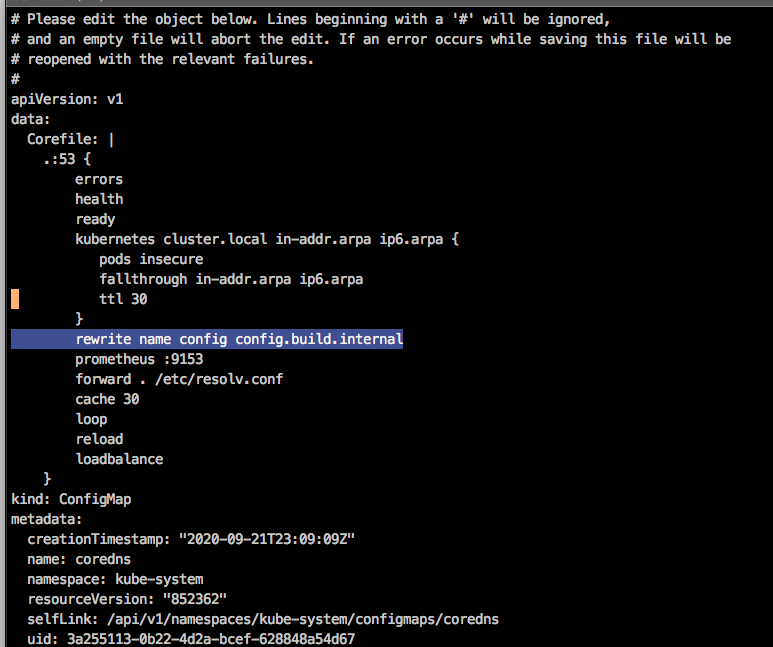

There are cases where a single host needs to be aliased to its fully qualified host name. This can be accomplished for an individual host by using a rewrite rule.

In this example, we want to map config (an alias for our externalized configuration server) to its fully qualified name, config.build.internal. We can do this by editing the ConfigMap for CoreDNS:

- `kubectl edit --namespace=kube-system configmap coredns`
- Add `rewrite name config config.build.internal` to the Corefile in the ConfigMap.
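For orientation, this is roughly where the rewrite line could sit inside the Corefile held by that ConfigMap. It is only a sketch: the plugin list is abbreviated and assumed, so the Corefile in your cluster will look different, and `example_corefile` is a hypothetical function that just prints the text.

```shell
# Sketch only: print an abbreviated Corefile to show one possible
# position for the rewrite line. The surrounding plugins are assumed;
# a real Corefile usually contains more of them.
example_corefile() {
    cat <<'EOF'
.:53 {
    errors
    health
    rewrite name config config.build.internal
    kubernetes cluster.local in-addr.arpa ip6.arpa {
        pods insecure
        fallthrough in-addr.arpa ip6.arpa
    }
    forward . /etc/resolv.conf
    cache 30
}
EOF
}

# Usage:
# example_corefile
```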

A container that connects to services outside the Kubernetes cluster but hosted within an internal data center requires that the cluster can correctly resolve those host names. This typically involves adding a private DNS server that will be used for name resolution.

Additional DNS queries can be forwarded to a private DNS server by adding a coredns-custom ConfigMap. This relies on the cluster's Corefile importing custom ".server" files, which some distributions set up by default.

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coredns-custom
      namespace: kube-system
    data:
      build.server: |                 # (1)
        build.internal {              # (2)
          forward . 10.143.15.140     # (3)
        }

- (1) All custom server files must have a “.server” file extension.
- (2) Any host name with the `build.internal` suffix will have the forwarding rule applied.
- (3) The IP address of the DNS server to forward the request to.

The changes to the DNS Server can be applied using the following two commands:

    kubectl apply -f dns-config.yaml                                 # (1)
    kubectl delete pod --namespace kube-system -l k8s-app=kube-dns   # (2)

- (1) Apply the ConfigMap to the cluster.
- (2) Restart the DNS module by deleting the pods. (Kubernetes will then recreate them.)
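After the restart it is worth confirming that the DNS pods came back and checking their logs for configuration errors. A small sketch using the same label selector as above; `dns_pods` and `dns_logs` are hypothetical helper names.

```shell
# Sketch: inspect the DNS pods after they have been recreated.
# dns_pods and dns_logs are hypothetical helper names; the selector is
# the one used in the delete command above.
dns_pods() {
    kubectl get pods --namespace kube-system -l k8s-app=kube-dns
}

dns_logs() {
    kubectl logs --namespace kube-system -l k8s-app=kube-dns
}

# Usage:
# dns_pods   # the pods should return to the Running state
# dns_logs   # look for errors reported while loading the configuration
```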

In my local Kubernetes environment, I wanted to use a private repository to host my images. This can be easily accomplished by using the docker-compose.yml file located in this repository. This just starts up a container registry (with no security) on port 5000.

You can also find alternatives out there that will host the registry in Kubernetes itself. In an organization, you will likely have something like Artifactory as your private image registry, and a local registry is only necessary when creating a completely localized Kubernetes environment.

Assuming the registry is running and the Maven coordinates of your Spring Boot application are com.build:product-api:1.0.222-SNAPSHOT:

- `mvn spring-boot:build-image` uses Cloud Native Buildpacks to build the OCI-compliant image.
- `docker tag product-api:1.0.222-SNAPSHOT localhost:5000/buildcom/product-api` tags the image.
- `docker push localhost:5000/buildcom/product-api` pushes the image to the registry.
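To confirm the push worked, the registry can be queried over its HTTP API. A minimal sketch, assuming the registry is reachable over plain HTTP on localhost:5000 as described above; `list_repos` and `list_tags` are hypothetical helper names, while the /v2/ paths belong to the registry HTTP API.

```shell
# Sketch: query a local, unsecured registry over HTTP.
# list_repos and list_tags are hypothetical helper names.
list_repos() {
    curl -s "http://$1/v2/_catalog"          # lists repositories
}

list_tags() {
    curl -s "http://$1/v2/$2/tags/list"      # lists tags of one repository
}

# Usage:
# list_repos localhost:5000
# list_tags localhost:5000 buildcom/product-api
```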

To deploy a single image to Kubernetes, the deployment.yaml and service.yaml files can be generated using kubectl. The following creates the deployment from the newly created image and defines a ClusterIP service that exposes it on port 8080 inside the cluster.

    kubectl create deployment product-api --image=localhost:5000/buildcom/product-api --dry-run=client -o=yaml > deployment.yaml
    kubectl create service clusterip product-api --tcp=8080:8080 --dry-run=client -o=yaml > service.yaml
    kubectl apply -f deployment.yaml
    kubectl apply -f service.yaml

Helm is an orchestration tool that allows you to easily group a set of Kubernetes resources into one unit and then deploy, update, and roll back those resources in a reproducible manner.
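As a rough illustration of that deploy, update, and roll back cycle, the commands could be wrapped like this. It is a sketch, assuming Helm is already installed; `deploy_release` and `rollback_release` are hypothetical helper names, and the release name and chart path in the usage lines are made up.

```shell
# Sketch: thin wrappers around the Helm release lifecycle.
# deploy_release and rollback_release are hypothetical helper names.
deploy_release() {
    # Installs the release if it does not exist, otherwise upgrades it.
    helm upgrade --install "$1" "$2"
}

rollback_release() {
    # Rolls the release back to the given revision number.
    helm rollback "$1" "$2"
}

# Usage (release name and chart path are made up):
# deploy_release my-release ./my-chart
# rollback_release my-release 1
```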

The recipe for installing Spring Cloud Data Flow requires the Helm orchestration utility. This can be installed on a client machine via the following command: