This project was developed during the project seminar Text Mining in Practice at HPI. More details about the course can be found here: https://hpi.de/naumann/teaching/teaching/ss-18/text-mining-in-practice-ps-master.html

Our goal was to build deep learning models that predict the comment volume of newspaper articles. We focused on articles from https://www.theguardian.com.

During the project, we worked in different environments on different machines, so we used Docker in our development process.

To use our setup, please go into the docker directory and run docker-compose up.

This setup does not contain any code from this repository; it only provides a convenient environment.

We use SSH to access the VMs. To use your own SSH public key, please add it to docker/setup/ssh_keys.pub.

The keys within this file will be installed within the container.

Our Docker environment provides two machines (developer and production), each with access to its own Postgres database.

Each database can be administered individually. For us, it was convenient to use a small subset of the production database in our developer environment. For more details, please check out our docker-compose.yml.

Feel free not to use Docker; you just need to supply a Postgres database.

To set up the database, please execute our schema file on your Postgres instance.

To import the data (authors, articles, and comments), you can use run.py, but we recommend using a native Postgres import strategy to speed up the process.

Please copy settings.default.yml to settings.yml and adjust it to your environment.

Before you start, make sure to install all requirements with pip3 install -r requirements.txt.

To run our models and other actions, please execute python3 run.py.

Running python3 run.py -h gives an overview of the available options.

The models are created using subclasses of the generic class src.models.model_builder.ModelBuilder.

We created the following base models:

The Headline Dense model uses a trainable embedding layer to convert the headline words into a headline embedding matrix. The network also uses two dense layers (hence the name).
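As a rough illustration of the embedding step, the sketch below maps headline words to vocabulary indices and looks up one vector per word, producing a matrix with one row per word. The vocabulary, the embedding size, the whitespace tokenization, and the name `headline_to_matrix` are all hypothetical; in the actual model the table is a trainable layer whose weights are learned, not fixed random values.

```python
import random

# Hypothetical toy vocabulary; index 0 is reserved for unknown words.
VOCAB = {"<unk>": 0, "brexit": 1, "vote": 2, "delayed": 3, "again": 4}
EMBEDDING_DIM = 4

# In a real model these weights would be trainable parameters; here they are
# randomly initialised with a fixed seed so the sketch is reproducible.
rng = random.Random(42)
embedding_table = [
    [rng.uniform(-0.05, 0.05) for _ in range(EMBEDDING_DIM)] for _ in VOCAB
]

def headline_to_matrix(headline):
    """Map each lower-cased word to its vocabulary index and look up its vector."""
    indices = [VOCAB.get(word, VOCAB["<unk>"]) for word in headline.lower().split()]
    return [embedding_table[i] for i in indices]

matrix = headline_to_matrix("Brexit vote delayed again")
print(len(matrix), len(matrix[0]))  # 4 4
```

The resulting matrix (words x embedding dimensions) is what the following dense layers would consume, typically after flattening or pooling.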

This model uses the same embedding as the Headline Dense model. The embeddings are transformed by convolutional layers with three different kernel sizes, and max pooling is applied afterwards.
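The convolution-plus-max-pooling idea can be sketched in plain Python: each kernel slides over consecutive word vectors, and only its strongest activation is kept (max-over-time pooling), so headlines of any length yield a fixed number of features. The README does not state the kernel sizes, the number of filters, or the activation function, so the sizes 2, 3 and 4, two filters per size, and the ReLU below are assumptions; a real model would use a deep learning framework's convolution layer instead of these hypothetical helpers.

```python
import random

def conv1d(matrix, kernel):
    """1D convolution over word positions (no padding), followed by a ReLU.

    matrix: list of word vectors (seq_len x dim)
    kernel: list of weight vectors (kernel_size x dim)
    Returns one activation per window position.
    """
    k = len(kernel)
    activations = []
    for start in range(len(matrix) - k + 1):
        window = matrix[start:start + k]
        # Dot product between the kernel and the window of word vectors.
        total = sum(
            w * x
            for kernel_row, word_vec in zip(kernel, window)
            for w, x in zip(kernel_row, word_vec)
        )
        activations.append(max(0.0, total))  # ReLU (assumed activation)
    return activations

def conv_max_pool(matrix, kernels_by_size):
    """Apply every kernel and keep only its largest activation (max-over-time pooling)."""
    features = []
    for size in sorted(kernels_by_size):
        for kernel in kernels_by_size[size]:
            activations = conv1d(matrix, kernel)
            # A headline shorter than the kernel yields no window; fall back to 0.
            features.append(max(activations) if activations else 0.0)
    return features

# Toy example: a 6-word headline with 4-dimensional embeddings and
# two filters for each of the hypothetical kernel sizes 2, 3 and 4.
rng = random.Random(0)
DIM = 4
headline = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(6)]
kernels_by_size = {
    size: [[[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(size)] for _ in range(2)]
    for size in (2, 3, 4)
}
features = conv_max_pool(headline, kernels_by_size)
print(len(features))  # 6
```

Using several kernel sizes lets the network react to word pairs, triples, and longer phrases at the same time.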

This model embeds the first words of an article's text in the same way as the Headline Dense model and processes the embedded words with an LSTM layer.
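Because an LSTM is usually trained on batches of equal-length sequences, the article text has to be cut to its first words and padded when it is shorter. The sketch below shows one way to do this; the README does not say how many words are used or how texts are tokenized, so `MAX_WORDS`, the padding and unknown indices, the whitespace tokenizer, and the helper `first_words_to_indices` are hypothetical.

```python
# Hypothetical values: the README does not state how many words are used.
MAX_WORDS = 5
PAD_INDEX = 0
UNK_INDEX = 1

def first_words_to_indices(text, vocab, max_words=MAX_WORDS):
    """Keep only the first `max_words` tokens and pad shorter texts to a fixed length."""
    tokens = text.lower().split()[:max_words]
    indices = [vocab.get(tok, UNK_INDEX) for tok in tokens]
    return indices + [PAD_INDEX] * (max_words - len(indices))

vocab = {"the": 2, "government": 3, "announced": 4, "a": 5, "new": 6, "plan": 7}
print(first_words_to_indices("The government announced a new plan today", vocab))
# [2, 3, 4, 5, 6]
print(first_words_to_indices("A new plan", vocab))
# [5, 6, 7, 0, 0]
```

The index sequences would then go through the embedding lookup before reaching the LSTM layer.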

This model uses the category of an article and two dense layers.

This model uses multiple time features extracted from the article's release timestamp. The time features are:

- minute

- hour

- day of the week

- day of the year

The time features get embedded and processed through two dense layers.
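The four time features can be derived from a timestamp with Python's standard `datetime` module. Each one is a small bounded integer, which makes it usable as an index into an embedding table. The helper name `time_features` and the use of UTC are assumptions; the README does not state which timezone the release timestamps are in.

```python
from datetime import datetime, timezone

def time_features(published):
    """Extract minute, hour, day of the week and day of the year from a timestamp."""
    return {
        "minute": published.minute,                    # 0-59
        "hour": published.hour,                        # 0-23
        "day_of_week": published.weekday(),            # Monday = 0 ... Sunday = 6
        "day_of_year": published.timetuple().tm_yday,  # 1-366
    }

ts = datetime(2018, 6, 15, 14, 30, tzinfo=timezone.utc)
print(time_features(ts))
# {'minute': 30, 'hour': 14, 'day_of_week': 4, 'day_of_year': 166}
```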

This model uses the logarithms of the headline and article word counts. Taking the logarithm creates exponentially sized bins for the articles: the difference between 900 and 1000 words matters much less than the difference between 50 and 150 words.

The logarithms get embedded and processed through two dense layers.
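The binning effect can be checked with a few lines of code. The README does not specify the logarithm base or how the values are discretized, so base 2, the `+ 1` offset for empty texts, and flooring to an integer bin in the hypothetical helper `log_bin` are assumptions.

```python
import math

def log_bin(word_count, base=2.0):
    """Map a word count to an exponentially sized bin: floor(log_base(count + 1)).

    The +1 keeps a count of 0 well defined (it lands in bin 0).
    """
    return math.floor(math.log(word_count + 1, base))

# Counts that differ by 100 words land in different bins when the articles
# are short, but in the same bin when the articles are long.
print([log_bin(n) for n in (50, 150, 900, 1000)])  # [5, 7, 9, 9]
```

The integer bin index can then serve as the input to an embedding layer, in the same way as the time features.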

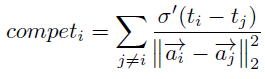

This model uses a self-computed competitive score, which expresses the competition each article faces. It is calculated using the formula:

with

Based on the performance and the correlation of the base models, we combined certain models.

The correlations can be seen here: