This is a simple python program that:

-

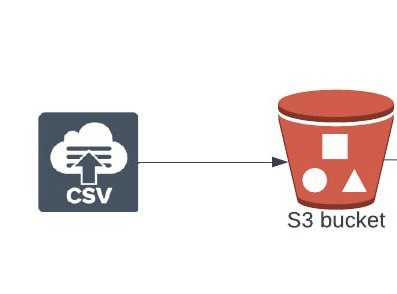

CREATES an AWS S3 bucket programmatically using the [

boto3 library] (https://boto3.readthedocs.io), -

UPLOAD a csv file contained with arbitrary(fake) data generated with the python

faker libraryto the created S3 bucket using theboto3 library, -

DELETE the bucket and its contents with the

boto3 library.
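The fake-data step can be sketched roughly as follows. This is a minimal sketch, assuming the `faker` package is installed; the column names (`name`, `email`, `city`) and the helpers `generate_fake_rows` and `rows_to_csv` are hypothetical and not taken from the project's scripts.

```python
import csv
import io


def generate_fake_rows(n_rows, fake=None):
    """Yield n_rows dicts of fake person data (column names are illustrative)."""
    if fake is None:
        # Imported lazily so rows_to_csv can be used without faker installed.
        from faker import Faker
        fake = Faker()
    for _ in range(n_rows):
        yield {"name": fake.name(), "email": fake.email(), "city": fake.city()}


def rows_to_csv(rows, fieldnames=("name", "email", "city")):
    """Serialise an iterable of dicts into a CSV string with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(fieldnames))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

For example, `csv_text = rows_to_csv(generate_fake_rows(100))` builds the whole file in memory, so it can be uploaded without first writing it to disk.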

Beginner Friendly 😎 😎

The project aims to demonstrate, simply, how to use Python with AWS through its Boto3 library to connect and upload to an S3 bucket.

Programmatically is emphasized because all these actions can also be done through the AWS console, but performing them with Python scripts gives the GREAT ADVANTAGE OF AUTOMATION, and as a Data Engineer, this is a pioneering mindset.

- Cloud services - AWS S3 object storage

- Python faker library

- Languages -- Python


A functioning AWS account with the following information:

- `aws_access_key_id`
- `aws_secret_access_key`

The project contains a `requirement.txt`; install the required libraries by running `pip install -r requirement.txt` in the project terminal.

It is advisable to use an [AWS IAM user](https://docs.aws.amazon.com), following the best practices described in the AWS documentation, as I also did.

- any preferred location (AWS region) permitted by the Boto3 library,
- a bucket name, which must be globally unique,
- the filename, hardcoded as "file100.csv", which you can change in the `create_bucket.py` script.
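A sketch of how the location and bucket name might be passed to S3, assuming a boto3 S3 client is supplied by the caller. The helper names are hypothetical, and the name check is a simplified subset of the S3 naming rules rather than a complete validator. To my knowledge, `us-east-1` is the default location and is rejected if given as a `LocationConstraint`, while every other region must be given explicitly.

```python
import re

# Simplified S3 naming check: 3-63 characters, lowercase letters, digits,
# dots and hyphens, starting and ending with a letter or digit.
# It does not cover every rule (e.g. names formatted like IP addresses).
_BUCKET_NAME_RE = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def is_plausible_bucket_name(name):
    return bool(_BUCKET_NAME_RE.match(name))


def create_bucket_kwargs(bucket_name, region):
    """Build keyword arguments for S3.Client.create_bucket."""
    kwargs = {"Bucket": bucket_name}
    # us-east-1 must be omitted; any other region goes in LocationConstraint.
    if region and region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_bucket(s3_client, bucket_name, region):
    """Create the bucket with a client such as boto3.client("s3")."""
    if not is_plausible_bucket_name(bucket_name):
        raise ValueError(f"invalid S3 bucket name: {bucket_name!r}")
    return s3_client.create_bucket(**create_bucket_kwargs(bucket_name, region))
```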

Unfortunately, we cannot create an AWS account from a Python script 🤣 🤣; visit the [official AWS website](https://aws.amazon.com) instead.

The values from the requirements (access_key, secret_access_key and bucket_name) go into `connection.conf`, which is the only part of the program you are expected to edit to run it successfully. Of course, it's your code now 👍, so change as much as you like.
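One way such a file could be read is with the standard-library `configparser`. This is a sketch under assumptions: the `[aws]` section, the optional `region` key and the helper names are hypothetical, so compare them with the `connection.conf` shipped with the project before reusing anything.

```python
import configparser

# Hypothetical connection.conf layout; the project's real file may differ.
EXAMPLE_CONF = """
[aws]
access_key = YOUR_ACCESS_KEY_ID
secret_access_key = YOUR_SECRET_ACCESS_KEY
bucket_name = your-globally-unique-bucket
region = eu-west-2
"""

REQUIRED_KEYS = ("access_key", "secret_access_key", "bucket_name")


def load_connection_settings(text, section="aws"):
    """Parse the config text and fail early if a required value is empty."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    settings = dict(parser[section])
    missing = [key for key in REQUIRED_KEYS if not settings.get(key)]
    if missing:
        raise KeyError(f"connection.conf is missing: {', '.join(missing)}")
    return settings


def make_session(settings):
    """Create a boto3 Session from the parsed settings."""
    import boto3  # imported lazily so parsing can be tested without boto3
    return boto3.Session(
        aws_access_key_id=settings["access_key"],
        aws_secret_access_key=settings["secret_access_key"],
        region_name=settings.get("region"),
    )
```

With a real file, something like `load_connection_settings(Path("connection.conf").read_text())` (using `pathlib.Path`) would feed the parser, and the resulting session can hand out both `session.client("s3")` and `session.resource("s3")`.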

I used both boto3 interfaces, Client and Resource, to broaden our horizons; either one alone is enough to run the program. I encourage you to look up the differences between them.
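To make the difference concrete, here is a small sketch listing a bucket's object keys both ways. It assumes the caller passes `boto3.client("s3")` and `boto3.resource("s3")` respectively; the function names are hypothetical.

```python
def list_keys_with_client(s3_client, bucket_name):
    """Low-level Client: methods mirror the S3 API and return plain dicts."""
    response = s3_client.list_objects_v2(Bucket=bucket_name)
    # One call returns at most 1,000 keys; a paginator is needed beyond that.
    return [obj["Key"] for obj in response.get("Contents", [])]


def list_keys_with_resource(s3_resource, bucket_name):
    """Higher-level Resource: Python objects with attributes; paging is handled."""
    bucket = s3_resource.Bucket(bucket_name)
    return [obj.key for obj in bucket.objects.all()]
```

The Client returns raw response dictionaries (note the `"Contents"` key is absent for an empty bucket), while the Resource wraps them in objects such as `Bucket` and `ObjectSummary`, which is shorter to write but hides more of the underlying API.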

The program is split into three Python scripts, each built to run independently:

- `create_bucket.py` creates the S3 bucket with the supplied AWS account credentials; run it first if you are starting with a new bucket.
- `upload_csv_to_bucket.py` generates the CSV file and uploads it to the bucket and location specified in the `connection.conf` file.
- `delete_bucket.py` deletes the bucket, only if you so wish; review the code to make sure it really is deleted.
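The upload and delete steps might look roughly like this. It is a sketch, not the project's code: `upload_csv` and `delete_bucket` are hypothetical names, the first expects a boto3 S3 client and the second a boto3 S3 resource. S3 will not delete a bucket that still holds objects, so the bucket is emptied first.

```python
def upload_csv(s3_client, bucket_name, csv_text, key="file100.csv"):
    """Upload CSV text as an S3 object and return its key."""
    s3_client.put_object(
        Bucket=bucket_name,
        Key=key,
        Body=csv_text.encode("utf-8"),  # Body accepts bytes or a file-like object
        ContentType="text/csv",
    )
    return key


def delete_bucket(s3_resource, bucket_name):
    """Empty the bucket, then delete it (S3 rejects deleting non-empty buckets)."""
    bucket = s3_resource.Bucket(bucket_name)
    # Batch-deletes every current object. A versioned bucket would also
    # need bucket.object_versions.all().delete() before this works.
    bucket.objects.all().delete()
    bucket.delete()
```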

- We can do better by involving a scheduler like Airflow to automate running the scripts consecutively.

- A messaging system like Amazon SNS (Simple Notification Service) to update us regarding the state of our program.

- Containerising the program with Docker would turn it into an application that can be shared publicly on Docker Hub.
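Airflow is not shown here; as a much simpler stand-in, the consecutive runs and status updates from the list above can be sketched with `subprocess` and an optional SNS notifier. The helper names are hypothetical, `sns_notifier` assumes an SNS topic you have already created, and `delete_bucket.py` is left out of the default order since deleting is optional.

```python
import subprocess
import sys

# Default order of the project's scripts; delete_bucket.py is optional.
PIPELINE = ("create_bucket.py", "upload_csv_to_bucket.py")


def run_pipeline(scripts=PIPELINE, run=subprocess.run, notify=print):
    """Run each script in order, stopping at the first non-zero exit code."""
    for script in scripts:
        result = run([sys.executable, script])
        if result.returncode != 0:
            notify(f"{script} failed with exit code {result.returncode}")
            return False
    notify("pipeline finished successfully")
    return True


def sns_notifier(topic_arn):
    """Return a notify callable that publishes messages to an SNS topic."""
    import boto3  # imported lazily; only needed when SNS is actually used
    sns = boto3.client("sns")

    def notify(message):
        sns.publish(TopicArn=topic_arn, Subject="S3 pipeline", Message=message)

    return notify
```

Passing `notify=sns_notifier(topic_arn)` instead of the default `print` would send each status message to the topic's subscribers.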

Want to try out the program? Read the short "setup.txt" to get things working properly.

More projects like this are coming, so do come back to check them out.