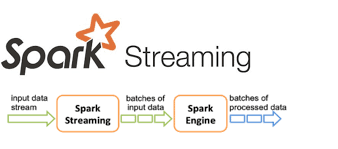

This is a Spark streaming program that streams data from a file source (e.g. CSV, JSON, Parquet, Avro), applies aggregations with Spark SQL, and sends the data and its aggregations to a sink (e.g. console or file).

The aim is to demonstrate simple Spark streaming with aggregation using PySpark.

- Use the Python Faker library to produce fake data files (CSV, JSON), which are written to the source directory at intervals set with Python's built-in `time` module.

- Define the table schema with `StructType`.

- Create a Spark session, a Spark `readStream` object, and an output Spark `writeStream` object.

- Perform aggregation on the data with Spark SQL (i.e. with a SQL query).
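The streaming side of these steps can be sketched roughly as below. This is a minimal sketch, not the contents of `spark_streaming_from_csv.py`: the column names, the `sales` view name, the `data/source` folder, the query and the function names are all assumptions made for illustration.

```python
# All names below (paths, columns, view name, query) are illustrative assumptions.
SOURCE_DIR = "data/source"  # hypothetical folder the generator writes into; must exist

AGG_QUERY = """
    SELECT city,
           COUNT(*)               AS orders,
           ROUND(SUM(amount), 2)  AS total_amount
    FROM sales
    GROUP BY city
"""


def build_schema():
    # Imported inside the function so this module loads even before Spark is set up.
    from pyspark.sql.types import DoubleType, StringType, StructField, StructType

    return StructType([
        StructField("name", StringType(), True),
        StructField("city", StringType(), True),
        StructField("amount", DoubleType(), True),
    ])


def run_stream(timeout_secs=60):
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("file-stream-aggregation").getOrCreate()

    # File sources need an explicit schema when read as a stream.
    stream_df = (
        spark.readStream.format("csv")
        .option("header", "true")
        .schema(build_schema())
        .load(SOURCE_DIR)
    )

    # Register the stream as a view so it can be aggregated with plain SQL.
    stream_df.createOrReplaceTempView("sales")
    aggregated = spark.sql(AGG_QUERY)

    # "complete" mode re-emits the full aggregate table after every micro-batch.
    query = (
        aggregated.writeStream
        .outputMode("complete")
        .format("console")
        .start()
    )

    # With a number, wait at most that many seconds; with no argument, wait
    # until the query is stopped or fails.
    query.awaitTermination(timeout_secs)
    query.stop()
    spark.stop()
```

Calling `run_stream()` starts the query and prints the running totals per city to the console until the timeout passes.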

- Inside the cloned repo, create your virtual env, then run `pip install -r dependencies.txt` in the activated virtual env to set up the required libraries and packages.

- Activate the virtual env.

- It is a good idea to split the terminal and run `python spark_streaming_from_csv.py`, then `python generation_csv_from_faker.py`.

- I would advise against any delay between running the two scripts, because the Spark streaming session is timed. Otherwise, change the numerical argument in `.awaitTermination()`: a number sets a timeout in seconds, while no argument waits for a keyboard or program interruption.

- Any file format supported by Spark can be used with this program, provided the schema is well defined. 👍
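Because the streaming session is timed, the generator's total run time (number of files times the interval) should fit inside that window. Below is a minimal sketch of such a generator. It deliberately uses the standard library's `random` in place of Faker so it runs without extra installs, and the column names, file names and function names are hypothetical rather than taken from `generation_csv_from_faker.py`.

```python
import csv
import random
import time
from pathlib import Path

# Small hand-written pools standing in for Faker-generated values (assumption).
NAMES = ["Ada", "Grace", "Linus", "Guido", "Margaret"]
CITIES = ["Lagos", "Nairobi", "Accra", "Cairo"]


def write_fake_csv(path, rows, rng):
    """Write one CSV file with a header and `rows` fake records."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["name", "city", "amount"])
        for _ in range(rows):
            writer.writerow([
                rng.choice(NAMES),
                rng.choice(CITIES),
                round(rng.uniform(5, 500), 2),
            ])


def generate_files(out_dir, n_files=5, rows_per_file=10, interval_secs=2.0, seed=None):
    """Drop `n_files` CSV files into `out_dir`, pausing between files."""
    out = Path(out_dir)
    staging = out.parent / (out.name + "_tmp")  # sibling folder for unfinished files
    out.mkdir(parents=True, exist_ok=True)
    staging.mkdir(parents=True, exist_ok=True)

    rng = random.Random(seed)
    written = []
    for i in range(n_files):
        tmp_path = staging / f"batch_{i:03d}.csv"
        final_path = out / f"batch_{i:03d}.csv"
        write_fake_csv(tmp_path, rows_per_file, rng)
        # Move the finished file in one step so the stream never reads a partial file.
        tmp_path.rename(final_path)
        written.append(final_path)
        if i < n_files - 1:
            time.sleep(interval_secs)
    return written
```

With the defaults, `generate_files("data/source")` takes about 8 seconds, so a 60-second stream timeout leaves plenty of room as long as the generator is started soon after the streaming script.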

- We can include scheduling and orchestration with Apache Airflow.

If you run into any issues, reach me at this email. More is definitely coming, so do come back and check it out.

Now go get your cup of coffee/tea and enjoy a good code-read and criticism 👍 👍.