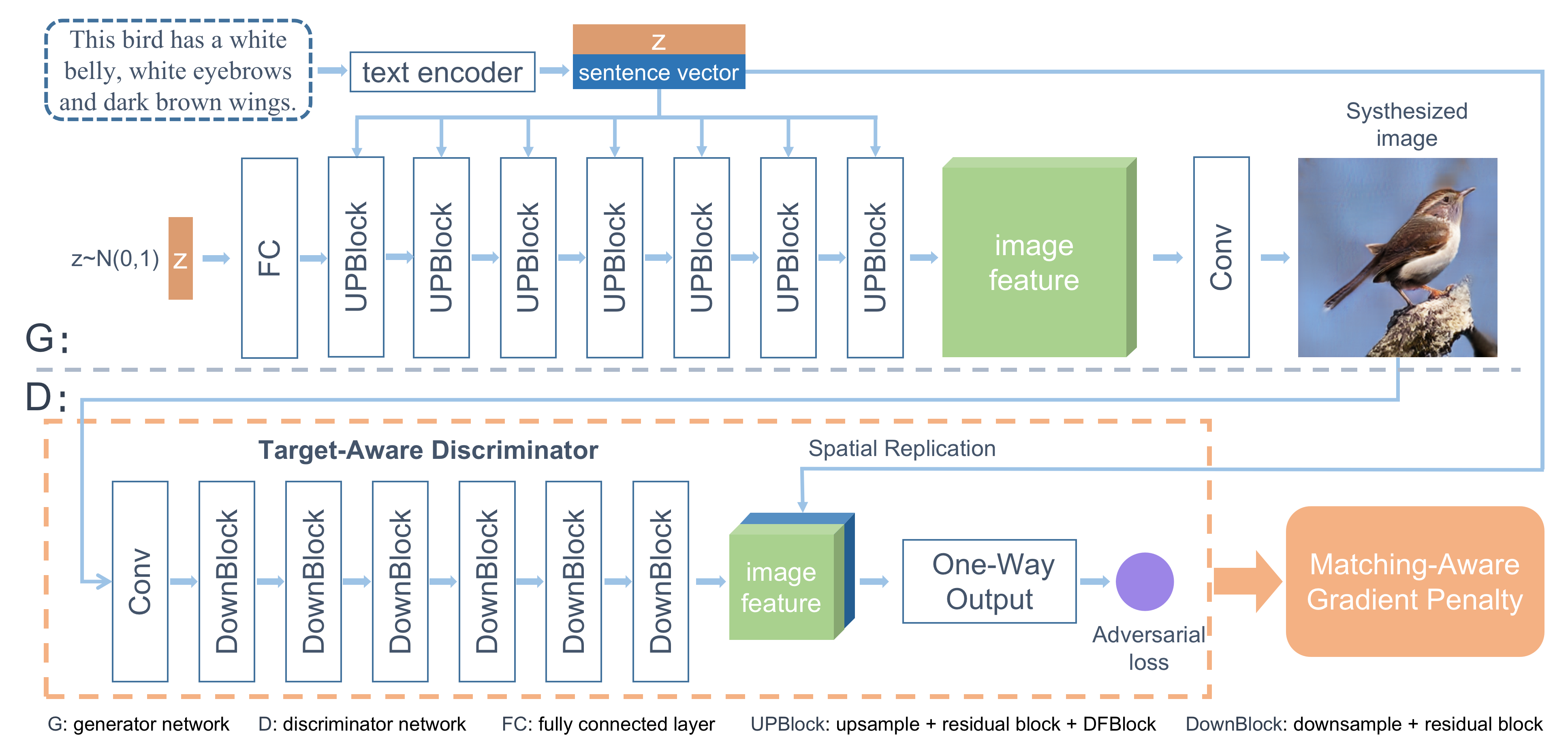

Official PyTorch implementation of our paper DF-GAN: A Simple and Effective Baseline for Text-to-Image Synthesis, by Ming Tao, Hao Tang, Fei Wu, Xiao-Yuan Jing, Bing-Kun Bao, and Changsheng Xu.

[CVPR 2023] Our new simple and effective model, GALIP (paper link, code link), achieves results comparable to large pretrained diffusion models. GALIP is also training-efficient: it requires only 3% of the training data and 6% of the learnable parameters. It achieves about 120× faster synthesis and can run inference on a CPU.

GALIP significantly lowers the hardware requirements for training and inference. We hope that more users will discover how interesting AIGC (AI-generated content) can be.

Requirements:

- Python 3.8
- PyTorch 1.9
- At least one NVIDIA GPU with 12 GB of memory

Clone this repo and install the dependencies:

```
git clone https://github.com/tobran/DF-GAN
cd DF-GAN
pip install -r requirements.txt
cd code/
```

- Download the preprocessed metadata for birds and coco and extract them to `data/`.
- Download the birds image data and extract it to `data/birds/`.
- Download the COCO 2014 dataset and extract the images to `data/coco/images/`.

To train DF-GAN:

```
cd DF-GAN/code/
```

- For the bird dataset:
  ```
  bash scripts/train.sh ./cfg/bird.yml
  ```
- For the COCO dataset:
  ```
  bash scripts/train.sh ./cfg/coco.yml
  ```

If training is interrupted unexpectedly, set `resume_epoch` and `resume_model_path` in `train.sh` to resume it.

Our code supports automatic FID evaluation during training; the results are stored as TensorBoard files under `./logs`. To change how often evaluation runs, set `test_interval` in the YAML config file.

To view the results in TensorBoard:

- For the bird dataset:
  ```
  tensorboard --logdir=./code/logs/bird/train --port 8166
  ```
- For the COCO dataset:
  ```
  tensorboard --logdir=./code/logs/coco/train --port 8177
  ```
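As a rough illustration of how `test_interval` spaces out the logged FID values, the sketch below lists the epochs at which evaluation would run. It assumes evaluation is triggered whenever the epoch number is a multiple of `test_interval`; the helper `fid_eval_epochs` and its `max_epoch` and `start_epoch` parameters are hypothetical and not part of the repo.

```python
from typing import List


def fid_eval_epochs(max_epoch: int, test_interval: int, start_epoch: int = 1) -> List[int]:
    """Return the epochs in [start_epoch, max_epoch] at which FID would be computed.

    Hypothetical helper: assumes evaluation runs when epoch % test_interval == 0.
    """
    if test_interval <= 0:
        raise ValueError("test_interval must be a positive integer")
    return [epoch for epoch in range(start_epoch, max_epoch + 1) if epoch % test_interval == 0]
```

For example, `fid_eval_epochs(50, 10)` returns `[10, 20, 30, 40, 50]`. Passing the epoch you resume from as `start_epoch` shows that, under this assumption, the evaluation schedule continues unchanged after resuming.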

- DF-GAN for bird: download the pretrained model and save it to `./code/saved_models/bird/`.
- DF-GAN for coco: download the pretrained model and save it to `./code/saved_models/coco/`.

To evaluate a model, we synthesize about 30,000 images from the test descriptions and compute the FID between the synthesized images and the test images of each dataset.
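For reference, FID fits a Gaussian to the Inception-V3 features of each image set and reports the Fréchet distance between the two Gaussians: the squared distance between the means plus `Tr(Σ_r + Σ_g - 2(Σ_r Σ_g)^(1/2))`. Below is a minimal NumPy sketch of that formula, assuming you already have `(N, D)` feature arrays for real and synthesized images. The function names are hypothetical, and this is not the repo's `calc_FID.sh` pipeline, which also extracts the features.

```python
import numpy as np


def _sqrtm_psd(mat: np.ndarray) -> np.ndarray:
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    eigvals, eigvecs = np.linalg.eigh(mat)
    eigvals = np.clip(eigvals, 0.0, None)  # remove tiny negative values caused by round-off
    return (eigvecs * np.sqrt(eigvals)) @ eigvecs.T


def frechet_distance(mu1, sigma1, mu2, sigma2) -> float:
    """||mu1 - mu2||^2 + Tr(sigma1 + sigma2 - 2 (sigma1 sigma2)^(1/2)).

    Tr((sigma1 sigma2)^(1/2)) is computed as Tr((s1 sigma2 s1)^(1/2)) with
    s1 = sigma1^(1/2); both matrices have the same eigenvalues, but the second
    one is symmetric, so its eigenvalues are real.
    """
    mu1, mu2 = np.asarray(mu1, dtype=float), np.asarray(mu2, dtype=float)
    sigma1, sigma2 = np.asarray(sigma1, dtype=float), np.asarray(sigma2, dtype=float)
    diff = mu1 - mu2
    s1_half = _sqrtm_psd(sigma1)
    covmean_trace = np.trace(_sqrtm_psd(s1_half @ sigma2 @ s1_half))
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * covmean_trace)


def fid_from_features(feats_real: np.ndarray, feats_fake: np.ndarray) -> float:
    """FID between two (N, D) feature arrays (e.g. Inception pool features)."""
    mu_r, mu_f = feats_real.mean(axis=0), feats_fake.mean(axis=0)
    # atleast_2d keeps the covariance a matrix even when D == 1
    sig_r = np.atleast_2d(np.cov(feats_real, rowvar=False))
    sig_f = np.atleast_2d(np.cov(feats_fake, rowvar=False))
    return frechet_distance(mu_r, sig_r, mu_f, sig_f)
```

Using the symmetric form keeps the eigendecomposition real; `scipy.linalg.sqrtm` applied to `Σ_r Σ_g` directly is a common alternative. Lower FID means the two feature distributions are closer.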

```
cd DF-GAN/code/
```

- For the bird dataset:
  ```
  bash scripts/calc_FID.sh ./cfg/bird.yml
  ```
- For the COCO dataset:
  ```
  bash scripts/calc_FID.sh ./cfg/coco.yml
  ```

Notes:

- We compute the Inception Score (IS) for models trained on birds using StackGAN-inception-model.
- Our evaluation code does not save the synthesized images (about 30,000 of them). To save them, set `save_image: True` in the YAML file.
- Because we find that IS can be heavily overfitted when Inception-V3 is used jointly during training, we do not recommend IS as a metric for text-to-image synthesis.
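The Inception Score is `exp(E_x[KL(p(y|x) || p(y))])`, where `p(y|x)` is the classifier's softmax output for a synthesized image and `p(y)` is that output averaged over all images. The NumPy sketch below computes it from a precomputed `(N, C)` probability array. It is a hypothetical helper: it does not load the StackGAN inception model, and it omits the split-and-average step that common IS implementations report.

```python
import numpy as np


def inception_score(probs: np.ndarray, eps: float = 1e-12) -> float:
    """IS from an (N, C) array whose row i is the softmax p(y | x_i)."""
    probs = np.asarray(probs, dtype=float)
    p_y = probs.mean(axis=0, keepdims=True)  # marginal class distribution p(y)
    # KL(p(y|x_i) || p(y)) per image; eps guards against log(0)
    kl = (probs * (np.log(probs + eps) - np.log(p_y + eps))).sum(axis=1)
    return float(np.exp(kl.mean()))
```

Uniform predictions give the minimum score of 1, while confident predictions spread evenly over `C` classes give a score of about `C`. Because the score depends only on one classifier's outputs, a generator trained jointly with that classifier can inflate it, which is the overfitting concern noted above.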

The released model achieves better performance than the CVPR paper version.

| Model | CUB-FID↓ | COCO-FID↓ | NOP↓ (number of parameters) |
|---|---|---|---|
| DF-GAN (paper) | 14.81 | 19.32 | 19M |
| DF-GAN (pretrained model) | 12.10 | 15.41 | 18M |

To synthesize images from the example captions:

```
cd DF-GAN/code/
```

- For the bird dataset:
  ```
  bash scripts/sample.sh ./cfg/bird.yml
  ```
- For the COCO dataset:
  ```
  bash scripts/sample.sh ./cfg/coco.yml
  ```

To synthesize images from your own text descriptions, replace the contents of `./code/example_captions/dataset_name.txt` with your descriptions, then run:

- For the bird dataset:
  ```
  bash scripts/sample.sh ./cfg/bird.yml
  ```
- For the COCO dataset:
  ```
  bash scripts/sample.sh ./cfg/coco.yml
  ```

The synthesized images are saved to `./code/samples`.
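If you generate captions from a script, a small helper like the one below can write them to the captions file. It assumes the file holds one plain-text description per line and that `dataset_name` is the config name (`bird` or `coco`); `write_captions` is a hypothetical helper, not part of the repo.

```python
from pathlib import Path
from typing import Iterable, Union


def write_captions(path: Union[str, Path], captions: Iterable[str]) -> int:
    """Write captions to `path`, one per line, and return how many were written.

    Hypothetical helper: assumes the sampling script reads one plain-text
    description per line from ./code/example_captions/<dataset_name>.txt.
    """
    # Collapse newlines and repeated spaces so each caption stays on one line.
    cleaned = [" ".join(caption.split()) for caption in captions]
    # Drop captions that were empty or whitespace-only.
    cleaned = [caption for caption in cleaned if caption]
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)
    # Overwrites the file, matching "replace your text descriptions".
    path.write_text("".join(caption + "\n" for caption in cleaned), encoding="utf-8")
    return len(cleaned)
```

For example, `write_captions("./code/example_captions/bird.txt", ["a small bird with a red head", "this bird has blue wings"])` replaces the bird captions with two new descriptions before you run `sample.sh`.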

If you find DF-GAN useful in your research, please consider citing our paper:

```
@inproceedings{tao2022df,
  title={DF-GAN: A Simple and Effective Baseline for Text-to-Image Synthesis},
  author={Tao, Ming and Tang, Hao and Wu, Fei and Jing, Xiao-Yuan and Bao, Bing-Kun and Xu, Changsheng},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={16515--16525},
  year={2022}
}
```

The code is released for academic research use only. For commercial use, please contact Ming Tao.
