DCSR: Dual Camera Super-Resolution

Dual-Camera Super-Resolution with Aligned Attention Modules

ICCV 2021 (Oral Presentation)

paper | project website | dataset | demo video | results on CUFED5

Introduction

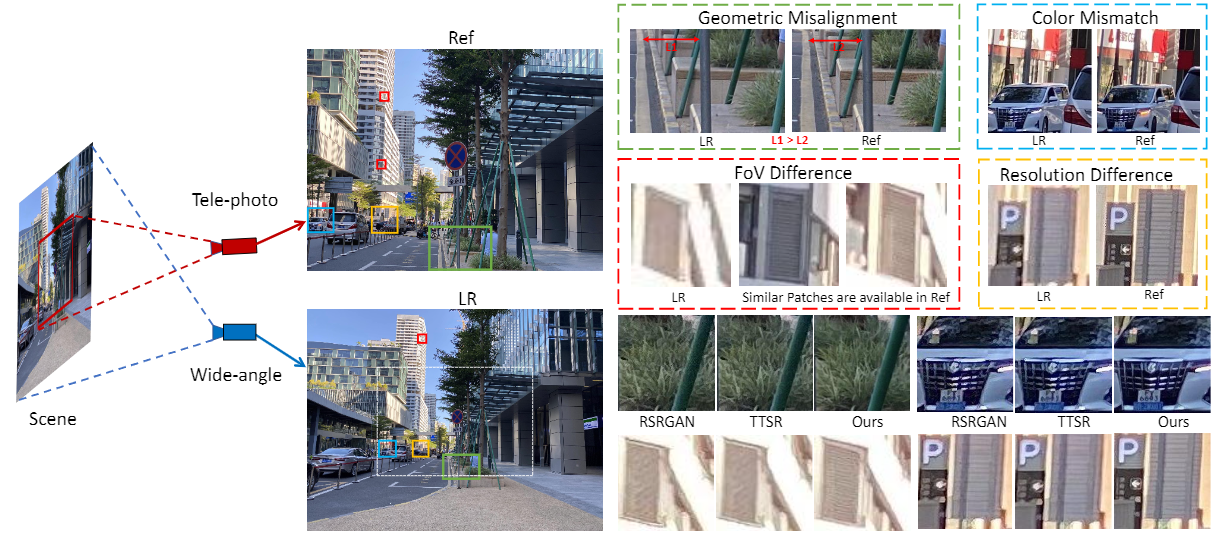

We present a novel approach to reference-based super-resolution (RefSR) with a focus on real-world dual-camera super-resolution (DCSR).
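Reference-based SR methods generally transfer texture from a high-resolution reference (in DCSR, the telephoto image) to the low-resolution input by matching features between the two. The sketch below is a rough illustration of generic hard-attention matching with cosine similarity, similar in spirit to TTSR. It is not the aligned attention module proposed in this work. The plain Python lists stand in for the CNN patch features a real model would use, and `match_reference` is a hypothetical name.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def match_reference(lr_feats, ref_feats):
    """Hard attention: for each LR feature, find the most similar reference feature.

    Returns (indices, confidences, transferred):
      indices     - index of the best-matching reference feature per LR feature
      confidences - the matching cosine similarity (usable as a soft weight)
      transferred - the matched reference features themselves
    """
    indices, confidences, transferred = [], [], []
    for q in lr_feats:
        scores = [cosine(q, r) for r in ref_feats]
        best = max(range(len(ref_feats)), key=lambda i: scores[i])
        indices.append(best)
        confidences.append(scores[best])
        transferred.append(ref_feats[best])
    return indices, confidences, transferred


# Toy 2-D "features": each LR vector picks the reference vector pointing the same way.
lr = [[1.0, 0.0], [0.0, 1.0]]
ref = [[0.0, 2.0], [3.0, 0.1], [1.0, 1.0]]
indices, confidences, _ = match_reference(lr, ref)
print(indices)  # [1, 0]
```

Cosine similarity ignores feature magnitude, so a match reflects the pattern rather than the brightness; the returned confidence lets a model down-weight poor matches instead of trusting every transfer equally.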

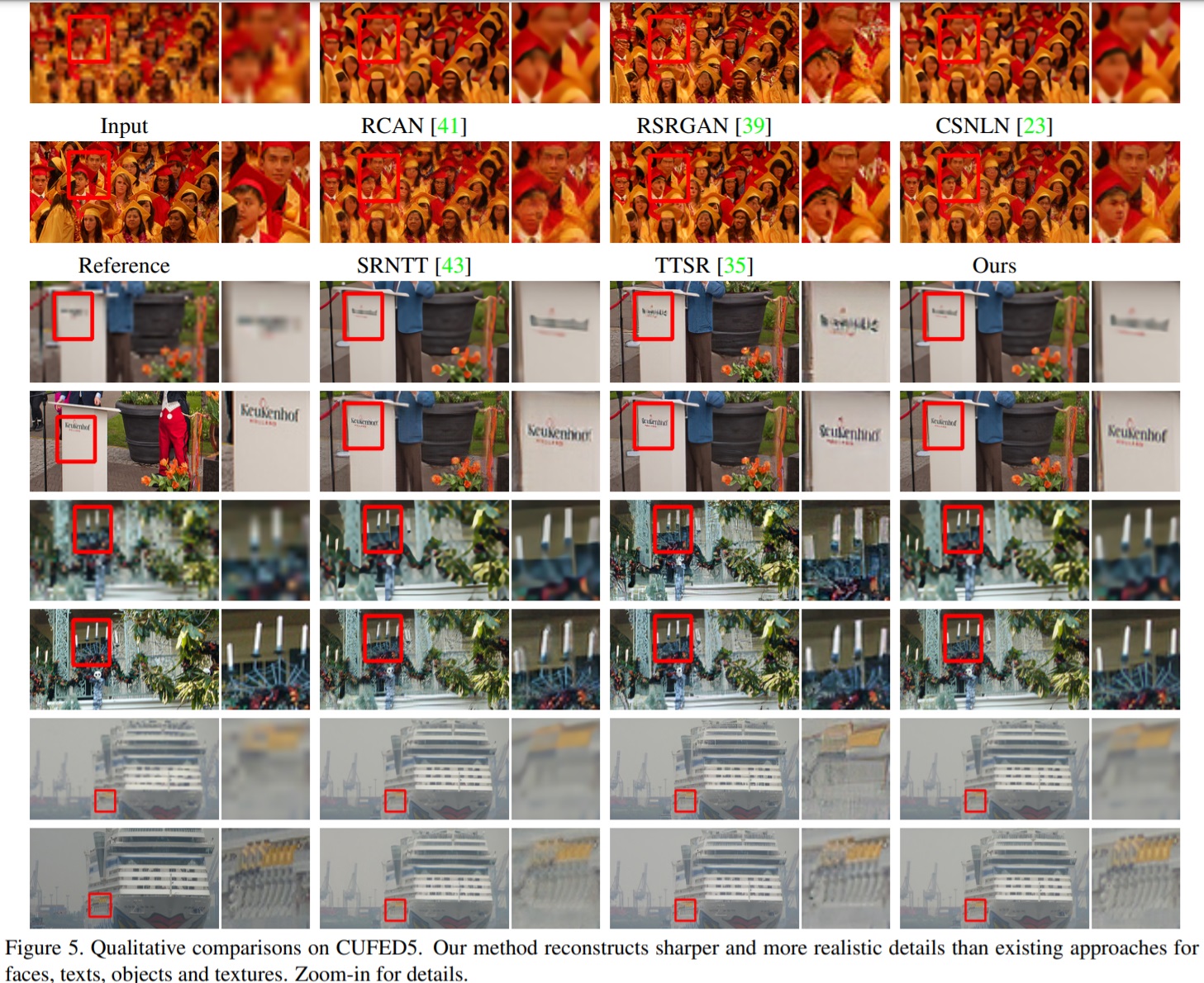

Results

4X SR results on the CUFED5 test set can be found at this link.

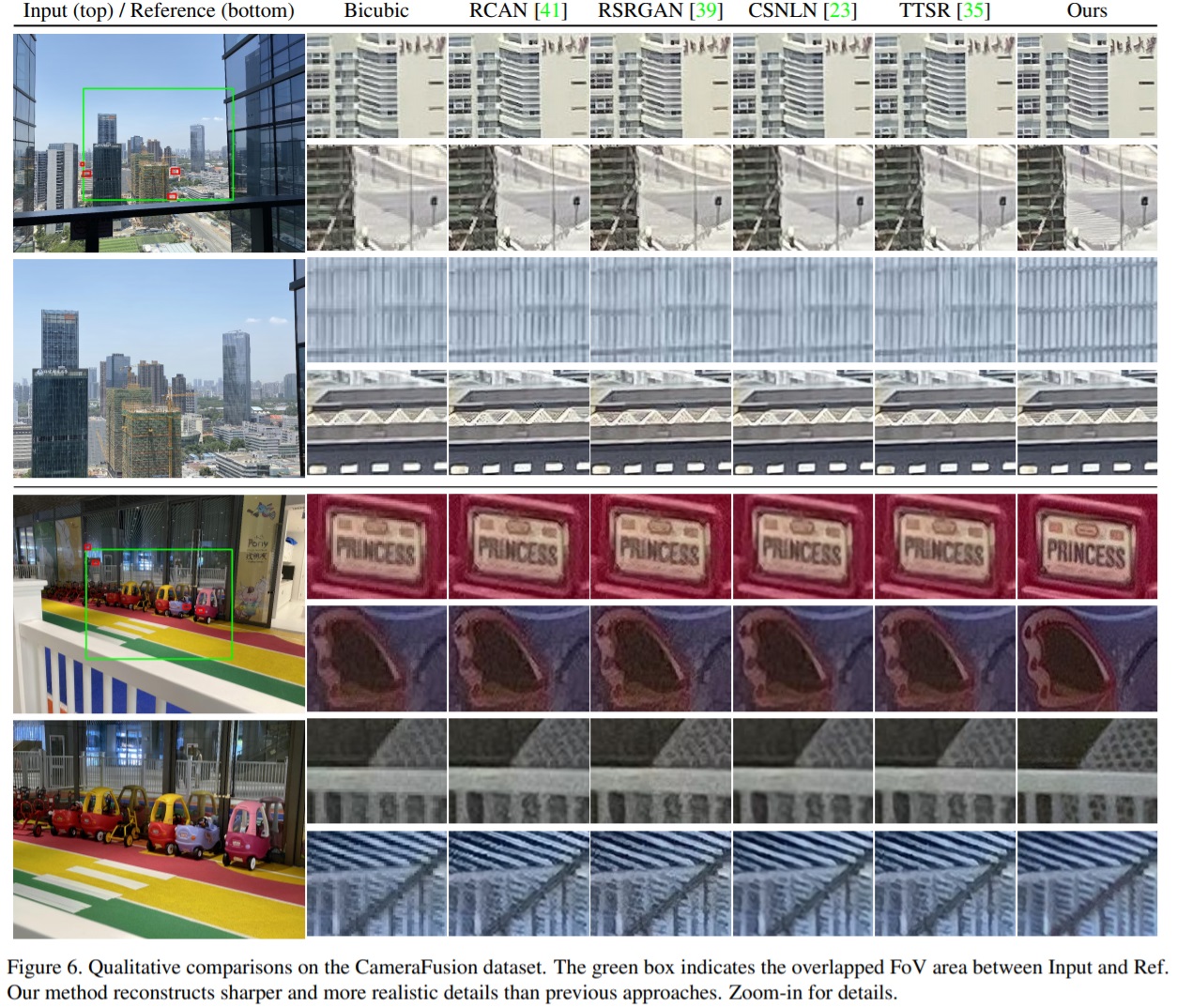

More 2X SR results on the CameraFusion dataset can be found on our project website.

Setup

Installation

git clone https://github.com/Tengfei-Wang/DualCameraSR.git

cd DualCameraSR

Environment

The environment can be set up with Anaconda:

conda create -n DCSR python=3.7

conda activate DCSR

pip install -r requirements.txt

Dataset

Download our CameraFusion dataset from this link. The dataset currently consists of 143 pairs of telephoto and wide-angle images in 4K resolution, captured with smartphone dual cameras.
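Because the telephoto camera has a longer focal length, each telephoto image covers roughly the central part of the wide-angle field of view. The hypothetical helper below estimates that region under simplifying assumptions of our own: both cameras share an optical center, and the focal-length ratio `zoom` defaults to 2, chosen to match the 2X SR setting. Real dual cameras are physically offset, so the box is only an approximation.

```python
def telephoto_overlap_box(width, height, zoom=2.0):
    """Approximate region of a wide-angle image covered by the telephoto camera.

    Assumes (hypothetically) that both cameras share one optical center and
    that the telephoto focal length is `zoom` times the wide-angle one.
    Returns (left, top, right, bottom) in wide-angle pixel coordinates.
    """
    if zoom < 1:
        raise ValueError("zoom must be >= 1")
    crop_w, crop_h = width / zoom, height / zoom
    left = (width - crop_w) / 2
    top = (height - crop_h) / 2
    return (round(left), round(top), round(left + crop_w), round(top + crop_h))


# 4000x3000 is a hypothetical wide-angle image size, not taken from the dataset.
print(telephoto_overlap_box(4000, 3000))  # (1000, 750, 3000, 2250)
```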

mkdir data

cd ./data

unzip CameraFusion.zip  # assumes the downloaded archive has been moved into ./data

Quick Start

The pretrained models are provided in ./experiments/pretrain. For a quick test, run the scripts:

# For 4K test (with ground-truth high-resolution images):

sh test.sh

# For 8K test (without SRA):

sh test_8k.sh

# For 8K test (with SRA):

sh test_8k_SRA.sh

Training

To train the DCSR model on CameraFusion, run:

sh train.sh

The trained model should perform well on the 4K test, but its performance may degrade on the 8K test.

After regular training, we can use Self-supervised Real-image Adaptation (SRA) to fine-tune the trained model for real-world 8K images:

sh train_SRA.sh
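SRA itself is described in the paper. For orientation only, a common way to adapt to real images that have no ground truth is to downsample the available inputs so that the original image can act as the target. The sketch below assumes exactly that setup, with grayscale images as lists of rows, 2x box downsampling, and hypothetical function names; it is not the repository's SRA implementation.

```python
def downsample2x(img):
    """2x box downsampling of a 2-D grayscale image given as a list of rows.

    Each output pixel is the mean of a 2x2 block; an odd trailing row or
    column is dropped.
    """
    if not img or not img[0]:
        return []
    h, w = len(img) // 2 * 2, len(img[0]) // 2 * 2
    return [
        [(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4
         for x in range(0, w, 2)]
        for y in range(0, h, 2)
    ]


def make_self_supervised_pair(wide, tele):
    """Build a hypothetical (lr_input, reference, target) training triple.

    Assumption: the downsampled wide-angle image is the LR input, the
    downsampled telephoto image is the reference, and the original
    wide-angle image is the target.
    """
    return downsample2x(wide), downsample2x(tele), wide


wide = [[0, 2, 4, 6], [2, 4, 6, 8]]
tele = [[1, 1], [1, 1]]
lr_input, reference, target = make_self_supervised_pair(wide, tele)
print(lr_input)  # [[2.0, 6.0]]
```

Downsampling both images by the same factor keeps their relative scale unchanged, so the model still sees a 2X input/reference relationship during adaptation.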

Citation

If you find this work useful for your research, please cite:

@InProceedings{wang2021DCSR,
  author = {Wang, Tengfei and Xie, Jiaxin and Sun, Wenxiu and Yan, Qiong and Chen, Qifeng},
  title = {Dual-Camera Super-Resolution with Aligned Attention Modules},
  booktitle = {International Conference on Computer Vision (ICCV)},
  year = {2021}
}

Acknowledgement

We thank the authors of EDSR, CSNLN, TTSR and style-swap for sharing their code.