【CVPR'24】OST: Refining Text Knowledge with Optimal Spatio-Temporal Descriptor for General Video Recognition

Tongjia Chen¹, Hongshan Yu¹, Zhengeng Yang², Zechuan Li¹, Wei Sun¹, Chen Chen³

In this work, we introduce OST, a novel pipeline for general video recognition. We prompt an LLM to augment category names into Spatio-Temporal Descriptors and refine the semantic knowledge via an Optimal Descriptor Solver.

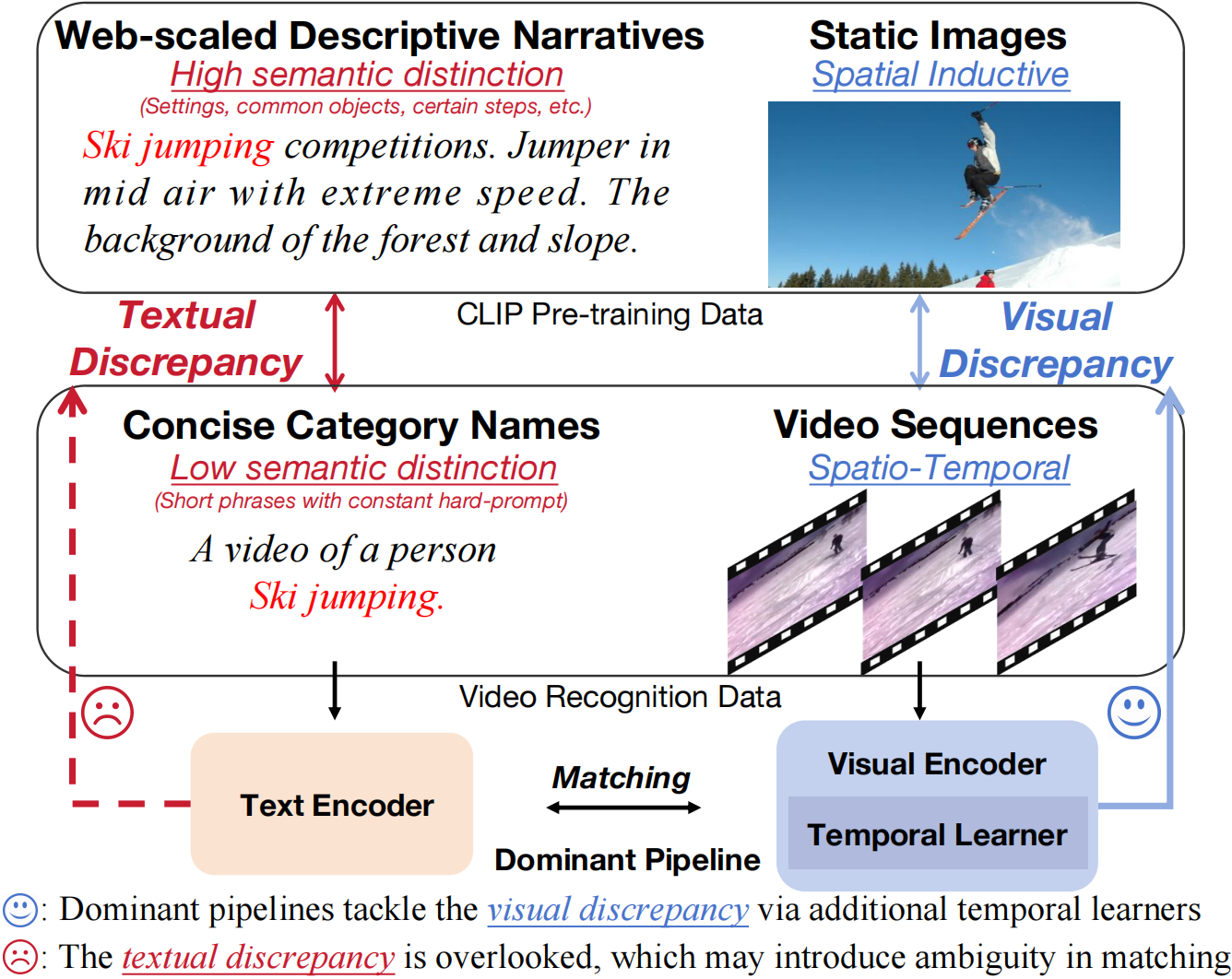

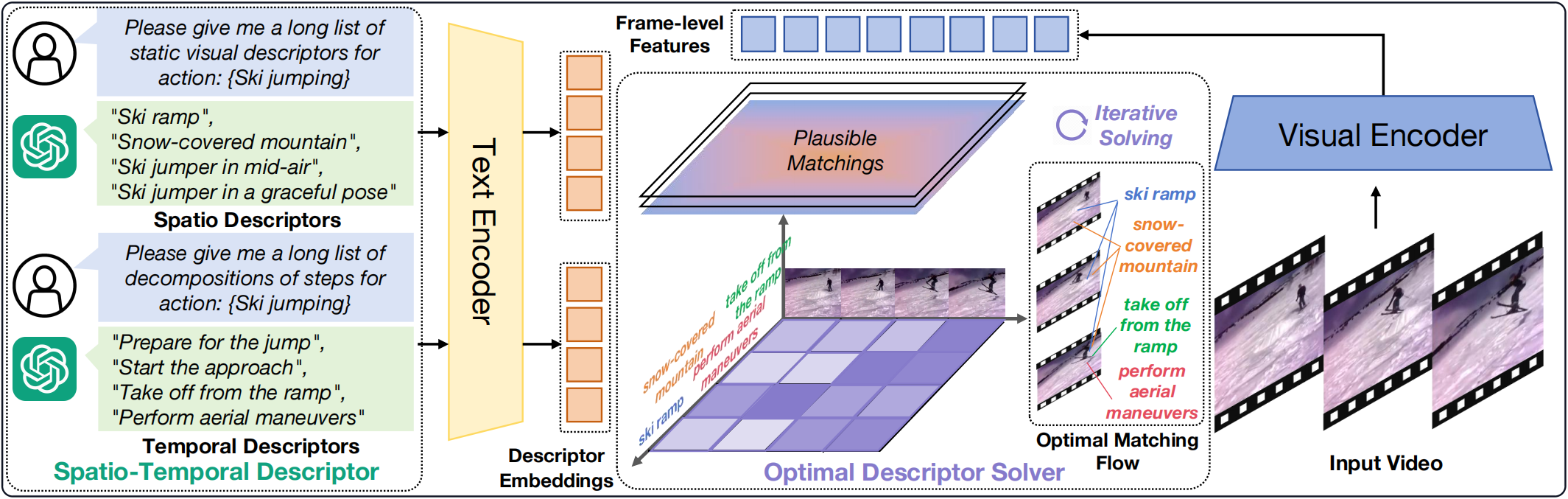

Dominant pipelines tackle visual discrepancies with additional temporal learners while overlooking the textual discrepancy between descriptive narratives and concise category names. This oversight results in a less separable latent space, which may hinder video recognition. We query a Large Language Model to augment category names into corresponding Category Descriptors, which disentangle each category name into Spatio-Temporal Descriptors covering static visual cues and temporal evolution, respectively. To fully refine the textual knowledge, we propose the Optimal Descriptor Solver, which adaptively aligns descriptors with video frames. An optimal matching flow is computed by iteratively solving an entropy-regularized optimal transport (OT) problem, assigning the optimal descriptors to each video instance.
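To make the idea of iteratively solving an entropy-regularized OT problem concrete, here is a minimal sketch using Sinkhorn iterations in plain Python. It is an illustration under stated assumptions, not the implementation in this repository: the function names (`sinkhorn_plan`, `ot_score`), the uniform marginals, the cosine-based cost, and the values of `eps` and `n_iters` are all hypothetical choices made for the example.

```python
import math


def cosine(x, y):
    """Cosine similarity between two non-zero feature vectors."""
    dot = sum(a * b for a, b in zip(x, y))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    return dot / norm


def sinkhorn_plan(cost, eps=0.1, n_iters=100):
    """Approximate the entropy-regularized OT plan with Sinkhorn iterations.

    cost[i][j] is the cost of matching frame i with descriptor j.
    Uniform marginals over frames and descriptors are an assumption here.
    """
    m, n = len(cost), len(cost[0])
    a = [1.0 / m] * m  # mass carried by each frame
    b = [1.0 / n] * n  # mass carried by each descriptor
    kernel = [[math.exp(-c / eps) for c in row] for row in cost]
    u, v = [1.0] * m, [1.0] * n
    for _ in range(n_iters):
        # Alternately rescale rows and columns to match the marginals.
        u = [a[i] / sum(kernel[i][j] * v[j] for j in range(n)) for i in range(m)]
        v = [b[j] / sum(kernel[i][j] * u[i] for i in range(m)) for j in range(n)]
    return [[u[i] * kernel[i][j] * v[j] for j in range(n)] for i in range(m)]


def ot_score(frames, descriptors, eps=0.1, n_iters=100):
    """Similarity between a video and one category, weighted by the OT plan."""
    sim = [[cosine(f, d) for d in descriptors] for f in frames]
    cost = [[1.0 - s for s in row] for row in sim]
    plan = sinkhorn_plan(cost, eps, n_iters)
    return sum(p * s for p_row, s_row in zip(plan, sim) for p, s in zip(p_row, s_row))


# Toy example: three frame features and two descriptor features (made-up values).
frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
descriptors = [[1.0, 0.0], [0.0, 1.0]]
print(ot_score(frames, descriptors))
```

In this sketch, frames that resemble a descriptor receive most of that descriptor's transport mass, so the final score emphasizes well-matched frame-descriptor pairs. A practical version would run the same updates as batched tensor operations on the GPU.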

- Code Release

- Environment

- Checkpoint Release

- Fully-supervised code & model weights

Our codebase is mainly built on ViFi-CLIP. Please follow the instructions provided in their repository to set up the environment.

(Note that you may need to build your environment with mmcv 1.x.)

For all the experiments illustrated in the main paper, we provide config files in the configs folder. For example, to train OST on Kinetics-400, you can run the following command:

    python -m torch.distributed.launch --nproc_per_node=8 \
    main_nce.py -cfg configs/zero_shot/train/k400/16_32_ost.yaml --output /PATH/TO/OUTPUT

To evaluate a model, please use the specific config file in the configs folder according to the dataset and data splits. To evaluate OST in the zero-shot setting with 32 frames on UCF-101 zero-shot split-1, you can run the command below:

    python -m torch.distributed.launch --nproc_per_node=8 \
    main_nce.py -cfg configs/zero_shot/eval/ucf/16_32_ost_zs_ucf101_split1.yaml --output /PATH/TO/OUTPUT \
    --only_test --resume /PATH/TO/CKPT

Please note that we use 8 GPUs in all of our main experiments, and the results may vary across environment settings and hardware.
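If you need to run several configs in a row, the launch commands can be assembled programmatically. The helper below is a small sketch, not part of this repository: `build_launch_command` is a hypothetical name, and it only reuses the script name and flags that appear in the commands in this README (`-cfg`, `--output`, `--only_test`, `--resume`).

```python
import shlex


def build_launch_command(cfg, output, nproc_per_node=8, only_test=False, resume=None):
    """Assemble the launch command as an argument list (hypothetical helper)."""
    cmd = [
        "python", "-m", "torch.distributed.launch",
        f"--nproc_per_node={nproc_per_node}",
        "main_nce.py", "-cfg", cfg, "--output", output,
    ]
    if only_test:
        cmd.append("--only_test")  # evaluation only, no training
    if resume is not None:
        cmd += ["--resume", resume]  # checkpoint to load
    return cmd


# Print the evaluation command for the UCF-101 split-1 config.
eval_cmd = build_launch_command(
    "configs/zero_shot/eval/ucf/16_32_ost_zs_ucf101_split1.yaml",
    "/PATH/TO/OUTPUT",
    only_test=True,
    resume="/PATH/TO/CKPT",
)
print(shlex.join(eval_cmd))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root. Lower `nproc_per_node` if you have fewer than 8 GPUs, keeping in mind that results may then differ from the reported numbers.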

We use the OpenAI-pretrained CLIP-B/16 model in all of our experiments. We provide checkpoints of OST in the zero-shot and few-shot settings below. All of the model checkpoints are available in the HuggingFace Space 🤗.

Zero-shot setting

For the zero-shot setting, the model is first fine-tuned on Kinetics-400 and then directly evaluated on three downstream datasets. We therefore provide our Kinetics-400 fine-tuned model weights for reproducing the zero-shot results reported in our main paper.

| Config | Input | HMDB-51 | UCF-101 | Kinetics-600 | Checkpoints |
|---|---|---|---|---|---|
| OST |  | 55.9 | 79.7 | 75.1 | Link |

Few-shot setting

For the few-shot setting, we follow the evaluation protocol of mainstream pipelines. We evaluate OST in two different settings (directly tuning on CLIP and fine-tuned on K400).

Directly tuning on CLIP

| Config | Input | Shots | Dataset | Top-1 Acc. | Checkpoints |
|---|---|---|---|---|---|
| OST |  | 2 | HMDB-51 | 59.1 | Link |
| OST |  | 4 | HMDB-51 | 62.9 | Link |
| OST |  | 8 | HMDB-51 | 64.9 | Link |
| OST |  | 16 | HMDB-51 | 68.2 | Link |
| OST |  | 2 | UCF-101 | 82.5 | Link |
| OST |  | 4 | UCF-101 | 87.5 | Link |
| OST |  | 8 | UCF-101 | 91.7 | Link |
| OST |  | 16 | UCF-101 | 93.9 | Link |
| OST |  | 2 | Something-Something V2 | 7.0 | Link |
| OST |  | 4 | Something-Something V2 | 7.7 | Link |
| OST |  | 8 | Something-Something V2 | 8.9 | Link |
| OST |  | 16 | Something-Something V2 | 12.2 | Link |

Fine-tuned on K400

Note that for this setting, you only need to replace the original CLIP with our Kinetics-400 fine-tuned model.

| Config | Input | Shots | Dataset | Top-1 Acc. | Checkpoints |
|---|---|---|---|---|---|
| OST |  | 2 | HMDB-51 | 64.8 | Link |
| OST |  | 4 | HMDB-51 | 66.7 | Link |
| OST |  | 8 | HMDB-51 | 69.2 | Link |
| OST |  | 16 | HMDB-51 | 71.6 | Link |
| OST |  | 2 | UCF-101 | 90.3 | Link |
| OST |  | 4 | UCF-101 | 92.6 | Link |
| OST |  | 8 | UCF-101 | 94.4 | Link |
| OST |  | 16 | UCF-101 | 96.2 | Link |
| OST |  | 2 | Something-Something V2 | 8.0 | Link |
| OST |  | 4 | Something-Something V2 | 8.9 | Link |
| OST |  | 8 | Something-Something V2 | 10.5 | Link |
| OST |  | 16 | Something-Something V2 | 12.6 | Link |

If you find this work useful, please consider citing our paper! ;-)

    @inproceedings{chen2023ost,
      title={OST: Refining Text Knowledge with Optimal Spatio-Temporal Descriptor for General Video Recognition},
      author={Tongjia Chen and Hongshan Yu and Zhengeng Yang and Zechuan Li and Wei Sun and Chen Chen},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
      year={2024}
    }

The work was done while Tongjia was a research intern mentored by Chen Chen. We thank Ming Li (UCF) and Yong He (UWA) for proof-reading and discussion.

This repository is built upon portions of ViFi-CLIP, MAXI, and Text4Vis. We sincerely thank the authors for releasing their code.