In this notebook, we’ll use PyTorch to recreate the style transfer method outlined in the paper *Image Style Transfer Using Convolutional Neural Networks* by Gatys et al.

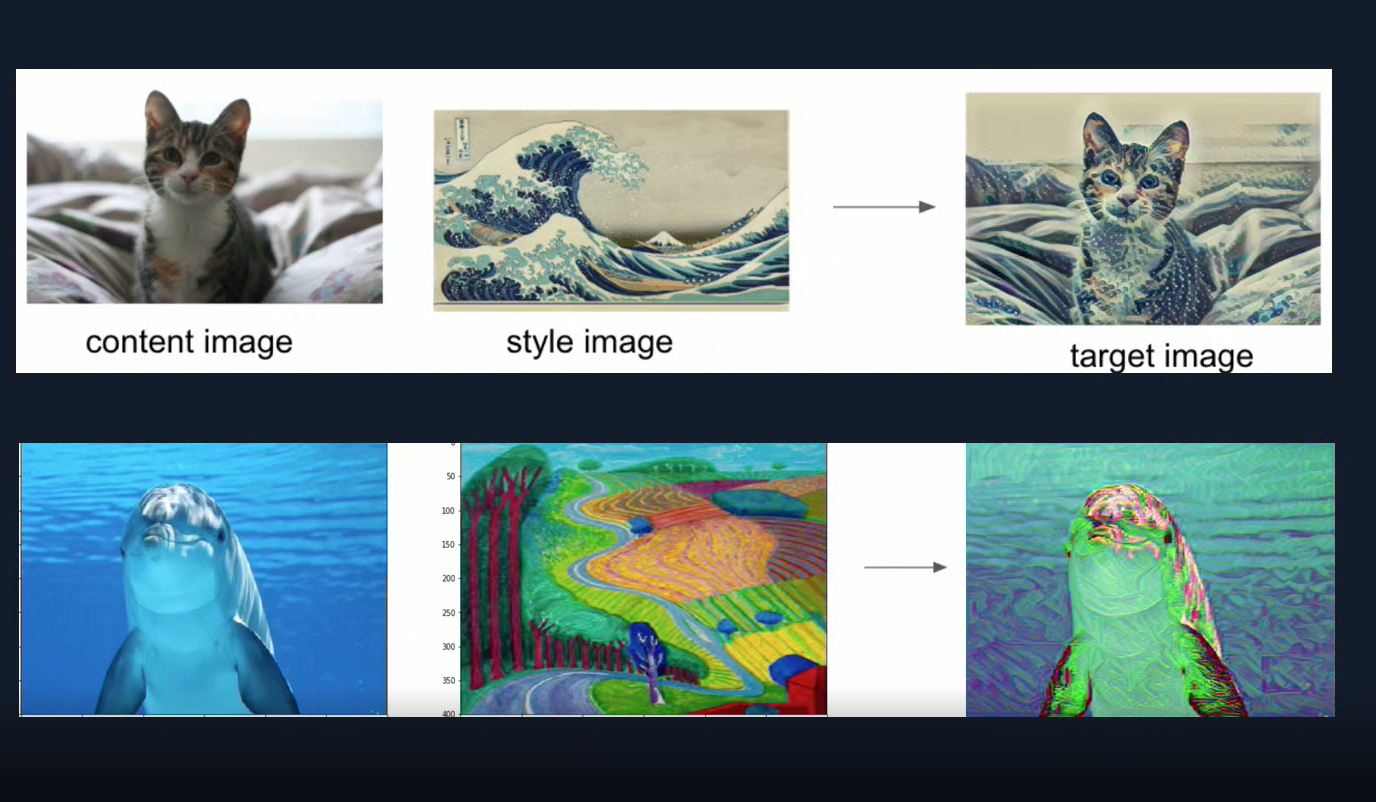

- Style transfer relies on separating the content and style of an image. Given one content image and one style image, we aim to create a new target image that contains our desired content and style components:

  - its objects and their arrangement are similar to those of the content image;
  - its style, colors, and textures are similar to those of the style image.

- Load in a pre-trained VGG Net

- Freeze the weights in selected layers, so that the model can be used as a fixed feature extractor

- Load in content and style images

- Extract features from different layers of our model

- Complete a function to calculate the Gram matrix of a given convolutional layer's output

- Define the content, style, and total loss for iteratively updating a target image
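The first steps (loading VGG, freezing it, and extracting features) can be sketched as below. This is a minimal sketch, not the notebook's own code. It assumes torchvision 0.13 or later and its `vgg19` model (the paper builds on VGG-19). It also assumes the layer indices of torchvision's `vgg19().features` for the layers the paper uses: `conv1_1` to `conv5_1` for style and `conv4_2` for content. `VGG19_LAYERS`, `freeze`, `load_frozen_vgg19`, and `get_features` are illustrative names.

```python
import torch
import torch.nn as nn

# Index of each layer of interest inside torchvision's vgg19().features
# (assumption: the standard torchvision VGG19 layout).
VGG19_LAYERS = {
    "0": "conv1_1",
    "5": "conv2_1",
    "10": "conv3_1",
    "19": "conv4_1",
    "21": "conv4_2",  # content representation
    "28": "conv5_1",
}


def freeze(model: nn.Module) -> nn.Module:
    """Turn off gradients so the network acts as a fixed feature extractor."""
    for param in model.parameters():
        param.requires_grad_(False)
    return model.eval()  # eval() returns the module itself


def load_frozen_vgg19() -> nn.Module:
    """Load the convolutional part of an ImageNet-pretrained VGG19 and freeze it."""
    # Assumption: torchvision >= 0.13, which provides the `weights=` API.
    from torchvision.models import vgg19, VGG19_Weights

    # Only `features` is needed; the fully connected classifier is discarded.
    return freeze(vgg19(weights=VGG19_Weights.IMAGENET1K_V1).features)


def get_features(image: torch.Tensor, model: nn.Module, layers: dict = None) -> dict:
    """Run `image` through `model` layer by layer, keeping the outputs named in `layers`."""
    layers = VGG19_LAYERS if layers is None else layers
    features = {}
    x = image
    for name, layer in model.named_children():
        x = layer(x)
        if name in layers:
            features[layers[name]] = x
    return features
```

Calling `load_frozen_vgg19()` downloads the pretrained weights on first use. `get_features(image, vgg)` then returns a dictionary keyed by layer name, so `features["conv4_2"]` holds the content representation.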
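Loading the content and style images mostly comes down to matching the preprocessing that the pretrained VGG expects. The following is a minimal sketch under several assumptions: Pillow and NumPy are installed, and inputs are normalized with the ImageNet mean and standard deviation. It also uses a hypothetical `max_size` cap of 400 pixels on the longer side to keep optimization fast. `prepare_image` and `load_image` are illustrative names.

```python
import torch
import torch.nn.functional as F

# ImageNet channel statistics used to normalize inputs for torchvision's pretrained VGG.
IMAGENET_MEAN = torch.tensor([0.485, 0.456, 0.406]).view(1, 3, 1, 1)
IMAGENET_STD = torch.tensor([0.229, 0.224, 0.225]).view(1, 3, 1, 1)


def prepare_image(image: torch.Tensor, max_size: int = 400) -> torch.Tensor:
    """Turn a (C, H, W) float tensor in [0, 1] into a normalized (1, 3, h, w) batch.

    Extra channels (e.g. alpha) are dropped, and the image is downscaled so that
    its longer side is at most `max_size` pixels; smaller images keep their size.
    """
    batch = image[:3].unsqueeze(0)  # keep RGB only, add a batch dimension
    h, w = batch.shape[-2:]
    scale = max_size / max(h, w)
    if scale < 1.0:
        new_size = (round(h * scale), round(w * scale))
        batch = F.interpolate(batch, size=new_size, mode="bilinear", align_corners=False)
    return (batch - IMAGENET_MEAN) / IMAGENET_STD


def load_image(path: str, max_size: int = 400) -> torch.Tensor:
    """Read an image file with Pillow (assumed installed) and prepare it for VGG."""
    import numpy as np
    from PIL import Image

    rgb = Image.open(path).convert("RGB")
    array = np.array(rgb, dtype=np.float32) / 255.0  # (H, W, 3) in [0, 1]
    return prepare_image(torch.from_numpy(array).permute(2, 0, 1), max_size)
```

Because a layer's Gram matrix is d×d regardless of the image's height and width, the style image does not have to match the content image's size. Resizing it to the content shape is a convenience rather than a requirement.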
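The Gram matrix and the losses used to update the target image can be sketched as below. This follows one common formulation, assumed here rather than copied from the notebook. The content loss is the mean squared difference of `conv4_2` activations. Each layer's style loss is the mean squared difference of Gram matrices, divided by the layer's size. The total loss is `alpha * content + beta * style`. Adam is used for simplicity; the paper itself optimizes with L-BFGS. The per-layer style weights, `alpha`, `beta`, the learning rate, and the step count are hypothetical values to tune.

```python
import torch


def gram_matrix(feature_map: torch.Tensor) -> torch.Tensor:
    """Gram matrix of a (1, d, h, w) feature map: the (d, d) channel inner products."""
    _, d, h, w = feature_map.shape  # assumes a batch size of 1
    flat = feature_map.reshape(d, h * w)  # one row per channel
    return flat @ flat.t()


def content_loss(target_features: dict, content_features: dict, layer: str = "conv4_2"):
    """Mean squared difference between target and content activations at one layer."""
    return torch.mean((target_features[layer] - content_features[layer]) ** 2)


def style_loss(target_features: dict, style_grams: dict, style_weights: dict):
    """Weighted sum over layers of the mean squared Gram difference, scaled by layer size."""
    loss = 0.0
    for layer, weight in style_weights.items():
        feature = target_features[layer]
        _, d, h, w = feature.shape
        diff = gram_matrix(feature) - style_grams[layer]
        loss = loss + weight * torch.mean(diff ** 2) / (d * h * w)
    return loss


def total_loss(target_features, content_features, style_grams, style_weights,
               alpha: float = 1.0, beta: float = 1e6):
    """alpha weights the content term, beta the style term (hypothetical defaults)."""
    return (alpha * content_loss(target_features, content_features)
            + beta * style_loss(target_features, style_grams, style_weights))


def stylize(content, style, extract, style_weights, steps: int = 2000,
            lr: float = 0.003, alpha: float = 1.0, beta: float = 1e6):
    """Iteratively update a target image; `extract` maps an image to named features."""
    # Content features and style Gram matrices are fixed targets, computed once.
    content_features = extract(content)
    style_grams = {layer: gram_matrix(f) for layer, f in extract(style).items()}

    # Start from a copy of the content image (an assumption; noise is another option).
    target = content.clone().requires_grad_(True)
    optimizer = torch.optim.Adam([target], lr=lr)  # only the image's pixels are optimized
    for _ in range(steps):
        loss = total_loss(extract(target), content_features, style_grams,
                          style_weights, alpha, beta)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    return target.detach()
```

Here `extract` can be any function that maps an image batch to a dictionary of named feature maps, such as one wrapping a frozen VGG19. `style_weights` then names the style layers, e.g. `{"conv1_1": 1.0, "conv2_1": 0.75}` (hypothetical values), letting earlier layers contribute more or less than deeper ones.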

We use SemVer for versioning. For the versions available, see the tags on this repository.

- PyTorch - An open-source machine learning framework that accelerates the path from research prototyping to production deployment.

- Tom Ge - Full-stack engineer - GitHub profile

This project is licensed under the MIT License.