Fracdiff performs fractional differentiation of time-series, à la "Advances in Financial Machine Learning" by M. Prado. Fractional differentiation transforms a time-series into a stationary one while preserving the memory of the original series. Fracdiff features super-fast computation and a scikit-learn compatible API.

See M. L. Prado, "Advances in Financial Machine Learning".
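Here is a rough illustration of why memory is preserved, assuming the standard binomial-series definition. A difference of order d applies (1 - B)^d, where B is the lag operator. Expanding it puts weights w_0 = 1, w_k = -w_{k-1} (d - k + 1) / k on the current and past values. The helper fracdiff_weights is hypothetical (not part of Fracdiff's API). It only computes these weights in pure Python.

```python
def fracdiff_weights(d, window):
    """Hypothetical helper: first `window` weights of (1 - B)^d.

    Uses the binomial-series recurrence w_0 = 1, w_k = -w_{k-1} * (d - k + 1) / k,
    where B is the lag operator (B x_t = x_{t-1}).
    """
    weights = [1.0]
    for k in range(1, window):
        weights.append(-weights[-1] * (d - k + 1) / k)
    return weights


print(fracdiff_weights(1, 5))    # integer order: [1.0, -1.0, 0.0, 0.0, 0.0]
print(fracdiff_weights(0.5, 5))  # fractional order: [1.0, -0.5, -0.125, -0.0625, -0.0390625]
```

For d = 1 every weight past the first lag vanishes, which gives the ordinary difference. For 0 < d < 1 all weights are nonzero and shrink slowly, so each differentiated value still depends on the distant past.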

pip install fracdiff

- fdiff: A function that extends numpy.diff to fractional differentiation.
- sklearn.Fracdiff: A scikit-learn transformer to compute fractional differentiation.
- sklearn.FracdiffStat: Fracdiff plus automatic choice of the differentiation order that makes a time-series stationary.
- torch.fdiff: A functional that extends torch.diff to fractional differentiation.
- torch.Fracdiff: A module that computes fractional differentiation.

Fracdiff is blazingly fast.

The following graphs show that Fracdiff computes fractional differentiation much faster than the "official" implementation.

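Both implementations scale roughly linearly with the number of time-steps (n_samples). However, Fracdiff's execution time barely increases with the number of features (n_features), thanks to its NumPy engine, whereas the official implementation's grows roughly in proportion.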

The following tables of execution times (in ms) show that Fracdiff can be up to ~10000 times faster than the "official" implementation.

| n_samples | fracdiff (ms) | official (ms) |
|---|---|---|
| 100 | 0.675 ± 0.086 | 20.008 ± 1.472 |
| 1000 | 5.081 ± 0.426 | 135.613 ± 3.415 |
| 10000 | 50.644 ± 0.574 | 1310.033 ± 17.708 |
| 100000 | 519.969 ± 8.166 | 13113.457 ± 105.274 |

| n_features | fracdiff (ms) | official (ms) |
|---|---|---|
| 1 | 5.081 ± 0.426 | 135.613 ± 3.415 |
| 10 | 6.146 ± 0.247 | 1350.161 ± 15.195 |
| 100 | 6.903 ± 0.654 | 13675.023 ± 193.960 |
| 1000 | 13.783 ± 0.700 | 136610.030 ± 540.572 |

(Run on Ubuntu 20.04, Intel(R) Xeon(R) CPU E5-2673 v3 @ 2.40GHz. See fracdiff/benchmark for details.)
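You can check the "~10000 times" figure directly from the mean times in the tables above. The following small script is illustrative only. The dictionary names and the speedups helper are introduced here and are not part of Fracdiff.

```python
# Mean execution times in ms as (fracdiff, official), copied from the tables above.
BY_N_SAMPLES = {
    100: (0.675, 20.008),
    1000: (5.081, 135.613),
    10000: (50.644, 1310.033),
    100000: (519.969, 13113.457),
}
BY_N_FEATURES = {
    1: (5.081, 135.613),
    10: (6.146, 1350.161),
    100: (6.903, 13675.023),
    1000: (13.783, 136610.030),
}


def speedups(table):
    """Return {size: official_time / fracdiff_time} for each benchmark row."""
    return {size: official / fast for size, (fast, official) in table.items()}


for label, table in [("n_samples", BY_N_SAMPLES), ("n_features", BY_N_FEATURES)]:
    for size, ratio in speedups(table).items():
        print(f"{label}={size}: {ratio:,.0f}x faster")
```

Across n_samples the ratio stays between roughly 25x and 30x. The ~10000x figure therefore comes from the n_features benchmark, where Fracdiff's time barely grows while the official implementation's grows roughly in proportion to the number of features.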

A function fdiff calculates fractional differentiation.

This is an extension of numpy.diff to a fractional order.
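A few lines of NumPy can sketch how a fractional order extends numpy.diff. This is not Fracdiff's implementation. The function fdiff_sketch is hypothetical. It assumes the series is zero-padded before its first sample (so the output keeps the input's length) and that the weights are truncated to a fixed window (window=10 here is an assumption). Under these assumptions it reproduces the values of the 1-D fdiff(a, 0.5) example that follows.

```python
import numpy as np


def fdiff_sketch(a, d, window=10):
    """Hypothetical 1-D fractional difference of order d (illustration only).

    Assumes zero-padding before the first sample, so the output has the same
    length as the input, and truncates the weights to `window` terms.
    """
    a = np.asarray(a, dtype=float)
    # Weights of (1 - B)^d: w_0 = 1, w_k = -w_{k-1} * (d - k + 1) / k.
    w = np.ones(window)
    for k in range(1, window):
        w[k] = -w[k - 1] * (d - k + 1) / k
    # The first len(a) entries of the full convolution are
    # y[t] = sum_k w[k] * a[t - k], with a[t - k] = 0 whenever t - k < 0.
    return np.convolve(a, w)[: len(a)]


a = np.array([1, 2, 4, 7, 0])
print(fdiff_sketch(a, 0.5))  # same values as fdiff(a, 0.5) in the example below
print(fdiff_sketch(a, 1)[1:], np.diff(a))  # integer order: matches np.diff after dropping the first element
```

Unlike fdiff, which the example shows is equal to numpy.diff for an integer order, this sketch keeps the first (zero-padded) element. For d = 1 its output is np.diff(a) with a[0] prepended.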

import numpy as np
from fracdiff import fdiff

a = np.array([1, 2, 4, 7, 0])
fdiff(a, 0.5)
# array([ 1. , 1.5 , 2.875 , 4.6875 , -4.1640625])
np.array_equal(fdiff(a, n=1), np.diff(a, n=1))
# True

a = np.array([[1, 3, 6, 10], [0, 5, 6, 8]])
fdiff(a, 0.5, axis=0)
# array([[ 1. , 3. , 6. , 10. ],
# [-0.5, 3.5, 3. , 3. ]])
fdiff(a, 0.5, axis=-1)
# array([[1. , 2.5 , 4.375 , 6.5625],
# [0. , 5. , 3.5 , 4.375 ]])

A transformer class Fracdiff performs fractional differentiation through its transform method.

from fracdiff.sklearn import Fracdiff

X = ... # 2d time-series with shape (n_samples, n_features)

f = Fracdiff(0.5)

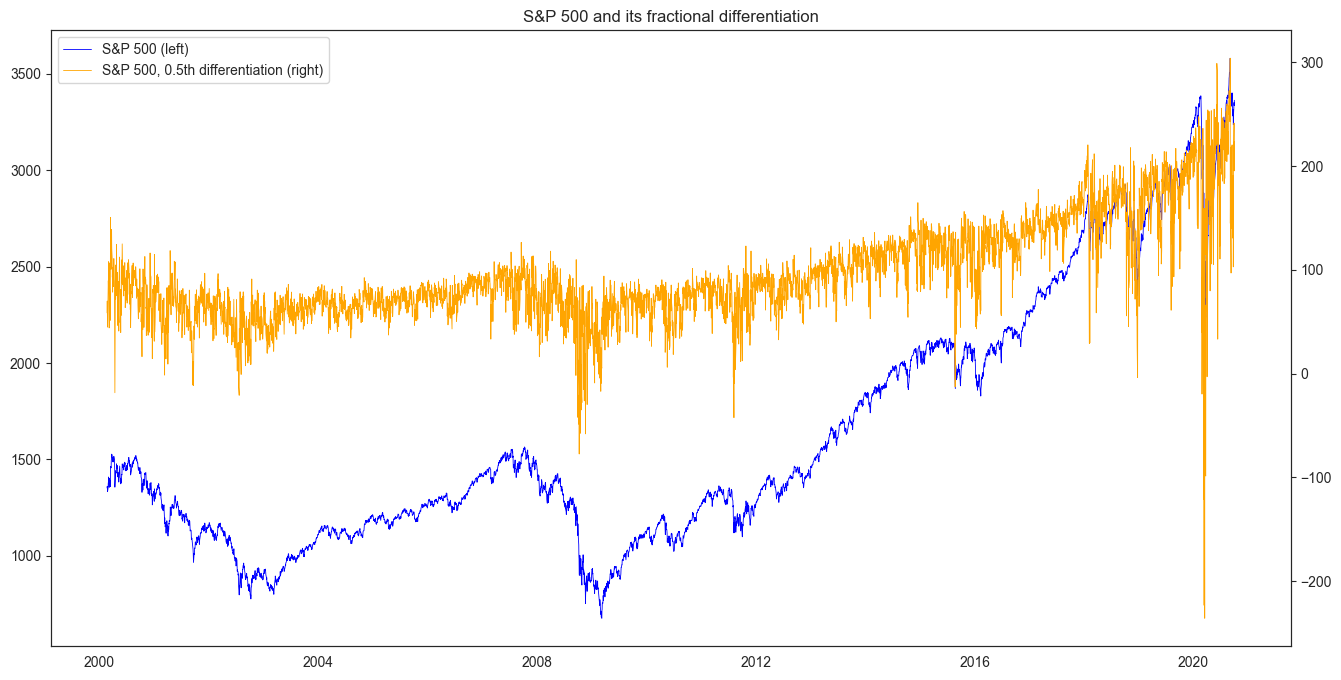

X = f.fit_transform(X)For example, 0.5th differentiation of S&P 500 historical price looks like this:

Fracdiff is compatible with the scikit-learn API.

One can incorporate it into a pipeline.

from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from fracdiff.sklearn import Fracdiff

X, y = ...  # Dataset

pipeline = Pipeline([
    ('scaler', StandardScaler()),
    ('fracdiff', Fracdiff(0.5)),
    ('regressor', LinearRegression()),
])

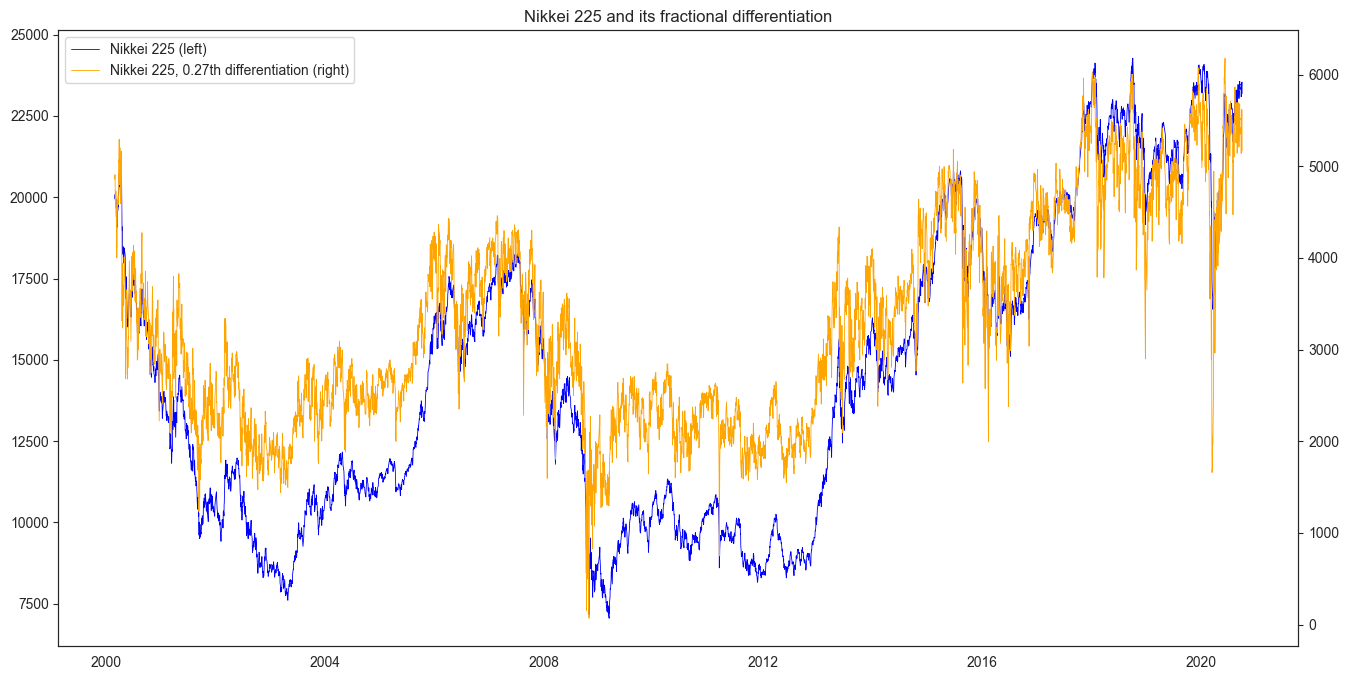

pipeline.fit(X, y)A transformer class FracdiffStat finds the minumum order of fractional differentiation that makes time-series stationary.

Differentiated time-series with this order is obtained by subsequently applying transform method.

This series is interpreted as a stationary time-series keeping the maximum memory of the original time-series.

from fracdiff.sklearn import FracdiffStat

X = ... # 2d time-series with shape (n_samples, n_features)

f = FracdiffStat()

X = f.fit_transform(X)

f.d_

# array([0.71875 , 0.609375, 0.515625])The result for Nikkei 225 index historical price looks like this:
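One plausible way to search for a minimum order like FracdiffStat's is bisection over d. This is a minimal sketch, not FracdiffStat's code. The name min_stationary_order, its parameters, and the 1/64 precision are hypothetical. It assumes the stationarity check is monotone in d (once an order passes, every larger order passes too) and that the upper bound passes. In practice, is_stationary(d) might differentiate the series with order d and test for a unit root, for example with an augmented Dickey-Fuller test. Which test and threshold FracdiffStat actually uses is an assumption here.

```python
def min_stationary_order(is_stationary, lower=0.0, upper=1.0, precision=1 / 64):
    """Hypothetical bisection for the smallest d in [lower, upper] passing is_stationary.

    Assumes is_stationary(d) is monotone in d and that is_stationary(upper) is True.
    Returns an order that passes and lies within `precision` of the true minimum.
    """
    while upper - lower > precision:
        mid = (lower + upper) / 2
        if is_stationary(mid):
            upper = mid  # mid is enough: look for a smaller order
        else:
            lower = mid  # mid is not enough: the minimum lies above it
    return upper


# Toy check whose true minimum is 0.6, standing in for a real stationarity test.
print(min_stationary_order(lambda d: d >= 0.6))
```

Bisection needs only about log2(1 / precision) evaluations of the stationarity check. This matters when each check means differentiating the whole series and running a statistical test.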

Fracdiff can also be applied to a PyTorch tensor, which lets one benefit from GPU acceleration.

import torch
from fracdiff.torch import fdiff

input = torch.tensor(...)
output = fdiff(input, 0.5)

import torch
from fracdiff.torch import Fracdiff

module = Fracdiff(0.5)
module
# Fracdiff(0.5, dim=-1, window=10, mode='same')

input = torch.tensor(...)
output = module(input)

More examples are provided here.

Example solutions of exercises in Section 5 of "Advances in Financial Machine Learning" are provided here.

Any contributions are more than welcome.

The maintainer (simaki) is no longer making further enhancements and welcomes pull requests that implement them. See Issues for proposed features. Please take a look at CONTRIBUTING.md before creating a pull request.