Team Name: Delos Mobile

Team Members:

- Justin Michela | jmichela3@gmail.com

- Daniel Han | daniel88han@gmail.com

- Thomas Tracey | tommytracey@gmail.com

- Saajan Shridhar | saajan.is@gmail.com

Team Lead: Justin Michela

This is the repo for the final project of the Udacity Self-Driving Car Nanodegree, in which we program a real self-driving car.

The goal of the project is to get Udacity's self-driving car to drive around a test track while avoiding obstacles and stopping at traffic lights.

Here is a brief video from Udacity that explains the project in more detail.

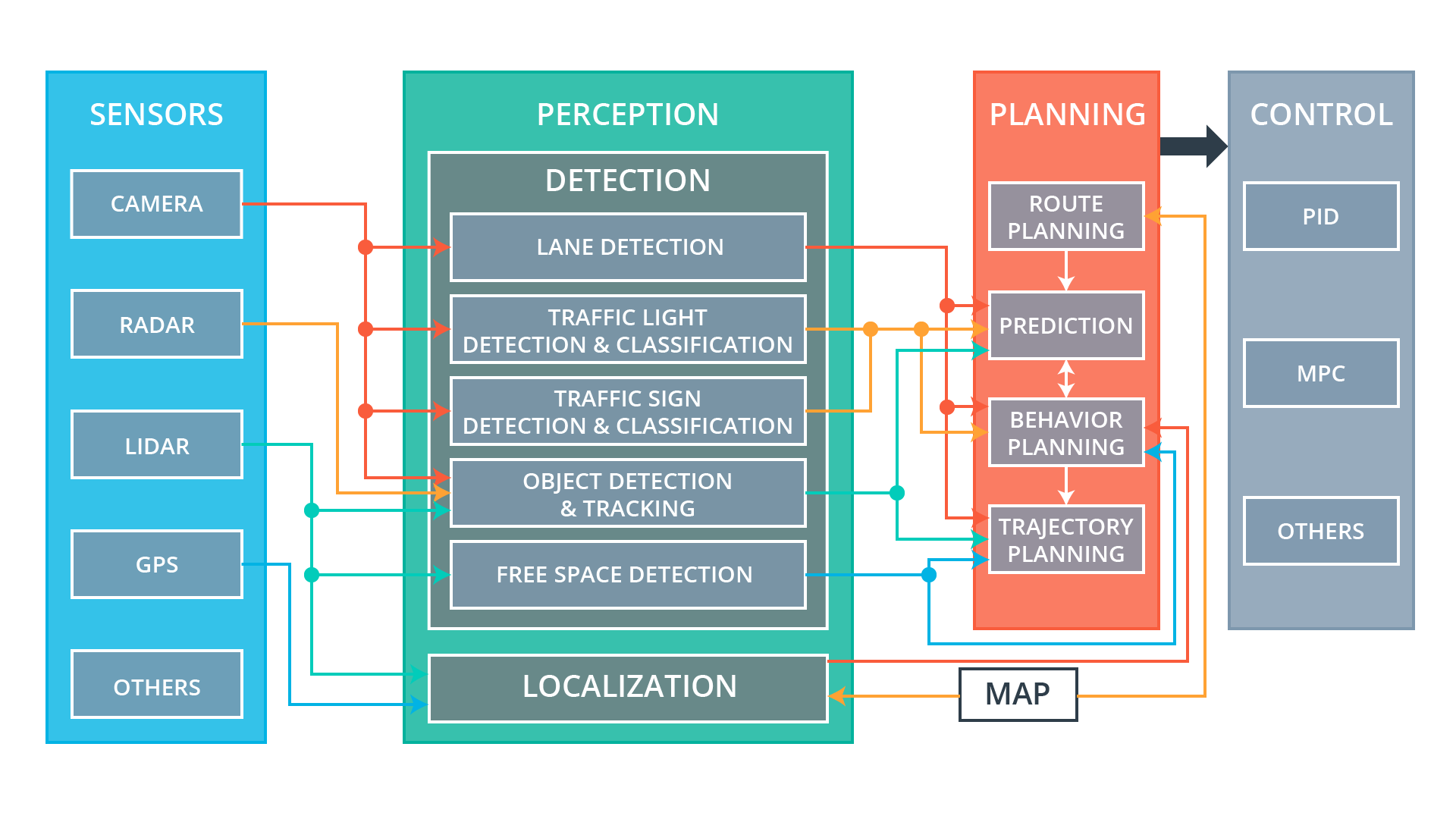

There are three primary subsystems which need to be built and integrated in this project:

- Perception: Takes in sensor data to localize the vehicle and detect aspects of the driving environment (e.g., road lanes and traffic lights).

- Planning: Determines the optimal path and target velocity to ensure a safe and smooth driving experience, then updates the vehicle waypoints accordingly.

- Control: Actuates the vehicle's steering, throttle, and brake commands to execute the desired driving path and target velocity.

As you can see, within each of the three subsystems, there are various components. These components are already quite familiar to us since we've built each of them throughout the prior 12 projects of the program. But we've yet to build an integrated system and test it on an actual car. So, for this final project we need to build and integrate a subset of these components.

At a high level, we need to:

- Get the car to drive safely and smoothly around the track.

- Localize the car's position on the track.

- Keep the car driving in the middle lane.

- Use camera sensor data to detect traffic lights.

- Classify whether the traffic light is green, yellow, or red.

- Get the car to stop smoothly at red lights.

- Get the car to smoothly resume driving when the light turns green.
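A classifier that labels each camera frame independently can flicker between states, which works against stopping and resuming smoothly. One way to steady it, sketched below in Python, is to accept a new light state only after it has been seen in several consecutive frames. The names `Light`, `LightDebouncer`, and `should_stop`, the threshold of 3 frames, and the choice to treat yellow like red are all assumptions made for illustration; this is not a description of the nodes in this repo.

```python
from enum import Enum


class Light(Enum):
    RED = 0
    YELLOW = 1
    GREEN = 2
    UNKNOWN = 3


class LightDebouncer:
    """Report a new light state only after `threshold` consecutive sightings."""

    def __init__(self, threshold=3):
        self.threshold = threshold        # hypothetical value, tune as needed
        self.stable = Light.UNKNOWN       # last state we trusted
        self.candidate = Light.UNKNOWN    # state currently being counted
        self.count = 0

    def update(self, observed):
        """Feed one per-frame classification and return the trusted state."""
        if observed == self.candidate:
            self.count += 1
        else:
            self.candidate = observed
            self.count = 1
        if self.count >= self.threshold:
            self.stable = self.candidate
        return self.stable


def should_stop(state):
    """Assumed policy: treat yellow like red, and drive on otherwise."""
    return state in (Light.RED, Light.YELLOW)
```

With a threshold of 3, a single misclassified frame in the middle of a run of red frames does not change the reported state.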

First, we are required to get everything working in the simulator. Then, once approved, our code will be tested by Udacity in "Carla," the self-driving car pictured above.

Here is a high-level breakdown of our approach.

- Implement traffic light detection and classification using a convolutional neural network.

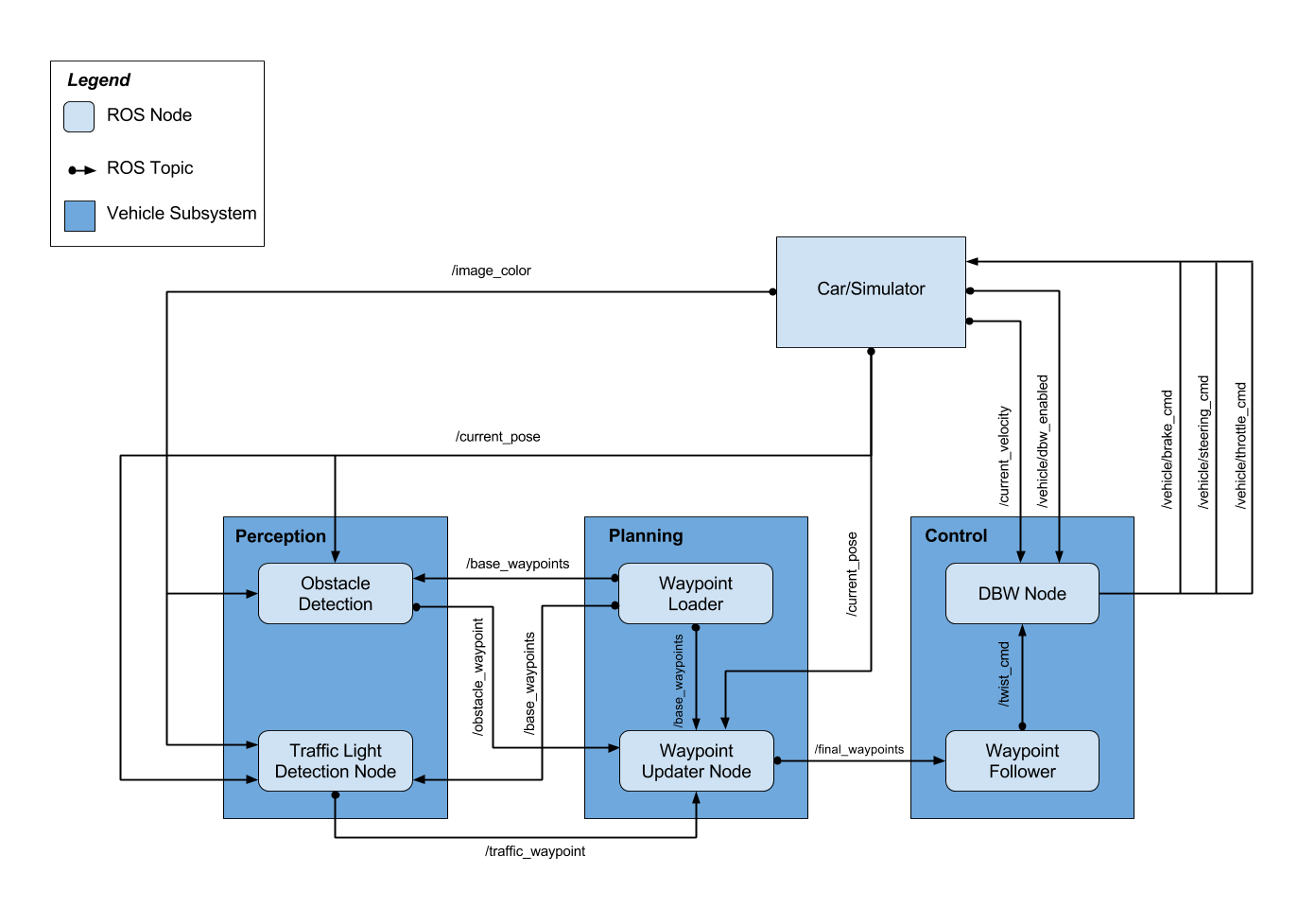

- Implement a ROS node called the `waypoint_updater`. This node sets the target velocity for each waypoint based on any upcoming traffic lights or obstacles. For example, whenever the car needs to stop at a red light, this node will set decelerating velocities for the series of waypoints between the car and the traffic light.

- Implement a `drive_by_wire` ROS node that takes target trajectory information as input and sends control commands to navigate the vehicle.
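To make the "decelerating velocities" idea concrete, here is a minimal Python sketch of one possible velocity profile. It assumes constant deceleration, so the speed allowed at a waypoint follows from v² = 2·a·d, where d is the remaining distance to the stop line. The function name `decelerate`, its parameters, and the 1.0 m/s² default are hypothetical; the actual profile used by the `waypoint_updater` node may differ.

```python
import math


def decelerate(distances_to_stop, cruise_speed, max_decel=1.0):
    """Return a target speed (m/s) for each waypoint ahead of a stop line.

    distances_to_stop: distance in metres from each waypoint to the stop line.
    cruise_speed: speed in m/s that is never exceeded.
    max_decel: assumed constant deceleration in m/s^2 (hypothetical default).
    """
    speeds = []
    for d in distances_to_stop:
        if d <= 0.0:
            # At or past the stop line: the car should be stationary.
            v = 0.0
        else:
            # Fastest speed from which the car can still stop within d metres.
            v = math.sqrt(2.0 * max_decel * d)
        speeds.append(min(v, cruise_speed))
    return speeds


# Waypoints 32 m, 8 m, 2 m, and 0 m before the stop line, cruising at 5 m/s.
print(decelerate([32.0, 8.0, 2.0, 0.0], cruise_speed=5.0))
# -> [5.0, 4.0, 2.0, 0.0]
```

The square-root shape brakes gently far from the light and brings the speed to exactly zero at the stop line; capping at the cruise speed leaves distant waypoints unchanged.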

Udacity has provided a ROS framework for us to build and connect the nodes pictured above. This framework allows us to run our code in the simulator as well as the actual self-driving car.
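A control node like `drive_by_wire` has to turn the gap between target and current velocity into an actuator command. A PID controller is one common way to do that; the sketch below is a generic Python version, not the controller from this repo. The class name `PID`, the gains, the output limits, and the simple anti-windup rule are all assumptions.

```python
class PID:
    """Generic PID controller with a clamped output (illustrative only)."""

    def __init__(self, kp, ki, kd, out_min=0.0, out_max=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def step(self, error, dt):
        """error = target velocity - current velocity; dt = seconds since last step."""
        integral = self.integral + error * dt
        if self.prev_error is None:
            derivative = 0.0  # no history on the first call
        else:
            derivative = (error - self.prev_error) / dt
        raw = self.kp * error + self.ki * integral + self.kd * derivative
        out = max(self.out_min, min(self.out_max, raw))
        # Anti-windup: keep the new integral only when the output is not clamped.
        if out == raw:
            self.integral = integral
        self.prev_error = error
        return out


# Hypothetical gains; a throttle command is limited to the range [0, 1].
throttle_pid = PID(kp=0.5, ki=0.0, kd=0.0)
throttle = throttle_pid.step(error=1.0, dt=0.02)
```

Clamping the output keeps the command inside the range the actuator accepts, and skipping integral accumulation while clamped avoids a large overshoot after a long saturated stretch.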

Here is a video of our project running on a real self-driving car.

The VM we tested on had limited resources. As a result, lag appears when all the ROS components run simultaneously, and it worsens over time.

To work around this problem, we turn the camera off in the simulator (effectively disabling the traffic light detection component) unless a traffic light is on the horizon.

This should not be required on a machine with higher specs.
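The camera gating described above can be sketched as a simple distance check. The Python snippet below is only an illustration: the function name `light_on_horizon`, the 100 m default, and the use of straight-line distance are assumptions, since this README does not specify how "on the horizon" is measured. The check also ignores whether a light is ahead of or behind the car.

```python
import math


def light_on_horizon(car_xy, light_xys, horizon_m=100.0):
    """Return True if any traffic light lies within horizon_m metres of the car.

    car_xy: (x, y) position of the car in map coordinates.
    light_xys: iterable of (x, y) traffic light positions.
    horizon_m: hypothetical distance threshold in metres.
    """
    cx, cy = car_xy
    return any(
        math.hypot(lx - cx, ly - cy) <= horizon_m
        for lx, ly in light_xys
    )


# Only spend CPU time on camera frames when a light is close enough to matter.
process_camera = light_on_horizon((0.0, 0.0), [(30.0, 40.0)], horizon_m=50.0)
```

A node could evaluate this on every pose update and skip image classification whenever it returns `False`.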

Please use one of the two installation options: native or Docker.

- Be sure that your workstation is running Ubuntu 16.04 Xenial Xerus or Ubuntu 14.04 Trusty Tahir. Ubuntu downloads can be found here.

- If using a Virtual Machine to install Ubuntu, use the following configuration as a minimum:

  - 2 CPUs

  - 2 GB system memory

  - 25 GB of free hard drive space

  The Udacity-provided virtual machine has ROS and Dataspeed DBW already installed, so you can skip the next two steps if you are using it.

- Follow these instructions to install ROS:

  - ROS Kinetic if you have Ubuntu 16.04.

  - ROS Indigo if you have Ubuntu 14.04.

- Dataspeed DBW

  - Use this option to install the SDK on a workstation that already has ROS installed: One Line SDK Install (binary)

- Download the Udacity Simulator.

Build the Docker image

docker build . -t capstone

Run the Docker container

docker run -p 4567:4567 -v $PWD:/capstone -v /tmp/log:/root/.ros/ --rm -it capstone

To set up port forwarding, please refer to the instructions from Term 2.

- Clone the project repository

git clone https://github.com/udacity/CarND-Capstone.git

- Install Python dependencies

cd CarND-Capstone

pip install -r requirements.txt

- Make and run styx

cd ros

catkin_make

source devel/setup.sh

roslaunch launch/styx.launch

- Run the simulator

- Download the training bag that was recorded on the Udacity self-driving car.

- Unzip the file

unzip traffic_light_bag_file.zip

- Play the bag file

rosbag play -l traffic_light_bag_file/traffic_light_training.bag

- Launch your project in site mode

cd CarND-Capstone/ros

roslaunch launch/site.launch

- Confirm that traffic light detection works on real-life images