Face detection Keras model using yolov3 as a base model and a pretrained model, including face identification

Using the pretrained yolov3 Keras model, the face detection model is developed with the unconstrained college students face dataset provided by UCCS, with reference to YOLOv3: An Incremental Improvement.

- Develop face vijnana yolov3.

- Train and evaluate face detector with the UCCS dataset.

This project is closed.

The face detection model has been developed and tested on Linux (Ubuntu 16.04.6 LTS), Anaconda 4.6.11, Python 3.6.8, TensorFlow 1.13.1 (Keras's backend), and Keras 2.2.4, using 8 CPUs, 52 GB of memory, and 4 x NVIDIA Tesla K80 GPUs.

Install Anaconda

conda create -n tf1_p36 python=3.6

conda activate tf1_p36

Install CUDA Toolkit 10.1

Install cuDNN v7.6.0 for CUDA 10.1

conda install tensorflow==1.13.1

or

conda install tensorflow-gpu==1.13.1

git clone https://github.com/tonandr/face_vijnana_yolov3.git

cd face_vijnana_yolov3

python setup.py sdist bdist_wheel

pip install -e ./

cd src/space

wget https://pjreddie.com/media/files/yolov3.weights

In the resource directory, create the training and validation folders. Copy the training images and training.csv into the training folder, and the validation images and validation.csv into the validation folder.
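The folder layout described above can be prepared and checked with a short script. This is a minimal sketch, not part of the project: the function names `prepare_dataset_dirs` and `missing_annotation_files` are hypothetical, and only the folder and CSV names come from the text above.

```python
from pathlib import Path


def prepare_dataset_dirs(resource_dir):
    """Create the training and validation folders under resource_dir."""
    resource = Path(resource_dir)
    splits = {}
    for split in ("training", "validation"):
        split_dir = resource / split
        # exist_ok makes the call safe to repeat on an existing layout.
        split_dir.mkdir(parents=True, exist_ok=True)
        splits[split] = split_dir
    return splits


def missing_annotation_files(resource_dir):
    """Return the expected CSV paths that are not present yet."""
    resource = Path(resource_dir)
    expected = [
        resource / "training" / "training.csv",
        resource / "validation" / "validation.csv",
    ]
    return [path for path in expected if not path.is_file()]
```

Calling `prepare_dataset_dirs("resource")` from the repository root and then `missing_annotation_files("resource")` lists which CSV files still need to be copied before training.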

The dataset can be obtained from UCCS.

    {
        "fd_conf": {
            "mode": "train",
            "raw_data_path": "/home/ubuntu/face_vijnana_yolov3/resource/training",
            "test_path": "/home/ubuntu/face_vijnana_yolov3/resource/validation",
            "output_file_path": "solution_fd.csv",
            "multi_gpu": false,
            "num_gpus": 4,
            "yolov3_base_model_load": false,
            "hps": {
                "lr": 0.0001,
                "beta_1": 0.99,
                "beta_2": 0.99,
                "decay": 0.0,
                "epochs": 67,
                "step": 1,
                "batch_size": 40,
                "face_conf_th": 0.5,
                "nms_iou_th": 0.5,
                "num_cands": 60,
                "face_region_ratio_th": 0.8
            },
            "nn_arch": {
                "image_size": 416,
                "bb_info_c_size": 6
            },
            "model_loading": false
        },
        "fi_conf": {
            "mode": "fid_db",
            "raw_data_path": "/home/ubuntu/face_vijnana_yolov3/resource/training",
            "test_path": "/home/ubuntu/face_vijnana_yolov3/resource/validation",
            "output_file_path": "solution_fi.csv",
            "multi_gpu": false,
            "num_gpus": 4,
            "yolov3_base_model_load": false,
            "hps": {
                "lr": 0.000001,
                "beta_1": 0.99,
                "beta_2": 0.99,
                "decay": 0.0,
                "epochs": 35,
                "step": 1,
                "batch_size": 16,
                "sim_th": 0.2
            },
            "nn_arch": {
                "image_size": 416,
                "dense1_dim": 64
            },
            "model_loading": true
        }
    }
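The `face_conf_th` and `nms_iou_th` hyperparameters in `fd_conf` suggest the usual detection post-processing: drop low-confidence boxes, then apply non-maximum suppression (NMS) by IoU. The sketch below shows that general technique only. It is not taken from this project's code; the function names, the `(x1, y1, x2, y2, score)` box layout, and the choice of inclusive or strict comparisons are all assumptions.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # Clamp at zero so non-overlapping boxes give an empty intersection.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(detections, face_conf_th=0.5, nms_iou_th=0.5):
    """Greedy NMS over (x1, y1, x2, y2, score) tuples."""
    # Keep only confident boxes, highest score first.
    candidates = sorted(
        (d for d in detections if d[4] >= face_conf_th),
        key=lambda d: d[4],
        reverse=True,
    )
    kept = []
    for det in candidates:
        # A box survives only if it does not overlap a kept box too much.
        if all(iou(det[:4], k[:4]) <= nms_iou_th for k in kept):
            kept.append(det)
    return kept
```

With both thresholds at 0.5, two boxes that overlap by more than half collapse into the higher-scoring one, while a distant box is kept as a separate face.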

It is assumed that 4 Tesla K80 GPUs are available. Set mode to "train" in fd_conf. To speed up training, set multi_gpu to true and num_gpus to the number of GPUs.
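Switching between runs means editing the same few keys each time, so a small helper can make the change explicit. This is a sketch under assumptions: `with_run_settings` is a hypothetical name, the dictionary below is a trimmed-down stand-in for the configuration shown above, and how the project reads its configuration file is not shown here.

```python
import copy
import json


def with_run_settings(conf, section, mode, model_loading=None,
                      multi_gpu=None, num_gpus=None):
    """Return a copy of conf with run settings changed in one section."""
    if section not in conf:
        raise KeyError("unknown config section: %s" % section)
    # Work on a deep copy so the caller's configuration is left untouched.
    updated = copy.deepcopy(conf)
    target = updated[section]
    target["mode"] = mode
    if model_loading is not None:
        target["model_loading"] = model_loading
    if multi_gpu is not None:
        target["multi_gpu"] = multi_gpu
    if num_gpus is not None:
        target["num_gpus"] = num_gpus
    return updated


# A trimmed-down stand-in for the fd_conf section of the configuration.
example_conf = json.loads("""
{
  "fd_conf": {"mode": "train", "multi_gpu": false, "num_gpus": 4,
              "model_loading": false}
}
""")

# Training on four GPUs, as described in the text.
train_conf = with_run_settings(example_conf, "fd_conf", "train",
                               multi_gpu=True, num_gpus=4)
print(json.dumps(train_conf, indent=2))
```

The same helper covers the later steps, for example `with_run_settings(example_conf, "fd_conf", "evaluate", model_loading=True)`.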

python face_detection.py

You can download the pretrained face detection Keras model. It should be moved into face_vijnana_yolov3/src/space.

Set mode to "evaluate" or "test" in fd_conf, and set model_loading to true.

python face_detection.py

Mode should be set to "data" in fi_conf.

python face_identification.py

You can download subject faces and the relevant meta file.

The subject face folder should be moved to the resource folder, and the relevant meta file should be moved to the src/space folder.

Set mode to "train". To train the model with previous weights, you should set model_loading to true.

python face_identification.py

Set mode to "evaluate" or "test" in fi_conf, and set model_loading to true.

python face_identification.py

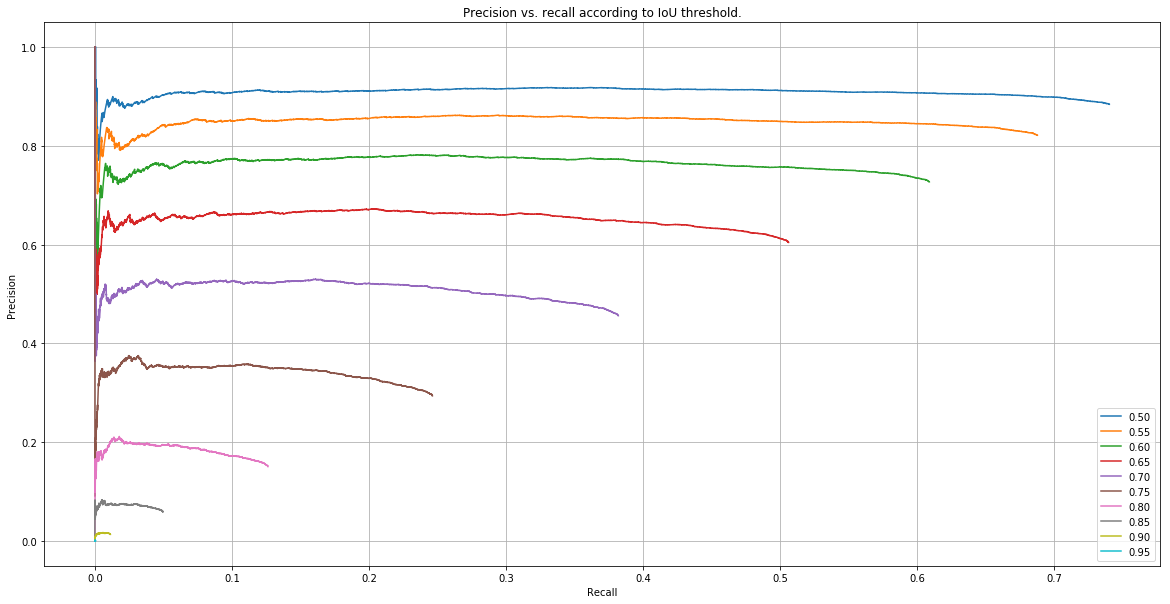

After obtaining the face detection solution file, solution_fd.csv, mAP can be calculated as follows.

python evaluate.py -m cal_map_fd -g validation.csv -s solution_fd.csv

The result is saved in p_r_curve.h5 in HDF5 format, so you can load it to analyze the performance.

We have evaluated face vijnana yolov3's face detection performance with the UCCS dataset. The model was not trained until saturation, however, so further training may improve the performance.

| mAP | AP50 | AP55 | AP60 | AP65 | AP70 | AP75 | AP80 | AP85 | AP90 | AP95 |
|---|---|---|---|---|---|---|---|---|---|---|
| 23.57 | 67.21 | 58.35 | 46.61 | 33.04 | 19.45 | 8.41 | 2.32 | 0.35 | 0.0172 | 0.0000635 |
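The table does not say how mAP relates to the per-threshold columns. Assuming the common convention that mAP is the mean of AP over IoU thresholds from 0.50 to 0.95 in steps of 0.05, the reported numbers can be checked with a few lines of arithmetic. The variable names are illustrative only.

```python
# AP values copied from the table above, keyed by IoU threshold in percent.
ap_by_iou = {
    50: 67.21, 55: 58.35, 60: 46.61, 65: 33.04, 70: 19.45,
    75: 8.41, 80: 2.32, 85: 0.35, 90: 0.0172, 95: 0.0000635,
}

# Assumed convention: mAP is the plain average over the ten thresholds.
mean_ap = sum(ap_by_iou.values()) / len(ap_by_iou)
reported_map = 23.57

print("mean of AP50..AP95: %.4f" % mean_ap)
print("difference from reported mAP: %.4f" % abs(mean_ap - reported_map))
```

The mean comes out at about 23.576, within 0.01 of the reported 23.57, which is consistent with that convention given that the table entries are rounded. It also shows how much the average is pulled down by the strict thresholds: AP80 and above contribute almost nothing.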

The following are face detection result images.