Raster Vision is an open source Python framework for building computer vision models on satellite, aerial, and other large imagery sets (including oblique drone imagery).

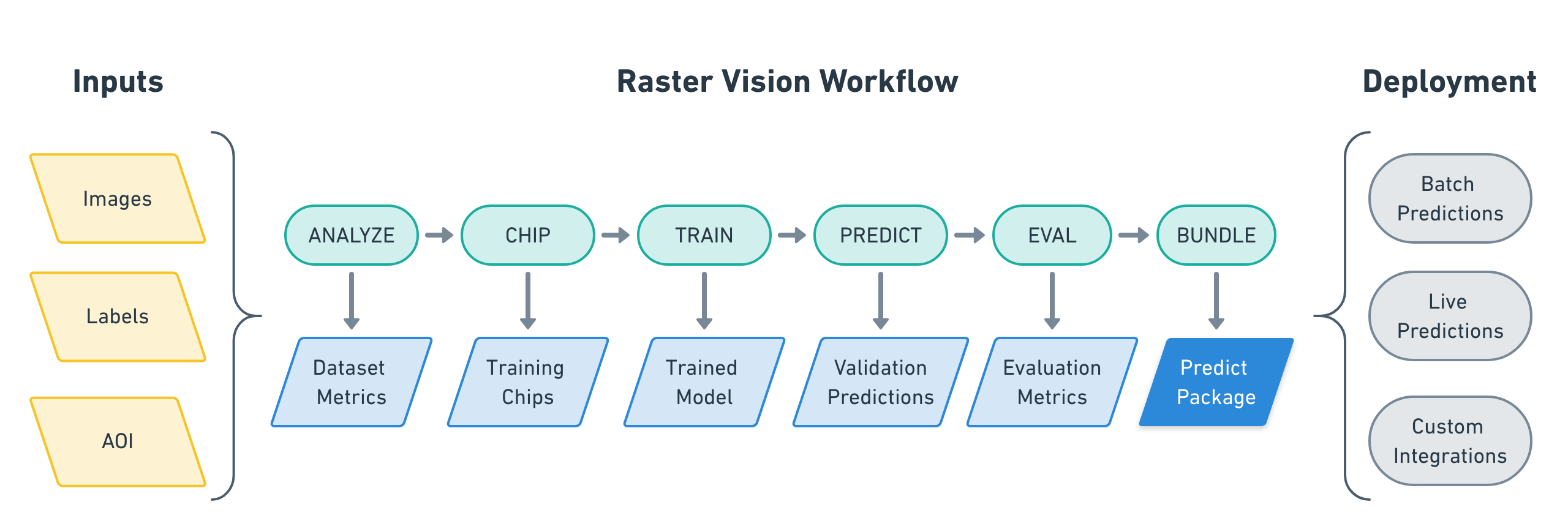

- It allows users (who don't need to be experts in deep learning!) to quickly and repeatably configure experiments that execute a machine learning workflow including: analyzing training data, creating training chips, training models, creating predictions, evaluating models, and bundling the model files and configuration for easy deployment.

- There is built-in support for chip classification, object detection, and semantic segmentation using TensorFlow.

- Experiments can be executed on CPUs and GPUs with built-in support for running in the cloud using AWS Batch.

- The framework is extensible to new data sources, tasks (e.g. object detection), backends (e.g. the TF Object Detection API), and cloud providers.

- There is a QGIS plugin for viewing the results of experiments on a map.
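To make the "creating training chips" step of the workflow more concrete, here is a minimal sketch of cutting a large scene into fixed-size windows. It is not Raster Vision's implementation: the function name grid_windows, the stride parameter, and the 650x650 scene size are assumptions chosen for illustration.

```python
from typing import Iterator, Tuple

# (row_offset, col_offset, height, width) in pixels
Window = Tuple[int, int, int, int]


def grid_windows(height: int, width: int, chip_size: int,
                 stride: int) -> Iterator[Window]:
    """Yield square chip windows that fit entirely inside the scene."""
    for row in range(0, height - chip_size + 1, stride):
        for col in range(0, width - chip_size + 1, stride):
            yield (row, col, chip_size, chip_size)


# A hypothetical 650x650 pixel scene cut into non-overlapping 300x300 chips.
windows = list(grid_windows(650, 650, chip_size=300, stride=300))
print(len(windows))  # 4 windows, arranged as a 2x2 grid
```

Pixels beyond the last full window are simply dropped in this sketch; a real pipeline might pad the scene or sample windows at random instead.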

See the documentation for more details.

Setup

There are several ways to set up Raster Vision:

- To build Docker images from scratch, after cloning this repo, run docker/build, and run the container using docker/run.

- Docker images are published to quay.io. The tag for the raster-vision image determines what type of image it is:

  - The cpu-* tags are for running the CPU containers.

  - The gpu-* tags are for running the GPU containers.

  - We publish a new tag per merge into master, which is tagged with the first 7 characters of the commit hash. To use the latest version, pull the latest suffix, e.g. raster-vision:gpu-latest. Git tags are also published, with the GitHub tag name as the Docker tag suffix.

- Raster Vision can be installed directly using pip install rastervision. However, some of its dependencies will have to be installed manually.
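The tag scheme above can be summarized in a small helper. This is only a sketch: the function name image_reference is hypothetical, the registry prefix is left out, and the cpu-/gpu- prefix on commit-hash tags is an assumption inferred from the raster-vision:gpu-latest example.

```python
from typing import Optional

IMAGE_NAME = "raster-vision"


def image_reference(device: str, commit_hash: Optional[str] = None) -> str:
    """Build an image:tag string following the tag scheme described above."""
    if device not in ("cpu", "gpu"):
        raise ValueError("device must be 'cpu' or 'gpu'")
    # Per-merge tags use the first 7 characters of the commit hash;
    # without a hash, fall back to the 'latest' suffix.
    suffix = commit_hash[:7] if commit_hash else "latest"
    return "{}:{}-{}".format(IMAGE_NAME, device, suffix)


print(image_reference("gpu"))                      # raster-vision:gpu-latest
print(image_reference("cpu", "0123456789abcdef"))  # raster-vision:cpu-0123456
```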

For more detailed instructions, see the Setup docs.

Example

The best way to get a feel for what Raster Vision enables is to look at an example of how to configure and run an experiment. Experiments are configured using a fluent builder pattern that makes configuration easy to read, reuse and maintain.
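For readers unfamiliar with the fluent builder pattern itself, here is a minimal, self-contained sketch that does not depend on Raster Vision. The classes ToyScene and ToySceneBuilder are hypothetical; the sketch only shows why chained with_* calls make a partially configured builder easy to reuse.

```python
import copy


class ToyScene:
    """Hypothetical config object produced by the builder."""

    def __init__(self, scene_id, raster_uri):
        self.scene_id = scene_id
        self.raster_uri = raster_uri


class ToySceneBuilder:
    """Each with_* method returns a new builder, so calls can be chained."""

    def __init__(self):
        self._fields = {}

    def _with(self, key, value):
        # Copy first so the original builder stays reusable.
        builder = copy.deepcopy(self)
        builder._fields[key] = value
        return builder

    def with_id(self, scene_id):
        return self._with("scene_id", scene_id)

    def with_uri(self, uri):
        return self._with("raster_uri", uri)

    def build(self):
        missing = {"scene_id", "raster_uri"} - set(self._fields)
        if missing:
            raise ValueError("missing fields: {}".format(sorted(missing)))
        return ToyScene(**self._fields)


# Share a partially configured builder between two scenes.
base = ToySceneBuilder().with_uri("scene.tif")
train = base.with_id("train_scene").build()
val = base.with_id("val_scene").build()
print(train.scene_id, val.scene_id)  # train_scene val_scene
```

Returning a copy from each with_* call is one possible design choice; it keeps base unchanged when train and val are derived from it.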

    # tiny_spacenet.py

    import rastervision as rv


    class TinySpacenetExperimentSet(rv.ExperimentSet):
        def exp_main(self):
            base_uri = ('https://s3.amazonaws.com/azavea-research-public-data/'
                        'raster-vision/examples/spacenet')
            train_image_uri = '{}/RGB-PanSharpen_AOI_2_Vegas_img205.tif'.format(base_uri)
            train_label_uri = '{}/buildings_AOI_2_Vegas_img205.geojson'.format(base_uri)
            val_image_uri = '{}/RGB-PanSharpen_AOI_2_Vegas_img25.tif'.format(base_uri)
            val_label_uri = '{}/buildings_AOI_2_Vegas_img25.geojson'.format(base_uri)
            channel_order = [0, 1, 2]
            background_class_id = 2

            # ------------- TASK -------------

            task = rv.TaskConfig.builder(rv.SEMANTIC_SEGMENTATION) \
                                .with_chip_size(300) \
                                .with_chip_options(chips_per_scene=50) \
                                .with_classes({
                                    'building': (1, 'red')
                                }) \
                                .build()

            # ------------- BACKEND -------------

            backend = rv.BackendConfig.builder(rv.TF_DEEPLAB) \
                                      .with_task(task) \
                                      .with_debug(True) \
                                      .with_batch_size(1) \
                                      .with_num_steps(1) \
                                      .with_model_defaults(rv.MOBILENET_V2) \
                                      .build()

            # ------------- TRAINING -------------

            train_raster_source = rv.RasterSourceConfig.builder(rv.RASTERIO_SOURCE) \
                                                       .with_uri(train_image_uri) \
                                                       .with_channel_order(channel_order) \
                                                       .with_stats_transformer() \
                                                       .build()

            train_label_raster_source = rv.RasterSourceConfig.builder(rv.RASTERIZED_SOURCE) \
                                                             .with_vector_source(train_label_uri) \
                                                             .with_rasterizer_options(background_class_id) \
                                                             .build()

            train_label_source = rv.LabelSourceConfig.builder(rv.SEMANTIC_SEGMENTATION) \
                                                     .with_raster_source(train_label_raster_source) \
                                                     .build()

            train_scene = rv.SceneConfig.builder() \
                                        .with_task(task) \
                                        .with_id('train_scene') \
                                        .with_raster_source(train_raster_source) \
                                        .with_label_source(train_label_source) \
                                        .build()

            # ------------- VALIDATION -------------

            val_raster_source = rv.RasterSourceConfig.builder(rv.RASTERIO_SOURCE) \
                                                     .with_uri(val_image_uri) \
                                                     .with_channel_order(channel_order) \
                                                     .with_stats_transformer() \
                                                     .build()

            val_label_raster_source = rv.RasterSourceConfig.builder(rv.RASTERIZED_SOURCE) \
                                                           .with_vector_source(val_label_uri) \
                                                           .with_rasterizer_options(background_class_id) \
                                                           .build()

            val_label_source = rv.LabelSourceConfig.builder(rv.SEMANTIC_SEGMENTATION) \
                                                   .with_raster_source(val_label_raster_source) \
                                                   .build()

            val_scene = rv.SceneConfig.builder() \
                                      .with_task(task) \
                                      .with_id('val_scene') \
                                      .with_raster_source(val_raster_source) \
                                      .with_label_source(val_label_source) \
                                      .build()

            # ------------- DATASET -------------

            dataset = rv.DatasetConfig.builder() \
                                      .with_train_scene(train_scene) \
                                      .with_validation_scene(val_scene) \
                                      .build()

            # ------------- EXPERIMENT -------------

            experiment = rv.ExperimentConfig.builder() \
                                            .with_id('tiny-spacenet-experiment') \
                                            .with_root_uri('/opt/data/rv') \
                                            .with_task(task) \
                                            .with_backend(backend) \
                                            .with_dataset(dataset) \
                                            .with_stats_analyzer() \
                                            .build()

            return experiment


    if __name__ == '__main__':
        rv.main()

Raster Vision uses a unittest-like method for executing experiments. For instance, if the above was defined in tiny_spacenet.py, with the proper setup you could run the experiment using:

    > rastervision run local -p tiny_spacenet.py

See the Quickstart for a more complete description of running this example.
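The "unittest-like" execution can be pictured with a short sketch. It assumes that experiment methods are found by an exp_ name prefix, much as unittest finds test_ methods; the example only shows a single method named exp_main, so treat the prefix rule, ToyExperimentSet, and collect_experiments as illustrative assumptions rather than Raster Vision's actual mechanism.

```python
import inspect


class ToyExperimentSet:
    """Stand-in for an experiment set; not the real rv.ExperimentSet."""

    def exp_main(self):
        return "tiny-spacenet-experiment"

    def exp_other(self):
        return "another-experiment"

    def helper(self):
        return "not an experiment"


def collect_experiments(experiment_set, prefix="exp_"):
    """Call every bound method whose name starts with the prefix."""
    results = {}
    members = inspect.getmembers(experiment_set, predicate=inspect.ismethod)
    for name, method in members:
        if name.startswith(prefix):
            results[name] = method()
    return results


experiments = collect_experiments(ToyExperimentSet())
print(sorted(experiments))  # ['exp_main', 'exp_other']
```

The helper method is skipped because its name lacks the prefix, which is how a runner can tell experiment definitions apart from ordinary utility methods.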

Resources

- Raster Vision Documentation

- raster-vision-examples: A repository of examples of running RV on open datasets

- raster-vision-aws: Deployment code for setting up AWS Batch with GPUs

- raster-vision-qgis: A QGIS plugin for visualizing the results of experiments on a map

Contact and Support

You can find more information and talk to developers (let us know what you're working on!) at:

Contributing

We are happy to take contributions! It is best to get in touch with the maintainers about larger features or design changes before starting the work, as it will make the process of accepting changes smoother.

Everyone who contributes code to Raster Vision will be asked to sign the Azavea CLA, which is based on the Apache CLA.

- Download a copy of the Raster Vision Individual Contributor License Agreement or the Raster Vision Corporate Contributor License Agreement.

- Print out the CLAs and sign them, or use PDF software that allows placement of a signature image.

- Send the CLAs to Azavea by one of:

  - Scanning and emailing the document to cla@azavea.com

  - Faxing a copy to +1-215-925-2600

  - Mailing a hardcopy to: Azavea, 990 Spring Garden Street, 5th Floor, Philadelphia, PA 19107 USA