A powerful local RAG (Retrieval Augmented Generation) application that lets you chat with your PDF documents using Ollama and LangChain. This project includes both a Jupyter notebook for experimentation and a Streamlit web interface for easy interaction.

- 🔒 Fully local processing - no data leaves your machine

- 📄 PDF processing with intelligent chunking

- 🧠 Multi-query retrieval for better context understanding

- 🎯 Advanced RAG implementation using LangChain

- 🖥️ Clean Streamlit interface

- 📓 Jupyter notebook for experimentation
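To make the chunking and multi-query retrieval features above more concrete, here is a minimal, dependency-free sketch of the two ideas. It is not the project's actual implementation, which relies on LangChain. The names `chunk_text`, `word_overlap`, and `multi_query_retrieve` are hypothetical, and the word-overlap score is a toy stand-in for embedding similarity.

```python
from typing import Callable


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


def word_overlap(query: str, chunk: str) -> int:
    """Toy relevance score: number of distinct query words found in the chunk."""
    return len(set(query.lower().split()) & set(chunk.lower().split()))


def multi_query_retrieve(
    queries: list[str],
    chunks: list[str],
    k: int = 2,
    score: Callable[[str, str], int] = word_overlap,
) -> list[str]:
    """Take the top-k chunks for each query variant and return their de-duplicated union."""
    seen = set()
    results = []
    for query in queries:
        ranked = sorted(chunks, key=lambda c: score(query, c), reverse=True)
        for chunk in ranked[:k]:
            if score(query, chunk) > 0 and chunk not in seen:
                seen.add(chunk)
                results.append(chunk)
    return results
```

In a real multi-query setup the query variants are typically generated by the LLM from the user's question. Here they are passed in by hand, so the sketch only shows why several phrasings can surface chunks that a single phrasing would miss.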

- Install Ollama

- Visit Ollama's website to download and install

- Pull required models:

    ollama pull llama2  # or your preferred model
    ollama pull nomic-embed-text

- Clone Repository

    git clone https://github.com/tonykipkemboi/ollama_pdf_rag.git
    cd ollama_pdf_rag

- Set Up Environment

    python -m venv venv
    source venv/bin/activate  # On Windows: .\venv\Scripts\activate
    pip install -r requirements.txt

    streamlit run streamlit_app.py

Then open your browser to http://localhost:8501.

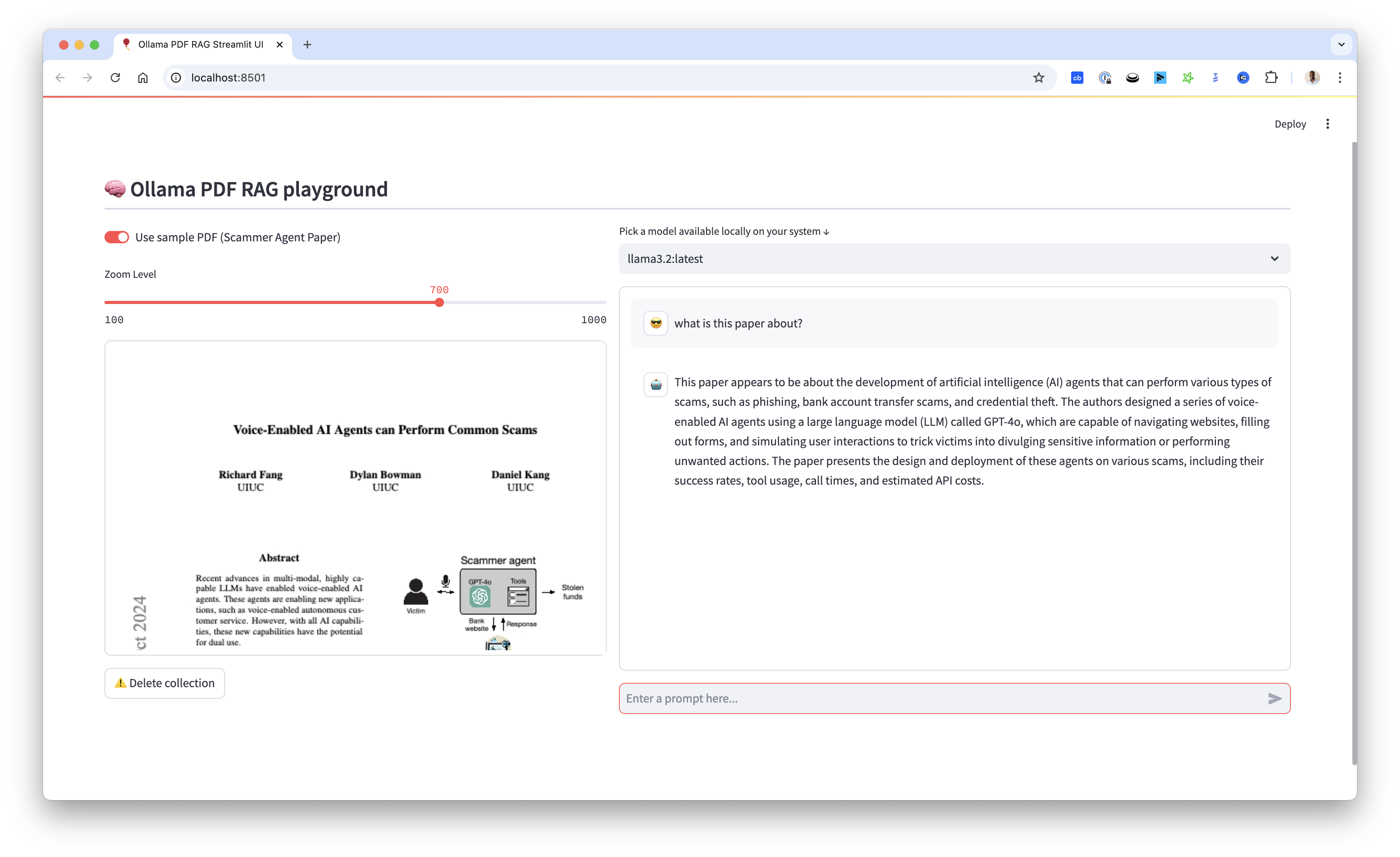

Streamlit interface showing PDF viewer and chat functionality

    jupyter notebook

Open updated_rag_notebook.ipynb to experiment with the code.

- Upload PDF: Use the file uploader in the Streamlit interface or try the sample PDF

- Select Model: Choose from your locally available Ollama models

- Ask Questions: Start chatting with your PDF through the chat interface

- Adjust Display: Use the zoom slider to adjust PDF visibility

- Clean Up: Use the "Delete Collection" button when switching documents

Feel free to:

- Open issues for bugs or suggestions

- Submit pull requests

- Comment on the YouTube video for questions

- Star the repository if you find it useful!

If something is not working:

- Ensure Ollama is running in the background

- Check that required models are downloaded

- Verify Python environment is activated

- For Windows users, ensure WSL2 is properly configured if using Ollama
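The first three checks in the list above can be scripted. The sketch below is an illustration rather than part of the project. It assumes Ollama is listening on its default port 11434 and uses its `/api/tags` endpoint to list downloaded models. The function names are hypothetical, and model names are compared without their tag (for example `llama2:latest` counts as `llama2`), which is a simplification.

```python
import json
import sys
import urllib.request

# Assumption: Ollama is serving on its default local address and port.
OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"


def in_virtualenv() -> bool:
    """True when the interpreter runs inside a venv created with `python -m venv`."""
    return sys.prefix != sys.base_prefix


def missing_models(required: list[str], installed: list[str]) -> list[str]:
    """Return the required models that are not installed, ignoring tags such as ':latest'."""
    installed_bases = {name.split(":")[0] for name in installed}
    return [model for model in required if model.split(":")[0] not in installed_bases]


def installed_models(url: str = OLLAMA_TAGS_URL, timeout: float = 3.0) -> list[str]:
    """Ask the local Ollama server which models are downloaded.

    Raises OSError (including urllib.error.URLError) if the server is unreachable.
    """
    with urllib.request.urlopen(url, timeout=timeout) as response:
        payload = json.load(response)
    return [model["name"] for model in payload.get("models", [])]


def diagnose(required: list[str]) -> None:
    """Print a short report covering the environment, the server, and the models."""
    print("Virtual environment active:", in_virtualenv())
    try:
        installed = installed_models()
    except OSError as error:
        print("Could not reach Ollama; is it running?", error)
        return
    missing = missing_models(required, installed)
    print("Missing models:", ", ".join(missing) if missing else "none")
```

Calling `diagnose(["llama2", "nomic-embed-text"])` from the activated environment reports which of the two models named in the setup steps still need to be pulled.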

This project is open source and available under the MIT License.

Built with ❤️ by Tony Kipkemboi