By Deleja-Hotko Julian and Topor Karol

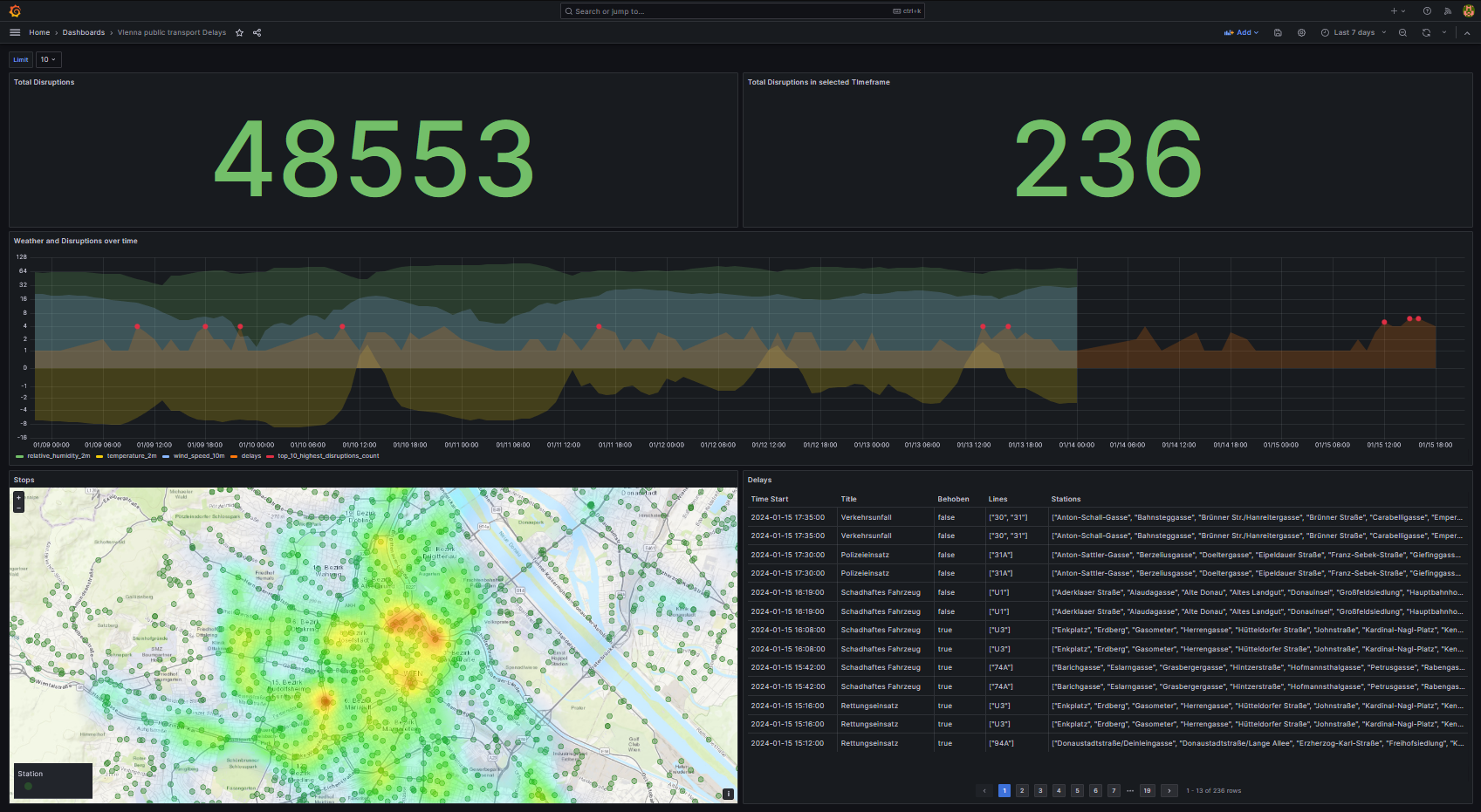

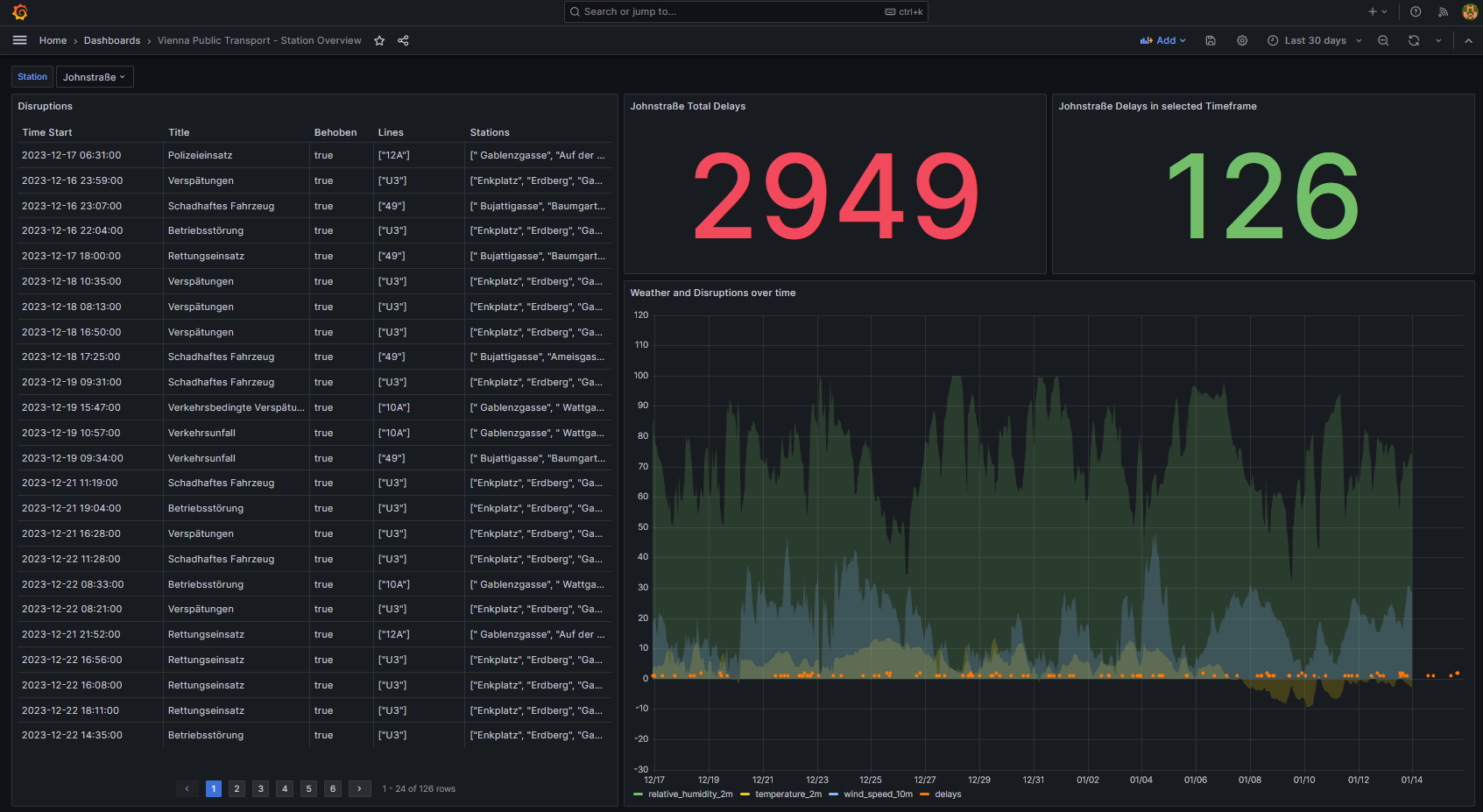

This project explores the relationship between weather patterns and public transport disruptions in Vienna, utilizing Grafana for data visualization.

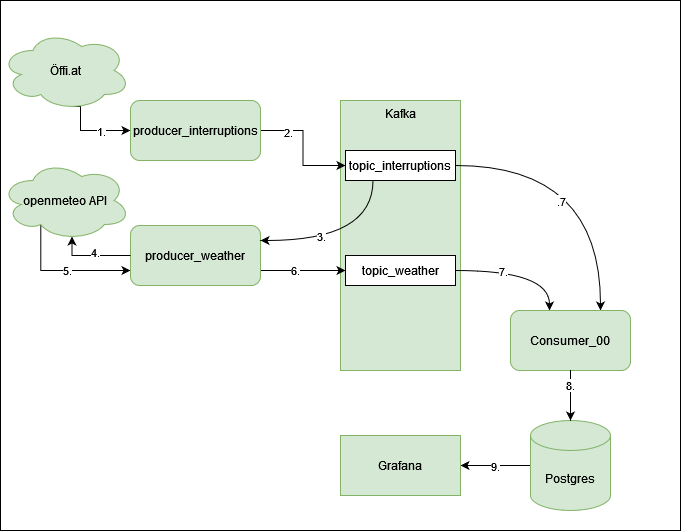

The architecture for data processing and visualization in this project includes the following components:

- `producer_delays`: A custom web scraper implemented in Python using BeautifulSoup to collect data on public transport delays from the öffi.at website.
- `producer_weather`: A custom API scraper, also in Python, that retrieves weather data from the openmeteo API.
- Kafka: Functions as the central messaging bus that transports events, which in this context are the delays and weather data.
- `consumer_00`: A custom Python consumer that processes the data from Kafka.
- Postgres: The database utilized for persisting the collected data.
- Grafana: The tool used for visualizing the data that has been stored in the Postgres database.

Each of these components plays a critical role in the pipeline from data collection to visualization.
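Kafka transports message values as raw bytes, so the producers and the consumer need to agree on an encoding. The following is a minimal sketch of one common approach (JSON over UTF-8), not the project's actual schema: the `DelayEvent` class and its field names are hypothetical and chosen only for illustration.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class DelayEvent:
    # Hypothetical fields; the real event layout is not described in this README.
    line: str
    description: str
    timestamp: str  # ISO 8601 string, e.g. "2024-01-15T08:42:00"


def to_kafka_value(event: DelayEvent) -> bytes:
    """Serialize an event to UTF-8 JSON bytes, suitable as a Kafka message value."""
    # ensure_ascii=False keeps umlauts (e.g. in station names) readable in the payload.
    return json.dumps(asdict(event), ensure_ascii=False).encode("utf-8")


def from_kafka_value(raw: bytes) -> dict:
    """Decode a Kafka message value back into a plain dictionary."""
    return json.loads(raw.decode("utf-8"))
```

A producer would pass the result of `to_kafka_value` as the message value, and the consumer would call `from_kafka_value` on each received message before writing it to Postgres.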

This pipeline details the process for analyzing how weather impacts public transport disruptions in Vienna:

- Producer for Delays (`producer_delays`):
  - Starts the data collection by scraping the öffi.at website for public transport delay details.
  - Filters the acquired HTML content for relevant information and sends the data to the Kafka system.
- Producer for Weather (`producer_weather`):
  - Monitors the `topic_delays` in Kafka for new public transport interruption events.
  - Upon detecting new events, it extracts the date and time details.
  - Uses these details to retrieve corresponding weather conditions from the openmeteo API.
  - Parses the received weather data and forwards it to Kafka.
- Consumer (`consumer_00`):
  - Connects to Kafka topics, ingests the interruption and weather data streams, and processes them for subsequent storage.
- PostgreSQL Database (`Postgres`):
  - Receives the processed data and serves as the permanent storage solution, ensuring data integrity and accessibility.
- Grafana:
  - Utilizes the stored data within Postgres to create visualizations that elucidate the relationship between weather patterns and transport delays.
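The weather producer's lookup step (taking the date and time of a delay event and fetching matching weather data) could look roughly like the sketch below. It is an illustration under assumptions, not the project's code: it assumes the public Open-Meteo forecast endpoint, fixed coordinates for central Vienna, two hourly variables, and a hypothetical `timestamp` field on the delay event. It only builds the request URL and picks the matching hour from a response; the HTTP call itself is left out.

```python
from datetime import datetime
from urllib.parse import urlencode

# Assumption: the public Open-Meteo forecast endpoint; the project may use another one.
OPEN_METEO_URL = "https://api.open-meteo.com/v1/forecast"
# Approximate coordinates of central Vienna.
VIENNA = {"latitude": 48.2082, "longitude": 16.3738}


def weather_request_url(delay_event: dict) -> str:
    """Build a request URL for the hourly weather on the day of the delay."""
    # Hypothetical field name; the real event schema is not shown in this README.
    occurred_at = datetime.fromisoformat(delay_event["timestamp"])
    day = occurred_at.date().isoformat()
    params = {
        **VIENNA,
        "hourly": "temperature_2m,precipitation",
        "start_date": day,
        "end_date": day,
        "timezone": "Europe/Vienna",
    }
    return OPEN_METEO_URL + "?" + urlencode(params)


def weather_at(response: dict, occurred_at: datetime) -> dict:
    """Pick the hourly reading that covers the hour in which the delay occurred."""
    hourly = response["hourly"]
    # Open-Meteo reports hourly timestamps as "YYYY-MM-DDTHH:MM".
    hour = occurred_at.replace(minute=0, second=0, microsecond=0)
    key = hour.strftime("%Y-%m-%dT%H:%M")
    i = hourly["time"].index(key)
    return {
        "time": key,
        "temperature_2m": hourly["temperature_2m"][i],
        "precipitation": hourly["precipitation"][i],
    }
```

The dictionary returned by `weather_at` is the kind of record that would then be serialized and forwarded to Kafka for the consumer to store.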

The entire pipeline is designed to be both robust and flexible, so that data is processed consistently and can be analyzed comprehensively.

Ensure you have the following prerequisites installed:

- docker

- docker-compose

docker-compose -f docker/docker-compose.yaml up -d

The system will automatically start up and run all collection and processing scripts on creation; no further interaction should be necessary. Note: To avoid rate limiting, öffi.at data is scraped at a fairly slow pace, meaning the database and visualization will be almost empty if inspected immediately after creation.
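For illustration, a slow scraping pace like the one described in the note can be enforced with a small throttling helper. This is a sketch of one possible approach, not the project's implementation: the `throttled` function, its parameters, and the interval value are all hypothetical.

```python
import time
from typing import Callable, Iterable, Iterator


def throttled(
    items: Iterable[str],
    min_interval: float,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> Iterator[str]:
    """Yield items no faster than one per `min_interval` seconds."""
    last = None
    for item in items:
        if last is not None:
            # Sleep only for the part of the interval that has not elapsed yet.
            wait = min_interval - (clock() - last)
            if wait > 0:
                sleep(wait)
        last = clock()
        yield item
```

A scraper loop could then iterate over `throttled(urls, min_interval=30.0)` and fetch one page per iteration; the `sleep` and `clock` parameters exist only so the pacing can be tested without actually waiting.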