The Custom Prompt Generator is a Python application that leverages Large Language Models (LLMs) and the LiteLLM library to dynamically generate personas, fetch knowledge sources, resolve conflicts, and produce tailored prompts. This application is designed to assist in various software development tasks by providing context-aware prompts based on user input and predefined personas.

Persona Driven Prompt Generator Agent: use the Hugging Face Space chatbot agent to explore how this repo works.

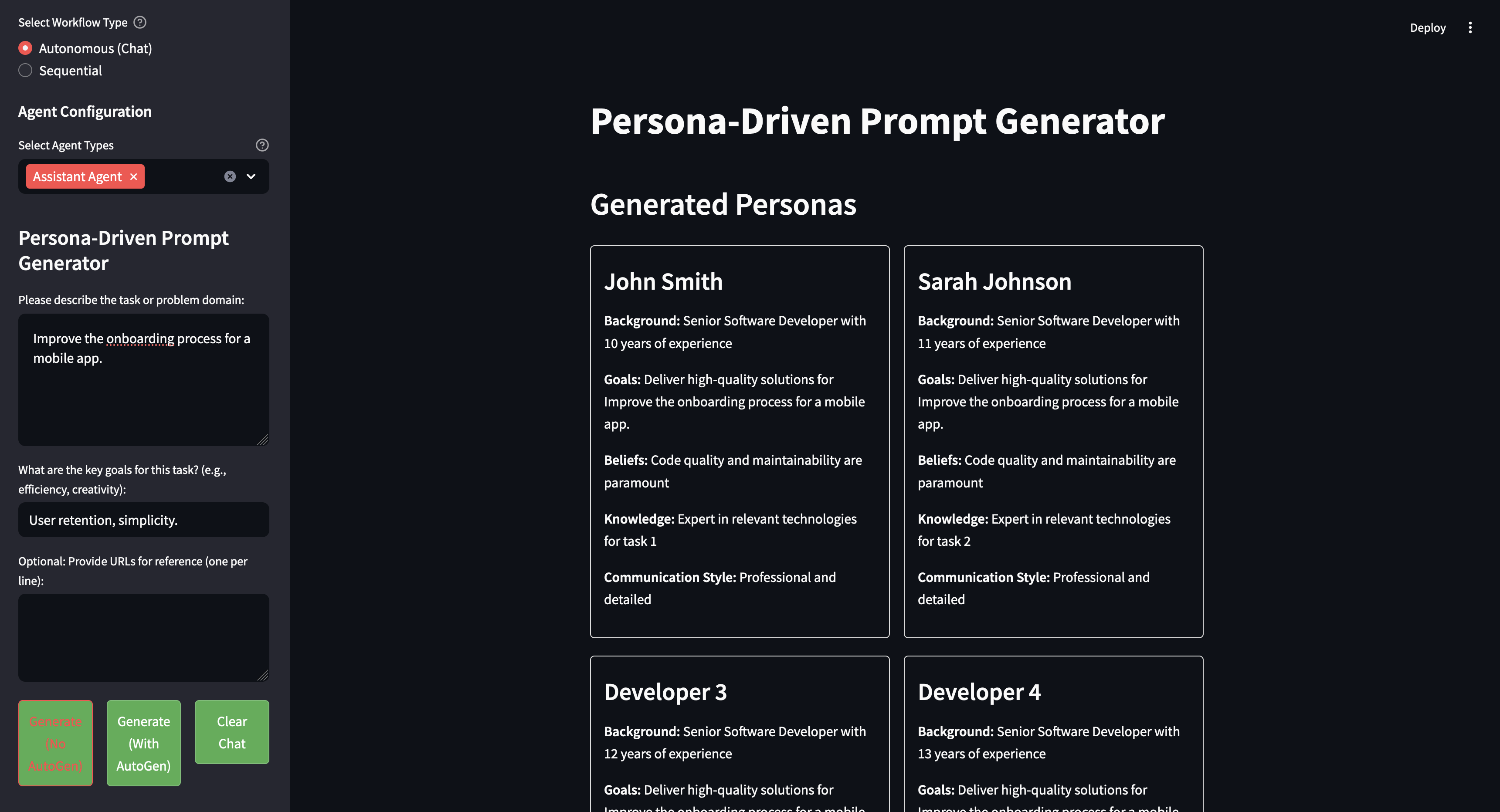

- Dynamic Persona Generation: Create realistic personas with human-like names, backgrounds, and expertise.

- Flexible Persona Count: Generate 1-10 personas based on your needs.

- Knowledge Source Fetching: Fetch relevant knowledge sources using the LiteLLM library.

- Conflict Resolution: Resolve conflicts among personas to ensure coherent and fair task execution.

- Prompt Generation: Generate tailored prompts based on personas, knowledge sources, and conflict resolutions.

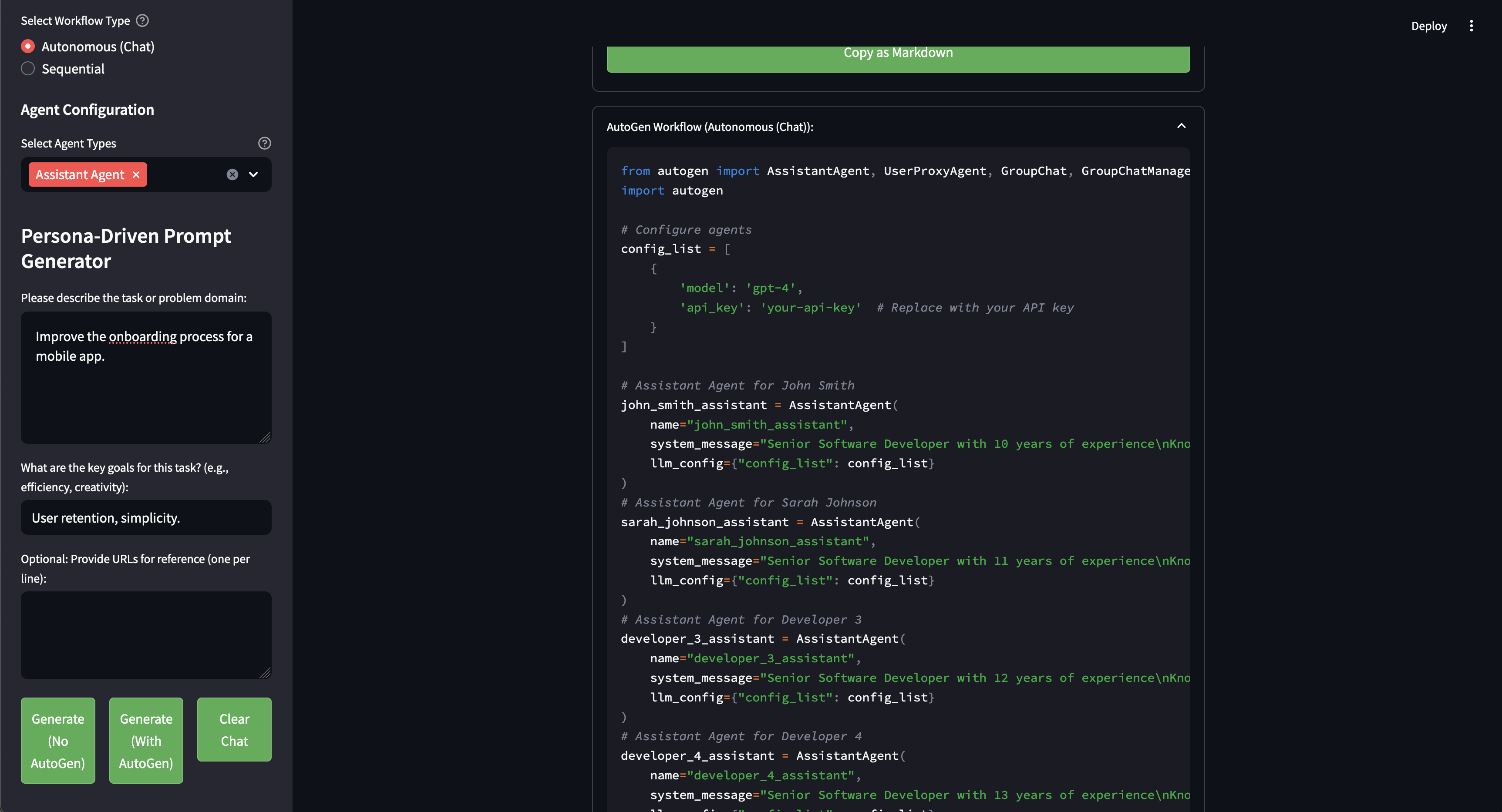

- AutoGen Integration:
  - Choose between Autonomous (Chat) and Sequential workflows
  - Configure different agent types (User Proxy, Assistant, GroupChat)
  - Generate executable Python code for AutoGen workflows

- Export Options:
  - Export prompts in Markdown format
  - Copy generated content directly from the UI
  - Download AutoGen workflow as Python file

This application uses LiteLLM to support multiple LLM providers. You can choose from:

- OpenAI (GPT-3.5, GPT-4)
  - Best for general purpose, high-quality responses
  - Requires OpenAI API key

- Groq (Mixtral, Llama)
  - Best for fast inference, competitive pricing
  - Requires Groq API key

- DeepSeek
  - Best for specialized tasks, research applications
  - Requires DeepSeek API key

- Hugging Face
  - Best for custom models, open-source alternatives
  - Requires Hugging Face API key

- Ollama (Local Deployment)
  - Best for self-hosted, privacy-focused applications
  - Can run locally without API key
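
LiteLLM picks the backend from the model string, usually written as `provider/model` (for example `ollama/llama2`). This README does not say how the app combines its provider and model settings, so the helper below is a hypothetical sketch that only builds such a string; the provider keys are assumed lowercase versions of the list above.

```python
# Hypothetical helper (not part of this repo): build the "provider/model"
# string that LiteLLM uses to route a request to a backend.
SUPPORTED_PROVIDERS = {"openai", "groq", "deepseek", "huggingface", "ollama"}


def litellm_model_name(provider: str, model: str) -> str:
    """Return a LiteLLM-style model name such as "ollama/llama2"."""
    provider = provider.strip().lower()
    if provider not in SUPPORTED_PROVIDERS:
        raise ValueError(f"unsupported provider: {provider!r}")
    # Leave names that already carry the provider prefix untouched.
    if model.startswith(provider + "/"):
        return model
    return f"{provider}/{model}"


if __name__ == "__main__":
    print(litellm_model_name("OpenAI", "gpt-3.5-turbo"))  # openai/gpt-3.5-turbo
    print(litellm_model_name("ollama", "llama2"))         # ollama/llama2
```

The resulting string is what you would pass as `model=` to `litellm.completion(...)`, together with a `messages` list.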

- Copy the environment template:

      cp env-example .env

- Edit `.env` and configure your chosen provider:

      # Example for OpenAI
      LITELLM_MODEL=gpt-3.5-turbo
      LITELLM_PROVIDER=openai
      OPENAI_API_KEY=your-openai-api-key

- Run with the quickstart script:

      chmod +x quickstart.sh
      ./quickstart.sh
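
Before running the quickstart script, it can help to confirm that `.env` names a model and provider and that the matching key is set. The sketch below is hypothetical: it parses only simple `KEY=VALUE` lines (no `export` or multi-line values), and apart from `OPENAI_API_KEY`, the key names are assumptions based on LiteLLM's usual environment variables, so compare them with `env-example`.

```python
from pathlib import Path
from typing import Dict, List

# Key each provider needs. OPENAI_API_KEY comes from the example above; the
# others are assumptions based on LiteLLM's usual variable names.
REQUIRED_KEYS = {
    "openai": "OPENAI_API_KEY",
    "groq": "GROQ_API_KEY",
    "deepseek": "DEEPSEEK_API_KEY",
    "huggingface": "HUGGINGFACE_API_KEY",
    "ollama": None,  # local deployment, no key needed
}


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    env = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip().strip("\"'")
    return env


def check_provider_config(env: Dict[str, str]) -> List[str]:
    """Return a list of configuration problems (empty if none were found)."""
    problems = []
    if not env.get("LITELLM_MODEL"):
        problems.append("LITELLM_MODEL is not set")
    provider = env.get("LITELLM_PROVIDER", "").lower()
    if provider not in REQUIRED_KEYS:
        problems.append(f"unknown LITELLM_PROVIDER: {provider!r}")
        return problems
    key = REQUIRED_KEYS[provider]
    if key and not env.get(key):
        problems.append(f"{key} is required for provider {provider!r}")
    return problems


if __name__ == "__main__":
    env_file = Path(".env")
    if env_file.exists():
        issues = check_provider_config(parse_env(env_file.read_text()))
        print("\n".join(issues) if issues else ".env looks complete")
```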

This application uses PostgreSQL with the pgvector extension for efficient vector storage and similarity search. The database is used to store:

- Generated personas

- Task history

- Vector embeddings

- Emotional tones data
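
pgvector ranks stored embeddings with distance operators such as `<=>` (cosine distance) and `<->` (Euclidean distance). Which operator the app uses is not stated here; assuming cosine distance, the plain-Python sketch below computes the same ordering that a query like `ORDER BY embedding <=> query_vector LIMIT k` would return. The function names are illustrative only.

```python
import math
from typing import List, Sequence, Tuple


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity, the quantity pgvector's <=> operator returns."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm_a * norm_b)


def nearest(query: Sequence[float],
            rows: List[Tuple[str, Sequence[float]]],
            k: int = 3) -> List[Tuple[str, float]]:
    """Return the k (row_id, distance) pairs closest to query, nearest first."""
    scored = [(row_id, cosine_distance(query, emb)) for row_id, emb in rows]
    scored.sort(key=lambda pair: pair[1])
    return scored[:k]


if __name__ == "__main__":
    rows = [("task-1", [1.0, 0.0]), ("task-2", [0.0, 1.0]), ("task-3", [1.0, 1.0])]
    # task-1 ranks first, then task-3
    print(nearest([1.0, 0.1], rows, k=2))
```

In the database the index does this work, so the sketch is only meant to show what "similarity search" computes.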

- Run the database setup script:

      chmod +x setup_database.sh
      sudo ./setup_database.sh

  This script will:

  - Install PostgreSQL 17
  - Install pgvector extension
  - Create database and user
  - Configure authentication
  - Set up required tables
  - Run connection tests

- Default database configuration:

  - Database Name: persona_db
  - User: persona_user
  - Port: 5432

  You can modify these in `setup_database.sh` before running it.
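
Application code usually needs these values as a connection string. The sketch below assembles a libpq-style URI from the defaults above; the `DB_*` environment variable names and the `localhost` host are hypothetical, so match them to whatever the app's configuration actually reads.

```python
import os
from typing import Mapping, Optional
from urllib.parse import quote

# Defaults documented for setup_database.sh; the host is an assumption.
DEFAULTS = {"host": "localhost", "port": "5432", "dbname": "persona_db", "user": "persona_user"}


def database_url(env: Optional[Mapping[str, str]] = None) -> str:
    """Build a postgresql:// URI; hypothetical DB_* variables override defaults."""
    env = os.environ if env is None else env
    host = env.get("DB_HOST", DEFAULTS["host"])
    port = env.get("DB_PORT", DEFAULTS["port"])
    dbname = env.get("DB_NAME", DEFAULTS["dbname"])
    user = env.get("DB_USER", DEFAULTS["user"])
    password = env.get("DB_PASSWORD", "")
    # Percent-encode credentials so characters like "@" or ":" survive in the URI.
    auth = quote(user, safe="")
    if password:
        auth += ":" + quote(password, safe="")
    return f"postgresql://{auth}@{host}:{port}/{dbname}"
```

libpq-based drivers accept such a URI directly, for example `psycopg2.connect(database_url())`.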

If you prefer manual setup:

- Install PostgreSQL 17:

      # Ubuntu
      sudo apt install postgresql-17
      # RHEL/Amazon Linux
      sudo dnf install postgresql17-server

- Install pgvector:

      # Ubuntu
      sudo apt install postgresql-17-pgvector
      # RHEL/Amazon Linux
      sudo yum install pgvector_17

- Configure the database by opening `psql` as the `postgres` user:

      sudo -u postgres psql

  Then run the following at the `psql` prompt:

      CREATE DATABASE persona_db;
      CREATE USER persona_user WITH PASSWORD 'your_password';
      GRANT ALL PRIVILEGES ON DATABASE persona_db TO persona_user;
      \c persona_db
      -- PostgreSQL 15+ no longer lets every user create objects in the public schema
      GRANT ALL ON SCHEMA public TO persona_user;
      CREATE EXTENSION vector;

The application uses several tables:

- `task_memory`: Stores task history and vector embeddings
- `emotional_tones`: Stores predefined emotional tones for personas
- `personas`: Stores generated persona information
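
pgvector accepts vectors as text literals of the form `[1,2,3]`, so a `task_memory` row can be written with an ordinary parameterized `INSERT`. The column names below (`task`, `embedding`) are hypothetical, since the real table definitions live in the setup script; the `%s` placeholders follow the psycopg convention.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


def to_pgvector(values: Sequence[float]) -> str:
    """Format numbers as a pgvector text literal, e.g. [1.0,2.5]."""
    return "[" + ",".join(repr(float(v)) for v in values) + "]"


@dataclass
class TaskMemoryRow:
    task: str               # hypothetical column name
    embedding: List[float]  # hypothetical vector column

    def insert_statement(self) -> Tuple[str, Tuple[str, str]]:
        """Return SQL and parameters for a psycopg-style cursor.execute call."""
        sql = "INSERT INTO task_memory (task, embedding) VALUES (%s, %s::vector)"
        return sql, (self.task, to_pgvector(self.embedding))
```

With a psycopg cursor this would be used as `cur.execute(*row.insert_statement())`.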

- Connection Issues: Check PostgreSQL service status

      sudo systemctl status postgresql-17

- Permission Errors: Verify user privileges

      sudo -u postgres psql -c "\du"

- pgvector Issues: Confirm extension installation

      sudo -u postgres psql -d persona_db -c "\dx"

- Create a Python virtual environment:

      python -m venv venv
      source venv/bin/activate  # Linux/Mac
      # or
      .\venv\Scripts\activate   # Windows

- Install dependencies:

      pip install -r requirements.txt

- Open the application in your browser

- In the sidebar:
  - Enter the task description and goals
  - Select the number of personas (1-10)
  - Configure AutoGen settings (if using AutoGen)

- Choose your generation method:
  - "Generate (No AutoGen)" for standard prompts
  - "Generate (With AutoGen)" for AutoGen workflows

- View and interact with:
  - Generated personas with realistic details
  - Knowledge sources and conflict resolutions
  - Markdown-formatted output
  - AutoGen workflow code

- Export or copy your generated content as needed
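
Behind these controls, generation runs from personas to knowledge sources, conflict resolution, and finally a Markdown prompt. The sketch below mirrors that order with hypothetical stand-in functions in place of the LLM calls, so it shows only the data flow and the 1-10 persona limit, not the app's real prompts or the `persona_management.py` API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Persona:
    name: str
    expertise: str


def generate_personas(task: str, count: int) -> List[Persona]:
    """Stand-in for the LLM call; enforces the UI's 1-10 persona range."""
    if not 1 <= count <= 10:
        raise ValueError("persona count must be between 1 and 10")
    return [Persona(f"Persona {i + 1}", f"perspective {i + 1} on {task}") for i in range(count)]


def render_markdown(task: str, goals: str, personas: List[Persona],
                    sources: List[str], resolution: str) -> str:
    """Assemble the exported prompt as Markdown."""
    lines = [f"# Task: {task}", "", f"**Goals:** {goals}", "", "## Personas"]
    lines += [f"- **{p.name}**: {p.expertise}" for p in personas]
    lines += ["", "## Knowledge Sources"]
    lines += [f"- {source}" for source in sources]
    lines += ["", "## Conflict Resolution", resolution]
    return "\n".join(lines)


if __name__ == "__main__":
    personas = generate_personas("Design a REST API", 2)
    print(render_markdown(
        task="Design a REST API",
        goals="Consistent, well-documented endpoints",
        personas=personas,
        sources=["Project style guide"],  # would come from the knowledge-source step
        resolution="Personas agreed to follow the existing style guide.",
    ))
```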

The AutoGen integration offers two workflow types:

- Autonomous (Chat)
  - Creates a chat-based interaction between agents
  - Suitable for collaborative problem-solving
  - Includes initiator and receiver agents

- Sequential
  - Creates a step-by-step workflow
  - Agents execute in a predefined order
  - Better suited to structured tasks
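
The difference between the two workflow types comes down to who speaks next. The toy sketch below uses plain Python callables instead of AutoGen agents, so none of its names are AutoGen APIs: a sequential run passes each agent's output to the next agent in a fixed order, while a chat alternates between an initiator and a receiver until a turn limit or a stop word, assumed here to be `TERMINATE` as in many AutoGen examples.

```python
from typing import Callable, List, Tuple

# A toy "agent" is a name plus a function from incoming message to reply.
Agent = Tuple[str, Callable[[str], str]]


def run_sequential(agents: List[Agent], task: str) -> List[str]:
    """Each agent runs once, in order, on the previous agent's output."""
    transcript = []
    message = task
    for name, act in agents:
        message = act(message)
        transcript.append(f"{name}: {message}")
    return transcript


def run_chat(initiator: Agent, receiver: Agent, task: str,
             max_turns: int = 6, stop_word: str = "TERMINATE") -> List[str]:
    """Initiator opens with the task; the two agents then alternate replies."""
    transcript = [f"{initiator[0]}: {task}"]
    message = task
    speaker, listener = receiver, initiator
    for _ in range(max_turns):
        name, act = speaker
        message = act(message)
        transcript.append(f"{name}: {message}")
        if stop_word in message:
            break
        speaker, listener = listener, speaker
    return transcript


if __name__ == "__main__":
    planner = ("planner", lambda m: f"plan for {m}")
    coder = ("coder", lambda m: f"code for {m}")
    print(run_sequential([planner, coder], "parse logs"))
    # ['planner: plan for parse logs', 'coder: code for plan for parse logs']
```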

Supported agent types:

- User Proxy Agent: Represents the user and executes code

- Assistant Agent: Plans and generates code to solve tasks

- GroupChat: Manages interactions between multiple agents
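
The app generates executable Python code for these agents; its own generator is not reproduced here. As a hedged sketch, the hypothetical function below emits a GroupChat script in the style of the `pyautogen` 0.2 API (`UserProxyAgent`, `AssistantAgent`, `GroupChat`, `GroupChatManager`, `initiate_chat`). Constructor arguments have changed across AutoGen releases, so check the generated code against your installed version.

```python
import keyword
from typing import List

# Variable names the generated script already uses.
RESERVED = {"autogen", "llm_config", "user_proxy", "groupchat", "manager"}


def generate_autogen_script(task: str, assistant_names: List[str],
                            model: str = "gpt-3.5-turbo", max_round: int = 10) -> str:
    """Return Python source for a user proxy plus assistants in one GroupChat."""
    for name in assistant_names:
        # Agent names double as variable names in the generated script.
        if not name.isidentifier() or keyword.iskeyword(name) or name in RESERVED:
            raise ValueError(f"invalid agent name: {name!r}")
    lines = [
        "import autogen",
        "",
        f"llm_config = {{'config_list': [{{'model': {model!r}}}]}}",
        "",
        "user_proxy = autogen.UserProxyAgent(",
        "    name='user_proxy',",
        "    human_input_mode='NEVER',",
        "    code_execution_config={'work_dir': 'coding', 'use_docker': False},",
        ")",
    ]
    for name in assistant_names:
        lines.append(f"{name} = autogen.AssistantAgent(name={name!r}, llm_config=llm_config)")
    agents = ", ".join(["user_proxy", *assistant_names])
    lines += [
        "",
        f"groupchat = autogen.GroupChat(agents=[{agents}], messages=[], max_round={max_round})",
        "manager = autogen.GroupChatManager(groupchat=groupchat, llm_config=llm_config)",
        f"user_proxy.initiate_chat(manager, message={task!r})",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(generate_autogen_script("Write a CSV summary tool", ["planner", "coder"]))
```

Writing the returned string to a `.py` file corresponds to the "Download AutoGen workflow as Python file" export option.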

The main source files are:

- `main.py`: Main application with UI and core logic
- `llm_interaction.py`: LLM integration and AutoGen workflow generation
- `persona_management.py`: Persona generation and management
- `database.py`: Database interactions
- `search.py`: Knowledge source fetching
- `utils.py`: Utility functions

Contributions are welcome! Please read our contributing guidelines and submit pull requests for any enhancements.

This project is licensed under the MIT License - see the LICENSE file for details.