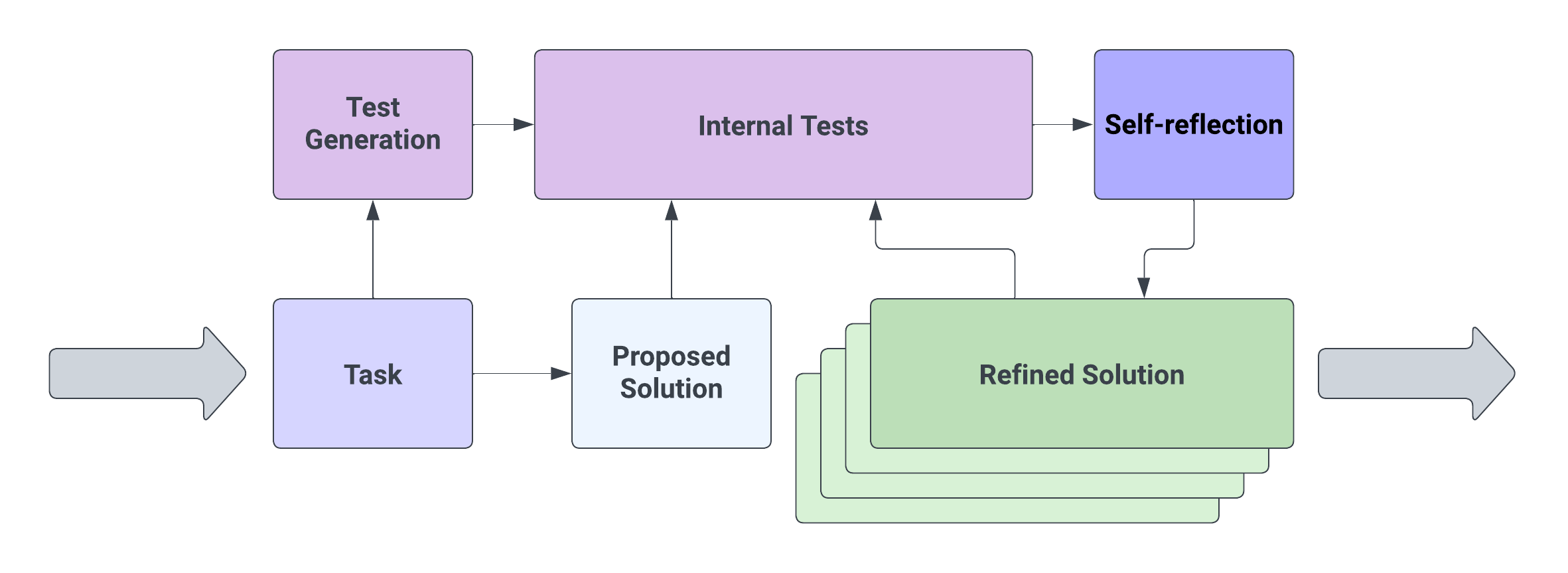

This is a spin-off project inspired by the paper: Reflexion: an autonomous agent with dynamic memory and self-reflection. Noah Shinn, Beck Labash, Ashwin Gopinath. Preprint, 2023

Read more about this project in this post

Check out an interesting type-inference implementation here: OpenTau

Check out the code for the original paper here

Check out a new superhuman programming agent gym here

This repo contains scratch code that was used for testing. The real version of Reflexion for benchmark-agnostic, language-agnostic code generation will be released after the first version of the upcoming paper to respect the privacy of the work (and collaboration) in progress.

If you have any questions, please contact noahshinn024@gmail.com

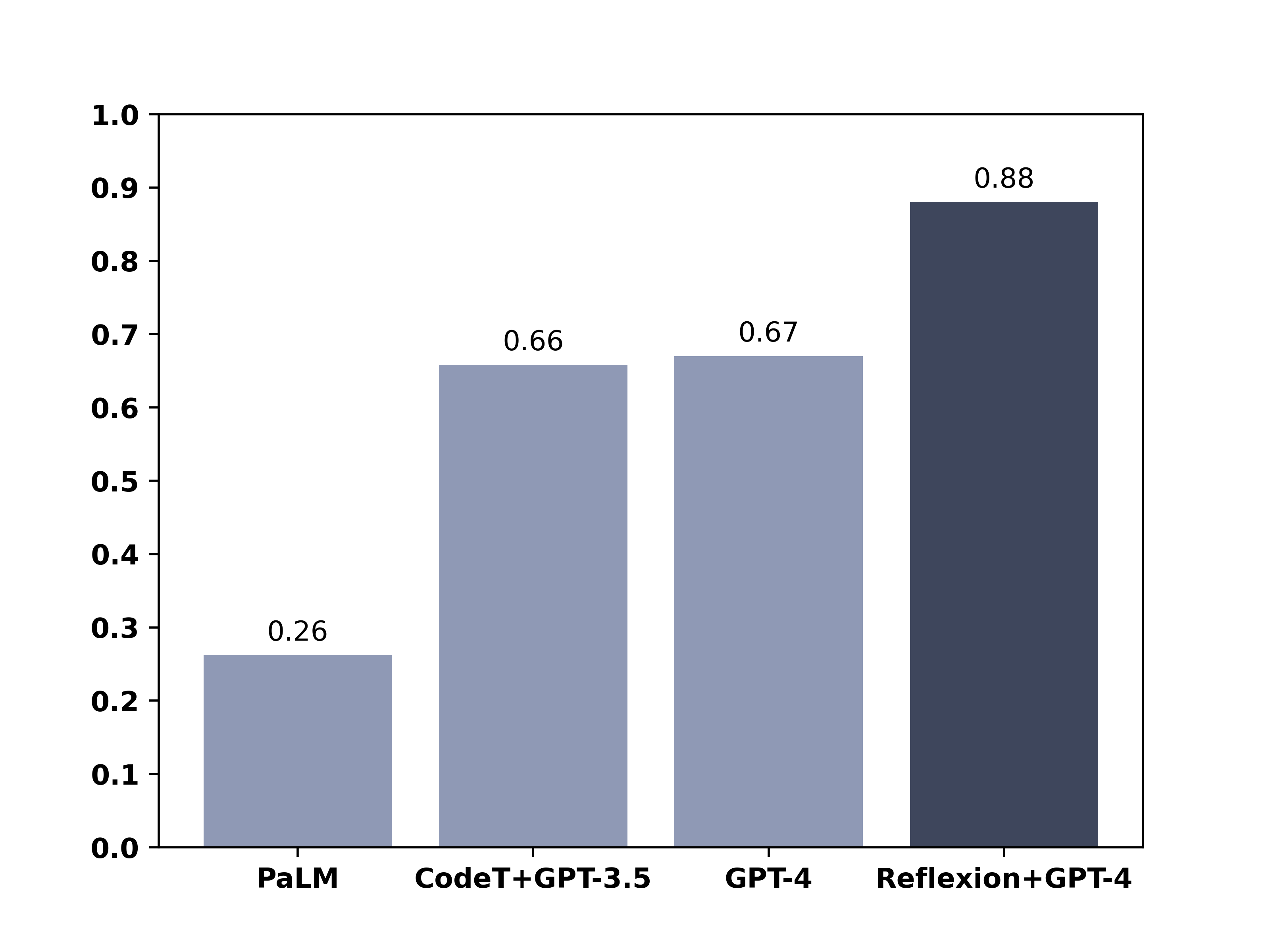

Because of limited access to GPT-4 and significant API charges, it may not be feasible for individual developers to rerun these experiments. In response to recent requests, both trials have been rerun once more and the logs are dumped in ./root, with a script here to validate the solutions against the unit tests provided by HumanEval.

To run the validation on your log files or the provided log files:

`python ./validate_py_results.py <path to jsonlines file>`

Please do not run the Reflexion agent in an unsecured environment, as the generated code is not validated before execution.
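For readers who want a rough idea of what validating a log file against HumanEval tests involves, here is a minimal sketch. It is not the repository's `validate_py_results.py`. The record keys `solution`, `test`, and `entry_point` are assumptions about the log schema, and the function names `run_record` and `validate_file` are hypothetical. The sketch relies on the HumanEval convention that each test defines `check(candidate)`.

```python
import json
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Tuple


def run_record(record: dict, timeout_s: float = 10.0) -> bool:
    """Return True if the candidate solution passes its unit tests.

    Assumed (hypothetical) record keys: "solution", "test", "entry_point".
    """
    # Concatenate the solution, the test definition, and the call to check().
    program = "\n\n".join([
        record["solution"],
        record["test"],
        f"check({record['entry_point']})",
    ])
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        script.write_text(program, encoding="utf-8")
        try:
            # A separate process with a timeout keeps a crash or an infinite
            # loop from taking down the validator. It is NOT a sandbox.
            proc = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return False
    # A failed assertion or any other exception gives a nonzero exit code.
    return proc.returncode == 0


def validate_file(path: str) -> Tuple[int, int]:
    """Return (passed, total) for a JSON Lines file of records."""
    passed = 0
    total = 0
    with open(path, encoding="utf-8") as f:
        for line in f:
            if not line.strip():
                continue  # skip blank lines
            total += 1
            if run_record(json.loads(line)):
                passed += 1
    return passed, total
```

Calling `validate_file("<path to jsonlines file>")` returns the number of passing records and the total. Because a subprocess with a timeout offers no real isolation, the warning above still applies: run untrusted generated code only inside a container or virtual machine.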

Note: This is a spin-off implementation that implements a relaxation on the internal success criteria proposed in the original paper.

    @article{shinn2023reflexion,
      title={Reflexion: an autonomous agent with dynamic memory and self-reflection},
      author={Shinn, Noah and Labash, Beck and Gopinath, Ashwin},
      journal={arXiv preprint arXiv:2303.11366},
      year={2023}
    }