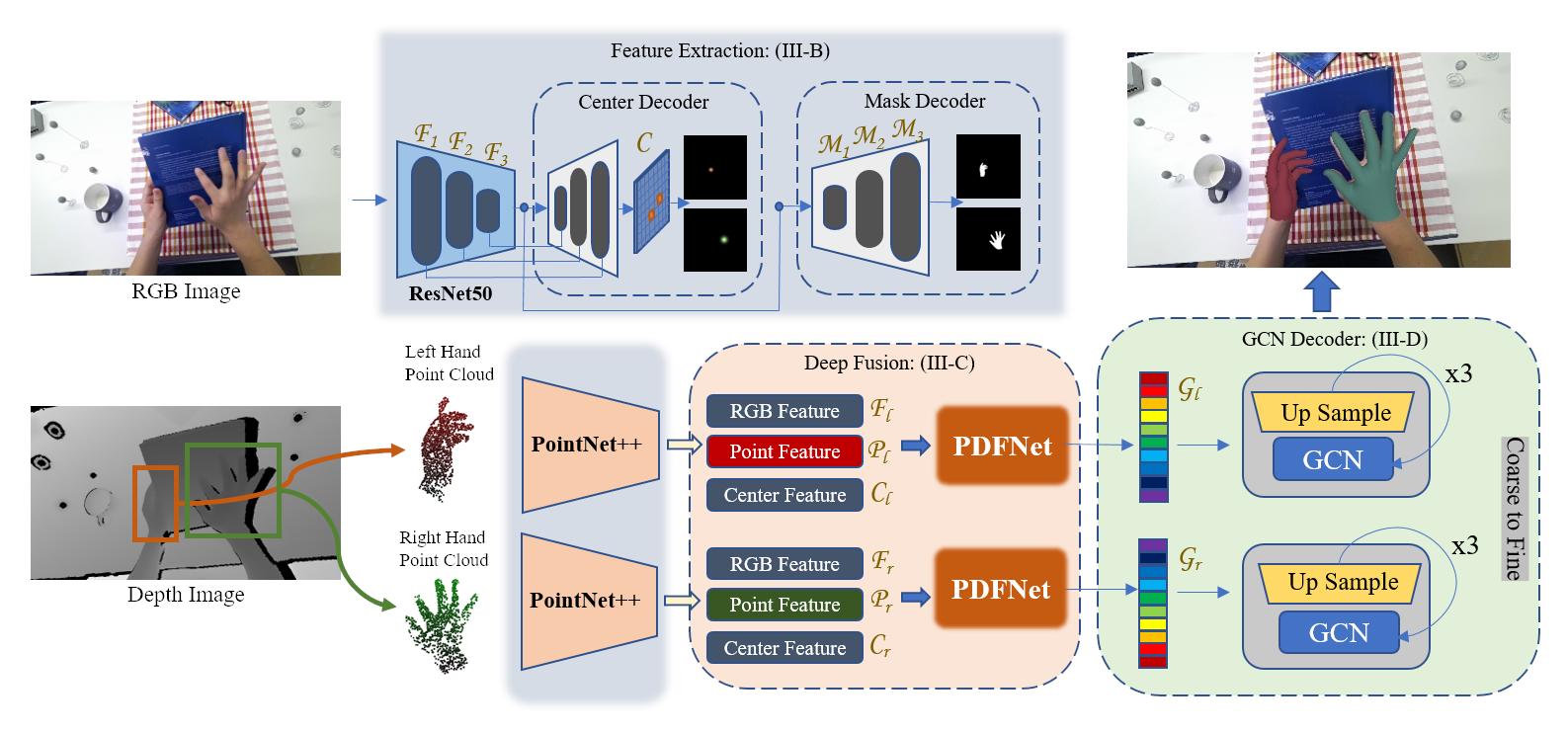

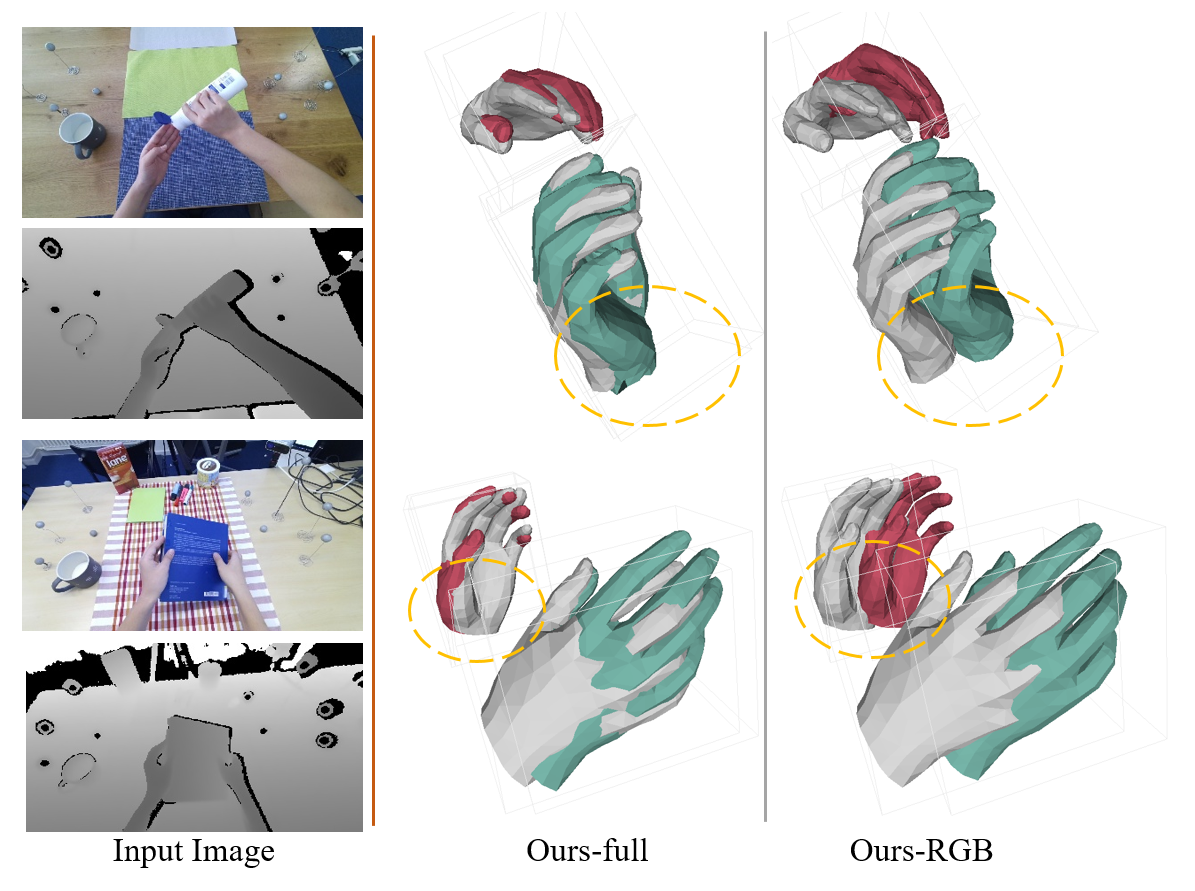

- This repo is the official PyTorch implementation of Pyramid Deep Fusion Network for Two-Hand Reconstruction from RGB-D Images.

- Environment

conda create -n PDFNet python=3.7

conda activate PDFNet

# If PyTorch fails to install, try switching your conda source to a mirror: https://mirrors.tuna.tsinghua.edu.cn/help/anaconda/

conda install pytorch==1.10.1 torchvision==0.11.2 torchaudio==0.10.1 cudatoolkit=11.3 -c pytorch -c conda-forge

# Install PyTorch3D from source if you are not using the latest PyTorch version

conda install -c fvcore -c iopath -c conda-forge fvcore iopath

conda install -c bottler nvidiacub

conda install pytorch3d -c pytorch3d

pip install -r requirements.txt
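After the install commands finish, a quick sanity check can confirm that the environment resolved as intended. The following is a minimal sketch and not part of the repository: the package list is read off the commands above, and the helper names `missing_packages` and `report` are hypothetical.

```python
import importlib.util
import sys

# Top-level package names taken from the install commands above.
# Versions are not enforced here; this only checks that each package is importable.
REQUIRED = ["torch", "torchvision", "torchaudio", "pytorch3d", "fvcore", "iopath"]


def missing_packages(names=REQUIRED):
    """Return the subset of `names` that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def report():
    """Print the Python version, any missing packages, and CUDA availability."""
    print(f"Python {sys.version_info.major}.{sys.version_info.minor}")
    missing = missing_packages()
    if missing:
        print("Missing packages:", ", ".join(missing))
        return
    import torch  # imported lazily so the check itself runs without PyTorch

    print("torch", torch.__version__, "| CUDA available:", torch.cuda.is_available())


if __name__ == "__main__":
    report()
```

Run it inside the activated `PDFNet` environment. An empty "Missing packages" result only means the packages are discoverable; it does not verify that the installed versions match the pinned ones.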

The ${ROOT} directory is organized as follows.

${ROOT}

|-- data

|-- lib

|-- outputs

|-- scripts

|-- assets

- `data` contains packaged dataset loaders and soft links to the datasets' image and annotation directories.
- `lib` contains the main code for SMHR, including dataset loader code, the network model, training code, and other utility files.
- `scripts` contains the run scripts.
- `outputs` contains logs, trained models, images, and pretrained models for model outputs.
- `assets` contains demo images.

Arrange the data directory as shown below. Using soft links to the datasets is recommended.

${ROOT}

|-- data

| |-- H2O

| | |-- ego_view

| | | |-- subject*

| | |-- label_split

| |-- H2O3D

| | |-- evaluation

| | |-- train

| | |***.txt

| |***.pth

| |***.pkl
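The layout above can be set up and checked with a few lines of Python. This is a sketch under stated assumptions: the directory list mirrors the tree shown here, while the helper names `missing_dirs` and `link_dataset` are hypothetical and do not come from the repository. The `.txt`, `.pth`, and `.pkl` files are not checked because their names are not given.

```python
from pathlib import Path

# Directories named in the tree above, relative to ${ROOT}.
EXPECTED_DIRS = [
    "data/H2O/ego_view",
    "data/H2O/label_split",
    "data/H2O3D/evaluation",
    "data/H2O3D/train",
]


def missing_dirs(root):
    """Return the expected directories that do not exist under `root`.

    `Path.is_dir()` follows soft links, so linked datasets count as present.
    """
    root = Path(root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


def link_dataset(source, target):
    """Create a soft link at `target` pointing to `source`, unless `target` exists."""
    source, target = Path(source), Path(target)
    target.parent.mkdir(parents=True, exist_ok=True)
    if not target.exists() and not target.is_symlink():
        target.symlink_to(source.resolve(), target_is_directory=True)
    return target
```

For example, `link_dataset("/path/to/H2O", "data/H2O")` followed by `missing_dirs(".")` lists whatever is still absent; the source path here is a placeholder for wherever the dataset was downloaded.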

- Download the H2O dataset from the [website]

- Download the H2O3D dataset from the [website]

- Download the pre-trained models and dataset loaders from [cloud] (extraction code: 83di) and put them in the `data` folder.

- Place the RGB-D image pairs in `assets`.

- Modify `demo.py` to use images from the H2O dataset (#L100: `base_dir = 'assets/H2O/color'`).

- Run `bash scripts/demo.sh`.

- You can see the rendered outputs in `outputs/color/`.
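Before running the demo, it may help to confirm that every color image has a depth counterpart. The sketch below is not part of the repository and rests on two assumptions that the README does not state: depth images live in a separate directory (for instance a hypothetical `assets/H2O/depth` next to `assets/H2O/color`), and paired files share the same file stem. The function name `unpaired_color_images` is likewise hypothetical.

```python
from pathlib import Path


def unpaired_color_images(color_dir, depth_dir):
    """Return the names of color files whose stem has no match in `depth_dir`.

    Assumption: a color/depth pair is identified by an identical file stem,
    e.g. color/000001.png pairs with depth/000001.png.
    """
    depth_stems = {p.stem for p in Path(depth_dir).iterdir() if p.is_file()}
    return sorted(
        p.name
        for p in Path(color_dir).iterdir()
        if p.is_file() and p.stem not in depth_stems
    )
```

Calling `unpaired_color_images("assets/H2O/color", "assets/H2O/depth")` returns an empty list when every color frame is paired. Adjust the directory names and the matching rule if the dataset uses a different convention.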

Coming Soon...

The PyTorch implementation of PointNet++ is based on Hand-Pointnet. The GCN network is based on IntagHand. We thank the authors for their great work!

If you find the code useful in your research, please consider citing the paper.

@article{RenPDFNet,
  title={Pyramid Deep Fusion Network for Two-Hand Reconstruction from RGB-D Images},
  author={Jinwei Ren and Jianke Zhu},
  journal={arXiv},
  month=jul,
  year={2023}
}