This repository contains the code for the SDS 383D (Spring 2022) course project and its continued development.

- MNIST

- CIFAR-10

- Celeb-A<sup>1</sup>

Although it is not stated explicitly in the accompanying paper or README, the preprocessing behind the precomputed Celeb-A statistics in the official repo uses a less common setting: center-cropping to 108 x 108, followed by bilinear<sup>3</sup> down-sampling to 64 x 64. This repo will release precomputed statistics for both versions: one for the original preprocessing and one for the preprocessing used in `datasets.py`. Unless otherwise noted, all experiments use the latter.
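The original preprocessing described above can be sketched roughly as follows. This is a minimal illustration, not the code of the official repo or of `datasets.py`: the helper names `center_crop_box` and `preprocess_original` are hypothetical, the 178 x 218 input size assumes the aligned Celeb-A images, and Pillow's bilinear filter may not match the official implementation pixel for pixel.

```python
def center_crop_box(width, height, crop=108):
    """Return the (left, upper, right, lower) box of a centered crop x crop square."""
    if crop > width or crop > height:
        raise ValueError("crop size exceeds image size")
    left = (width - crop) // 2
    upper = (height - crop) // 2
    return (left, upper, left + crop, upper + crop)


def preprocess_original(path, crop=108, out_size=64):
    """Center-crop, then bilinearly down-sample one image (requires Pillow)."""
    # Imported lazily so that center_crop_box works without Pillow installed.
    from PIL import Image

    with Image.open(path) as img:
        box = center_crop_box(img.width, img.height, crop)
        cropped = img.convert("RGB").crop(box)
        return cropped.resize((out_size, out_size), Image.BILINEAR)


# Assumption: aligned Celeb-A images are 178 x 218 (width x height).
print(center_crop_box(178, 218))  # (35, 55, 143, 163)
```

Calling `preprocess_original` on an image file would return a 64 x 64 RGB `PIL.Image` under these assumptions.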

usage: train.py [-h] [--model {vaegan,vae,gan}] [--backbone {resnet,dcgan}]

[--out-act {sigmoid,tanh}] [--epochs EPOCHS] [--lr LR]

[--d-factor D_FACTOR] [--g-factor G_FACTOR] [--beta1 BETA1]

[--beta2 BETA2] [--dataset {mnist,cifar10,celeba}]

[--batch-size BATCH_SIZE] [--num-workers NUM_WORKERS]

[--root ROOT] [--task {reconstruction,generation,deblur}]

[--device DEVICE] [--eval-device EVAL_DEVICE]

[--base-ch BASE_CH] [--latent-dim LATENT_DIM]

[--reconst_ch RECONST_CH] [--instance-noise]

[--fig-dir FIG_DIR] [--chkpt-dir CHKPT_DIR]

[--log-dir LOG_DIR] [--seed SEED] [--resume] [--calc-metrics]

[--chkpt-intv CHKPT_INTV] [--comment COMMENT]

[--anti-artifact]

optional arguments:

-h, --help show this help message and exit

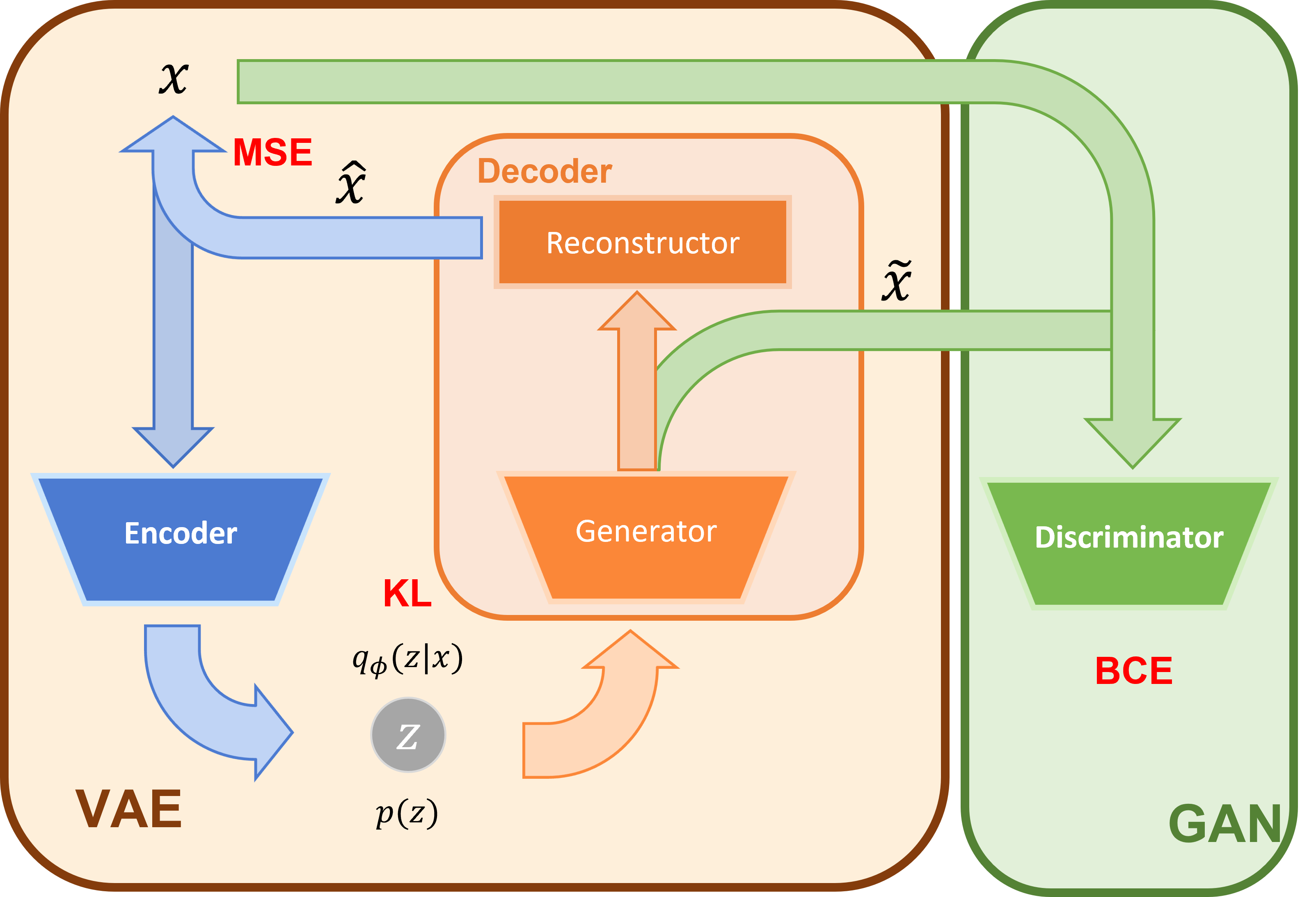

--model {vaegan,vae,gan}

--backbone {resnet,dcgan}

--out-act {sigmoid,tanh}

--epochs EPOCHS

--lr LR

--d-factor D_FACTOR

--g-factor G_FACTOR

--beta1 BETA1

--beta2 BETA2

--dataset {mnist,cifar10,celeba}

--batch-size BATCH_SIZE

--num-workers NUM_WORKERS

--root ROOT

--task {reconstruction,generation,deblur}

--device DEVICE

--eval-device EVAL_DEVICE

--base-ch BASE_CH

--latent-dim LATENT_DIM

--reconst_ch RECONST_CH

--instance-noise

--fig-dir FIG_DIR

--chkpt-dir CHKPT_DIR

--log-dir LOG_DIR

--seed SEED

--resume

--calc-metrics

--chkpt-intv CHKPT_INTV

--comment COMMENT

--anti-artifact
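As an illustration of how the flags listed above might be combined, the sketch below assembles a `train.py` command line from a dictionary of options. The helper `build_command` is hypothetical and not part of this repo, the numeric values are placeholders rather than recommended settings, and it is an assumption that this particular combination of model, backbone, and task is supported.

```python
import shlex


def build_command(script, options):
    """Build an argv list; True emits a bare flag, False or None skips it."""
    argv = ["python", script]
    for flag, value in options.items():
        if value is True:
            argv.append(flag)
        elif value is not False and value is not None:
            argv += [flag, str(value)]
    return argv


# Flag names are copied verbatim from the usage text above.
train_argv = build_command("train.py", {
    "--model": "vaegan",
    "--backbone": "dcgan",
    "--dataset": "celeba",
    "--task": "generation",
    "--out-act": "tanh",
    "--epochs": 50,        # hypothetical value
    "--batch-size": 64,    # hypothetical value
    "--calc-metrics": True,
})
print(shlex.join(train_argv))
# To launch the run: subprocess.run(train_argv, check=True)
```

Passing flag names verbatim, instead of deriving them from Python identifiers, keeps spellings such as `--reconst_ch` exactly as the parser expects them.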

```
usage: eval.py [-h] [--root ROOT] [--dataset {mnist,cifar10,celeba}]
               [--model {gan,vae,vaegan}] [--backbone {dcgan,resnet}]
               [--base-ch BASE_CH] [--latent-dim LATENT_DIM]
               [--reconst-ch RECONST_CH] [--out-act {sigmoid,tanh}]
               [--model-device MODEL_DEVICE] [--eval-device EVAL_DEVICE]
               [--eval-batch-size EVAL_BATCH_SIZE]
               [--eval-total-size EVAL_TOTAL_SIZE] [--nhood-size NHOOD_SIZE]
               [--row-batch-size ROW_BATCH_SIZE]
               [--col-batch-size COL_BATCH_SIZE] [--chkpt CHKPT]
               [--precomputed-dir PRECOMPUTED_DIR]
               [--metrics METRICS [METRICS ...]] [--save] [--anti-artifact]

optional arguments:
  -h, --help            show this help message and exit
  --root ROOT
  --dataset {mnist,cifar10,celeba}
  --model {gan,vae,vaegan}
  --backbone {dcgan,resnet}
  --base-ch BASE_CH
  --latent-dim LATENT_DIM
  --reconst-ch RECONST_CH
  --out-act {sigmoid,tanh}
  --model-device MODEL_DEVICE
  --eval-device EVAL_DEVICE
  --eval-batch-size EVAL_BATCH_SIZE
  --eval-total-size EVAL_TOTAL_SIZE
  --nhood-size NHOOD_SIZE
  --row-batch-size ROW_BATCH_SIZE
  --col-batch-size COL_BATCH_SIZE
  --chkpt CHKPT
  --precomputed-dir PRECOMPUTED_DIR
  --metrics METRICS [METRICS ...]
  --save
  --anti-artifact
```

| Model | FID<sup>2</sup> | Precision<sup>4</sup> | Recall<sup>4</sup> |
|---|---|---|---|
| VAE | 52.988 | 0.768 | 0.000 |
| VAEGAN | 42.761 | 0.795 | 0.003 |
| GAN | 16.203 | 0.750 | 0.151 |

Each 4x4 block shows, from left to right: original, VAE, VAEGAN_reconst, VAEGAN_gen.

Footnotes

1. Liu, Ziwei, et al. "Deep learning face attributes in the wild." Proceedings of the IEEE International Conference on Computer Vision. 2015.
2. Heusel, Martin, et al. "GANs trained by a two time-scale update rule converge to a local Nash equilibrium." Advances in Neural Information Processing Systems 30 (2017).
3. As indicated by `scipy.image.resize`.
4. Kynkäänniemi, Tuomas, et al. "Improved precision and recall metric for assessing generative models." Advances in Neural Information Processing Systems 32 (2019).