Han Liang, Wenqian Zhang, Wenxuan Li, Jingyi Yu, Lan Xu.

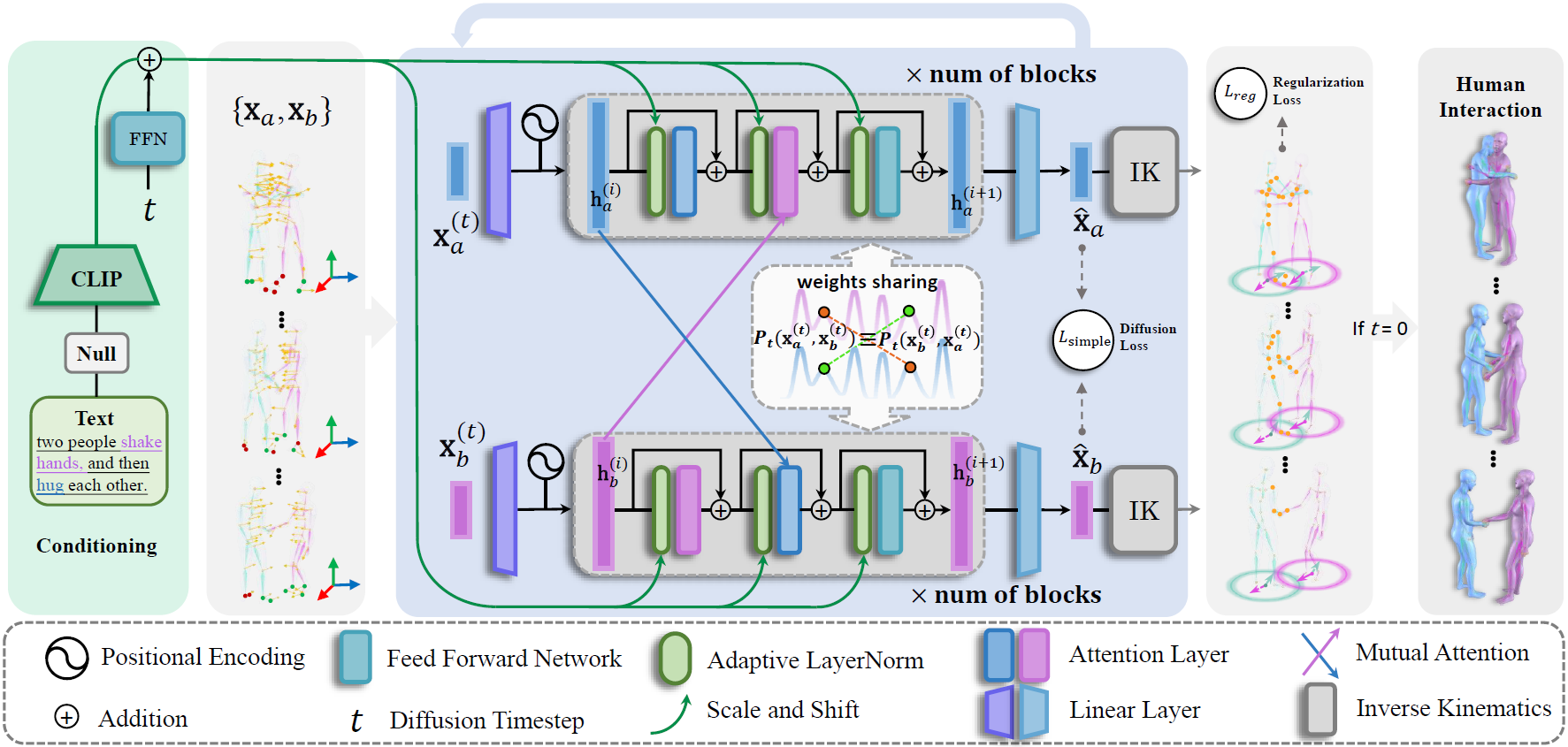

This repository contains the official implementation for the paper: InterGen: Diffusion-based Multi-human Motion Generation under Complex Interactions (IJCV 2024).

Our work is capable of simultaneously generating high-quality interactive motions of two people with only text guidance, enabling various downstream tasks including person-to-person generation, inbetweening, trajectory control and so forth.

For more qualitative results, please visit our webpage.

InterHuman is a comprehensive, large-scale 3D human interactive motion dataset covering a diverse range of 3D motions of two interacting people, each accompanied by natural language annotations.

It is made available under the Creative Commons BY-NC-SA 4.0 license. You can access the dataset for non-commercial purposes through the Google Drive link on our webpage, as long as you give appropriate credit by citing our paper and indicating any changes you have made. Redistribution of the dataset is prohibited.

This code was tested on Ubuntu 20.04.1 LTS and requires:

- Python 3.8

- conda3 or miniconda3

- CUDA-capable GPU (one is enough)
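Before creating the environment, the two hard requirements above can be checked quickly. The following is a minimal sketch using only the Python standard library. It assumes that finding `nvidia-smi` on `PATH` is an acceptable proxy for an installed NVIDIA driver; that alone does not guarantee the GPU is usable from the packages in `requirements.txt`. The function names are hypothetical and not part of this repository.

```python
import shutil
import sys


def python_matches(required=(3, 8)):
    """Return True if the running interpreter's (major, minor) equals `required`."""
    return tuple(sys.version_info[:2]) == tuple(required)


def nvidia_driver_visible():
    """Return True if the `nvidia-smi` tool is on PATH.

    Heuristic only: it indicates an NVIDIA driver is installed,
    not that a CUDA-enabled framework can actually use the GPU.
    """
    return shutil.which("nvidia-smi") is not None


if __name__ == "__main__":
    status = "OK" if python_matches() else "MISMATCH"
    print(f"Python {sys.version.split()[0]} (3.8 expected): {status}")
    print(f"nvidia-smi found: {nvidia_driver_visible()}")
```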

```shell
conda create --name intergen python=3.8
conda activate intergen
pip install -r requirements.txt
```

Download the data from our webpage and put it into `./data/`.
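After downloading, a quick check can confirm that the four subdirectories from the data layout below are present. This is a minimal sketch using only the standard library; the helper names are hypothetical, and `read_annotation` assumes each annotation file is UTF-8 text with one sentence per line, which should be verified against the actual files.

```python
from pathlib import Path

# Subdirectory names taken from the data layout in this README.
EXPECTED_SUBDIRS = ("annots", "motions", "motions_processed", "split")


def missing_subdirs(data_root="./data"):
    """Return the expected subdirectories that do not exist under data_root."""
    root = Path(data_root)
    return [name for name in EXPECTED_SUBDIRS if not (root / name).is_dir()]


def read_annotation(path):
    """Read one annotation file and return its non-empty, stripped lines.

    ASSUMPTION (hypothetical format): each of the three sentences sits on
    its own line of a UTF-8 text file.
    """
    text = Path(path).read_text(encoding="utf-8")
    return [line.strip() for line in text.splitlines() if line.strip()]


if __name__ == "__main__":
    missing = missing_subdirs()
    print("Missing subdirectories:", missing if missing else "none")
```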

```
<DATA-DIR>
./annots              // Natural language annotations; each file consists of three sentences.
./motions             // Raw motion data standardized in SMPL format, similar to AMASS.
./motions_processed   // Processed motion data: joint positions and rotations (6D representation) of the 22-joint SMPL kinematic structure.
./split               // Train/val/test split.
```

Download the pretrained model by running the shell script:

```shell
./prepare/download_pretrain_model.sh
```

Modify the config files `./configs/model.yaml` and `./configs/infer.yaml`.

Prepare the input text prompts, one per line, for example:

```
In an intense boxing match, one is continuously punching while the other is defending and counterattacking.
With fiery passion two dancers entwine in Latin dance sublime.
Two fencers engage in a thrilling duel, their sabres clashing and sparking as they strive for victory.
The two are blaming each other and having an intense argument.
Two good friends jump in the same rhythm to celebrate.
Two people bow to each other.
Two people embrace each other.
...
```

Then run inference:

```shell
python tools/infer.py
```

The results will be rendered and saved to `./results/`.
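The processed motions in `./motions_processed` store joint rotations in a 6D representation. As an illustration of how such a representation is commonly decoded, the sketch below maps one 6D vector to a 3x3 rotation matrix with Gram-Schmidt orthonormalization, following the continuous 6D representation of Zhou et al. (CVPR 2019). It assumes the six numbers are the first two columns of the rotation matrix; the exact ordering used by this repository is not stated here and should be checked against its code. `rot6d_to_matrix` is a hypothetical helper, not part of the repository.

```python
import math


def _normalize(v):
    """Scale a 3-vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    if n < 1e-8:
        raise ValueError("degenerate 6D rotation: zero-length vector")
    return [c / n for c in v]


def _cross(a, b):
    """Cross product of two 3-vectors."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def rot6d_to_matrix(r6):
    """Map a 6D rotation (two stacked 3-vectors) to a 3x3 rotation matrix.

    ASSUMPTION: r6[:3] and r6[3:] are the (not necessarily orthonormal)
    first and second columns of the matrix.
    """
    if len(r6) != 6:
        raise ValueError("expected 6 values")
    b1 = _normalize(r6[:3])
    a2 = list(r6[3:])
    # Gram-Schmidt: remove the b1 component from a2, then normalize.
    dot = sum(x * y for x, y in zip(b1, a2))
    b2 = _normalize([y - dot * x for x, y in zip(b1, a2)])
    # The third column completes a right-handed orthonormal basis.
    b3 = _cross(b1, b2)
    # b1, b2, b3 are columns; return the matrix as row-major nested lists.
    return [[b1[i], b2[i], b3[i]] for i in range(3)]
```

Applied per joint and per frame, this yields matrices usable in standard forward kinematics. Orthonormalization is needed because a stored or predicted 6D vector is not guaranteed to consist of two exactly orthonormal columns.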

To train, modify the config files `./configs/model.yaml`, `./configs/datasets.yaml`, and `./configs/train.yaml`, then run:

```shell
python tools/train.py
```

To evaluate, modify the config files `./configs/model.yaml` and `./configs/datasets.yaml`, then run:

```shell
python tools/eval.py
```

If you find our work useful in your research, please consider citing:

```bibtex
@article{liang2024intergen,
  title={Intergen: Diffusion-based multi-human motion generation under complex interactions},
  author={Liang, Han and Zhang, Wenqian and Li, Wenxuan and Yu, Jingyi and Xu, Lan},
  journal={International Journal of Computer Vision},
  pages={1--21},
  year={2024},
  publisher={Springer}
}
```

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

All material is made available under the Creative Commons BY-NC-SA 4.0 license. You can use and adapt the material for non-commercial purposes, as long as you give appropriate credit by citing our paper and indicating any changes you have made.