Code for the paper "Model Selection for Bayesian Autoencoders".

The code is currently being refactored. Feel free to contact me by email (ba-hien.tran@eurecom.fr) if you have any issues or questions.

Run the following command to install the Python packages required by our code:

pip3 install -r requirements.txt

- The MNIST dataset is already supported by the torchvision.datasets package.

- The pre-processed Frey and Yale datasets are located in the directories datasets/FreyFaces and datasets/YaleFaces, respectively.

- Please download the aligned CelebA dataset, which consists of two files: img_align_celeba.zip and list_eval_partition.txt.

- Then extract img_align_celeba.zip into the directory datasets/celeba/raw. The file list_eval_partition.txt should also be placed in this directory.
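The CelebA steps above can also be scripted. The following is a minimal sketch using only the Python standard library, not part of the repository itself. The function names and the `download_dir` argument are hypothetical, and it assumes both CelebA files were saved into the same folder.

```python
import shutil
import zipfile
from pathlib import Path


def prepare_celeba(download_dir, repo_root="."):
    """Place the two CelebA files under datasets/celeba/raw.

    `download_dir` is a hypothetical folder holding the downloaded
    img_align_celeba.zip and list_eval_partition.txt.
    """
    download_dir = Path(download_dir)
    raw_dir = Path(repo_root) / "datasets" / "celeba" / "raw"
    raw_dir.mkdir(parents=True, exist_ok=True)

    # Extract the aligned images into the raw directory.
    with zipfile.ZipFile(download_dir / "img_align_celeba.zip") as archive:
        archive.extractall(raw_dir)

    # The partition file must sit in the same directory.
    shutil.copy(download_dir / "list_eval_partition.txt", raw_dir)
    return raw_dir


def missing_dataset_paths(repo_root="."):
    """Return the expected dataset paths that do not exist yet."""
    root = Path(repo_root)
    expected = [
        root / "datasets" / "FreyFaces",
        root / "datasets" / "YaleFaces",
        root / "datasets" / "celeba" / "raw" / "list_eval_partition.txt",
    ]
    return [path for path in expected if not path.exists()]
```

Calling `missing_dataset_paths()` from the repository root before launching an experiment gives a quick check that the layout described above is in place.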

Here are a few examples of running the BAE model with an optimized prior on various datasets:

python3 experiments/bae_conv_mnist.py \
--seed=1 \
--out_dir="./exp/conv_mnist/bae_latent_50_train_200_prior_optim" \
--prior_dir="./exp/conv_mnist/prior" \
--samples_per_class=10 \
--train="True" \
--prior_type="optim" \
--training_size=200 \
--latent_size=50 \
--optimize_prior="True" \
--batch_size=64 \
--n_iters_prior=2000 \
--lr=0.003 \
--mdecay=0.05 \
--num_burn_in_steps=2000 \
--n_mcmc_samples=32 \
--keep_every=1000 \
--only_zero="True" \
--iter_start_collect=6000

python3 experiments/bae_conv_face.py \
--seed=1 \
--out_dir="./exp/conv_face/bae_latent_50_zero_train_500_prior_optim" \
--prior_dir="./exp/conv_face/prior" \
--n_epochs_pretrain=200 \
--prior_type="optim" \
--training_size=500 \
--latent_size=50 \
--optimize_prior="True" \
--batch_size=64 \
--n_iters_prior=2000 \
--lr=0.003 \
--mdecay=0.05 \
--num_burn_in_steps=2000 \
--n_mcmc_samples=32 \
--keep_every=1000 \
--iter_start_collect=6000

python3 experiments/bae_conv_celeba.py \
--seed=1 \

--out_dir="./exp/conv_celeba/bae_latent_50_train_1000_prior_optim" \

--prior_dir="./exp/conv_celeba/prior" \

--prior_type="optim" \

--train="True" \

--training_size=1000 \

--latent_size=50 \

--optimize_prior="True" \

--batch_size=64 \

--n_iters_prior=2000 \

--lr=0.003 \

--mdecay=0.05 \

--num_burn_in_steps=5000 \

--n_mcmc_samples=32 \

--keep_every=2000 \

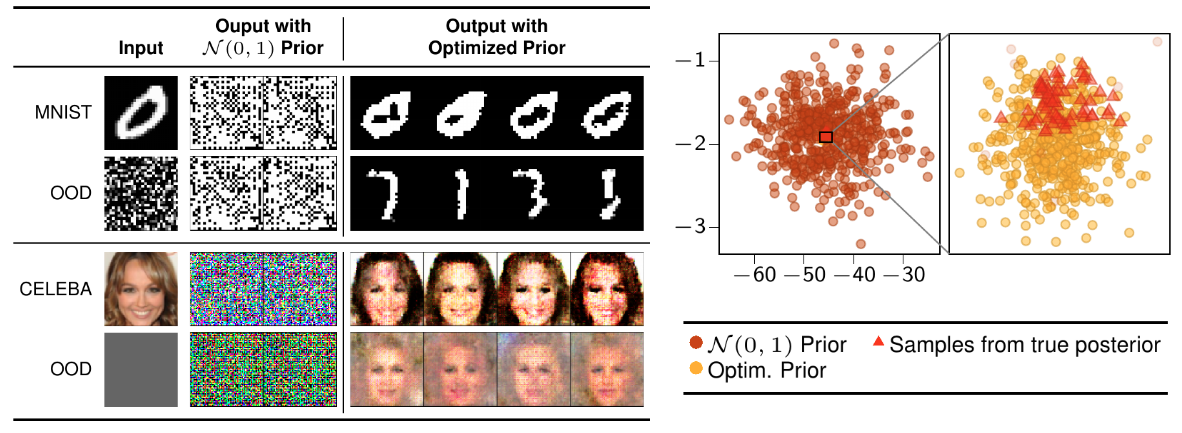

--iter_start_collect=20000- We provide some jupyter notebooks used to visualize the results.

These notebooks can be found in the directory

notebooks.
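The example commands shown earlier differ only in a handful of flags, so repeated runs can be generated programmatically. The sketch below is an illustration rather than part of the repository. It rebuilds the MNIST command for several seeds using the flag values from the MNIST example. The helper names and the per-seed `_seed_<n>` suffix on `out_dir` are hypothetical choices made here.

```python
import shlex
import subprocess

# Flag values copied from the MNIST example command.
MNIST_FLAGS = {
    "prior_dir": "./exp/conv_mnist/prior",
    "samples_per_class": 10,
    "train": "True",
    "prior_type": "optim",
    "training_size": 200,
    "latent_size": 50,
    "optimize_prior": "True",
    "batch_size": 64,
    "n_iters_prior": 2000,
    "lr": 0.003,
    "mdecay": 0.05,
    "num_burn_in_steps": 2000,
    "n_mcmc_samples": 32,
    "keep_every": 1000,
    "only_zero": "True",
    "iter_start_collect": 6000,
}


def build_command(script, **flags):
    """Turn keyword arguments into `--name=value` flags for a script."""
    command = ["python3", script]
    command += [f"--{name}={value}" for name, value in flags.items()]
    return command


def mnist_commands(seeds):
    """Build one MNIST command per seed."""
    commands = []
    for seed in seeds:
        # Hypothetical naming scheme: one output directory per seed.
        out_dir = (
            "./exp/conv_mnist/"
            f"bae_latent_50_train_200_prior_optim_seed_{seed}"
        )
        commands.append(
            build_command(
                "experiments/bae_conv_mnist.py",
                seed=seed,
                out_dir=out_dir,
                **MNIST_FLAGS,
            )
        )
    return commands


def run_all(commands, dry_run=True):
    """Print each command; launch it only when dry_run is False."""
    for command in commands:
        print(shlex.join(command))
        if not dry_run:
            # Must be executed from the repository root.
            subprocess.run(command, check=True)


if __name__ == "__main__":
    run_all(mnist_commands([1, 2, 3]))
```

With the default `dry_run=True` the script only prints the commands, which makes it easy to inspect them before starting long MCMC runs.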

When using this repository in your work, please consider citing our paper:

@inproceedings{Tran2021,
  author = {Tran, Ba-Hien and Rossi, Simone and Milios, Dimitrios and Michiardi, Pietro and Bonilla, Edwin V and Filippone, Maurizio},
  booktitle = {Advances in Neural Information Processing Systems},
  pages = {19730--19742},
  publisher = {Curran Associates, Inc.},
  title = {{Model Selection for Bayesian Autoencoders}},
  volume = {34},
  year = {2021}
}