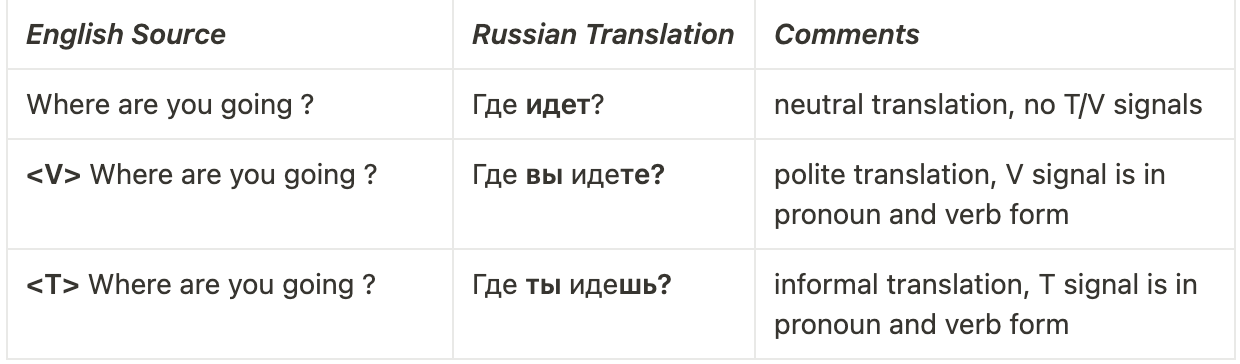

This project implements the honorific (T/V) distinction for English-to-Russian translation.

It is inspired by the article 'Controlling Politeness in Neural Machine Translation via Side Constraints' (Sennrich et al., 2016).

This README gives a short overview of the project; for a more detailed one, please read this report.

The repo consists of:

- token-based (`code/tv_detector/`) and grammar-based (`code/conll_tv_detector/`) T/V detectors
- neural translation, evaluation (BLEURT) and morphosyntactic parsing (DeepPavlov) models in Jupyter Notebook format (under `code/notebooks/`). These models are intended to be used via Google Colaboratory.
- data processing utilities (`code/helper.py`) and some examples (`code/main.py`)
- train and test corpora (under `data/`)
- predicted translations (under `translations/`)
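To give a rough idea of what a token-based T/V detector does, here is a minimal sketch. It is not the code from `code/tv_detector/`: the word lists, the function name `detect_tv` and the label `"N"` for neutral sentences are assumptions made for illustration. It also ignores the fact that Russian "вы" can be a plain plural, which is one reason a grammar-based detector is useful.

```python
import re

# Hypothetical, incomplete word lists (assumption, not taken from the repo).
T_FORMS = {"ты", "тебя", "тебе", "тобой", "твой", "твоя", "твоё", "твои"}
V_FORMS = {"вы", "вас", "вам", "вами", "ваш", "ваша", "ваше", "ваши"}


def detect_tv(sentence: str) -> str:
    """Label a Russian sentence as 'T', 'V' or 'N' (neutral or ambiguous)."""
    # Lowercase and keep only runs of Cyrillic letters as tokens.
    tokens = set(re.findall(r"[а-яё]+", sentence.lower()))
    has_t = bool(tokens & T_FORMS)
    has_v = bool(tokens & V_FORMS)
    if has_t and not has_v:
        return "T"
    if has_v and not has_t:
        return "V"
    # No marker at all, or both kinds of markers in one sentence.
    return "N"
```

For example, `detect_tv("Как ты себя чувствуешь?")` yields the informal label, while a sentence without second-person forms falls into the neutral class.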

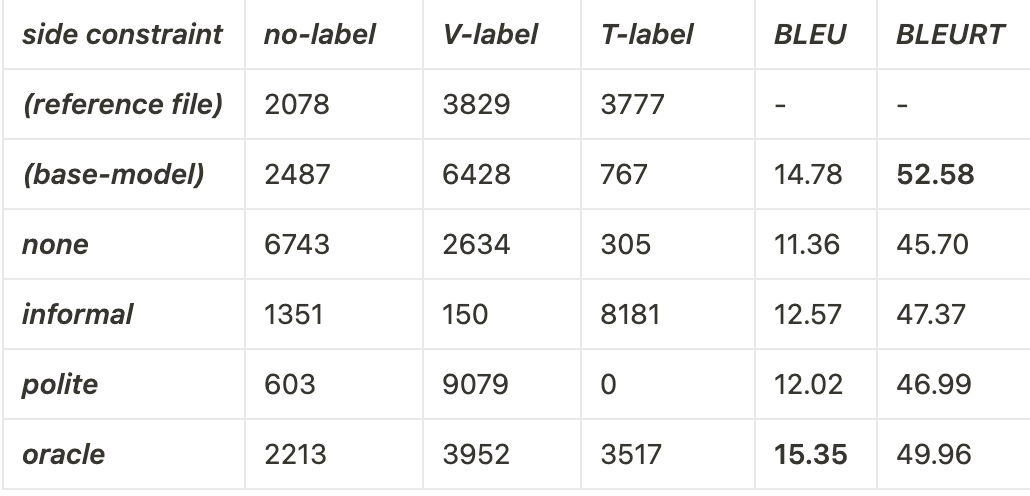

Data for training the neural model is taken from the Yandex 1M EN-RU corpus. The dataset was sampled to select 22k V-sentences, 8k T-sentences and 100k neutral sentences.
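The sampling step can be pictured roughly as follows. This is only a sketch under assumptions: the function `sample_by_label`, the `(label, source, target)` tuple layout and the label names are hypothetical, and the actual selection procedure used for the corpus may differ.

```python
import random


def sample_by_label(pairs, quotas, seed=0):
    """Randomly pick at most quotas[label] sentence pairs per label.

    pairs:  list of (label, source, target) tuples (hypothetical layout)
    quotas: dict mapping a label to the maximum number of pairs to keep
    """
    rng = random.Random(seed)
    shuffled = list(pairs)  # copy so the caller's list is not reordered
    rng.shuffle(shuffled)
    counts = {label: 0 for label in quotas}
    sample = []
    for label, src, tgt in shuffled:
        if label in counts and counts[label] < quotas[label]:
            sample.append((label, src, tgt))
            counts[label] += 1
    return sample


# Quotas matching the sizes quoted above (label names are assumptions).
QUOTAS = {"V": 22_000, "T": 8_000, "N": 100_000}
```

Calling `sample_by_label(labelled_pairs, QUOTAS)` on a labelled corpus would return a shuffled subset with at most the requested number of pairs per class.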

The test dataset was built from manually annotated data originally created for studying the deixis problem (Voita et al., 2019).

The model was developed with JoeyNMT as the base translation framework.

The main notebooks for model training and demonstration are `code/notebooks/train_TV_model.ipynb` and `code/notebooks/demo_TV_model.ipynb`.

Trained checkpoints and some data files for the demo are available on Google Drive (TV_model, base_model).

To sum up, a simple technique such as prepending T/V tokens to source sentences can make the formality of NMT output controllable.
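As an illustration of the side-constraint idea, the sketch below prepends a tag to an English source sentence before it is passed to the translation model. The token spellings `<T>` and `<V>` and the function name `add_side_constraint` are assumptions; the notebooks may use different tokens.

```python
# Hypothetical tag spellings (assumption); neutral sentences get no tag.
TV_TOKENS = {"T": "<T>", "V": "<V>"}


def add_side_constraint(source: str, label: str) -> str:
    """Prepend a T/V tag to a source sentence; leave it unchanged otherwise."""
    token = TV_TOKENS.get(label)
    if token is None:
        return source
    return f"{token} {source}"
```

At training time the label would come from a T/V detector run on the Russian reference; at inference time the user chooses it, so `add_side_constraint("How are you?", "V")` asks the model for the formal variant.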

Author: Tsimafei Prakapenka