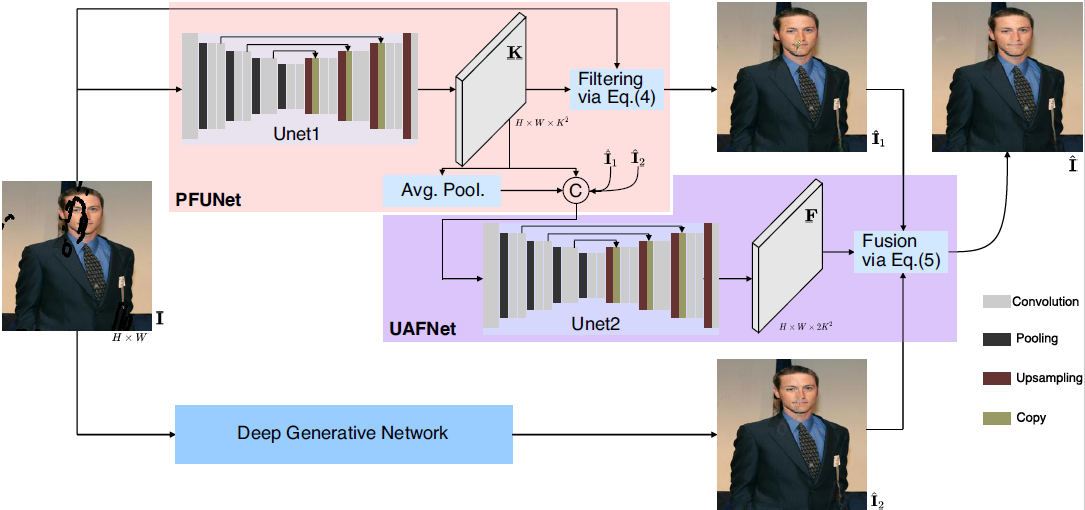

We propose a new method for image inpainting that formulates it as a mix of two problems: predictive filtering and deep generation. This work has been accepted to ACM-MM 2021. Please refer to the paper for details: https://arxiv.org/pdf/2107.04281.pdf
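To make the "predictive filtering" half of the idea concrete, here is a minimal sketch of pixel-wise filtering: every output pixel is a weighted sum of its neighbours, and each pixel has its own kernel. This is an illustration under stated assumptions, not the code in this repository. The function name `apply_pixelwise_filter` is hypothetical, the kernels are supplied by hand rather than predicted by a network, and zero padding at the borders is an assumption.

```python
def apply_pixelwise_filter(image, kernels):
    """Filter a single-channel image with a separate kernel per pixel.

    image:   H x W nested list of floats.
    kernels: H x W nested list; kernels[y][x] is a K x K nested list (K odd)
             holding the weights applied around pixel (y, x).
    Pixels outside the image are treated as zero (assumed padding choice).
    """
    height, width = len(image), len(image[0])
    output = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            kernel = kernels[y][x]
            radius = len(kernel) // 2
            total = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < height and 0 <= nx < width:
                        total += kernel[dy + radius][dx + radius] * image[ny][nx]
            output[y][x] = total
    return output


# Hand-written kernels for a 1 x 3 image whose middle pixel is a "hole".
IDENTITY = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
AVERAGE_LEFT_RIGHT = [[0, 0, 0], [0.5, 0, 0.5], [0, 0, 0]]

if __name__ == "__main__":
    damaged = [[2.0, 0.0, 4.0]]
    per_pixel_kernels = [[IDENTITY, AVERAGE_LEFT_RIGHT, IDENTITY]]
    print(apply_pixelwise_filter(damaged, per_pixel_kernels))
```

In this toy case the known pixels keep their values through the identity kernel, while the missing pixel is filled from its two neighbours. In a learned setting the kernels would come from a network instead of being written by hand.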

- Places2 Data of Places365-Standard

- CelebA (https://mmlab.ie.cuhk.edu.hk/projects/CelebA.html)

- Dunhuang

- Mask (https://nv-adlr.github.io/publication/partialconv-inpainting)

- For the data folder path (CelebA), organize the files as follows:

  - CelebA
    - train
      - 1-1.png
    - valid
      - 1-1.png
    - test
      - 1-1.png
    - mask-train
      - 1-1.png
    - mask-valid
      - 1-1.png
    - mask-test
      - 0%-20%
        - 1-1.png
      - 20%-40%
        - 1-1.png
      - 40%-60%
        - 1-1.png

- Run the code `./data/data_list.py` to generate the data list.
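As a rough illustration of what generating a data list involves, the sketch below walks one split folder and writes the image paths it finds to a text file, one per line. It is not the repository's `data_list.py`: the function names, the `.flist` output extension, and the set of accepted image extensions are all assumptions made for this example.

```python
import os

# Assumed set of image extensions; adjust to match your data.
IMAGE_EXTENSIONS = (".png", ".jpg", ".jpeg")


def build_file_list(folder):
    """Return a sorted list of image paths found under `folder` (recursive)."""
    paths = []
    for root, _dirs, files in os.walk(folder):
        for name in files:
            if name.lower().endswith(IMAGE_EXTENSIONS):
                paths.append(os.path.join(root, name))
    return sorted(paths)


def write_file_list(folder, list_path):
    """Write one image path per line to `list_path`; return how many were written."""
    paths = build_file_list(folder)
    with open(list_path, "w") as handle:
        for path in paths:
            handle.write(path + "\n")
    return len(paths)


if __name__ == "__main__":
    # Split names follow the folder layout described above.
    splits = ("train", "valid", "test", "mask-train", "mask-valid", "mask-test")
    for split in splits:
        folder = os.path.join("CelebA", split)
        if os.path.isdir(folder):
            count = write_file_list(folder, split + ".flist")
            print(split, count)
```

Because the walk is recursive, the ratio subfolders under `mask-test` (`0%-20%`, `20%-40%`, `40%-60%`) end up in a single list. To keep one list per ratio, call `write_file_list` on each subfolder separately.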

We release our pretrained models:

- pretrained model (CelebA) at models
- pretrained model (Places2) at models
- pretrained model (Dunhuang) at models

To train, run `python train.py`. The parameters are set in `checkpoints/config.yml` and `kpn/config.py`.

To test, run `python test.py`. The parameters are set in `checkpoints/config.yml` and `kpn/config.py`.

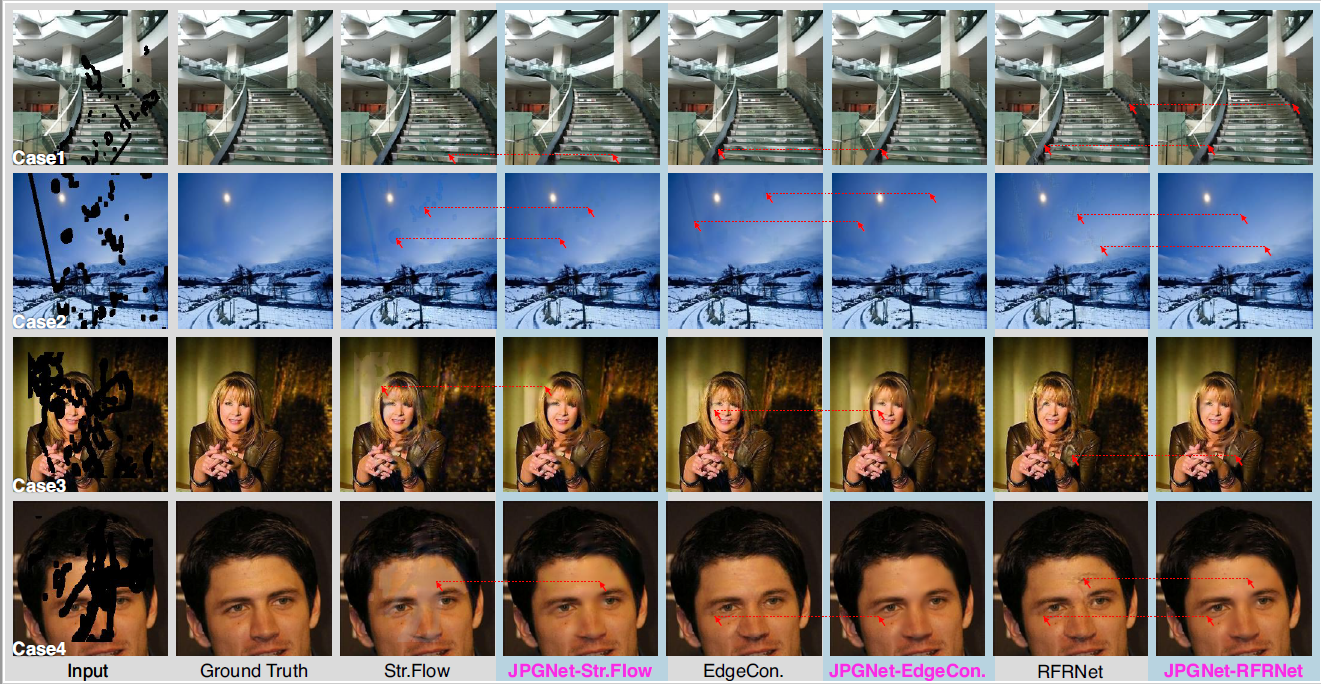

- Comparison with SOTA methods; see the paper for details.

More details are coming soon

@article{guo2021jpgnet,
  title={JPGNet: Joint Predictive Filtering and Generative Network for Image Inpainting},
  author={Guo, Qing and Li, Xiaoguang and Juefei-Xu, Felix and Yu, Hongkai and Liu, Yang and others},
  journal={ACM-MM},
  year={2021}
}

Parts of this code were derived from:

- https://github.com/tsingqguo/efficientderain
- https://github.com/knazeri/edge-connect
- https://github.com/RenYurui/StructureFlow
- https://github.com/jingyuanli001/RFR-Inpainting