This app demonstrates Weaviate's multi-modal capabilities.

It uses a CLIP model to encode images and text into the same vector space.

To run this example, you need:

- Docker (to run Weaviate)

- Python 3.8 or higher

Once those are in place, follow these steps:

- Install the Python dependencies.

  `pip install -r requirements.txt`

- Run Docker Compose to spin up a Weaviate instance and the CLIP inference container.

  `docker compose up -d`

- Create the collection definition, then import the data and some pre-prepared queries.

  `python add_data.py`

- Start the Streamlit app.

  `streamlit run app.py`

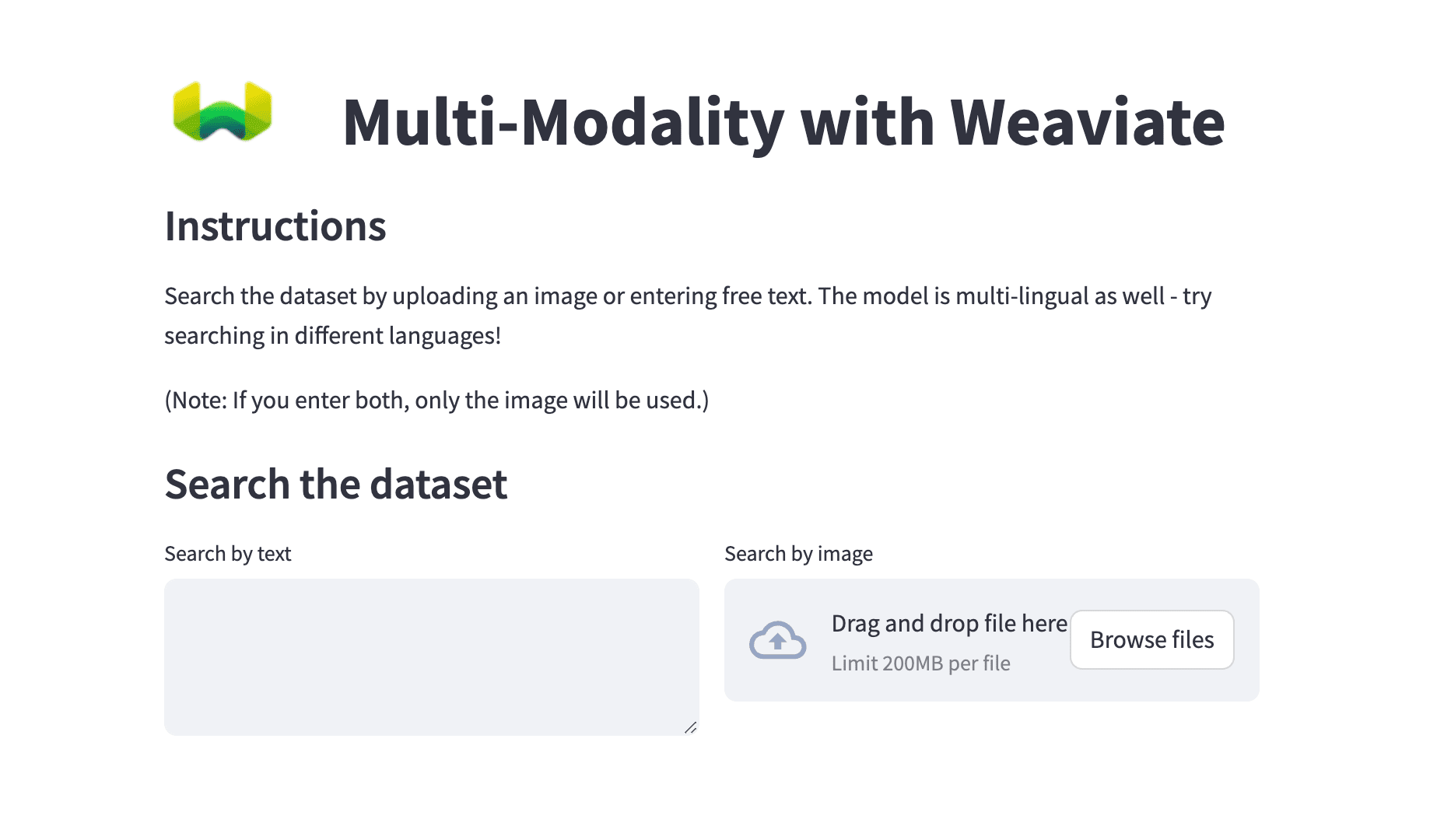

Input a search query into the text box, or upload an image.

The app returns the top 6 results from the Weaviate instance, sorted by the cosine similarity between the query's vector and each object's vector, most similar first.
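To make the ranking concrete, here is a minimal sketch of sorting by cosine similarity and keeping the top results. It is not the app's code: Weaviate performs the search server-side, and the brute-force loop below is only illustrative. The function names (`cosine_similarity`, `top_k`), the file names, and the 3-dimensional toy vectors are all hypothetical; real CLIP embeddings have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vector, objects, k=6):
    """Return the k (name, similarity) pairs most similar to the query.

    `objects` maps a hypothetical object name to its vector.
    """
    scored = [
        (name, cosine_similarity(query_vector, vector))
        for name, vector in objects.items()
    ]
    # Highest similarity first, as in the app's result list.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy stand-ins for image embeddings (assumed values, not real CLIP output).
toy_objects = {
    "forest.jpg": [0.9, 0.1, 0.0],
    "universe.jpg": [0.0, 0.2, 0.9],
    "beach.jpg": [0.5, 0.5, 0.1],
}

# A toy query vector; in the app this would come from encoding text or an image.
results = top_k([1.0, 0.0, 0.0], toy_objects, k=2)
for name, score in results:
    print(f"{name}: {score:.3f}")
```

Because text and images are encoded into the same vector space, the same comparison works whether the query vector came from a sentence or from an uploaded picture.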

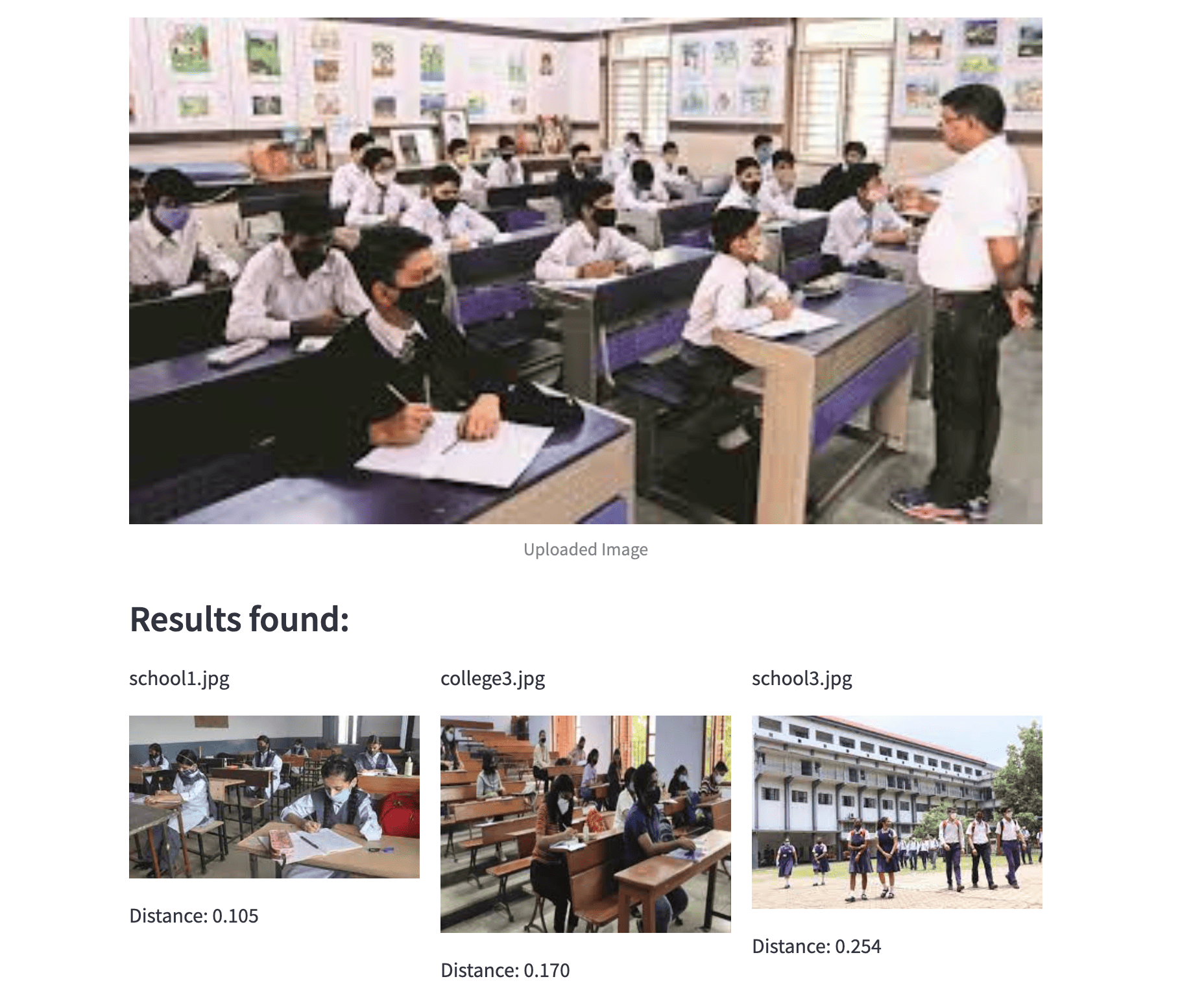

Example search results for an image query:

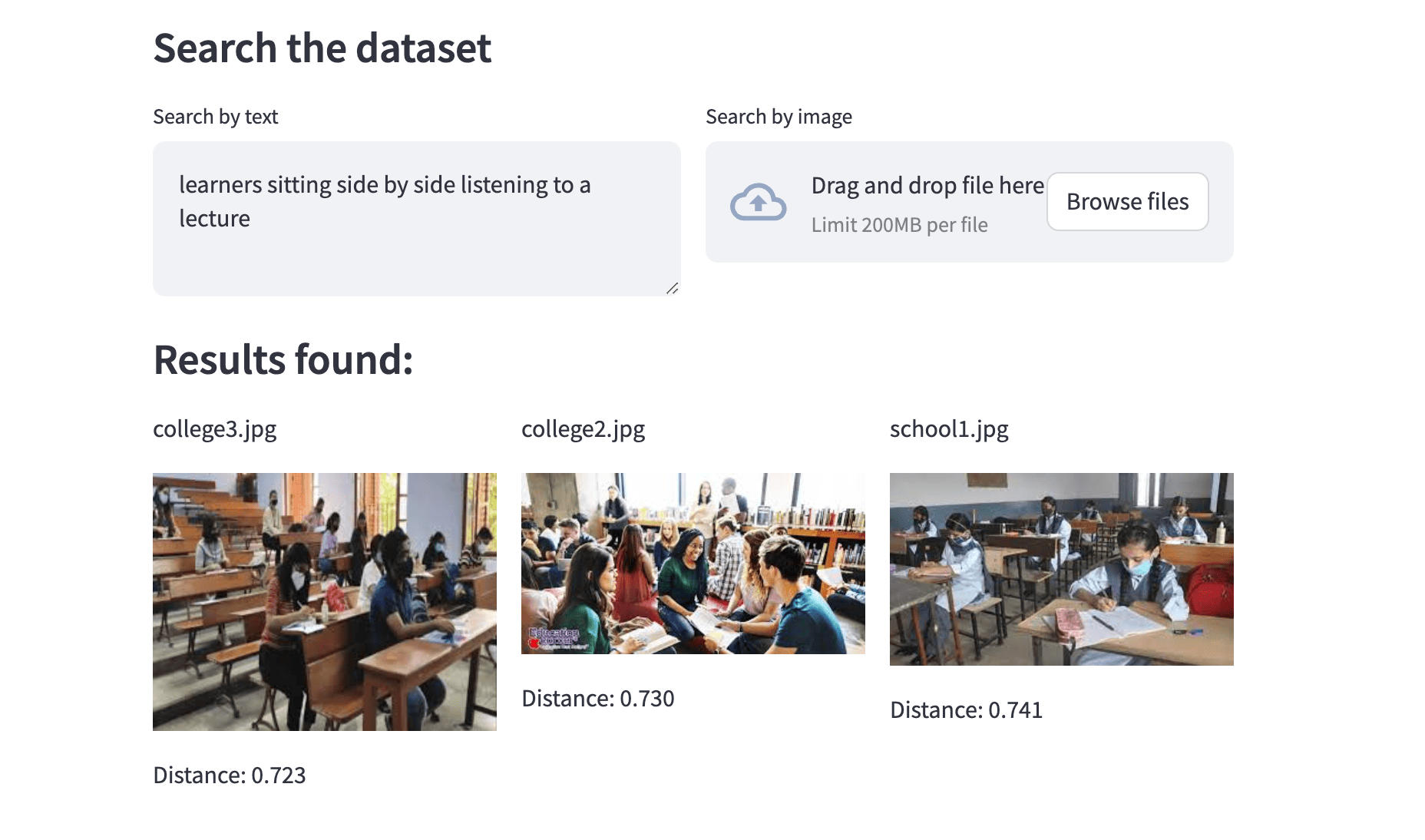

Example search results for a text query:

Note: The model used is multilingual, so it can understand queries in multiple languages. Try searching with an image, then try entering a description of that image in different languages!

Universe image from Unsplash

Forest image from Unsplash