This is a custom output for Microsoft.Diagnostics.EventFlow to insert events into Google BigQuery.

The general flow of the process:

- Convert Microsoft.Diagnostics.EventFlow's events(EventData) to Google BigQuery's rows data(RowsData) array.

- If

TableIdhas date format strings, expand it. - If

AutoCreateTableenabled, create table if not exists. - Call tabledata().insertAll() to stream insert rows data into BigQuery with retrying exponential backoff manner.

To quickly get started, you can create a simple console application in VisualStudio as described below or just download and run PlayGround project.

- Prepare Dataset. See Creating and Using Datasets

- Prepare ServiceAccount and set private key json file path to

GOOGLE_APPLICATION_CREDENTIALSenvironment variable. See Authentication

PM> Install-Package Microsoft.Diagnostics.EventFlow.Inputs.Trace

PM> Install-Package Mamemaki.EventFlow.Outputs.BigQuery

PM> Install-Package Microsoft.Diagnostics.EventFlow.Outputs.StdOutputAdd a JSON file named "eventFlowConfig.json" to your project and set the Build Action property of the file to "Copy if Newer". Set the content of the file to the following:

{

"inputs": [

{

"type": "Trace",

"traceLevel": "Warning"

}

],

"outputs": [

{

"type": "StdOutput"

},

{

"type": "BigQuery",

"projectId": "xxxxxx-nnnn",

"datasetId": "xxxxxxxx",

"tableId": "from_eventflow_{yyyyMMdd}",

"tableSchemaFile": ".\\tableSchema.json",

"autoCreateTable": true

}

],

"schemaVersion": "2016-08-11",

"extensions": [

{

"category": "outputFactory",

"type": "BigQuery",

"qualifiedTypeName": "Mamemaki.EventFlow.Outputs.BigQuery.BigQueryOutputFactory, Mamemaki.EventFlow.Outputs.BigQuery"

}

]

}Replace projectId, datasetId as your environment.

Add a JSON file named "tableSchema.json" to your project and set the Build Action property of the file to "Copy if Newer". Set the content of the file to the following:

[

{

"name": "Timestamp",

"type": "TIMESTAMP",

"mode": "REQUIRED"

},

{

"name": "Message",

"type": "STRING",

"mode": "REQUIRED"

}

]Create an EventFlow pipeline in your application code using the code below. Run your application and see your traces in console output or in Google BigQuery.

using (var pipeline = DiagnosticPipelineFactory.CreatePipeline("eventFlowConfig.json"))

{

System.Diagnostics.Trace.TraceWarning("EventFlow is working!");

Console.WriteLine("Press any key to exit...");

Console.ReadKey(intercept: true);

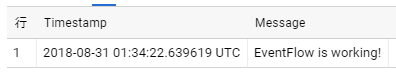

}Query result in Google BigQuery console:

| Parameter | Description | Required(default) |

|---|---|---|

projectId |

Project id of Google BigQuery. | Yes |

datasetId |

Dataset id of Google BigQuery. | Yes |

tableId |

Table id of Google BigQuery. The string enclosed in brackets can be expanded through DateTime.Format(). e.g. "accesslog_{yyyyMMdd}" => accesslog_20181231 | Yes |

autoCreateTable |

If set true, check table exsisting and create table dynamically. see Dynamic table creating. | No(false) |

tableSchemaFile |

Json file that define Google BigQuery table schema. | Yes |

insertIdFieldName |

The field name of InsertId. If set %uuid% generate uuid each time. if not set InsertId will not set. see Specifying insertId property. |

No(null) |

See Quota policy a section in the Google BigQuery document.

This library refers Application Default credentials(ADC). You can use GOOGLE_APPLICATION_CREDENTIALS environment variable to set your ServiceAccount key. See https://cloud.google.com/docs/authentication/getting-started

Your ServiceAccount need permissions as bellow

bigquery.tables.updateDatabigquery.tables.createif useautoCreateTable

tableId accept DateTime.ToString() format to construct table id.

Table id's formatted at runtime using the UTC time.

For example, with the tableId is set to accesslog_{yyyyMM01}, table ids accesslog_20181101, accesslog_20181201 and so on.

Note that the timestamp of logs and the date in the table id do not always match, because there is a time lag between collection and transmission of logs.

There is one method to describe the schema of the target table.

- Load a schema file in JSON.

On this method, set tableSchemaFile to a path to the JSON-encoded schema file which you used for creating the table on BigQuery. see table schema for detail information.

Example:

[

{

"name": "Timestamp",

"type": "TIMESTAMP",

"mode": "REQUIRED"

},

{

"name": "FormattedMessage",

"type": "STRING",

"mode": "REQUIRED"

}

]BigQuery uses insertId property to detect duplicate insertion requests (see data consistency in Google BigQuery documents).

You can set insertIdFieldName option to specify the field to use as insertId property.

If set %uuid% to insertIdFieldName, generate uuid each time.

When autoCreateTable is set to true, check to exists the table before insertion, then create table if does not exist. When table id changed, do this sequence again. In other words, no table existing check occurred if not change table id.

To find the value corresponding to the BigQuery table field from EventData, we use the following process.

- Find matching name from common EventData properties.

- Find matching name from Payloads.

- Error if field mode is "REQUIRED", else will not set the field value.

Supported common EventData properties:

| Name | Description | Data type |

|---|---|---|

Timestamp |

Timestamp of the event | TIMESTAMP |

ProviderName |

Provider name | STRING |

Level |

Level of the event | INTEGER |

Keywords |

Keywords for the event | INTEGER |

NOTE: EventData value's data type and BigQuery table field's data type must be matched too.