A PyTorch implementation of EmpiricalMVM

EmpiricalMVM is an implementation of

"An Empirical Study of End-to-End Video-Language Transformers with Masked Visual Modeling"

Tsu-Jui Fu*, Linjie Li*, Zhe Gan, Kevin Lin, William Yang Wang, Lijuan Wang, and Zicheng Liu

in Conference on Computer Vision and Pattern Recognition (CVPR) 2023

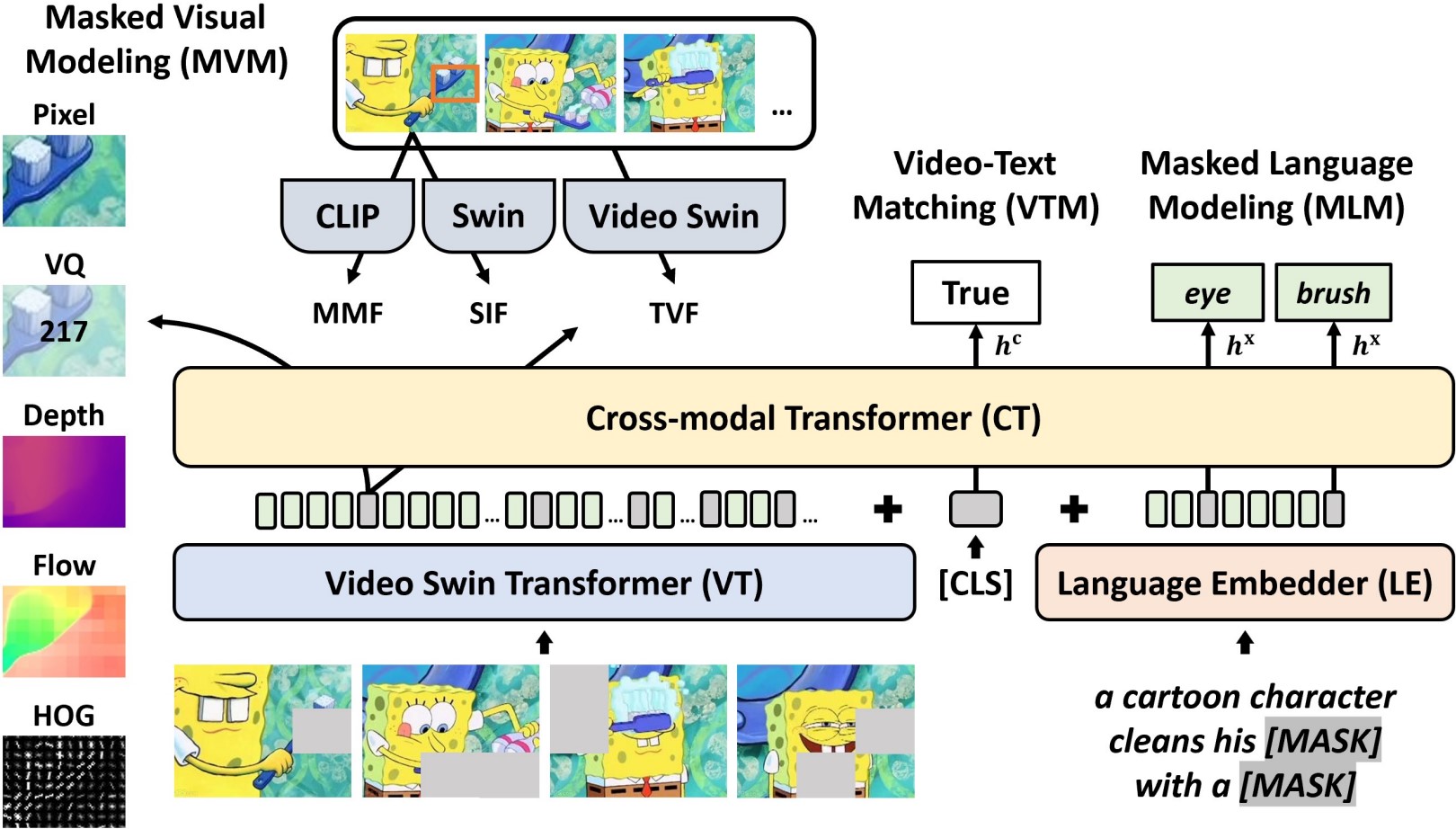

We systematically examine the potential of MVM in the context of VidL learning. Specifically, we base our study on a fully end-to-end VIdeO-LanguagE Transformer (VIOLET), where the supervision from MVM training can be backpropagated to the video pixel space. In total, eight different reconstructive targets of MVM are explored, from low-level pixel values and oriented gradients to high-level depth maps, optical flow, discrete visual tokens, and latent visual features. We conduct comprehensive experiments and provide insights into the factors leading to effective MVM training, resulting in an enhanced model, VIOLETv2.
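
To make the idea of a reconstructive target concrete, here is a minimal, framework-free sketch of a masked reconstruction loss: only patches hidden from the model contribute to the loss. The helper names (`mvm_l1_loss`, `sample_mask`), the L1 distance, and the 50% mask ratio are illustrative assumptions, not the settings used in this repo.

```python
import random


def sample_mask(num_patches, mask_ratio, rng):
    """Pick round(num_patches * mask_ratio) patch positions to mask."""
    num_masked = round(num_patches * mask_ratio)
    masked = set(rng.sample(range(num_patches), num_masked))
    return [i in masked for i in range(num_patches)]


def mvm_l1_loss(predicted, target, mask):
    """Mean absolute error computed over masked patches only.

    predicted, target: list of patches, each a list of floats.
    mask: list of bools, True where the patch was hidden from the model.
    """
    total, count = 0.0, 0
    for pred_patch, tgt_patch, is_masked in zip(predicted, target, mask):
        if not is_masked:
            continue  # visible patches add no reconstruction loss
        for p, t in zip(pred_patch, tgt_patch):
            total += abs(p - t)
            count += 1
    return total / count if count else 0.0


# Toy data: 4 patches with 2 values each. In practice the target would be
# one of the eight options (pixel values, HOG, depth, ...).
target = [[0.0, 1.0], [0.5, 0.5], [1.0, 0.0], [0.2, 0.8]]
predicted = [[0.0, 1.0], [0.0, 0.0], [1.0, 0.0], [0.2, 0.6]]
mask = [False, True, False, True]
loss = mvm_l1_loss(predicted, target, mask)

# Random masking (assumed ratio 0.5, fixed seed for repeatability).
random_mask = sample_mask(len(target), 0.5, random.Random(0))
```

Because the loss is defined on the target's own space, swapping the target (for example from pixel values to depth maps) changes what `target` holds but not the structure of the computation.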

This code was developed with Python 3.9, PyTorch 1.11, and TorchVision 0.12.

Download the pretraining datasets (WebVid2.5M & CC3M) and pretrain via single-node multi-GPU distributed training.

Edit "mvm_target" in _args/args_pretrain.json to choose the MVM target. Available values: "3d_feature", "2d_feature", "2d_clip_feature", "pixel", "hog", "optical_flow", "depth", "vq".

CUDA_VISIBLE_DEVICES='0,1,2,3,4,5,6,7' LOCAL_SIZE='8' python -m torch.distributed.launch --nproc_per_node=8 --master_port=5566 main_pretrain_yaml.py --config _args/args_pretrain.json
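
Changing the MVM target in the config can also be scripted, which helps when sweeping over several targets. The sketch below is a hypothetical helper (`set_mvm_target` is not part of this repo). It assumes "mvm_target" is a top-level key and keeps whatever shape the file already uses (a list or a single string), since the exact schema is not documented here. The demo writes to a temporary stand-in file rather than the real config.

```python
import json
import tempfile
from pathlib import Path

# The eight target names listed for "mvm_target".
MVM_TARGETS = {
    "3d_feature", "2d_feature", "2d_clip_feature", "pixel",
    "hog", "optical_flow", "depth", "vq",
}


def set_mvm_target(config_path, targets):
    """Validate target names, then write them to the 'mvm_target' key."""
    unknown = [t for t in targets if t not in MVM_TARGETS]
    if unknown:
        raise ValueError(f"unknown mvm_target value(s): {unknown}")

    path = Path(config_path)
    config = json.loads(path.read_text())

    # Assumption: preserve the existing shape of the value (list or string).
    if isinstance(config.get("mvm_target"), list):
        config["mvm_target"] = list(targets)
    elif len(targets) == 1:
        config["mvm_target"] = targets[0]
    else:
        raise ValueError("config stores a single target; pass exactly one")

    path.write_text(json.dumps(config, indent=2))
    return config


# Demo on a stand-in config, not the repo's real file.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = Path(tmp) / "args_pretrain.json"
    demo_path.write_text(json.dumps({"mvm_target": ["3d_feature"]}))
    set_mvm_target(demo_path, ["pixel", "hog"])
    reloaded = json.loads(demo_path.read_text())
```

Validating against the known names before touching the file avoids launching a long pretraining run with a misspelled target.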

We provide ablation pretrained checkpoints (Table 1 & 6).

Download downstream datasets and our best pretrained checkpoint.

- Multiple-Choice Question Answering (TGIF-Action, TGIF-Transition, MSRVTT-MC, and LSMDC-MC)

CUDA_VISIBLE_DEVICES='0,1,2,3' python -m torch.distributed.launch --nproc_per_node=4 --master_port=5566 main_qamc_tsv_mlm_gen_ans_idx.py --config _args/args_tgif-action.json

CUDA_VISIBLE_DEVICES='0,1,2,3' python -m torch.distributed.launch --nproc_per_node=4 --master_port=5566 main_qamc_tsv_mlm_gen_ans_idx.py --config _args/args_tgif-transition.json

CUDA_VISIBLE_DEVICES='0,1,2,3' python -m torch.distributed.launch --nproc_per_node=4 --master_port=5566 main_qamc_tsv.py --config _args/args_msrvtt-mc.json

CUDA_VISIBLE_DEVICES='0,1,2,3' python -m torch.distributed.launch --nproc_per_node=4 --master_port=5566 main_qamc_tsv.py --config _args/args_lsmdc-mc.json

- Open-Ended Question Answering (TGIF-Frame, MSRVTT-QA, MSVD-QA, and LSMDC-FiB)

CUDA_VISIBLE_DEVICES='0,1,2,3' python -m torch.distributed.launch --nproc_per_node=4 --master_port=5566 main_qaoe_tsv_mlm_head.py --config _args/args_tgif-frame.json

CUDA_VISIBLE_DEVICES='0,1,2,3' python -m torch.distributed.launch --nproc_per_node=4 --master_port=5566 main_qaoe_tsv_mlm_head.py --config _args/args_msrvtt-qa.json

CUDA_VISIBLE_DEVICES='0,1,2,3' python -m torch.distributed.launch --nproc_per_node=4 --master_port=5566 main_qaoe_tsv_mlm_head.py --config _args/args_msvd-qa.json

CUDA_VISIBLE_DEVICES='0,1,2,3' python -m torch.distributed.launch --nproc_per_node=4 --master_port=5566 main_qaoe_tsv_lsmdc_fib.py --config _args/args_lsmdc-fib.json

- Text-to-Video Retrieval (MSRVTT, DiDeMo, and LSMDC)

CUDA_VISIBLE_DEVICES='0,1,2,3' python -m torch.distributed.launch --nproc_per_node=4 --master_port=5566 main_retrieval_tsv.py --config _args/args_msrvtt-retrieval.json

CUDA_VISIBLE_DEVICES='0,1,2,3' python eval_retrieval_tsv.py --config _args/args_msrvtt-retrieval.json

CUDA_VISIBLE_DEVICES='0,1,2,3' python -m torch.distributed.launch --nproc_per_node=4 --master_port=5566 main_retrieval_tsv.py --config _args/args_didemo-retrieval.json

CUDA_VISIBLE_DEVICES='0,1,2,3' python eval_retrieval_tsv.py --config _args/args_didemo-retrieval.json

CUDA_VISIBLE_DEVICES='0,1,2,3' python -m torch.distributed.launch --nproc_per_node=4 --master_port=5566 main_retrieval_tsv.py --config _args/args_lsmdc-retrieval.json

CUDA_VISIBLE_DEVICES='0,1,2,3' python eval_retrieval_tsv.py --config _args/args_lsmdc-retrieval.json
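
The downstream commands above all follow one pattern: a training script, a config file, and a GPU list whose length must match `--nproc_per_node`. The sketch below generates those command strings from a lookup table so the two stay in sync. `DOWNSTREAM`, `launch_command`, and `eval_command` are hypothetical helpers for illustration; the script and config names are copied from the commands above.

```python
import shlex

# task name -> (training script, config file), as used in the commands above
DOWNSTREAM = {
    "tgif-action": ("main_qamc_tsv_mlm_gen_ans_idx.py", "_args/args_tgif-action.json"),
    "tgif-transition": ("main_qamc_tsv_mlm_gen_ans_idx.py", "_args/args_tgif-transition.json"),
    "msrvtt-mc": ("main_qamc_tsv.py", "_args/args_msrvtt-mc.json"),
    "lsmdc-mc": ("main_qamc_tsv.py", "_args/args_lsmdc-mc.json"),
    "tgif-frame": ("main_qaoe_tsv_mlm_head.py", "_args/args_tgif-frame.json"),
    "msrvtt-qa": ("main_qaoe_tsv_mlm_head.py", "_args/args_msrvtt-qa.json"),
    "msvd-qa": ("main_qaoe_tsv_mlm_head.py", "_args/args_msvd-qa.json"),
    "lsmdc-fib": ("main_qaoe_tsv_lsmdc_fib.py", "_args/args_lsmdc-fib.json"),
    "msrvtt-retrieval": ("main_retrieval_tsv.py", "_args/args_msrvtt-retrieval.json"),
    "didemo-retrieval": ("main_retrieval_tsv.py", "_args/args_didemo-retrieval.json"),
    "lsmdc-retrieval": ("main_retrieval_tsv.py", "_args/args_lsmdc-retrieval.json"),
}


def _with_devices(gpus, args):
    """Prefix a command with CUDA_VISIBLE_DEVICES for the given GPU ids."""
    devices = ",".join(str(g) for g in gpus)
    return f"CUDA_VISIBLE_DEVICES='{devices}' " + " ".join(shlex.quote(a) for a in args)


def launch_command(task, gpus=(0, 1, 2, 3), master_port=5566):
    """Build the distributed training command for one downstream task."""
    script, config = DOWNSTREAM[task]
    return _with_devices(gpus, [
        "python", "-m", "torch.distributed.launch",
        f"--nproc_per_node={len(gpus)}",  # one process per visible GPU
        f"--master_port={master_port}",
        script, "--config", config,
    ])


def eval_command(task, gpus=(0, 1, 2, 3)):
    """Build the separate evaluation command used by the retrieval tasks."""
    if not task.endswith("-retrieval"):
        raise ValueError("only retrieval tasks have a separate eval step")
    _, config = DOWNSTREAM[task]
    return _with_devices(gpus, ["python", "eval_retrieval_tsv.py", "--config", config])


cmd = launch_command("tgif-action")
two_gpu_cmd = launch_command("msvd-qa", gpus=(0, 1))
retrieval_eval = eval_command("didemo-retrieval")
```

Deriving `--nproc_per_node` from the GPU list means that running on fewer GPUs only requires changing one argument.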

We also provide our best downstream checkpoints (Table 8 & 9).

| | TGIF-Action | TGIF-Transition | MSRVTT-MC | LSMDC-MC |
|---|---|---|---|---|
| Paper | 94.8 | 99.0 | 97.6 | 84.4 |
| Repo | 94.9 | 99.0 | 96.8 | 84.4 |

| | TGIF-Frame | MSRVTT-QA | MSVD-QA | LSMDC-FiB |
|---|---|---|---|---|
| Paper | 72.8 | 44.5 | 54.7 | 56.9 |
| Repo | 72.7 | 44.5 | 54.6 | 56.9 |

| | MSRVTT-T2V | DiDeMo-T2V | LSMDC-T2V |
|---|---|---|---|
| Paper | 37.2 / 64.8 / 75.8 | 47.9 / 76.5 / 84.1 | 24.0 / 43.5 / 54.1 |
| Repo | 36.3 / 64.9 / 75.5 | 46.0 / 74.1 / 83.9 | 25.1 / 44.2 / 54.9 |
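
When reproducing results, it can help to quantify how far the released checkpoints are from the paper numbers. The short sketch below computes the repo-minus-paper difference for the multiple-choice and open-ended QA scores listed above. The numbers are copied from the tables; the names `RESULTS` and `repo_minus_paper` are illustrative.

```python
# Scores copied from the tables above: task -> (paper, repo)
RESULTS = {
    "TGIF-Action": (94.8, 94.9),
    "TGIF-Transition": (99.0, 99.0),
    "MSRVTT-MC": (97.6, 96.8),
    "LSMDC-MC": (84.4, 84.4),
    "TGIF-Frame": (72.8, 72.7),
    "MSRVTT-QA": (44.5, 44.5),
    "MSVD-QA": (54.7, 54.6),
    "LSMDC-FiB": (56.9, 56.9),
}


def repo_minus_paper(results):
    """Per-task difference, rounded to the one decimal the tables report."""
    return {
        name: round(repo - paper, 1)
        for name, (paper, repo) in results.items()
    }


deltas = repo_minus_paper(RESULTS)

# Task with the largest absolute gap between repo and paper.
largest_gap = max(deltas, key=lambda name: abs(deltas[name]))

for name, delta in deltas.items():
    print(f"{name:16s} {delta:+.1f}")
```

A gap larger than the differences shown here when rerunning a task may point to a setup difference (for example, GPU count or library versions) rather than ordinary run-to-run variation.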

@inproceedings{fu2023empirical-mvm,

author = {Tsu-Jui Fu* and Linjie Li* and Zhe Gan and Kevin Lin and William Yang Wang and Lijuan Wang and Zicheng Liu},

title = {{An Empirical Study of End-to-End Video-Language Transformers with Masked Visual Modeling}},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2023}

}

@inproceedings{fu2021violet,

author = {Tsu-Jui Fu and Linjie Li and Zhe Gan and Kevin Lin and William Yang Wang and Lijuan Wang and Zicheng Liu},

title = {{VIOLET: End-to-End Video-Language Transformers with Masked Visual-token Modeling}},

booktitle = {arXiv:2111.1268},

year = {2021}

}