A TensorFlow implementation of PreservingGAN

PreservingGAN is an implementation of

"Region-Semantics Preserving Image Synthesis"

Kang-Jun Liu, Tsu-Jui Fu, Shan-Hung Wu

in Asian Conference on Computer Vision (ACCV) 2018

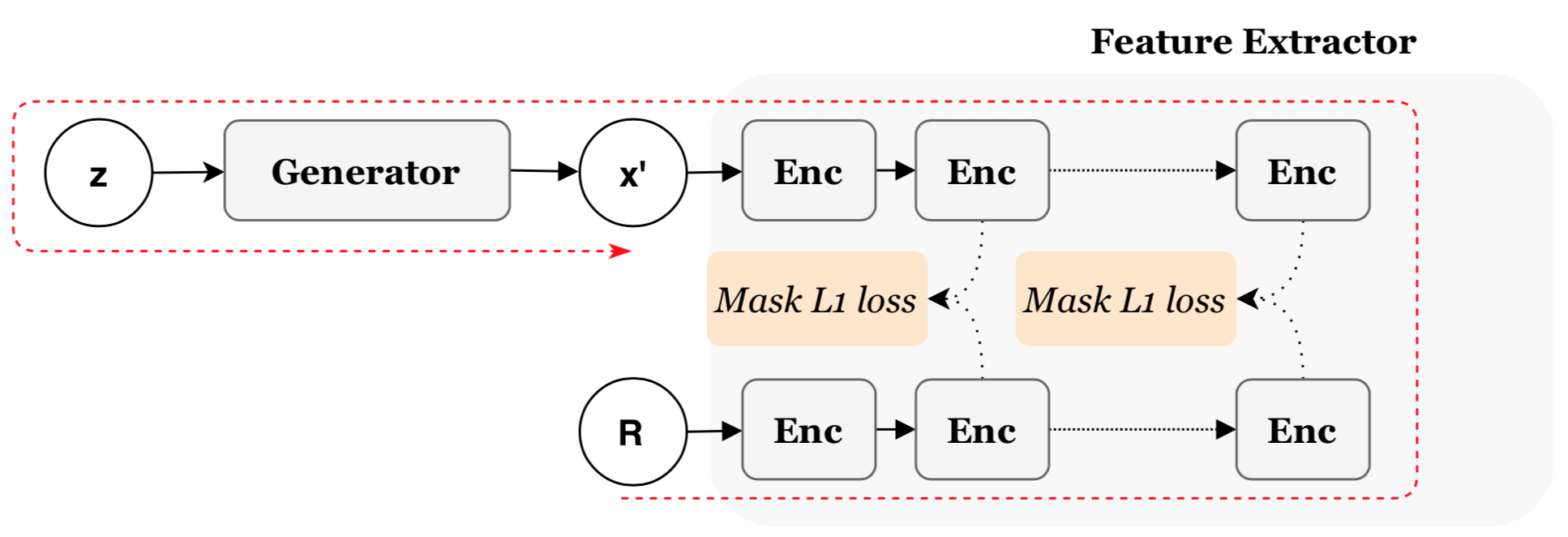

Given a reference image and a region R, Fast-RSPer synthesizes an image by finding, via gradient descent, an input variable z for the generator such that, at a deep layer where neurons capture the semantics of the reference region R, the feature extractor maps the synthesized region to features similar to those of the reference region. Since both the generator and the feature extractor are pre-trained, Fast-RSPer has no dedicated training phase and can generate images efficiently.
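The search over z described above can be illustrated with a toy sketch. This is not the repository's code: the linear maps `A` (standing in for the pre-trained generator) and `B` (standing in for the pre-trained feature extractor), the binary `mask` for region R, and all dimensions are assumptions chosen so the example runs with NumPy alone. The actual models are deep networks and the gradient would come from TensorFlow's automatic differentiation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy stand-ins (assumptions, not the repo's models):
# a linear "generator" A and a linear "feature extractor" B.
z_dim, img_dim, feat_dim = 4, 8, 3
A = rng.normal(size=(img_dim, z_dim))     # generator: x = A @ z
B = rng.normal(size=(feat_dim, img_dim))  # extractor: f = B @ x

# Binary mask selecting region R (here: first half of the flattened image).
mask = np.zeros(img_dim)
mask[: img_dim // 2] = 1.0

# Reference image drawn from the toy generator, so a matching z exists.
reference = A @ rng.normal(size=z_dim)
target = B @ (mask * reference)           # features of the reference region

# Combined linear map z -> features of the synthesized region.
M = B @ (mask[:, None] * A)


def region_loss(z):
    """Squared distance between synthesized and reference region features."""
    diff = M @ z - target
    return float(diff @ diff)


def region_grad(z):
    """Analytic gradient of region_loss with respect to z."""
    return 2.0 * M.T @ (M @ z - target)


def fit_z(steps=500):
    """Plain gradient descent on z; generator and extractor stay fixed."""
    # Step size 1/L, where L = 2 * sigma_max(M)^2 is the gradient's Lipschitz constant.
    lr = 1.0 / (2.0 * np.linalg.norm(M, 2) ** 2)
    z = np.zeros(z_dim)
    for _ in range(steps):
        z = z - lr * region_grad(z)
    return z


initial_loss = region_loss(np.zeros(z_dim))
z_hat = fit_z()
final_loss = region_loss(z_hat)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.6f}")
```

Only z is updated in the loop; `A` and `B` are never modified, which mirrors the point that no training phase is needed when both networks are pre-trained.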

This code is implemented in Python 3 with TensorFlow.

The following libraries are also required:

- Command line

`python -m main_bedroom`

- Ipynb

`PreservingGAN_Bedroom.ipynb`

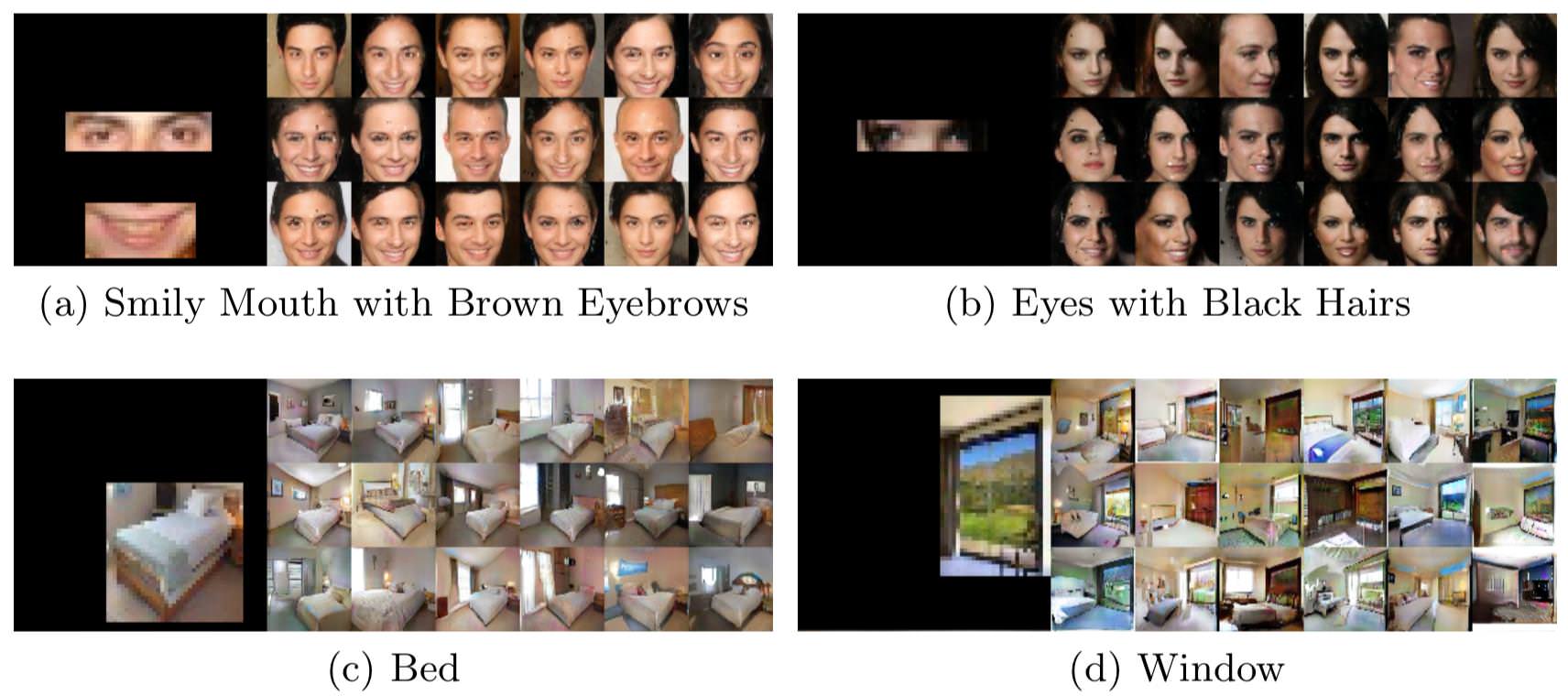

Here are some example inputs.

@inproceedings{liu2018preserving-gan,
  author = {Kang-Jun Liu and Tsu-Jui Fu and Shan-Hung Wu},
  title = {{Region-Semantics Preserving Image Synthesis}},
  booktitle = {Asian Conference on Computer Vision (ACCV)},
  year = {2018}
}

- Our CelebA model is based on EBGAN

- Our Bedroom model is based on WGAN-GP

- Our PreservingGAN is also based on NeuralStyle