PyTorch implementation of LADA.

Local Context-Aware Active Domain Adaptation

Tao Sun, Cheng Lu, and Haibin Ling

ICCV 2023

Active Domain Adaptation (ADA) queries the labels of a small number of selected target samples to help adapt a model from a source domain to a target domain. The local context of the queried data is important, especially when the domain gap is large. However, this has not been fully explored by existing ADA works.
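The ADA protocol described in the paragraph above can be illustrated with a generic multi-round loop. This is a minimal sketch, not this repository's code. It assumes that the labeling budget is a fraction of the target set (for example 5%) and that it is split evenly across selection rounds. The function `active_da_loop` and the `score_fn`/`train_fn` callbacks are hypothetical placeholders.

```python
from typing import Callable, List, Sequence, Set


def active_da_loop(
    target_ids: Sequence[int],
    budget_ratio: float,
    num_rounds: int,
    score_fn: Callable[[List[int]], List[float]],
    train_fn: Callable[[Set[int]], None],
) -> Set[int]:
    """Hypothetical ADA loop: score the unlabeled target pool, query the
    top-scoring samples, then adapt the model on all labeled target data."""
    total = int(len(target_ids) * budget_ratio)  # e.g. 5% of the target set
    # Assumption: split the budget evenly, spreading any remainder over early rounds.
    per_round = [total // num_rounds] * num_rounds
    for i in range(total % num_rounds):
        per_round[i] += 1

    labeled: Set[int] = set()
    for budget in per_round:
        pool = [t for t in target_ids if t not in labeled]
        scores = score_fn(pool)  # higher score = more informative
        ranked = sorted(zip(scores, pool), reverse=True)
        # In a real run the oracle would reveal the labels of these samples here.
        labeled.update(t for _, t in ranked[:budget])
        train_fn(labeled)  # adapt the model with the enlarged labeled set
    return labeled
```

Here the active criterion only enters through `score_fn`. The selection strategies listed in the table of active criteria would plug in at that point.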

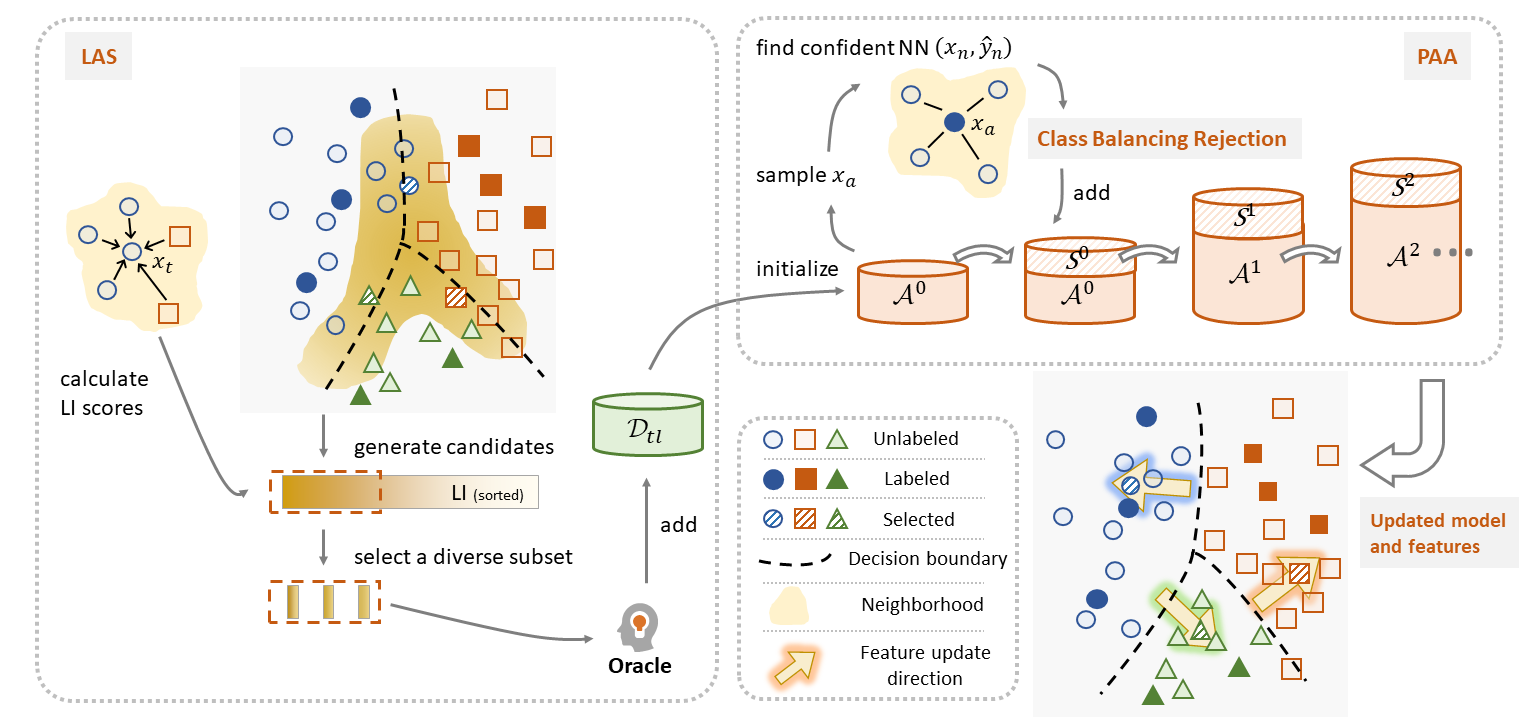

In this paper, we propose a Local context-aware ADA framework, named LADA, to address this issue. To select informative target samples, we devise a novel criterion based on the local inconsistency of model predictions. Since the labeling budget is usually small, fine-tuning the model on only the queried data can be inefficient. We therefore progressively augment the labeled target data with confident neighbors in a class-balanced manner.
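The README does not give the exact formula of the local-inconsistency criterion. The sketch below shows one plausible instantiation, which is our assumption and not the paper's definition. It computes the KL divergence between a sample's softmax prediction and the mean prediction of its `k` nearest neighbours, using cosine similarity in feature space. The function name `local_inconsistency` is hypothetical.

```python
import numpy as np


def local_inconsistency(features: np.ndarray, probs: np.ndarray, k: int = 5) -> np.ndarray:
    """Hypothetical score: KL(p_i || mean of the k nearest neighbours' predictions).

    features: (N, D) target features; probs: (N, C) softmax outputs.
    """
    # Cosine similarity between L2-normalised features.
    f = features / np.linalg.norm(features, axis=1, keepdims=True)
    sim = f @ f.T
    np.fill_diagonal(sim, -np.inf)          # a sample is not its own neighbour
    nbrs = np.argsort(-sim, axis=1)[:, :k]  # (N, k) nearest-neighbour indices
    p_bar = probs[nbrs].mean(axis=1)        # (N, C) mean neighbour prediction
    eps = 1e-12                             # avoid log(0)
    return np.sum(probs * (np.log(probs + eps) - np.log(p_bar + eps)), axis=1)
```

A sample whose prediction disagrees with its neighbourhood gets a high score. A selection step would then favour the top-scoring samples. Any additional diversity step that the actual LAS criterion may use is not shown here.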

Experiments validate that the proposed criterion chooses more informative target samples than existing active selection strategies. Furthermore, our full method surpasses recent state-of-the-art ADA methods on various benchmarks.

We experimented with python==3.8, pytorch==1.8.0, cudatoolkit==11.1.

To start, download the Office-31, Office-Home, and VisDA datasets and set up their paths in the ./data folder.

| Active Criteria | Paper | Implementation |
|---|---|---|
| Random | - | random |
| Entropy | - | entropy |
| Margin | - | margin |
| LeastConfidence | - | leastConfidence |
| CoreSet | ICLR 2018 | coreset |
| AADA | WACV 2020 | AADA |
| BADGE | ICLR 2020 | BADGE |
| CLUE | ICCV 2021 | CLUE |
| MHP | CVPR 2023 | MHP |
| LAS (ours) | ICCV 2023 | LAS |
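The first four criteria in the table are standard uncertainty baselines. The NumPy sketch below shows their textbook scoring rules on softmax outputs, with higher scores meaning a sample is queried first. The function names are hypothetical and are not the repository's implementation. The other criteria (CoreSet, AADA, BADGE, CLUE, MHP, LAS) are more involved and are not sketched here.

```python
import numpy as np


def random_score(probs: np.ndarray, seed: int = 0) -> np.ndarray:
    # Random: ignore the model and draw uniform scores.
    return np.random.default_rng(seed).random(len(probs))


def entropy_score(probs: np.ndarray) -> np.ndarray:
    # Entropy: high when the prediction is spread over many classes.
    return -np.sum(probs * np.log(probs + 1e-12), axis=1)


def margin_score(probs: np.ndarray) -> np.ndarray:
    # Margin: small gap between the top-2 probabilities -> high score.
    top2 = np.sort(probs, axis=1)[:, -2:]
    return -(top2[:, 1] - top2[:, 0])


def least_confidence_score(probs: np.ndarray) -> np.ndarray:
    # Least confidence: low maximum probability -> high score.
    return 1.0 - probs.max(axis=1)


def select_top(scores: np.ndarray, budget: int) -> np.ndarray:
    # Indices of the `budget` highest-scoring samples.
    return np.argsort(-scores)[:budget]
```

For example, `select_top(margin_score(probs), 2)` returns the two samples whose top-2 class probabilities are closest together.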

| Domain Adaptation | Paper | Implementation |
|---|---|---|
| Fine-tuning (joint label set) | - | ft_joint |
| Fine-tuning | - | ft |
| DANN | JMLR 2016 | dann |
| MME | ICCV 2019 | mme |
| MCC | ECCV 2020 | MCC |
| CDAC | CVPR 2021 | CDAC |
| RAA (ours) | ICCV 2023 | RAA |
| LAA (ours) | ICCV 2023 | LAA |
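LADA progressively augments the labeled target data with confident neighbours in a class-balanced manner. The sketch below shows one such augmentation step under assumptions of our own, so it is not the repository's LAA implementation. The assumptions are: neighbours come from cosine k-nearest-neighbour search, a neighbour is accepted when its predicted class matches the anchor's label and its confidence exceeds `threshold`, and class balance is enforced by a fixed per-class cap. The name `augment_anchor_set` and all of its parameters are hypothetical.

```python
import numpy as np


def augment_anchor_set(features, probs, anchor_idx, anchor_labels,
                       k=5, threshold=0.9, per_class=10):
    """Hypothetical step: add confident, label-consistent neighbours of labeled
    anchors, keeping at most `per_class` new samples per class."""
    anchor_set = {int(i) for i in anchor_idx}
    f = features / np.linalg.norm(features, axis=1, keepdims=True)
    pred, conf = probs.argmax(axis=1), probs.max(axis=1)

    candidates = {}  # class -> {sample index: confidence}
    for a, y in zip(anchor_idx, anchor_labels):
        sim = f @ f[a]
        sim[list(anchor_set)] = -np.inf  # only consider unlabeled samples
        for j in np.argsort(-sim)[:k]:
            j = int(j)
            if j in anchor_set:
                continue
            if pred[j] == y and conf[j] >= threshold:
                candidates.setdefault(int(y), {})[j] = float(conf[j])

    new_idx, new_labels = [], []
    for y, cand in candidates.items():
        # Class balance: keep only the most confident `per_class` candidates.
        best = sorted(cand, key=cand.get, reverse=True)[:per_class]
        new_idx.extend(best)
        new_labels.extend([y] * len(best))
    return new_idx, new_labels
```

To make the augmentation progressive, this step could be repeated after each training round, adding the returned samples (with their pseudo-labels) to the anchor set.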

To obtain the results of the baseline active selection criteria on Office-Home with a 5% labeling budget, run:

```shell
for ADA_DA in 'ft' 'mme'; do
  for ADA_AL in 'random' 'entropy' 'margin' 'coreset' 'leastConfidence' 'BADGE' 'AADA' 'CLUE' 'MHP'; do
    python main.py --cfg configs/officehome.yaml --gpu 0 --log log/oh/baseline ADA.AL $ADA_AL ADA.DA $ADA_DA
  done
done
```

To reproduce the results of LADA on Office-Home with a 5% labeling budget, run:

```shell
# LAS + fine-tuning with CE loss
python main.py --cfg configs/officehome.yaml --gpu 0 --log log/oh/LADA ADA.AL LAS ADA.DA ft

# LAS + MME model adaptation
python main.py --cfg configs/officehome.yaml --gpu 0 --log log/oh/LADA ADA.AL LAS ADA.DA mme

# LAS + Random Anchor set Augmentation (RAA)
python main.py --cfg configs/officehome.yaml --gpu 0 --log log/oh/LADA ADA.AL LAS ADA.DA RAA

# LAS + Local context-aware Anchor set Augmentation (LAA)
python main.py --cfg configs/officehome.yaml --gpu 0 --log log/oh/LADA ADA.AL LAS ADA.DA LAA
```

More commands can be found in run.sh.
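The trailing arguments in these commands (for example `ADA.AL LAS ADA.DA ft`) are dotted `KEY VALUE` pairs that override entries of the YAML config. This matches the convention of yacs' `CfgNode.merge_from_list`, but we have not verified that main.py uses yacs. The plain-Python sketch below shows how such overrides can be applied to a nested config. The function name is hypothetical. Real yacs additionally parses and type-checks each value, whereas this sketch keeps values as strings.

```python
from typing import Any, Dict, List


def merge_from_list(cfg: Dict[str, Any], opts: List[str]) -> Dict[str, Any]:
    """Apply overrides like ['ADA.AL', 'LAS', 'ADA.DA', 'ft'] to a nested dict."""
    if len(opts) % 2 != 0:
        raise ValueError("overrides must come in KEY VALUE pairs")
    for key, value in zip(opts[0::2], opts[1::2]):
        node = cfg
        *parents, leaf = key.split(".")
        for p in parents:
            node = node[p]  # raises KeyError for an unknown section
        if leaf not in node:
            raise KeyError(f"unknown config key: {key}")
        node[leaf] = value  # values stay strings in this sketch
    return cfg
```

Rejecting unknown keys means a typo such as `ADA.Al` fails loudly instead of silently running with the default criterion.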

The pipeline and the implementations of the baseline methods are adapted from CLUE and deep-active-learning. We follow EADA for the configuration files.

If you find our paper and code useful for your research, please consider citing:

```
@article{sun2022local,
  author  = {Sun, Tao and Lu, Cheng and Ling, Haibin},
  title   = {Local Context-Aware Active Domain Adaptation},
  journal = {IEEE/CVF International Conference on Computer Vision},
  year    = {2023}
}
```