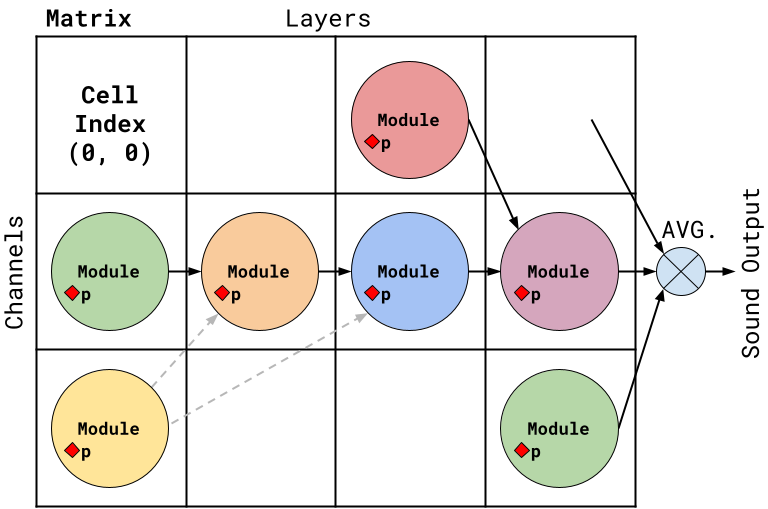

This repo contains the implementation of DiffMoog, a differentiable, subtractive, modular synthesizer that incorporates the standard architecture and sound modules commonly found in commercial synthesizers.

The repo contains code for the synthesizer modules and architecture, as well as a platform for training and evaluating sound matching models using the synthesizer as a basis.

Paper: https://arxiv.org/abs/2401.12570

To get started, clone the repo, install the requirements listed in `requirements.txt`, and then follow the instructions below.

To create a dataset, run the file src/dataset/create_data.py with the following arguments:

- `-g`: the index of the GPU to use
- `-s`: the dataset split to create, either `train` or `val`
- `--size`: the number of samples to create
- `-n`: the name of the dataset
- `-c`: the name of the chain to use from `src/synth/synth_chains.py`
- `-sd`: the signal duration in seconds
- `-no`: the note-off time in seconds (the time at which the note is released, equivalent to a MIDI note-off)
- `-bs`: the batch size (the number of samples to create in parallel)

Example:

    -g 0 -s train --size 50000 -n reduced_simple_osc -c REDUCED_SIMPLE_OSC -sd 4.0 -no 3.0 -bs 1

The dataset will be saved to `root/data/{dataset_name}`. Run the script once with `-s train` and once with `-s val` to generate both splits.
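If you prefer to drive dataset generation from Python (for example, to create the `train` and `val` splits in one go), the documented flags can be assembled into a command line. The sketch below is a minimal illustration under stated assumptions: `build_create_data_cmd` is a hypothetical helper, not part of the repository; the check that the note-off time does not exceed the signal duration is an assumed sanity check, not documented behavior; and the script path assumes you run it from the repository root.

```python
"""Hypothetical helper that assembles a create_data.py command line.

Flag names mirror the ones documented in this README; the helper itself
and its sanity checks are illustrative assumptions, not repository code.
"""
import shlex
import sys


def build_create_data_cmd(
    gpu: int,
    split: str,
    size: int,
    name: str,
    chain: str,
    signal_duration: float,
    note_off: float,
    batch_size: int = 1,
    script: str = "src/dataset/create_data.py",  # assumes repo root as cwd
) -> list[str]:
    """Return an argv list for create_data.py after basic sanity checks."""
    if split not in ("train", "val"):
        raise ValueError("split must be 'train' or 'val'")
    if size <= 0 or batch_size <= 0:
        raise ValueError("size and batch_size must be positive")
    # Assumed check: the note should be released within the signal duration.
    if not 0 <= note_off <= signal_duration:
        raise ValueError("note_off must lie in [0, signal_duration]")
    return [
        sys.executable, script,
        "-g", str(gpu),
        "-s", split,
        "--size", str(size),
        "-n", name,
        "-c", chain,
        "-sd", str(signal_duration),
        "-no", str(note_off),
        "-bs", str(batch_size),
    ]


if __name__ == "__main__":
    # Reproduce the README example for train, then the recommended val size.
    for split, size in (("train", 50_000), ("val", 5_000)):
        cmd = build_create_data_cmd(0, split, size, "reduced_simple_osc",
                                    "REDUCED_SIMPLE_OSC", 4.0, 3.0, 1)
        print(shlex.join(cmd))
        # subprocess.run(cmd, check=True)  # uncomment to actually run it
```

Building an argument list rather than a single shell string avoids quoting problems when the command is passed to `subprocess.run`.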

Before you start training a model, make sure the following preconditions are met:

- Dataset conditions:
  - A dataset has been created in the directory `root/data/{dataset_name}` with four folders: `train`, `val`, `train_nsynth`, and `val_nsynth`. The recommended dataset sizes are:
    - `train`: 50,000 samples
    - `val`: 5,000 samples
    - `train_nsynth`: 18,000 samples
    - `val_nsynth`: 2,000 samples
  - The `train` and `val` folders are populated automatically by the `create_data.py` script, as described in the dataset generation section above. The script generates several files inside these folders, including `{sound_id}.wav` audio files (under `wav_files/`), `commit_and_args.txt`, `params_dataset.csv`, and `params_dataset.pkl`.
  - The `train_nsynth` and `val_nsynth` folders must be created manually and should contain the NSynth dataset. Place the `.wav` files inside a subfolder named `wav_files`. The notebook `root/misc_notebooks/get_nsynth_dataset.ipynb` can help with this process.
- Configuration file:
  - A configuration file (typically at `root/configs/{config_name}.yaml`) contains the model configuration settings. See the example configuration file `root/configs/example_config.yaml` for an explanation of the available fields and options.
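The NSynth folders described above have to be filled by hand. One way to do this, sketched below, is to copy a random subset of NSynth `.wav` files into the expected `wav_files` subfolder, sized to the recommended 18,000 (train) and 2,000 (val) samples. This assumes NSynth has already been downloaded and extracted so that its `.wav` files sit in a single directory (the `nsynth-train/audio` path in the example is an assumed location). `copy_nsynth_subset` is a hypothetical helper, and the repository's `get_nsynth_dataset.ipynb` notebook may select files differently.

```python
"""Hypothetical helper for filling train_nsynth/ and val_nsynth/ by hand.

Assumes NSynth .wav files were already extracted into a flat source
directory. The name and the random-subset strategy are illustrative
assumptions, not taken from the DiffMoog repository.
"""
import random
import shutil
from pathlib import Path


def copy_nsynth_subset(src_dir: Path, dataset_dir: Path, split: str,
                       n_samples: int, seed: int = 0) -> int:
    """Copy up to n_samples random .wav files into {dataset_dir}/{split}/wav_files.

    Returns the number of files copied.
    """
    if split not in ("train_nsynth", "val_nsynth"):
        raise ValueError("split must be 'train_nsynth' or 'val_nsynth'")
    # Sorting before seeded sampling makes the selection reproducible.
    wavs = sorted(Path(src_dir).glob("*.wav"))
    rng = random.Random(seed)
    chosen = rng.sample(wavs, k=min(n_samples, len(wavs)))
    out_dir = Path(dataset_dir) / split / "wav_files"
    out_dir.mkdir(parents=True, exist_ok=True)
    for wav in chosen:
        shutil.copy2(wav, out_dir / wav.name)
    return len(chosen)


if __name__ == "__main__":
    src = Path("nsynth-train/audio")  # assumed location of extracted NSynth files
    if src.is_dir():
        n = copy_nsynth_subset(src, Path("root/data/reduced_simple_osc"),
                               "train_nsynth", 18_000)
        print(f"copied {n} files")
```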

The directory structure should resemble the hierarchy below:

    root/
    └── data/
        └── {dataset_name}/
            ├── train/                    # Generated by create_data.py script
            │   ├── wav_files/
            │   │   └── {sound_id}.wav    # Generated files
            │   ├── commit_and_args.txt   # Generated files
            │   ├── params_dataset.csv    # Generated files
            │   └── params_dataset.pkl    # Generated files
            ├── val/                      # Generated by create_data.py script
            │   ├── wav_files/
            │   │   └── {sound_id}.wav    # Generated files
            │   ├── commit_and_args.txt   # Generated files
            │   ├── params_dataset.csv    # Generated files
            │   └── params_dataset.pkl    # Generated files
            ├── val_nsynth/
            │   └── wav_files/
            │       └── {sound_id}.wav
            └── train_nsynth/
                └── wav_files/
                    └── {sound_id}.wav
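Before launching training, it can help to verify that the data folder matches this layout. The sketch below is a hypothetical checker (not repository code): it reports missing folders or generated files as errors, and splits with fewer `.wav` files than the recommended sizes as warnings. Treating undersized splits as warnings rather than errors is an assumption, since the sizes above are recommendations rather than documented hard limits.

```python
"""Hypothetical pre-training check of the dataset layout in this README.

Folder and file names come from the README; the function and the
warn-below-recommended-size policy are illustrative assumptions.
"""
from pathlib import Path

# Recommended sizes from the README (number of .wav files per split).
RECOMMENDED_SIZES = {
    "train": 50_000,
    "val": 5_000,
    "train_nsynth": 18_000,
    "val_nsynth": 2_000,
}
# Files that create_data.py writes next to wav_files/ in train/ and val/.
GENERATED_FILES = ("commit_and_args.txt", "params_dataset.csv", "params_dataset.pkl")


def check_dataset_layout(dataset_dir: Path) -> tuple[list[str], list[str]]:
    """Return (errors, warnings) for a dataset folder under root/data/."""
    errors, warnings = [], []
    for split, recommended in RECOMMENDED_SIZES.items():
        wav_dir = Path(dataset_dir) / split / "wav_files"
        if not wav_dir.is_dir():
            errors.append(f"missing folder: {wav_dir}")
            continue
        n_wavs = sum(1 for _ in wav_dir.glob("*.wav"))
        if n_wavs == 0:
            errors.append(f"no .wav files in {wav_dir}")
        elif n_wavs < recommended:
            warnings.append(f"{split}: {n_wavs} files (recommended {recommended})")
        # Only the synthetic splits carry the files generated by create_data.py.
        if split in ("train", "val"):
            for name in GENERATED_FILES:
                if not (wav_dir.parent / name).is_file():
                    errors.append(f"missing file: {wav_dir.parent / name}")
    return errors, warnings


if __name__ == "__main__":
    errs, warns = check_dataset_layout(Path("root/data/reduced_simple_osc"))
    for msg in warns:
        print("warning:", msg)
    for msg in errs:
        print("error:", msg)
```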

To train the model, execute the src/main.py script with the following arguments:

- `-g`: The index of the GPU to use.
- `-e`: The name of the experiment, used for storing results and for TensorBoard logging. The experiment data will be stored in `root/experiments/{experiment_name}`.
- `-d`: The dataset to use, specified by the name of the dataset folder in `root/data/{dataset_name}`.
- `-c`: The path to the configuration file, e.g., `root/configs/{config_name}.yaml`.
- `--seed`: The random seed to use (optional).
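The flags above can also be assembled from Python, for instance when launching several experiments from one script. In this minimal sketch, `build_train_cmd` and `experiment_dir` are hypothetical helpers; only the flag names and the `root/experiments/{experiment_name}` output location come from this README, and the script path assumes the command is run from the repository root.

```python
"""Hypothetical helper that assembles a src/main.py training command.

Flag names follow this README; the helpers and their defaults are
illustrative assumptions rather than repository code.
"""
import sys
from pathlib import Path
from typing import Optional


def build_train_cmd(gpu: int, experiment: str, dataset: str, config: str,
                    seed: Optional[int] = None,
                    script: str = "src/main.py") -> list[str]:
    """Return an argv list for main.py; --seed is added only when given."""
    cmd = [sys.executable, script, "-g", str(gpu), "-e", experiment,
           "-d", dataset, "-c", config]
    if seed is not None:
        cmd += ["--seed", str(seed)]
    return cmd


def experiment_dir(root: Path, experiment: str) -> Path:
    """Location of the experiment data, as described in the README."""
    return Path(root) / "experiments" / experiment


if __name__ == "__main__":
    cmd = build_train_cmd(0, "params_only", "modular_chain_dataset",
                          "root/configs/paper_configs_reduced/params_only_config.yaml")
    print(" ".join(cmd))
    print("outputs:", experiment_dir(Path("root"), "params_only"))
```

Keeping `--seed` out of the argument list unless it is set leaves the script's own default seeding behavior untouched.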

Example:

    -g 0 -e params_only -d modular_chain_dataset -c root/configs/paper_configs_reduced/params_only_config.yaml

The paper is available in the repository at `root/paper.pdf`.

Supplementary material is at root/paper_supplementary.

The `examples` directory contains several notebooks to help you get familiar with the DiffMoog synthesizer and its capabilities.

Start with `explore_chains.ipynb` and `create_dataset.ipynb` for an introduction to the synthesizer's structure, configuration, and sound generation.

Use train_model.ipynb and evaluate_model.ipynb to train and evaluate a sound matching model.