The LayoutLMv3 model was proposed in LayoutLMv3: Pre-training for Document AI with Unified Text and Image Masking by Yupan Huang, Tengchao Lv, Lei Cui, Yutong Lu, Furu Wei. LayoutLMv3 simplifies LayoutLMv2 by using patch embeddings (as in ViT) instead of leveraging a CNN backbone, and pre-trains the model on 3 objectives: masked language modeling (MLM), masked image modeling (MIM) and word-patch alignment (WPA).

The abstract from the paper is the following:

Self-supervised pre-training techniques have achieved remarkable progress in Document AI. Most multimodal pre-trained models use a masked language modeling objective to learn bidirectional representations on the text modality, but they differ in pre-training objectives for the image modality. This discrepancy adds difficulty to multimodal representation learning. In this paper, we propose LayoutLMv3 to pre-train multimodal Transformers for Document AI with unified text and image masking. Additionally, LayoutLMv3 is pre-trained with a word-patch alignment objective to learn cross-modal alignment by predicting whether the corresponding image patch of a text word is masked. The simple unified architecture and training objectives make LayoutLMv3 a general-purpose pre-trained model for both text-centric and image-centric Document AI tasks. Experimental results show that LayoutLMv3 achieves state-of-the-art performance not only in text-centric tasks, including form understanding, receipt understanding, and document visual question answering, but also in image-centric tasks such as document image classification and document layout analysis.
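The word-patch alignment (WPA) objective mentioned above can be illustrated with a small sketch. This is not the authors' implementation: the function name `wpa_labels` and its arguments are hypothetical, and it assumes each text token maps to exactly one image patch, whereas a real word may span several patches. A token is labeled aligned when its patch is unmasked and unaligned when its patch is masked. Tokens that are themselves masked for MLM are left out of the WPA loss.

```python
from typing import List, Optional, Set


def wpa_labels(
    token_to_patch: List[int],
    masked_patches: Set[int],
    masked_tokens: Set[int],
) -> List[Optional[int]]:
    """Return one WPA target per text token (illustrative only).

    1    = aligned   (the token's image patch is visible)
    0    = unaligned (the token's image patch is masked)
    None = the token itself is masked for MLM, so it is excluded from the WPA loss
    """
    labels: List[Optional[int]] = []
    for token_idx, patch_idx in enumerate(token_to_patch):
        if token_idx in masked_tokens:
            labels.append(None)
        elif patch_idx in masked_patches:
            labels.append(0)
        else:
            labels.append(1)
    return labels


# Five tokens: tokens 0 and 1 sit on patch 0, the others on patches 1, 2 and 3.
example = wpa_labels([0, 0, 1, 2, 3], masked_patches={1, 3}, masked_tokens={0})
print(example)  # [None, 1, 0, 1, 0]
```

In a real training loop these targets would feed a binary classification head over the text token representations, with the `None` positions ignored by the loss.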

This workflow uses NVIDIA's enterprise containers, which are available on NGC and require a valid API_KEY. These containers provide development tools and frameworks for the AI practitioner and reliable management and orchestration for the IT professional to ensure performance, high availability, and security. For more information on how to obtain access to these containers, check the NVIDIA website.

- Log in to nvcr.io with your enterprise API key.

docker login nvcr.io

- Build the images. This will also compile Tesseract for OCR to run on NVIDIA GPUs.

bash build.sh

- Launch Jupyter Lab and follow the workflow in LayoutLMv3-notebook.ipynb.

docker compose up layoutlmv3-pytorch

- Launch Triton Inference Server with the models from the prior step.

docker compose up layoutlmv3-triton-server

- In another terminal, send a sample inference image.

docker compose up layoutlmv3-triton-client

- Run NVIDIA Model Analyzer. NVIDIA Triton Model Analyzer is a versatile CLI tool that helps with a better understanding of the compute and memory requirements of models served through NVIDIA Triton Inference Server. This enables you to characterize the tradeoffs between different configurations and choose the best one for your use case.

docker compose up layoutlmv3-model-analyzer

- Run NVIDIA Performance Analyzer. The perf_analyzer application generates inference requests to your model and measures the throughput and latency of those requests. To get representative results, perf_analyzer measures the throughput and latency over a time window, and then repeats the measurements until it gets stable values. By default, perf_analyzer uses average latency to determine stability, but you can use the --percentile flag to stabilize results on a latency percentile instead. For example, if --percentile=95 is used, the results will be stabilized using the 95th percentile request latency.

docker compose up layoutlmv3-triton-client-query
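The measure-and-repeat idea described for perf_analyzer can be sketched in a few lines of Python. This is only an illustration of the concept, not perf_analyzer's actual code: the function names, the `tolerance` of 10%, the `history` of three windows, and the `max_trials` limit are all assumptions chosen for the example.

```python
import math
from typing import Callable, List, Optional


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of latencies."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def measure_until_stable(
    run_window: Callable[[], List[float]],
    pct: Optional[float] = None,   # None means "use average latency"
    tolerance: float = 0.10,       # assumed: allowed deviation from the mean
    history: int = 3,              # assumed: number of recent windows compared
    max_trials: int = 10,          # assumed: give up after this many windows
) -> Optional[float]:
    """Repeat measurement windows until the chosen statistic stops moving."""
    recent: List[float] = []
    for _ in range(max_trials):
        latencies = run_window()
        if pct is None:
            stat = sum(latencies) / len(latencies)
        else:
            stat = percentile(latencies, pct)
        recent = (recent + [stat])[-history:]
        if len(recent) == history:
            mean = sum(recent) / history
            if all(abs(s - mean) <= tolerance * mean for s in recent):
                return mean
    return None  # never stabilized within max_trials


# Fake latency windows (ms): the first one contains a warm-up outlier.
windows = iter([[10, 12, 50], [10, 11, 20], [10, 11, 21], [10, 11, 20]])
result = measure_until_stable(lambda: next(windows), pct=95)
print(result)
```

With `pct=95` the first window's outlier dominates the statistic, so the loop keeps measuring until three consecutive windows agree. Passing `pct=None` would compare window averages instead.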

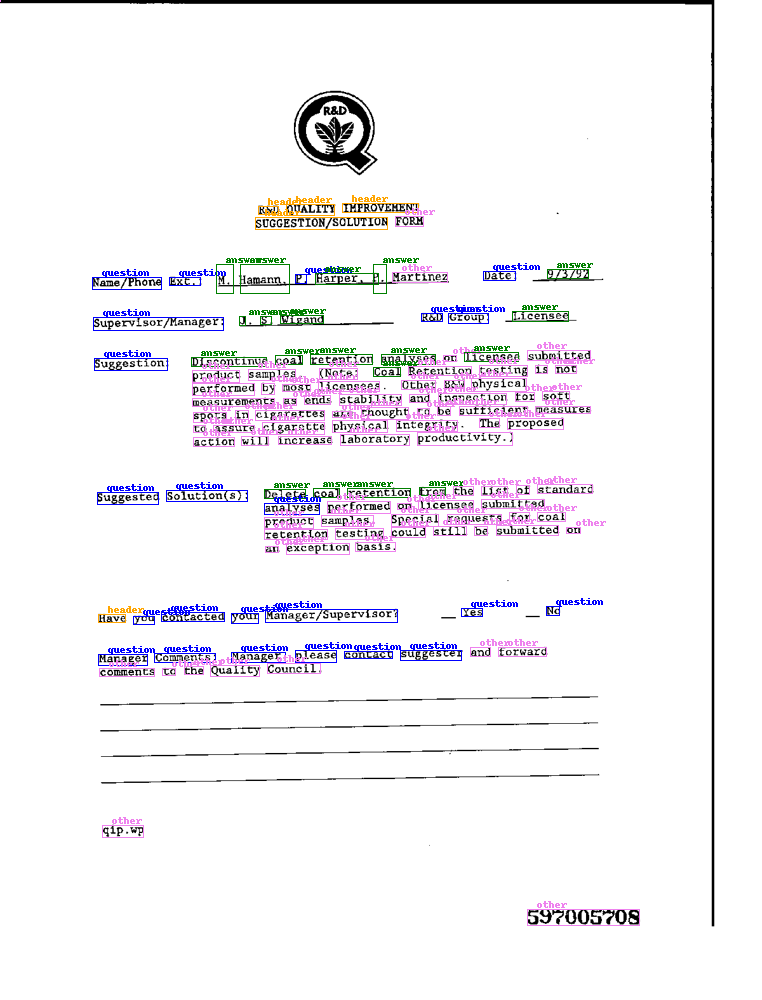

Triton returns 'true_predictions' and 'true_boxes' as arrays. They can be overlaid as labeled boxes on the input image as follows:

    from PIL import ImageDraw, ImageFont

    # simple mapper, also available in pytesseract.py
    def iob_to_label(label):
        # Strip the IOB prefix ("B-" or "I-"); a bare "O" tag becomes "other"
        label = label[2:]
        if not label:
            return "other"
        return label

    # Original user image: `image` is the PIL image that was sent for inference
    draw = ImageDraw.Draw(image)
    font = ImageFont.load_default()

    # Label categories for the model
    label2color = {
        "question": "blue",
        "answer": "green",
        "header": "orange",
        "other": "violet",
    }

    # Draw each predicted box and write its label just above the top-left corner
    for prediction, box in zip(true_predictions, true_boxes):
        predicted_label = iob_to_label(prediction).lower()
        draw.rectangle(box, outline=label2color[predicted_label])
        draw.text(
            (box[0] + 10, box[1] - 10),
            text=predicted_label,
            fill=label2color[predicted_label],
            font=font,
        )

    # Output (in a notebook, the last expression displays the annotated image)
    image