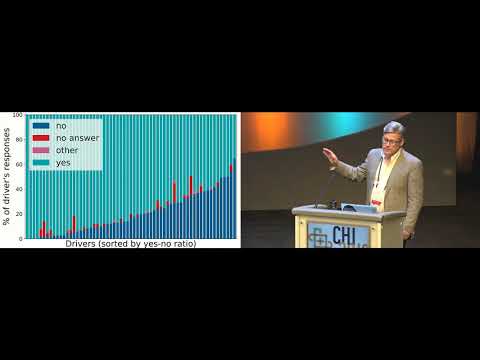

The Is Now a Good Time (INAGT) dataset consists of automotive, physiological, and visual data collected from drivers who self-annotated their responses to the question "Is now a good time?", indicating whether a given moment was a good opportunity to receive non-driving information during a 50-minute drive. We augment the original driver-annotated data with third-party annotations of perceived safety in order to explore potential driver overconfidence. The dataset includes 1915 samples from 46 drivers.

- 1915 samples from 46 drivers along a 28.5 km route

- Yes / No annotations from drivers on whether it is a good or bad time to interact

- Third-party ratings of Safe / Unsafe moments (from MTurk raters)

- Road video, driver face video, driver side video, and driver over shoulder video

- Automotive data (CAN) from a 2015 Toyota Prius

- Physiological data from driver

- Pre-computed road object detection (via I3D Inception V1)

- Pre-computed front body pose (via OpenPose)

- Pre-computed facial landmarks (via OpenPose)

- Pre-computed side body pose (via OpenPose)
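As a rough illustration of how the driver annotations and third-party ratings listed above might be compared, the sketch below computes the share of driver "Yes" responses that raters marked Unsafe. The `Sample` record, its field names, and the `overconfidence_rate` helper are hypothetical and do not reflect the dataset's actual file layout; the records are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """Hypothetical record; field names are assumptions, not the dataset schema."""
    participant: int             # made-up participant ID
    driver_says_good_time: bool  # driver's Yes / No annotation
    rated_safe: bool             # third-party Safe / Unsafe rating


def overconfidence_rate(samples):
    """Fraction of driver "Yes" samples that third-party raters marked Unsafe."""
    yes_samples = [s for s in samples if s.driver_says_good_time]
    if not yes_samples:
        return 0.0
    unsafe_count = sum(not s.rated_safe for s in yes_samples)
    return unsafe_count / len(yes_samples)


# Made-up records for illustration only; not taken from the dataset.
toy_samples = [
    Sample(1, True, True),
    Sample(1, True, False),
    Sample(1, False, False),
    Sample(2, True, True),
]

rate = overconfidence_rate(toy_samples)
print(f"Share of 'Yes' moments rated Unsafe: {rate:.2f}")
```

This is only one possible way to quantify disagreement between drivers and raters; other measures, such as per-participant rates, may suit a given analysis better.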

Sample data from participants 40 and 76

The dataset is available for research use. Interested parties will need IRB approval to use the data. Please fill out our dataset request form to contact us about downloading the full dataset.

Rob Semmens, Nikolas Martelaro, Pushyami Kaveti, Simon Stent, and Wendy Ju. 2019. Is Now A Good Time? An Empirical Study of Vehicle-Driver Communication Timing. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI '19). Association for Computing Machinery, New York, NY, USA, Paper 637, 1–12. DOI:https://doi.org/10.1145/3290605.3300867

Tong Wu, Nikolas Martelaro, Simon Stent, Jorge Ortiz, and Wendy Ju. 2021. Learning When Agents Can Talk to Drivers Using the INAGT Dataset and Multisensor Fusion. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 5, 3, Article 133 (Sept 2021), 28 pages. DOI:https://doi.org/10.1145/3478125

@article{wu_martelaro_2021,
  author = {Wu, Tong and Martelaro, Nikolas and Stent, Simon and Ortiz, Jorge and Ju, Wendy},
  title = {Learning When Agents Can Talk to Drivers Using the INAGT Dataset and Multisensor Fusion},
  year = {2021},
  issue_date = {Sept 2021},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  volume = {5},
  number = {3},
  url = {https://doi.org/10.1145/3478125},
  doi = {10.1145/3478125},
  journal = {Proc. ACM Interact. Mob. Wearable Ubiquitous Technol.},
  month = sep,
  articleno = {133},
  numpages = {28},
  keywords = {multi-modal learning, dataset, interaction timing, deep convolutional network, vehicle}
}