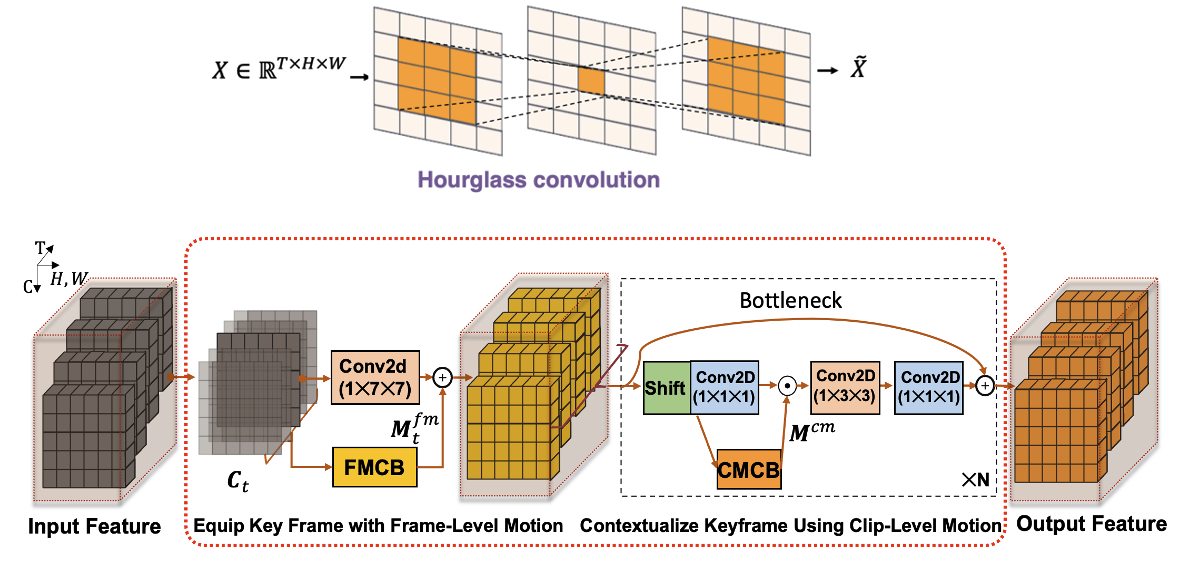

This is the official implementation of the paper "Hierarchical Hourglass Convolutional Network for Efficient Video Classification", which has been accepted by MM 2022. Paper link

- Release the V1 version (the version used in the paper) to the public. The complete code and models will be released soon.

The code is built with the following libraries:

- PyTorch >= 1.7, torchvision

- tensorboardX

For video data pre-processing, you may need ffmpeg.

We need to first extract videos into frames for all datasets (Kinetics-400, Something-Something V1 and V2, Diving48 and EGTEA Gaze+), following the TSN repo.
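As a rough illustration of the frame-extraction step, the sketch below builds an ffmpeg command that dumps every frame of one video into its own folder. It is not the TSN repo's extraction tool: the helper names, the `img_%05d.jpg` file pattern, and the one-folder-per-video layout are assumptions made for this example, so check them against the layout your data loader expects.

```python
import subprocess
from pathlib import Path


def build_ffmpeg_command(video_path, frames_root, pattern="img_%05d.jpg"):
    """Return (command, output_dir) for dumping all frames of one video as JPEGs.

    The file pattern and directory layout are assumptions for this sketch.
    """
    video_path = Path(video_path)
    # One sub-directory per video, named after the file without its extension.
    out_dir = Path(frames_root) / video_path.stem
    command = [
        "ffmpeg",
        "-i", str(video_path),   # input video
        "-q:v", "2",             # JPEG quality scale (lower means higher quality)
        str(out_dir / pattern),  # numbered output frames
    ]
    return command, out_dir


def extract_frames(video_path, frames_root):
    """Create the output directory and run ffmpeg (ffmpeg must be on PATH)."""
    command, out_dir = build_ffmpeg_command(video_path, frames_root)
    out_dir.mkdir(parents=True, exist_ok=True)
    subprocess.run(command, check=True)
    return out_dir
```

Calling `extract_frames("videos/abc.mp4", "frames")` would write the frames under `frames/abc/`.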

The implementation of H2CN builds on the TSN, TSM, and TDN codebases.

Here we provide some of the pretrained models.

The Something-Something V1 and V2 datasets depend heavily on temporal information. Here, we report performance at 224×224 resolution.

| Model | Frame * view | Top-1 Acc. | Top-5 Acc. | Checkpoint |
|---|---|---|---|---|
| H2CN | 8 * 1 | 53.6% | 81.4% | |
| H2CN | 16 * 1 | 55.0% | 82.4% | |
| H2CN | (8+16) * 1 | 56.7% | 83.2% | |

| Model | Frame * view | Top-1 Acc. | Top-5 Acc. | Checkpoint |
|---|---|---|---|---|
| H2CN | 8 * 1 | 65.2% | 89.7% | |
| H2CN | 16 * 1 | 66.4% | 90.1% | |
| H2CN | (8+16) * 1 | 67.9% | 91.2% | |

| Model | Frame * view | Top-1 Acc. | Top-5 Acc. | Checkpoint |
|---|---|---|---|---|
| H2CN | 8 * 30 | 76.9% | 93.0% | link(password:defq) |
| H2CN | 16 * 30 | 77.9% | 93.3% | link(password:vert) |
| H2CN | (8+16) * 30 | 78.7% | 93.6% | |

| Model | Frame * view | Top-1 Acc. | Checkpoint |
|---|---|---|---|
| H2CN | 16 * 1 | 87.0% | |

| Model | Frame * view * clip | Split1 | Split2 | Split3 |
|---|---|---|---|---|
| H2CN | 8 * 1 * 1 | 66.2% | 63.9% | 60.5% |

python train.py

We use the test code and protocol of the TDN repo.

- For center-crop, single-clip testing, the procedure has 2 steps:

- Run the following testing scripts:

CUDA_VISIBLE_DEVICES=0 python3 test_models_center_crop.py something \
--archs='resnet50' --weights <your_checkpoint_path> --test_segments=8 \
--test_crops=1 --batch_size=16 --gpus 0 --output_dir <your_pkl_path> -j 4 --clip_index=0

- Run the following script to get the result from the raw scores:

python3 pkl_to_results.py --num_clips 1 --test_crops 1 --output_dir <your_pkl_path>


- For 3-crop, 10-clip testing, the procedure has 2 steps:

- Run the following testing script 10 times (clip_index from 0 to 9):

CUDA_VISIBLE_DEVICES=0 python3 test_models_three_crops.py kinetics \
--archs='resnet50' --weights <your_checkpoint_path> --test_segments=8 \
--test_crops=3 --batch_size=16 --full_res --gpus 0 --output_dir <your_pkl_path> \
-j 4 --clip_index <your_clip_index>

- Run the following script to ensemble the raw scores of the 30 views:

python pkl_to_results.py --num_clips 10 --test_crops 3 --output_dir <your_pkl_path>
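The ten three-crop runs (clip_index 0 to 9) can be generated rather than typed by hand. The sketch below only assembles and prints the command lines, reusing the flags shown in this README; the helper names `build_test_command` and `build_all_commands` are hypothetical and not part of the repository.

```python
import shlex


def build_test_command(clip_index, checkpoint, output_dir,
                       dataset="kinetics", segments=8):
    """Mirror the three-crop test command from this README for one clip index."""
    return [
        "python3", "test_models_three_crops.py", dataset,
        "--archs=resnet50",
        "--weights", checkpoint,
        f"--test_segments={segments}",
        "--test_crops=3",
        "--batch_size=16",
        "--full_res",
        "--gpus", "0",
        "--output_dir", output_dir,
        "-j", "4",
        "--clip_index", str(clip_index),
    ]


def build_all_commands(checkpoint, output_dir, num_clips=10):
    """One command per clip index, from 0 to num_clips - 1."""
    return [build_test_command(i, checkpoint, output_dir)
            for i in range(num_clips)]


if __name__ == "__main__":
    # Print the commands so they can be reviewed before running them.
    for cmd in build_all_commands("<your_checkpoint_path>", "<your_pkl_path>"):
        print("CUDA_VISIBLE_DEVICES=0 " + shlex.join(cmd))
```

Once all ten runs have written their raw scores to the same output directory, the `pkl_to_results.py` step above combines them.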

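To make the idea of combining 30 views (10 clips × 3 crops) concrete, here is a minimal sketch that averages per-class scores across views and then ranks the classes. This is not the code of `pkl_to_results.py`: the pickle layout is not described here, and plain averaging is an assumption about how the views are combined. The function names are hypothetical.

```python
def average_views(view_scores):
    """Average per-class scores over all views of one video.

    view_scores: list of views, each a list of per-class scores.
    Assumption for this sketch: every view has the same number of classes.
    """
    num_views = len(view_scores)
    num_classes = len(view_scores[0])
    return [sum(view[c] for view in view_scores) / num_views
            for c in range(num_classes)]


def top_k(scores, k=5):
    """Indices of the k highest scores, best first."""
    ranked = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
    return ranked[:k]
```

A video counts as a top-1 hit when its label equals `top_k(avg, k=1)[0]`, and as a top-5 hit when the label appears in `top_k(avg, k=5)`.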

@article{H2CN2022,
  title={Hierarchical Hourglass Convolutional Network for Efficient Video Classification},
  author={Yi Tan and Yanbin Hao and Hao Zhang and Shuo Wang and Xiangnan He},
  journal={MM 2022},
}

Thanks to the following GitHub projects: