[2024.9.26] 🔥🔥🔥 Our MECD has been accepted to NeurIPS 2024 as a Spotlight paper!

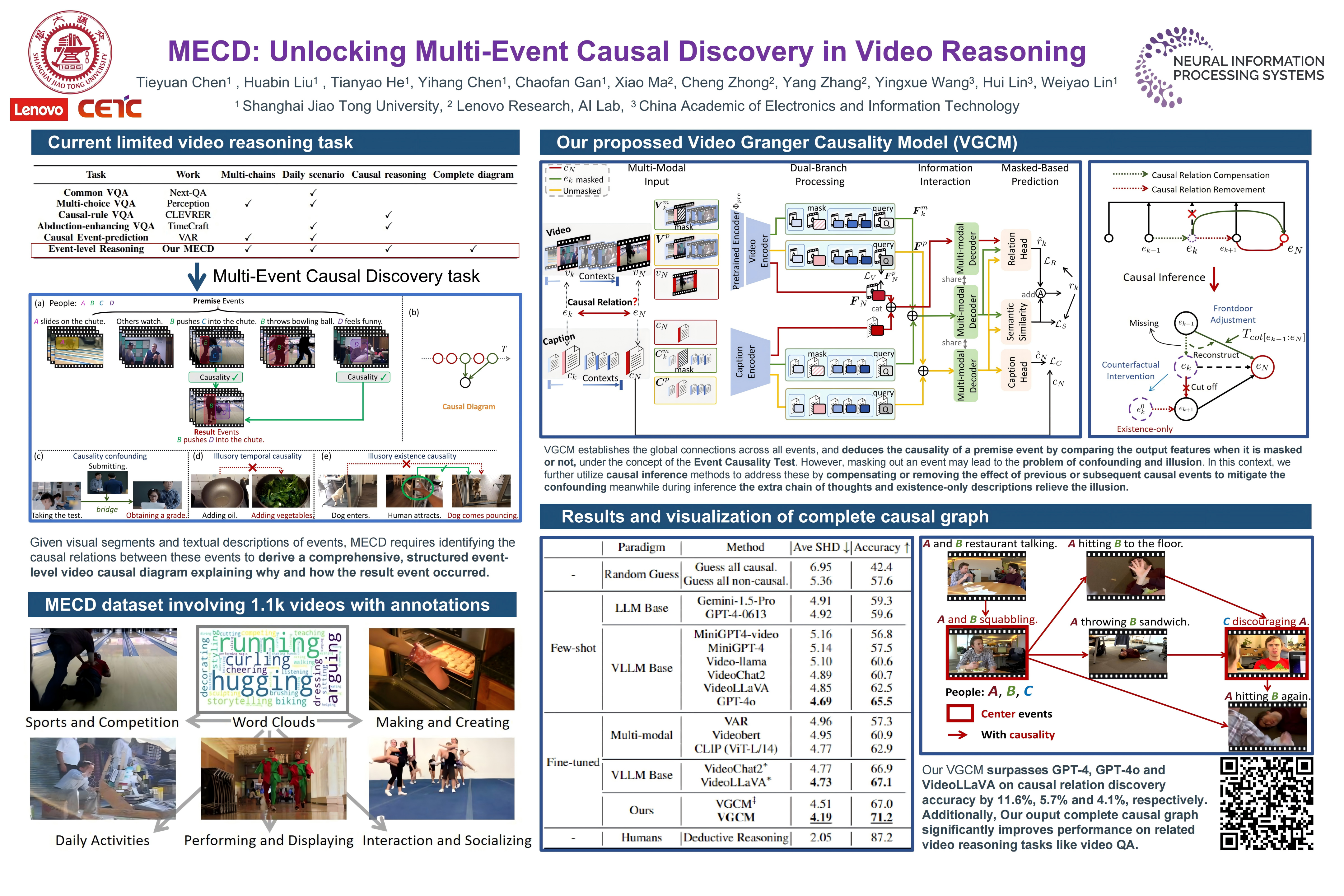

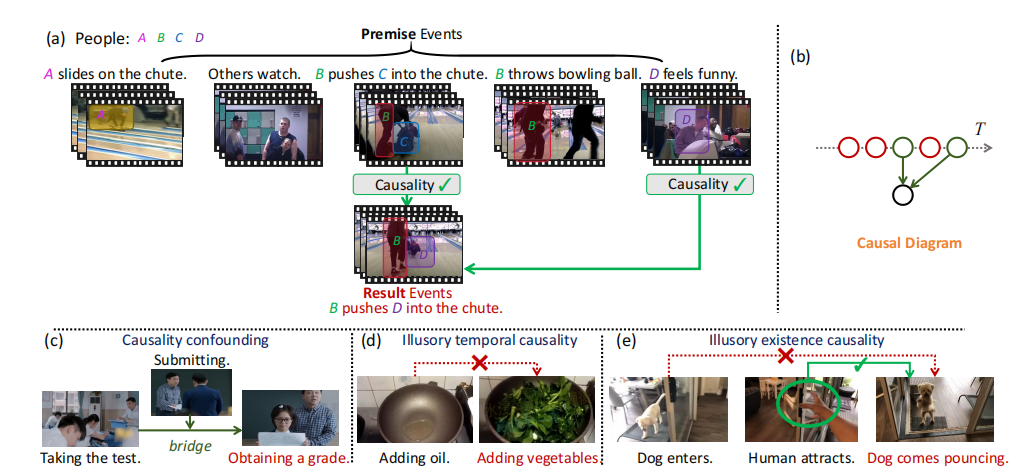

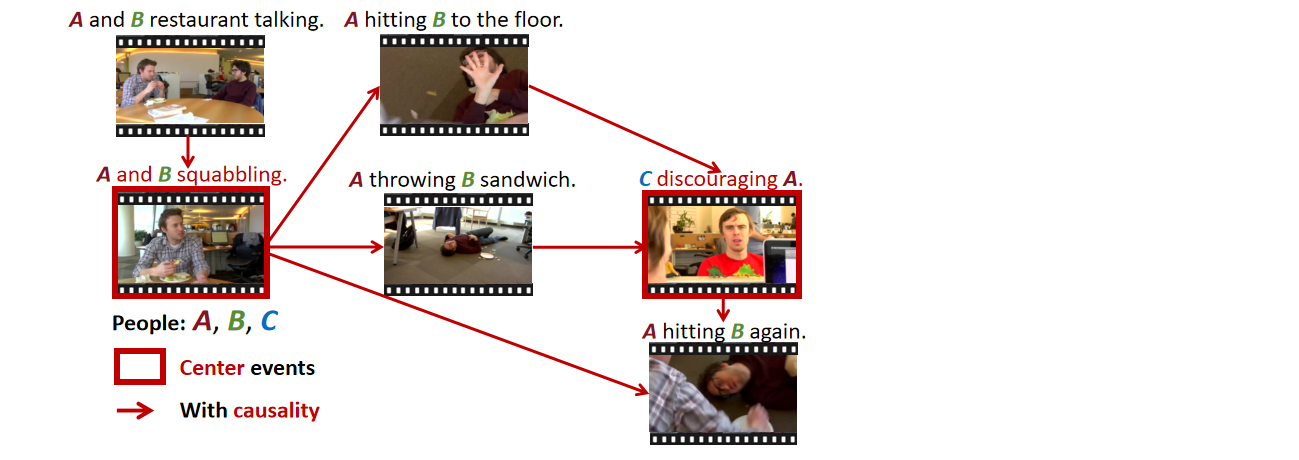

Video causal reasoning aims to achieve a high-level understanding of video content from a causal perspective. However, current video reasoning tasks are limited in scope, primarily executed in a question-answering paradigm and focusing on short videos containing only a single event and simple causal relations. To fill this gap, we introduce a new task and dataset, Multi-Event Causal Discovery (MECD). It aims to uncover the causal relations between events distributed chronologically across long videos.

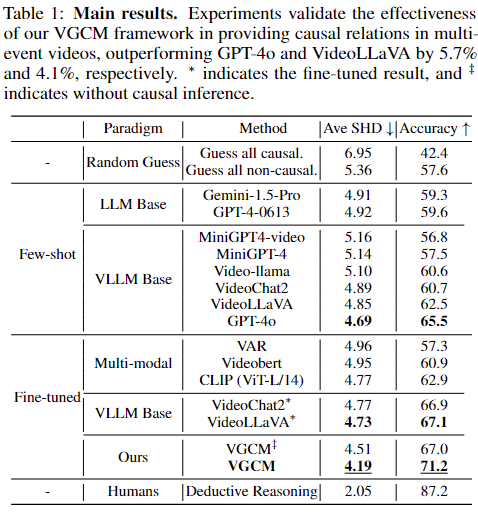

To address MECD, we devise a novel framework inspired by the Granger Causality method, using an efficient mask-based event prediction model to perform an Event Granger Test, which estimates causality by comparing the predicted result event when premise events are masked versus unmasked. Furthermore, we integrate causal inference techniques such as front-door adjustment and counterfactual inference to address challenges in MECD like causality confounding and illusory causality.
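The masked-versus-unmasked comparison can be illustrated with a toy sketch. This is not the VGCM implementation: `toy_predict`, the zero-vector mask, the squared-error score, and the `threshold` parameter are all assumptions made for illustration, and the real model is a learned mask-based event predictor.

```python
from typing import Callable, List

Vector = List[float]


def squared_error(a: Vector, b: Vector) -> float:
    """Sum of squared differences between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def event_granger_test(
    premises: List[Vector],
    result: Vector,
    predict: Callable[[List[Vector]], Vector],
    threshold: float = 0.0,
) -> List[int]:
    """Return one 0/1 label per premise event.

    A premise is labeled causal (1) when masking it makes the predicted
    result event worse by more than `threshold` (an assumed criterion).
    """
    base_error = squared_error(predict(premises), result)
    relations = []
    for k in range(len(premises)):
        # Mask premise k with a zero vector (assumed masking scheme).
        masked = [
            [0.0] * len(p) if i == k else list(p)
            for i, p in enumerate(premises)
        ]
        masked_error = squared_error(predict(masked), result)
        relations.append(1 if masked_error - base_error > threshold else 0)
    return relations


def toy_predict(premises: List[Vector]) -> Vector:
    """Hypothetical predictor: the result simply copies the first premise."""
    return list(premises[0])


premises = [[1.0, 2.0], [5.0, 5.0]]
result = [1.0, 2.0]
relations = event_granger_test(premises, result, toy_predict)
print(relations)
```

In this toy setup only the first premise influences the prediction, so masking it is the only change that increases the error.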

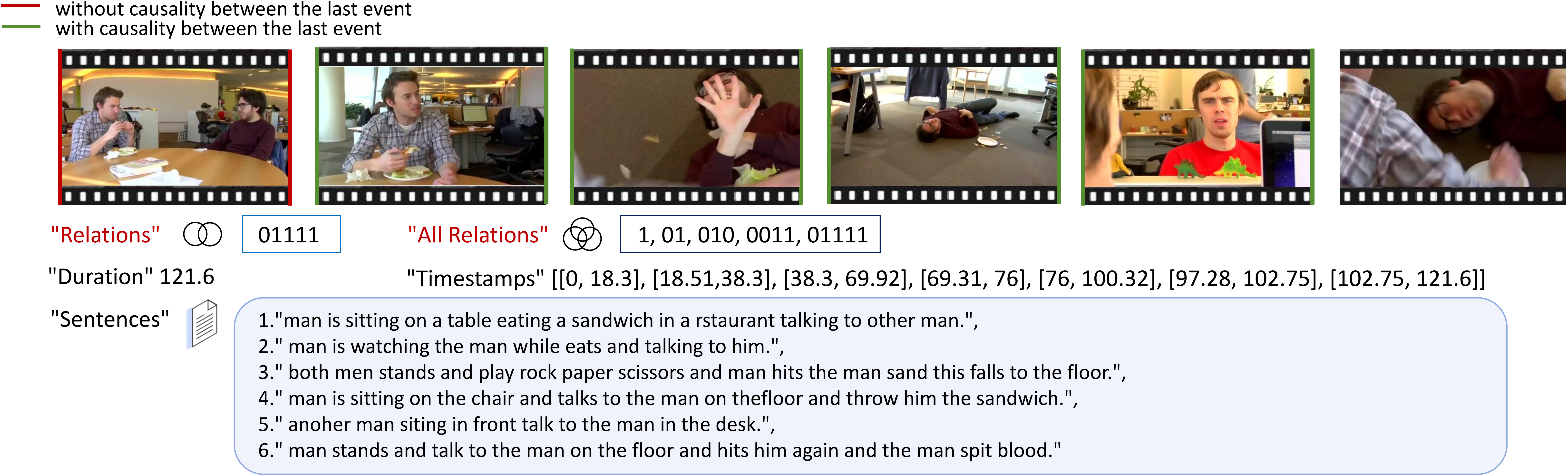

An example causality diagram:

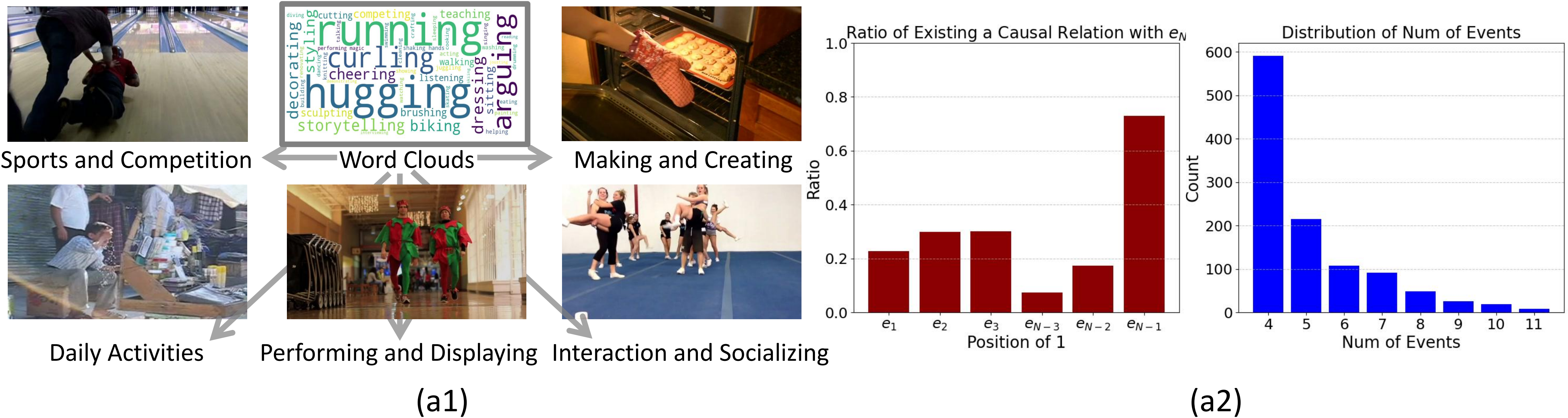

Our MECD dataset contains 806 videos in the training set and 299 videos in the test set.

Training set annotations: captions/train.json, which introduces the causal relation attribute 'relation'.

Test set annotations: captions/test.json, which introduces the same 'relation' attribute.

Full causal relation diagram annotations for the test set are in captions/test_complete.json. It introduces an additional attribute, 'all_relation', for complete causal graph reasoning, which is evaluated with the 'Average_Structural_Hamming_Distance (Ave SHD)' metric.
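As a rough illustration of the Ave SHD metric, the sketch below counts the edge labels that differ between a predicted and an annotated causal graph, then averages that count over videos. It assumes each graph is given as a flat list of 0/1 edge labels in a fixed order; the actual layout of 'all_relation' and the exact evaluation code in this repository may differ.

```python
from typing import List


def structural_hamming_distance(pred: List[int], gold: List[int]) -> int:
    """Number of edge labels that differ between prediction and annotation."""
    if len(pred) != len(gold):
        raise ValueError("prediction and annotation must cover the same edges")
    return sum(1 for p, g in zip(pred, gold) if p != g)


def average_shd(preds: List[List[int]], golds: List[List[int]]) -> float:
    """Mean structural Hamming distance over a set of videos."""
    if not preds or len(preds) != len(golds):
        raise ValueError("need the same non-zero number of predictions and annotations")
    total = sum(structural_hamming_distance(p, g) for p, g in zip(preds, golds))
    return total / len(preds)


# Two hypothetical videos: one wrong edge in the first, two in the second.
score = average_shd([[1, 0, 1], [0, 0]], [[1, 1, 1], [1, 1]])
print(score)
```

A lower value means the predicted causal graphs are closer to the annotated ones.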

An annotation example (a display version of the annotations can also be viewed on Hugging Face):

The videos can be downloaded from the official ActivityNet website, https://activity-net.org/, using the video IDs we provide.

The pretraining features extracted by ResNet200 can be obtained with the command below (details can be found in VAR):

python feature_kit/extract_feature.py

To train and validate our VGCM (Video Granger Causality Model), run the command below:

sh scripts/train.sh

To reproduce our results in the above table, use the default hyperparameter settings in src/runner.py and scripts/train.sh.

We fine-tune the vision-language projectors of 🦙Video-LLaVA and 🦜VideoChat2 using LoRA, following their official implementations, on our entire MECD training set.

During fine-tuning, the relation is converted into a list of length (n-1), and the conversation template supplies the VLLM with this regular pattern of causality representation.
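A minimal sketch of this conversion is shown below, assuming n event captions and n-1 binary labels that say whether each earlier event causes the last one. The function name `build_finetune_sample`, the dictionary keys, the space separator, and the example captions are hypothetical; the actual formatting lives in the conversation files of this repository.

```python
from typing import Dict, List


def build_finetune_sample(captions: List[str], relation: List[int]) -> Dict[str, str]:
    """Pair the event descriptions with a target list of length n-1."""
    n = len(captions)
    if len(relation) != n - 1:
        raise ValueError(f"expected {n - 1} labels, got {len(relation)}")
    if any(r not in (0, 1) for r in relation):
        raise ValueError("labels must be 0 (non-causal) or 1 (causal)")
    return {
        # Event descriptions joined by a single space (assumed separator).
        "events": " ".join(captions),
        # Target string with one label per premise event.
        "target": "[" + ", ".join(str(r) for r in relation) + "]",
    }


# Illustrative captions, not taken from the dataset.
sample = build_finetune_sample(
    ["A man lights a match.", "He drops it on dry grass.", "The grass catches fire."],
    [1, 1],
)
print(sample["target"])
```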

The task prompt can be found in mecd_vllm_finetune/Video-LLaVA-ft/videollava/conversation.py and mecd_vllm_finetune/VideoChat2-ft/multi_event.py:

system = "Task: The video consists of n events,

and the text description of each event has been given correspondingly(separated by " ",).

You need to judge whether the former events in the video are the cause of the last event or not,

the probability of the cause 0(non-causal) or 1(causal) is expressed as the output, "

Please follow the commands below to reproduce the fine-tuning results on our MECD benchmark:

Evaluate the causal discovery ability of 🦙Video-LLaVA after fine-tuning:

cd mecd_vllm_finetune/Video-LLaVA-ft

sh scripts/v1_5/finetune_lora.sh

python videollava/eval/video/run_inference_causal_inference.py

Evaluate the causal discovery ability of 🦜VideoChat2 after fine-tuning:

cd mecd_vllm_fewshot/VideoChat2-ft

OMP_NUM_THREADS=2 torchrun --nnodes=1 --nproc_per_node=8 tasks/train_it.py ./scripts/videochat_mistral/config_7b_stage3.py

python multi_event.py

All LLM-based and VLLM-based models are evaluated under a few-shot setting (In-Context Learning).

Specifically, following the approach used for causal discovery in NLP tasks, and after verifying that this number is sufficient, three representative examples are provided during inference. They can be found in mecd_llm_fewshot/prompt.txt, mecd_vllm_fewshot/video_chat2/multi_event.py, and mecd_vllm_fewshot/Video-LLaVA/videollava/conversation.py.
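The few-shot setting can be pictured as a task instruction followed by solved examples and then the unanswered query. The sketch below is only an illustration: `build_fewshot_prompt`, the "Events:" and "Answer:" labels, and the placeholder examples are assumptions, and the real examples are the ones stored in the files listed above.

```python
from typing import List, Tuple


def build_fewshot_prompt(
    task: str,
    examples: List[Tuple[str, str]],
    query_events: str,
) -> str:
    """Concatenate the task text, solved examples, and the open query."""
    parts = [task]
    for events, answer in examples:
        parts.append(f"Events: {events}\nAnswer: {answer}")
    # The query ends with an empty answer slot for the model to complete.
    parts.append(f"Events: {query_events}\nAnswer:")
    return "\n\n".join(parts)


# Three placeholder examples standing in for the real in-context examples.
examples = [
    ("event A1. event A2. event A3.", "[1, 0]"),
    ("event B1. event B2.", "[1]"),
    ("event C1. event C2. event C3.", "[0, 1]"),
]
prompt = build_fewshot_prompt(
    "Judge whether each former event causes the last event.",
    examples,
    "event Q1. event Q2. event Q3.",
)
print(prompt)
```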

Run the following commands to evaluate the in-context causal discovery ability of Video-LLaVA:

cd mecd_vllm_fewshot/Video-LLaVA

python videollava/eval/video/run_inference_causal_inference.py

Similarly, run the following commands to evaluate the in-context causal discovery ability of VideoChat2:

cd mecd_vllm_fewshot/VideoChat2

python multi_event.py

Similarly, run the following commands to evaluate the in-context causal discovery ability of GPT-4:

cd mecd_llm_fewshot

python gpt4.py

Similarly, run the following commands to evaluate the in-context causal discovery ability of Gemini-pro:

cd mecd_llm_fewshot

python gemini.py

First, you will need to set up the environment and extract the pretraining features of each video. We offer an environment suitable for both VGCM and all VLLMs:

conda create -n mecd python=3.10

conda activate mecd

pip install -r requirements.txt

The pretrained weights of VGCM are available on Google Drive.

@inproceedings{chen2024mecd,

title={{MECD}: Unlocking Multi-Event Causal Discovery in Video Reasoning},

author={Chen, Tieyuan and Liu, Huabin and He, Tianyao and Chen, Yihang and Gan, Chaofan and Ma, Xiao and Zhong, Cheng and Zhang, Yang and Wang, Yingxue and Lin, Hui and others},

booktitle={The Thirty-eighth Annual Conference on Neural Information Processing Systems},

year={2024}

}

We would also like to recognize and commend the following open source projects. Thank you for your great contributions to the open source community:

We would like to express our sincere gratitude to the PCs, SACs, ACs, as well as Reviewers 17Ce, 2Vef, eXFX, and 9my4, for their constructive feedback and support provided during the review process of NeurIPS 2024. Their insightful comments have been instrumental in enhancing the quality of our work.

We will continue to update the performance of new state-of-the-art (SOTA) models on the MECD dataset, such as VideoLLaMA 2, PLLaVA, OpenAI-o1, etc., and we will also continuously expand the volume of data and video sources in MECD.