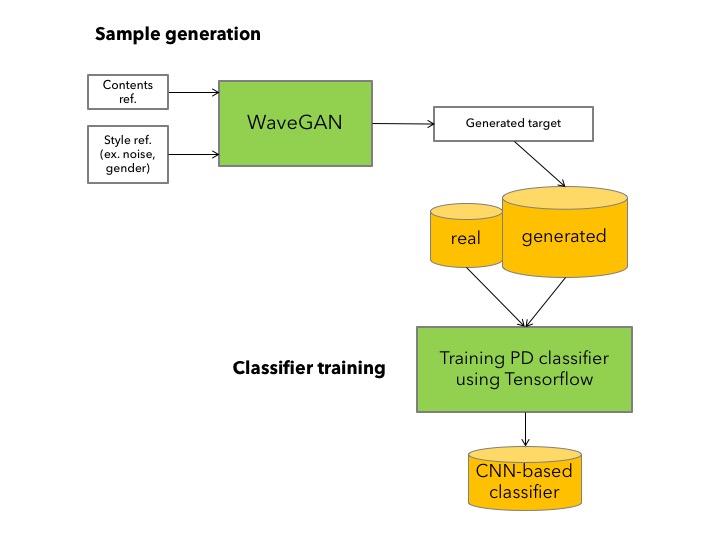

In this project we use deep learning to classify Parkinson's disease into four classes. To build an accurate model, we synthesized speech using WaveGAN [paper] and augmented speech using a newly developed GAN [github repo].

Parkinson's disease (PD) affects more than 10 million people worldwide. However, the conventional way of diagnosing PD, which relies on a physician's judgment, is time-consuming and often inaccurate. We therefore aim to develop an accurate deep learning model that diagnoses PD from the vocal symptoms of hypokinetic dysarthria unique to PD. Because deep learning does not require the complex feature extraction and selection steps that conventional machine learning depends on, we were able to improve both the efficiency and the accuracy of the classification model. Furthermore, parameters such as voice volume, recording quality, and the patient's ethnicity may confound the diagnosis. We propose using a Generative Adversarial Network (GAN) to map these parameter values onto a standard threshold. When the revised inputs were fed into a network trained specifically on inputs at that threshold, the classification accuracy was higher.
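For reference, the adversarial objective from Goodfellow et al. (2014), listed in the references, trains a generator $G$ to turn a noise vector $z$ into realistic samples while a discriminator $D$ learns to tell real recordings $x$ from generated ones. This is only the generic formulation; the GANs used in this project may use a different loss (WaveGAN, for example, is trained with the WGAN-GP objective).

$$
\min_{G}\max_{D}\; \mathbb{E}_{x\sim p_{\mathrm{data}}}\big[\log D(x)\big] + \mathbb{E}_{z\sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
$$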

Prerequisites:

- Software

  - TensorFlow >= 1.4

  - Python 3.6

- Hardware

Datasets:

- Noisy DEMAND 2

- Speech Commands Zero through Nine (SC09)

- TensorFlow Challenge Speech Commands data (full)

- Parkinson's Disease Extended Vowel Sound dataset (not public)

We use a four-class classifier based on a convolutional neural network (CNN) [baseline] [paper]. Run the classifier with:

```bash
python3 classifier/speech_classifier/speech.py
```

The training data must be organized with one subdirectory per class, for example:

```
data/train/
├── healthy/
│   ├── audio_0.wav
│   └── audio_1.wav
├── pd_1/
│   ├── audio_2.wav
│   └── audio_3.wav
└── pd_2/
    ├── audio_4.wav
    └── audio_5.wav
```
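A minimal sketch of how this layout can be turned into labeled examples, assuming labels are assigned by sorting the class directory names (the actual scheme in `speech.py` may differ). The function name `list_labeled_audio` is hypothetical, and only the Python standard library is used.

```python
from pathlib import Path


def list_labeled_audio(root):
    """Collect (wav_path, label) pairs from a root/<class_name>/*.wav layout.

    Hypothetical helper: labels are assigned by sorting the class
    directory names, so for the example tree "healthy" -> 0,
    "pd_1" -> 1 and "pd_2" -> 2.
    """
    root = Path(root)
    # Every subdirectory of the root is treated as one class.
    class_names = sorted(p.name for p in root.iterdir() if p.is_dir())
    label_of = {name: idx for idx, name in enumerate(class_names)}

    samples = []
    for name in class_names:
        # Only *.wav files are collected; anything else is ignored.
        for wav_path in sorted((root / name).glob("*.wav")):
            samples.append((str(wav_path), label_of[name]))
    return class_names, samples
```

On the example tree, `list_labeled_audio("data/train")` would return `["healthy", "pd_1", "pd_2"]` as the class names and six `(path, label)` pairs. Sorting makes the label assignment deterministic regardless of the order in which the filesystem lists directories.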

We use WaveGAN and a newly proposed GAN model to synthesize speech from the training data.
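WaveGAN (Donahue et al., 2018) generates fixed-length clips of 16384 samples, slightly more than one second at 16 kHz, so training recordings have to be brought to that length first. The sketch below, assuming 16-bit mono PCM WAV input, reads a file with the standard `wave` module and then zero-pads or center-crops it. The helper names are hypothetical, and the center crop is our choice, not necessarily what this repository does.

```python
import struct
import wave

# WaveGAN's output length: 16384 samples (about 1.02 s at 16 kHz).
WAVEGAN_SLICE_LEN = 16384


def load_wav_as_floats(path):
    """Hypothetical helper: read 16-bit mono PCM into floats in [-1.0, 1.0)."""
    with wave.open(path, "rb") as wf:
        if wf.getsampwidth() != 2 or wf.getnchannels() != 1:
            raise ValueError("expected 16-bit mono PCM")
        n_frames = wf.getnframes()
        raw = wf.readframes(n_frames)
        rate = wf.getframerate()
    # Little-endian signed 16-bit integers, one per frame for mono audio.
    ints = struct.unpack("<%dh" % n_frames, raw)
    return [s / 32768.0 for s in ints], rate


def fit_to_slice(samples, length=WAVEGAN_SLICE_LEN):
    """Hypothetical helper: zero-pad or center-crop to exactly `length` samples."""
    if len(samples) >= length:
        start = (len(samples) - length) // 2
        return samples[start:start + length]
    return samples + [0.0] * (length - len(samples))
```

Resampling is not handled here, so recordings should already be at 16 kHz before they are passed to `fit_to_slice`.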

References:

- Little, Max A., et al. "Suitability of dysphonia measurements for telemonitoring of Parkinson's disease." IEEE Transactions on Biomedical Engineering. 2009. [paper]

- Tsanas, Athanasios, et al. "Novel Speech Signal Processing Algorithms for High-Accuracy Classification of Parkinson's Disease." IEEE Transactions on Biomedical Engineering. 2012. [paper]

- Donahue, Chris, Julian McAuley, and Miller Puckette. "Synthesizing Audio with Generative Adversarial Networks." arXiv preprint arXiv:1802.04208 (2018). [paper]

- Shen, Jonathan, et al. "Natural TTS synthesis by conditioning WaveNet on mel spectrogram predictions." arXiv preprint arXiv:1712.05884 (2017). [paper]

- Perez, Anthony, Chris Proctor, and Archa Jain. "Style transfer for prosodic speech." Tech. Rep., Stanford University, 2017. [paper]

- Goodfellow, Ian, et al. "Generative adversarial nets." Advances in Neural Information Processing Systems. 2014. [paper]

- Salimans, Tim, et al. "Improved techniques for training GANs." Advances in Neural Information Processing Systems. 2016. [paper]

- Grinstein, Eric, et al. "Audio style transfer." arXiv preprint arXiv:1710.11385 (2017). [paper]

- Pascual, Santiago, Antonio Bonafonte, and Joan Serra. "SEGAN: Speech enhancement generative adversarial network." arXiv preprint arXiv:1703.09452 (2017). [paper]

- Jing, Yongcheng, et al. "Neural style transfer: A review." arXiv preprint arXiv:1705.04058 (2017). [paper]

- Van Den Oord, Aäron, et al. "WaveNet: A generative model for raw audio." CoRR abs/1609.03499 (2016). [paper]

- Kingma, Diederik P., and Prafulla Dhariwal. "Glow: Generative flow with invertible 1×1 convolutions." arXiv preprint arXiv:1807.03039 (2018). [paper]

- Kingma, Diederik P., et al. "Semi-supervised learning with deep generative models." Advances in Neural Information Processing Systems. 2014. [paper]


Authors:

- Chae Young Lee - Hankuk Academy of Foreign Studies - [github]

- Anoop Toffy - IIIT Bangalore - [Website]

- Seo Yeon Yang - Seoul National University - [Website]

This project is licensed under the MIT License; see the LICENSE.md file for details.

Acknowledgments:

- Prof. Giovanni Dimauro, Università degli Studi di Bari 'Aldo Moro'

- Dr. Woo-Jin Han, Netmarble IGS

- Dr. Gue Jun Jung, SK Telecom

- TensorFlow Korea

- Google Korea