Md Rizwan Parvez, Tolga Bolukbasi, Kai-Wei Chang, Venkatesh Saligrama: EMNLP-IJCNLP 2019

For details, please refer to this paper.

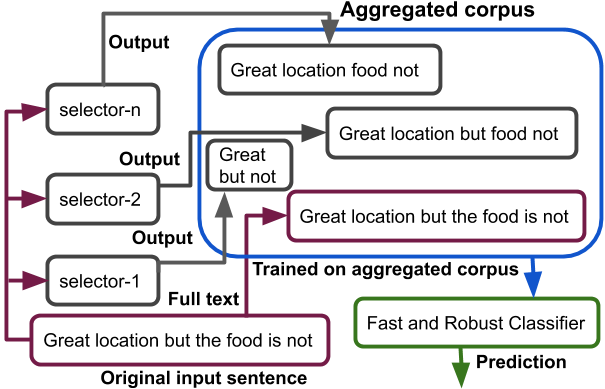

We design a generic framework for learning a robust text classification model that achieves high accuracy under different selection budgets (a.k.a. selection rates) at test time. We take a different approach from existing methods: we learn to dynamically filter out a large fraction of unimportant words with a low-complexity selector, so that any high-complexity classifier only needs to process the small fraction of text relevant to the target task. To this end, we propose a data aggregation method for training the classifier, allowing it to achieve competitive performance on fractured sentences. On four benchmark text classification tasks, we demonstrate that the framework achieves consistent speedups with little degradation in accuracy across various selection budgets.
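To make the selector/classifier split and the data aggregation idea concrete, here is a minimal sketch, assuming a selector that assigns each word an importance score (a plain dict here) and keeps the top fraction of words for a given budget. The names `select_words` and `aggregate_data` and the score dictionary are hypothetical and are not taken from this repository; the actual selectors and training code live in the scripts listed below. The aggregated set pairs each original example with its filtered versions at several budgets, so the classifier also sees fractured text during training.

```python
from typing import Dict, List, Sequence, Tuple

Example = Tuple[List[str], int]  # (tokens, label)


def select_words(tokens: List[str], scores: Dict[str, float], budget: float) -> List[str]:
    """Hypothetical selector: keep about `budget` fraction of tokens with the
    highest scores (at least one), preserving the original word order."""
    if not tokens:
        return []
    k = max(1, int(budget * len(tokens)))
    # Stable sort: ties keep their original order.
    ranked = sorted(range(len(tokens)), key=lambda i: scores.get(tokens[i], 0.0), reverse=True)
    keep = set(ranked[:k])
    return [t for i, t in enumerate(tokens) if i in keep]


def aggregate_data(dataset: List[Example], scores: Dict[str, float],
                   budgets: Sequence[float]) -> List[Example]:
    """Sketch of data aggregation: the original examples plus the selector's
    output for every example at every budget, all with the original labels."""
    aggregated = list(dataset)
    for budget in budgets:
        for tokens, label in dataset:
            aggregated.append((select_words(tokens, scores, budget), label))
    return aggregated


if __name__ == "__main__":
    scores = {"movie": 0.5, "great": 2.0}
    data = [(["the", "movie", "was", "great"], 1)]
    for tokens, label in aggregate_data(data, scores, [0.25, 0.5]):
        print(label, tokens)
```

A classifier trained on the aggregated list would then be evaluated on selector output at whatever budget is chosen at test time.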

Please see the run scripts run_experiments.py and lstm_experiments.py, and the source code in skim_LSTM.py.

See the example source code for the L1-regularized bag-of-words selector: (i) training the model and (ii) generating the selector's output text with the trained model.
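The two steps above can be illustrated with a small, dependency-free sketch. It assumes the selector is an L1-regularized logistic regression over binary bag-of-words features, trained here with proximal gradient descent (soft-thresholding); words whose weight is driven exactly to zero are dropped from the output text. The function names, the hyperparameters `lam`, `lr` and `epochs`, and the choice of optimizer are illustrative assumptions and may differ from the repository's example code.

```python
import math
from typing import Dict, List


def _sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_l1_bow(docs: List[List[str]], labels: List[int],
                 lam: float = 0.01, lr: float = 0.5, epochs: int = 200) -> Dict[str, float]:
    """(i) Train: binary bag-of-words logistic regression with an L1 penalty,
    optimized by full-batch proximal gradient descent. Returns word weights."""
    vocab = sorted({w for d in docs for w in d})
    weights = {v: 0.0 for v in vocab}
    bias = 0.0
    n = len(docs)
    feats = [set(d) for d in docs]  # binary presence features
    for _ in range(epochs):
        grad = {v: 0.0 for v in vocab}
        grad_b = 0.0
        for f, y in zip(feats, labels):
            err = _sigmoid(bias + sum(weights[t] for t in f)) - y
            for t in f:
                grad[t] += err / n
            grad_b += err / n
        bias -= lr * grad_b  # the bias is not penalized
        for v in vocab:
            u = weights[v] - lr * grad[v]
            # Soft-thresholding: the proximal step for the L1 penalty.
            weights[v] = math.copysign(max(abs(u) - lr * lam, 0.0), u)
    return weights


def selector_output(tokens: List[str], weights: Dict[str, float]) -> List[str]:
    """(ii) Generate selector output: keep only words with a nonzero weight."""
    return [t for t in tokens if weights.get(t, 0.0) != 0.0]


if __name__ == "__main__":
    docs = [["great", "movie"], ["terrible", "movie"], ["great", "plot"], ["terrible", "plot"]]
    w = train_l1_bow(docs, [1, 0, 1, 0])
    print(selector_output(["the", "movie", "was", "great"], w))
```

In this toy data, "movie" and "plot" carry no label information, so the L1 penalty zeroes their weights and the selector keeps only the sentiment words. Increasing `lam` would prune more words, which is one way such a selector could trade accuracy for a smaller selection rate.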


Please cite:

@inproceedings{parvez2018building,
  title={Building a Robust Text Classifier on a Test-Time Budget},
  author={Parvez, Md Rizwan and Bolukbasi, Tolga and Chang, Kai-Wei and Saligrama, Venkatesh},
  booktitle={EMNLP},
  year={2019}
}
