A Reinforcement Learning project starter, designed for fast extension, experimentation, and analysis.

There are many great Reinforcement Learning frameworks on GitHub. However, it is usually challenging to figure out how they can be extended for the various purposes that come up in research. Keeping this in mind, this project has been developed around the following goals:

- highly readable code and documentation that make it easier to understand and extend the project for various purposes

- enables fast experimentation: it shouldn't take you more than 5 minutes to submit an experiment idea, no matter how big it is!

- enables fast analysis: all the information necessary for analysing or debugging the experiments should be readily available to you!

- help me improve my software engineering skills and understand reinforcement learning algorithms in greater depth. As Richard Feynman said: "What I cannot create, I do not understand."

All of this contributes to a single idea: you shouldn't spend too much time writing duplicated code; instead, you should focus on generating ideas and evaluating them as fast as possible.

This section walks you through the requirements and installation of this library.

All the prerequisites of this library are listed in the setup.cfg file.

To set up your project, you should follow these steps:

- Clone the project from GitHub:

git clone https://github.com/erfanMhi/base_reinforcement_learning

- Navigate to the root directory:

cd base_reinforcement_learning

- Use pip to install all the dependencies (setting up a new virtual environment for this repo is recommended):

pip install .

- To make sure that the installation is complete and the library works properly, run the tests using:

pytest test

You can run a set of DQN experiments on the CartPole environment by running:

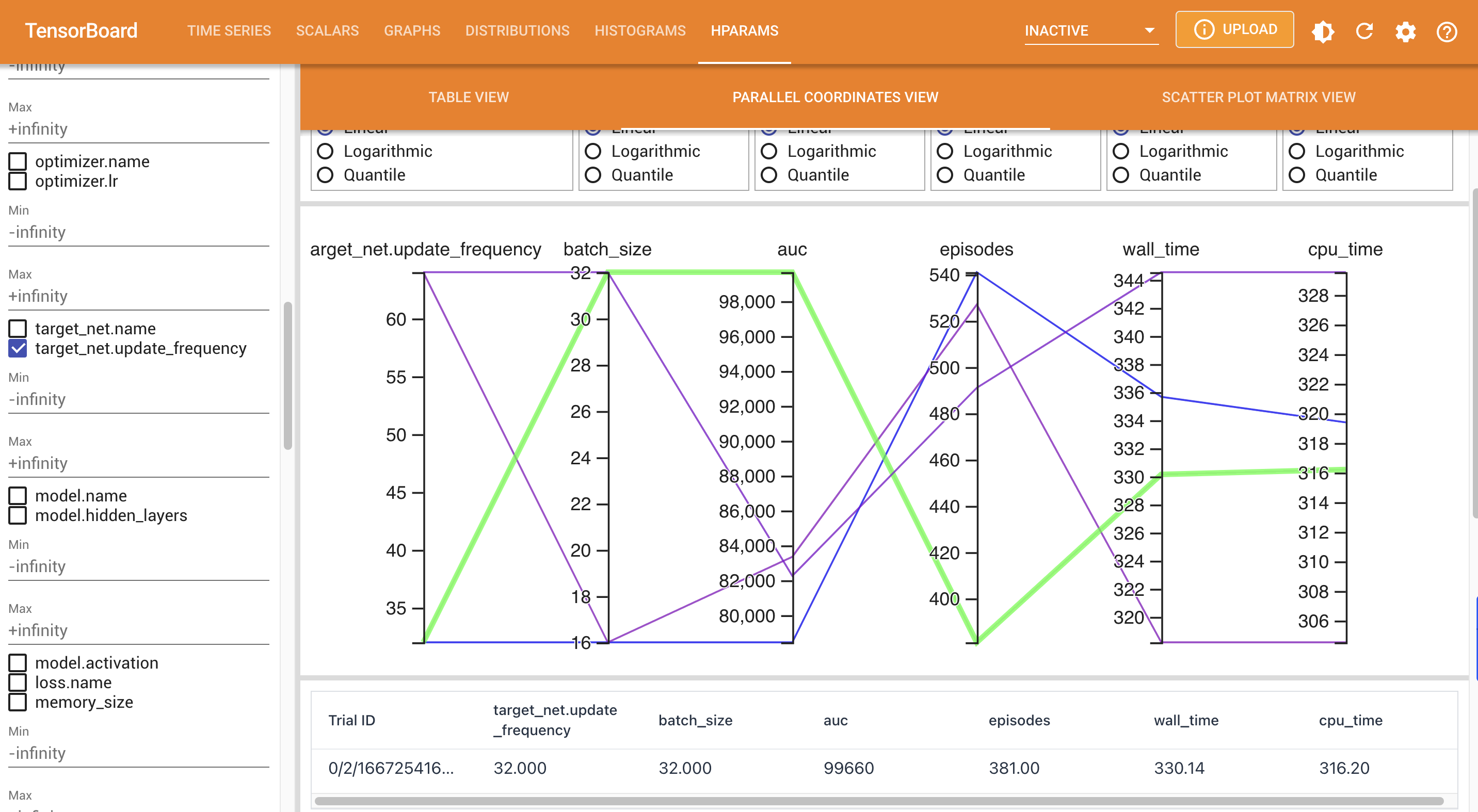

python main.py --config-file experiments/data/configs/experiments_v0/online/cart_pole/dqn/sweep.yaml --verbose debug --workers 4

This experiment tunes the batch_size and memory_size of the replay buffer specified in the config file and reports the most performant parameters. It speeds up the experiments by running 4 parallel processes. The most performant parameters are stored in the experiments/data/results/experiments_v0/online/cart_pole/dqn/sweep directory.
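The selection step can be pictured roughly as follows. This is a minimal sketch, not the project's Tuner code: it assumes each configuration has already been evaluated over several runs and scored by a single number where higher is better (for example, the area under its learning curve). The function name `select_best`, the result layout, and the scores are hypothetical.

```python
from statistics import mean


def select_best(results):
    """Return the configuration key with the highest mean score across runs.

    `results` is a hypothetical mapping from a configuration key to the list
    of per-run scores (higher is better, e.g. area under the learning curve).
    """
    return max(results, key=lambda cfg: mean(results[cfg]))


# Hypothetical sweep over (batch_size, memory_size) with three runs each.
sweep_results = {
    (16, 2500): [120.0, 130.0, 125.0],
    (32, 2500): [140.0, 150.0, 145.0],
    (32, 5000): [110.0, 160.0, 100.0],
}
print(select_best(sweep_results))  # (32, 2500)
```

Averaging over runs, rather than taking the single best run, avoids picking a configuration such as `(32, 5000)` that merely got lucky with one random seed.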

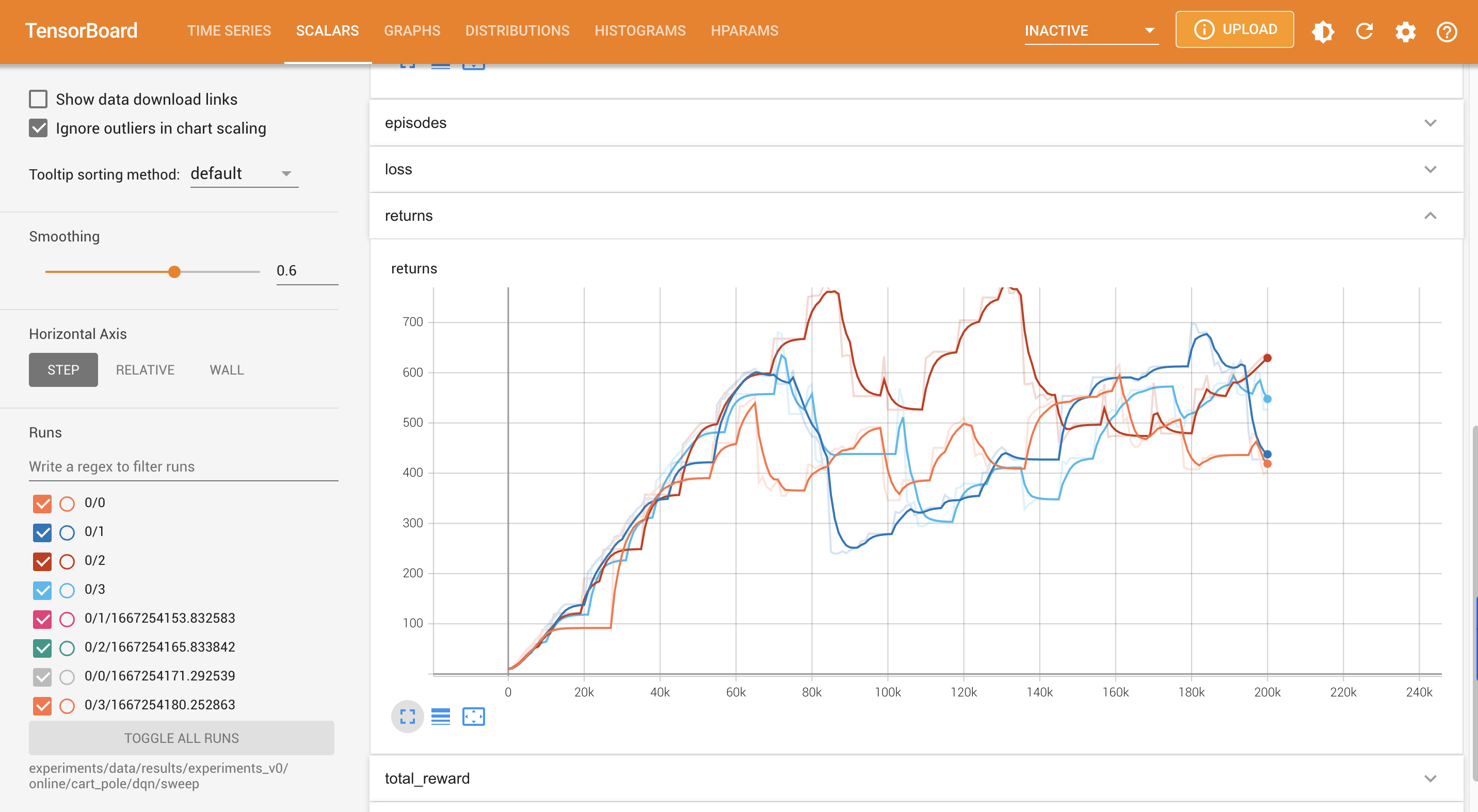

You can now easily analyze the experiments in TensorBoard by running the following command:

tensorboard --logdir experiments/data/results/experiments_v0/online/cart_pole/dqn/sweep

Doing so enables you to quickly analyse many quantities, including, but not limited to:

- The learning curve of each algorithm.
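One way to compute a learning curve and its area under the curve is sketched below. It assumes, based only on the `returns_queue_size` comment in the example config further down, that each curve point is the average of the most recent episode returns. The `LearningCurve` class is hypothetical, and the area is approximated by summing the logged points (a rectangle rule with unit width per logging interval).

```python
from collections import deque


class LearningCurve:
    """Moving-average learning curve over the most recent episode returns."""

    def __init__(self, queue_size=100):
        # Only the last `queue_size` returns contribute to each point.
        self.recent_returns = deque(maxlen=queue_size)
        self.points = []

    def add_episode_return(self, episode_return):
        self.recent_returns.append(episode_return)

    def log_point(self):
        # Record the current moving average (e.g. every log_interval steps).
        if not self.recent_returns:
            raise ValueError("no episode returns recorded yet")
        avg = sum(self.recent_returns) / len(self.recent_returns)
        self.points.append(avg)
        return avg

    def area_under_curve(self):
        # Rectangle-rule approximation: one unit of width per logged point.
        return sum(self.points)


curve = LearningCurve(queue_size=3)
for ret in [10.0, 20.0, 30.0, 40.0]:
    curve.add_episode_return(ret)
    curve.log_point()
print(curve.points)              # [10.0, 15.0, 20.0, 30.0]
print(curve.area_under_curve())  # 75.0
```

With `queue_size=3`, the fourth point averages only 20, 30, and 40, which shows how the queue size controls how much the curve is smoothed.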

To run experiments on this project, you only need to call main.py with the proper arguments:

python main.py [-h] --config-file CONFIG_FILE [--gpu] --verbose VERBOSE [--workers WORKERS] [--run RUN]

The main file for running experiments

optional arguments:

-h, --help show this help message and exit

--config-file CONFIG_FILE Expect a json file describing the fixed and sweeping parameters

--gpu Use GPU: if not specified, use CPU (Multi-GPU is not supported in this version)

--verbose VERBOSE Logging level: info or debug

--workers WORKERS Number of workers used to run the experiments. -1 means that the number of runs are going to be automatically determined

--run RUN Number of times that each algorithm needs to be evaluated
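For readers extending the entry point, a parser producing roughly the help text above could look like this. It is a reconstruction from the help output, not the project's actual main.py: the defaults `workers=1` and `run=1`, the `choices` restriction on `--verbose`, and the `resolve_workers` helper (which assumes -1 means "use all CPU cores") are hypothetical.

```python
import argparse
import os


def build_parser():
    parser = argparse.ArgumentParser(
        description="The main file for running experiments")
    parser.add_argument("--config-file", required=True,
                        help="config file describing the fixed and sweeping parameters")
    parser.add_argument("--gpu", action="store_true",
                        help="Use GPU: if not specified, use CPU")
    parser.add_argument("--verbose", required=True, choices=["info", "debug"],
                        metavar="VERBOSE", help="Logging level: info or debug")
    parser.add_argument("--workers", type=int, default=1,  # default assumed
                        help="Number of workers; -1 means determine automatically")
    parser.add_argument("--run", type=int, default=1,  # default assumed
                        help="Number of times each algorithm is evaluated")
    return parser


def resolve_workers(workers):
    # Hypothetical policy: -1 falls back to the number of CPU cores.
    if workers == -1:
        return os.cpu_count() or 1
    return workers


args = build_parser().parse_args(
    ["--config-file", "sweep.yaml", "--verbose", "debug", "--workers", "4"])
print(args.config_file, args.verbose, resolve_workers(args.workers), args.gpu)
# sweep.yaml debug 4 False
```

argparse converts the dash in `--config-file` to an underscore, so the value is read back as `args.config_file`.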

The --gpu, --run, and --workers arguments don't require much explanation, so I am going to thoroughly introduce the function of the remaining arguments.

Let's break down the --config-file argument first. --config-file requires you to specify the relative or absolute path of a config file. In principle, this config file can use any data-interchange format; currently, only YAML files are supported, but adding other formats like JSON is trivial. An example of such a config file is provided below:

config_class: DQNConfig
meta-params:
  log_dir: 'experiments/data/results/experiments_v0/online/cart_pole/dqn/best'
  algo_class: OnlineAlgo
  agent_class: DQNAgent
  env_class: CartPoleEnv
algo-params:
  discount: 0.99
  exploration:
    name: epsilon-greedy
    epsilon: 0.1
  model:
    name: fully-connected
    hidden_layers:
      grid-search: # searches through different number of layers and layer sizes for the fully-connected layer
        - [16, 16, 16]
        - [32, 32]
        - [64, 64]
    activation: relu
  target_net:
    name: discrete
    update_frequency: 32
  optimizer:
    name: adam
    lr:
      uniform-search: [0.0001, 0.001, 8] # searches over 8 random values between 0.0001 and 0.001
  loss:
    name: mse
  # replay buffer parameters
  memory_size: 2500
  batch_size: 16
  # training parameters
  update_per_step: 1
  max_steps: 100000
  # logging parameters
  log_interval: 1000
  returns_queue_size: 100 # used to generate the learning curve and area under the curve of the reinforcement learning technique

In this file, you specify the environment, the agent, and the algorithm you want to use to model the interaction between the agent and the environment, along with their parameters. To tune the parameters, you can use keywords such as uniform-search and grid-search, which specify the search space of the Tuner class. Currently, the Tuner class only supports grid search and random search; however, it can be extended to support many more operations. This config file gives you control over almost all of the parameters of your algorithm.
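To make the two search keywords concrete, here is a minimal sketch of how such a config could be expanded into individual configurations. It is not the project's Tuner class: the functions `expand` and `_collect`, the seeding, and the reading of `uniform-search: [low, high, n]` as n independent uniform samples in [low, high] (as the comment in the config suggests) are assumptions. Every combination of the searched values is enumerated, so the example's search space yields 3 × 8 = 24 configurations.

```python
import copy
import itertools
import random


def _collect(node, path, found, rng):
    """Record (path, candidate values) for every search keyword in the tree."""
    if isinstance(node, dict):
        if "grid-search" in node:
            found.append((path, list(node["grid-search"])))
            return
        if "uniform-search" in node:
            low, high, n = node["uniform-search"]
            found.append((path, [rng.uniform(low, high) for _ in range(n)]))
            return
        for key, value in node.items():
            _collect(value, path + (key,), found, rng)


def expand(config, seed=0):
    """Yield one concrete config per combination of searched values."""
    rng = random.Random(seed)
    found = []
    _collect(config, (), found, rng)
    paths = [path for path, _ in found]
    for combo in itertools.product(*(values for _, values in found)):
        concrete = copy.deepcopy(config)  # never mutate the input config
        for path, value in zip(paths, combo):
            parent = concrete
            for key in path[:-1]:
                parent = parent[key]
            parent[path[-1]] = copy.deepcopy(value)
        yield concrete


config = {
    "algo-params": {
        "model": {"hidden_layers": {"grid-search": [[16, 16, 16], [32, 32], [64, 64]]}},
        "optimizer": {"name": "adam",
                      "lr": {"uniform-search": [0.0001, 0.001, 8]}},
        "batch_size": 16,
    }
}
configs = list(expand(config))
print(len(configs))                                        # 24
print(configs[0]["algo-params"]["model"]["hidden_layers"])  # [16, 16, 16]
```

Because the expansion is a Cartesian product, the number of configurations grows multiplicatively with each searched parameter, which is one reason running them on several workers pays off.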

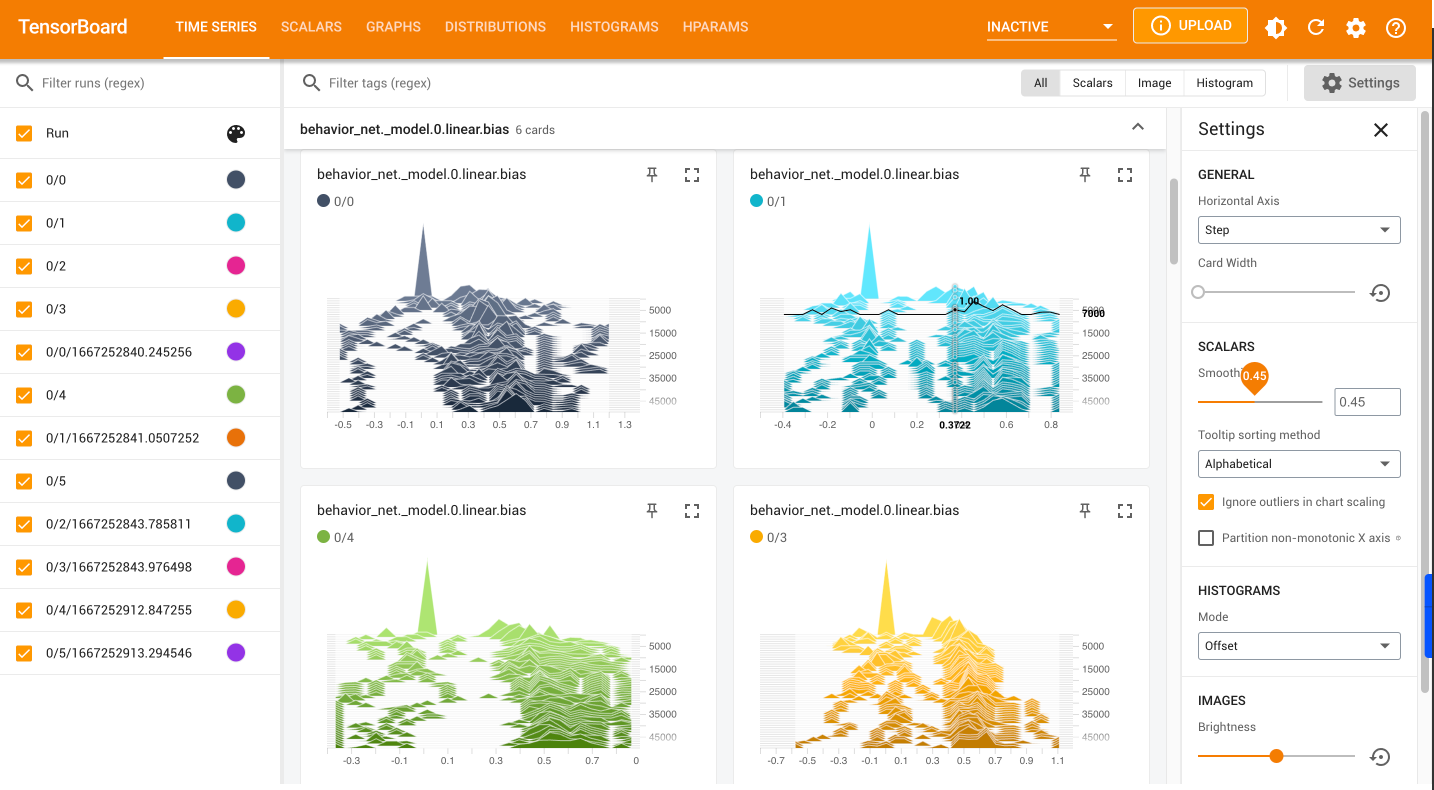

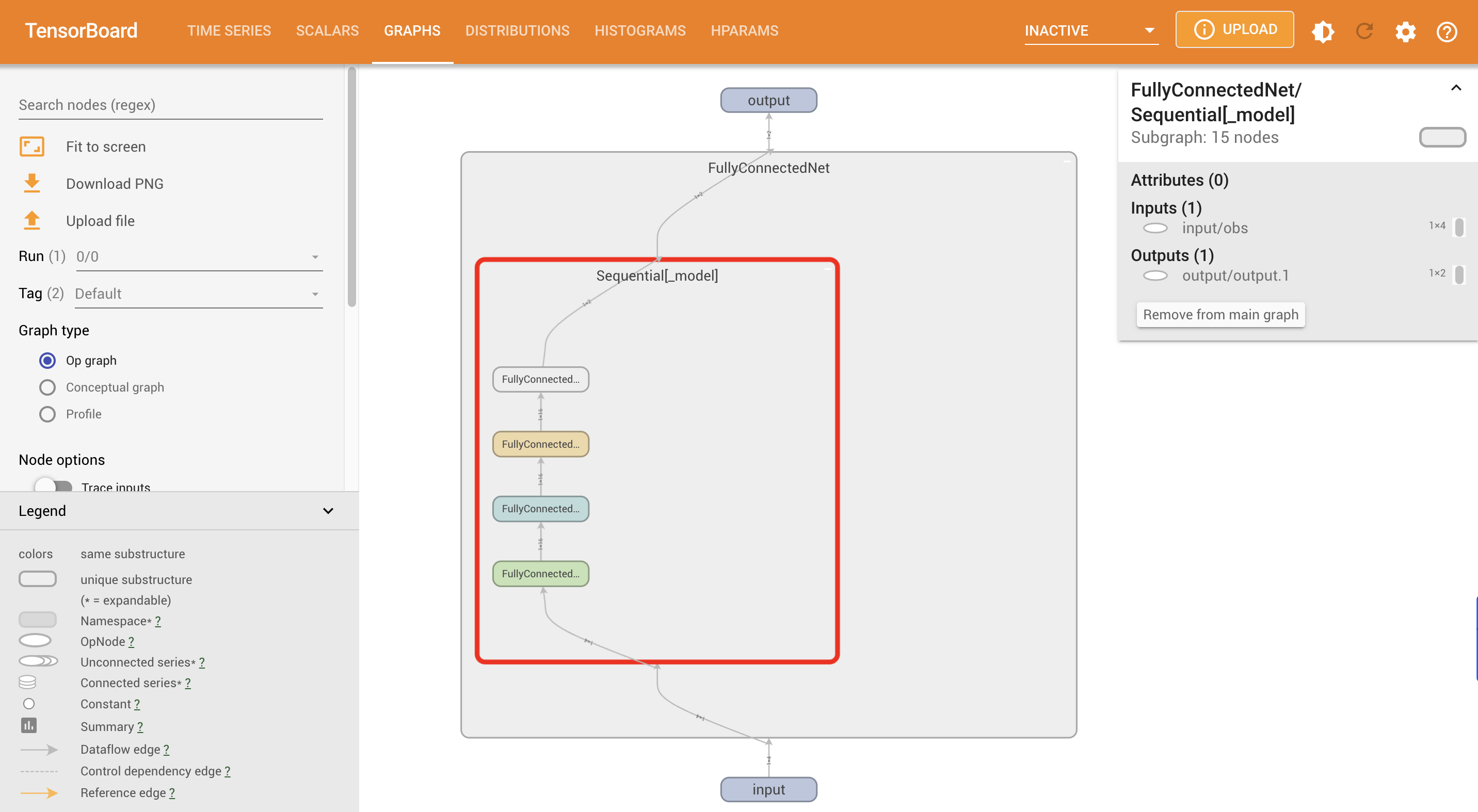

Experiments can be run in two verbosity modes: info and debug. In the former, the process will only record the logs required to analyse the performance of the algorithm, such as the learning curve and the area under the curve. In the latter, all sorts of different values that can help us debug the algorithm will be logged, such as the histogram of weights in different layers of networks, the loss values in each step, the graph of the neural network to help us find the architectural bugs, etc.
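As a rough illustration, the two modes could be wired to Python's standard `logging` levels so that expensive diagnostics are only computed in debug mode. This is a sketch under that assumption; `configure_logging` and the logger name `experiments` are hypothetical, and the project itself may gate its TensorBoard summaries differently.

```python
import logging

_LEVELS = {"info": logging.INFO, "debug": logging.DEBUG}


def configure_logging(verbose):
    """Map the --verbose value to the level of a (hypothetical) project logger."""
    try:
        level = _LEVELS[verbose.lower()]
    except KeyError:
        raise ValueError(f"verbose must be 'info' or 'debug', got {verbose!r}") from None
    logger = logging.getLogger("experiments")
    logger.setLevel(level)
    return logger


logger = configure_logging("info")
# Expensive diagnostics (weight histograms, per-step losses) are only
# computed when debug logging is enabled.
if logger.isEnabledFor(logging.DEBUG):
    logger.debug("per-step loss: %f", 0.0)
print(logger.isEnabledFor(logging.DEBUG))  # False
```

Checking `isEnabledFor` before building a diagnostic avoids paying for weight histograms or graph exports when running in info mode.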

Roadmap:

- Implementing and testing the initial version of the code
- Hardware Capability
  - multi-cpu execution
  - multi-gpu execution
- Add value-based algorithms:
  - DQN implemented and tested
  - DDQN
- Refactoring the code
  - Factory methods for Optimizers, Loss functions, Networks
  - Factory method for environments (requires slight changes to the configuration system)
- Add a run aggregator to enable TensorBoard to aggregate the results of multiple runs
- Add RL algorithms for prediction:
  - TD(0) with General Value Functions (GVF)
- Implement OfflineAlgorithm class
- Implement Policy Gradient Algorithms
  - Vanilla Policy Gradient
  - Vanilla Actor-Critic
  - PPO
- Reconfiguring the config files using a Dependency Injection approach (most likely using Hydra)

See the open issues for a full list of proposed features (and known issues).

Distributed under the MIT License. See LICENSE.rst for more information.

Erfan Miahi - mhi.erfan1@gmail.com

Project Link: https://github.com/erfanMhi/base_reinforcement_learning