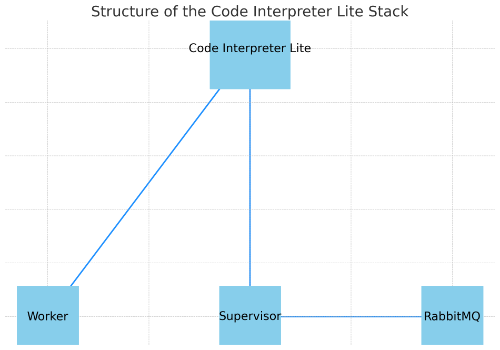

An experimental client that lets LLMs run Python code in a sandboxed environment, using docker-python-sandbox-supervisor and docker-python-sandbox.

The current version works only with the OpenAI GPT API, but the goal is to support any LLM, especially local models such as LLaMA 2.

- Clone the repo

- Download or build all the required images. See docker-python-sandbox-supervisor and docker-python-sandbox for guides on building their images. The Code Interpreter Lite image can be built with:

  ```bash
  make build-production-image
  ```

- Once all the images are ready, start the Code Interpreter Lite stack:

  ```bash
  make full-detached
  ```

The application will be available at http://localhost:7860.
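Since `make full-detached` presumably starts the stack in the background, the UI may take a moment to come up. The following minimal sketch polls http://localhost:7860 until the server answers. `wait_for_app` and its `timeout`/`interval` parameters are hypothetical names for illustration, not part of the project.

```python
import time
import urllib.error
import urllib.request


def wait_for_app(url: str = "http://localhost:7860",
                 timeout: float = 60.0,
                 interval: float = 1.0) -> bool:
    """Poll `url` until it returns any HTTP response or `timeout` seconds pass."""
    # Bypass any HTTP proxy configured in the environment; the app runs locally.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout
    while True:
        try:
            with opener.open(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # An error status (e.g. 404) still means the server is listening.
            return True
        except OSError:
            # Connection refused, timeout, DNS failure: not up yet.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, `wait_for_app(timeout=120)` returns `True` once the server responds and `False` if it is still unreachable after two minutes. Any HTTP status counts as "up", because the goal is only to detect that something is listening on the port.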

Development requirements:

- Docker

- Python 3.10 or higher (tested on 3.10.4 and 3.10.12)
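To confirm that an interpreter meets the Python requirement above, a check like the following can be used. This is a minimal sketch; `python_is_supported` and `MIN_VERSION` are hypothetical names, not part of the project.

```python
import sys
from typing import Sequence

# Minimum interpreter version stated in the requirements.
MIN_VERSION = (3, 10)


def python_is_supported(version: Sequence[int] = sys.version_info[:2],
                        minimum: Sequence[int] = MIN_VERSION) -> bool:
    """Return True if `version` (major, minor, ...) is at least `minimum`."""
    # Compare only (major, minor); patch releases do not change support.
    return tuple(version[:2]) >= tuple(minimum)
```

As a one-liner, `python -c "import sys; print(sys.version_info >= (3, 10))"` gives the same answer for the current interpreter.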

Development setup:

- Clone the repo

- Set up the development environment:

  ```bash
  make build-development-environment
  ```

- Start the development environment:

  ```bash
  make develop
  ```

The application will be available at http://localhost:7860.

Planned improvements:

- More reliable file handling: the LLMs currently get confused about how to access files.

- Support for local LLMs: LLaMA does not produce output in the expected format, so its code cannot be run.

- Support for Claude from Anthropic: Claude currently gets stuck re-running the code over and over until it times out.
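The README does not say which output format the client expects from the model. Assuming it is a Markdown fenced block tagged `python`, the sketch below shows one way to pull code out of a reply and detect when a model (such as LLaMA) did not follow that format. `extract_python_code` is a hypothetical helper, not the project's implementation.

```python
import re
from typing import Optional

# Assumed format: a block opened with ```python (or ```py) and closed with ```.
_FENCE = re.compile(r"```(?:python|py)[ \t]*\n(.*?)```", re.DOTALL)


def extract_python_code(reply: str) -> Optional[str]:
    """Return the first fenced Python block in an LLM reply, or None if absent."""
    match = _FENCE.search(reply)
    if match is None:
        # The model did not use the expected format; nothing to run.
        return None
    return match.group(1).strip()
```

Returning `None` rather than guessing lets the caller re-prompt the model instead of sending malformed text to the sandbox.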