Flow Matching Beyond Kinematics: Generating Jets with Particle-ID and Trajectory Displacement Information

Joschka Birk, Erik Buhmann, Cedric Ewen, Gregor Kasieczka, David Shih

This repository contains the code for the results presented in the paper 'Flow Matching Beyond Kinematics: Generating Jets with Particle-ID and Trajectory Displacement Information'.

Abstract:

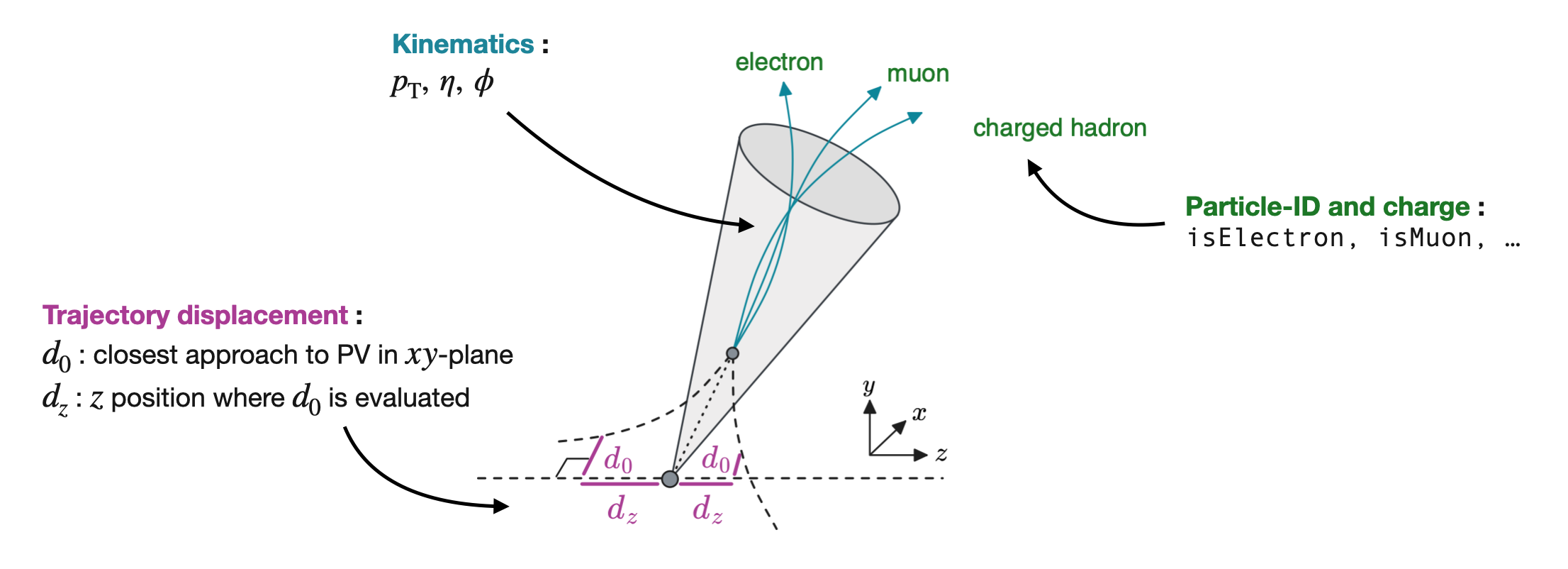

We introduce the first generative model trained on the JetClass dataset. Our model generates jets at the constituent level, and it is a permutation-equivariant continuous normalizing flow (CNF) trained with the flow matching technique. It is conditioned on the jet type, so that a single model can be used to generate the ten different jet types of JetClass. For the first time, we also introduce a generative model that goes beyond the kinematic features of jet constituents. The JetClass dataset includes more features, such as particle-ID and track impact parameter, and we demonstrate that our CNF can accurately model all of these additional features as well. Our generative model for JetClass expands on the versatility of existing jet generation techniques, enhancing their potential utility in high-energy physics research, and offering a more comprehensive understanding of the generated jets.
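For readers new to flow matching, the training objective mentioned in the abstract can be illustrated with a small, standard-library-only sketch. It assumes a straight-line path between a Gaussian noise sample and a data jet, which is a common choice; the exact path, noise schedule, and network used in the paper are not specified in this README. All names below (`make_training_pair`, `flow_matching_loss`, `zero_model`) are hypothetical and not taken from this repository.

```python
import random


def make_training_pair(x1, t, rng):
    """Build (x_t, target velocity) for one jet, assuming a straight-line path.

    x1 is a data jet: a list of constituents, each a list of features.
    """
    # Noise sample x0 with the same shape as the data jet.
    x0 = [[rng.gauss(0.0, 1.0) for _ in p] for p in x1]
    # Point on the straight line between noise (t=0) and data (t=1).
    x_t = [[(1.0 - t) * a + t * b for a, b in zip(p0, p1)]
           for p0, p1 in zip(x0, x1)]
    # Velocity of that line, which the network is trained to regress.
    v = [[b - a for a, b in zip(p0, p1)] for p0, p1 in zip(x0, x1)]
    return x_t, v


def flow_matching_loss(velocity_model, x1, jet_type, rng):
    """Mean squared error between predicted and target velocities."""
    t = rng.random()
    x_t, v_target = make_training_pair(x1, t, rng)
    v_pred = velocity_model(x_t, t, jet_type)
    errors = [(a - b) ** 2
              for pp, pt in zip(v_pred, v_target)
              for a, b in zip(pp, pt)]
    return sum(errors) / len(errors)


def zero_model(x_t, t, jet_type):
    """Placeholder for the jet-type-conditioned network: predicts zero velocity."""
    return [[0.0 for _ in p] for p in x_t]


rng = random.Random(0)
toy_jet = [[0.5, -0.1, 0.2], [0.3, 0.0, -0.4]]  # 2 constituents, 3 features
print(flow_matching_loss(zero_model, toy_jet, jet_type=0, rng=rng))
```

In the actual model, the placeholder would be replaced by a permutation-equivariant network that receives the jet type as conditioning input.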

If you just want to generate new jets with our model (e.g. for comparisons with future work), the setup is minimal: with the provided Docker image, no installation is needed.

    git clone git@github.com:uhh-pd-ml/beyond_kinematics.git
    cd beyond_kinematics
    singularity shell --nv docker://jobirk/pytorch-image:v0.2.2
    source /opt/conda/bin/activate
    python scripts/generate_jets.py \
        --output_dir <path_to_output_dir> \
        --n_jets_per_type <number_of_jets_to_generate_per_type> \
        --types <list_of_jet_types>

If you want to play around with the code, or don't want to use the Docker image, you can follow the instructions below.

    git clone git@github.com:uhh-pd-ml/beyond_kinematics.git
    cd beyond_kinematics

Create a .env file in the root directory to set paths and API keys:

    LOG_DIR="<your-log-dir>"
    COMET_API_TOKEN="<your-comet-api-token>"
    HYDRA_FULL_ERROR=1

Docker image: You can use the Docker image jobirk/pytorch-image:v0.2.2, which contains all dependencies.

On a machine with singularity installed, you can run the following command to convert the Docker image to a Singularity image and run it:

    singularity shell --nv -B <your_directory_with_this_repo> docker://jobirk/pytorch-image:v0.2.2

To activate the conda environment, run the following inside the singularity container:

    source /opt/conda/bin/activate

Manual installation: Alternatively, you can install the dependencies manually:

    # [OPTIONAL] create conda environment
    conda create -n myenv python=3.10
    conda activate myenv
    # install pytorch according to instructions
    # https://pytorch.org/get-started/
    # install requirements
    pip install -r requirements.txt

To generate new jets with the model used in our paper, you can simply run the script scripts/generate_jets.py:

    python scripts/generate_jets.py \
        --output_dir <path_to_output_dir> \
        --n_jets_per_type <number_of_jets_to_generate_per_type> \
        --types <list_of_jet_types>

To train the model yourself, you first have to download the JetClass dataset. For that, please follow the instructions found in the repository jet-universe/particle_transformer.

After downloading the dataset, you can preprocess it. Adjust the corresponding input and output paths in configs/preprocessing/data.yaml. Then, run the following:

    python scripts/prepare_dataset.py && python scripts/preprocessing.py

Once you have the dataset prepared, you can train the model. Set the path to your preprocessed dataset directory in configs/experiment/jetclass_cond.yaml and run the following:

    python src/train.py experiment=jetclass_cond

The results should be logged to Comet and stored locally in the LOG_DIR directory specified in your .env file.
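If nothing shows up on Comet or in LOG_DIR, a first thing to check is whether the .env file still contains placeholder values. The sketch below is a minimal, standard-library-only illustration of such a check. `parse_env` and `missing_or_placeholder` are hypothetical helpers, not part of this repository, and the repository itself may read the .env file differently.

```python
from pathlib import Path

# Keys named in the .env description; HYDRA_FULL_ERROR is treated as optional here.
REQUIRED_KEYS = ("LOG_DIR", "COMET_API_TOKEN")


def parse_env(text):
    """Parse simple KEY=VALUE lines, ignoring blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding quotes, if any.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_or_placeholder(values, required=REQUIRED_KEYS):
    """Return the keys that are absent, empty, or still '<...>' placeholders."""
    bad = []
    for key in required:
        value = values.get(key, "")
        if not value or (value.startswith("<") and value.endswith(">")):
            bad.append(key)
    return bad


if __name__ == "__main__":
    env_path = Path(".env")  # assumes the script runs from the repository root
    if env_path.exists():
        problems = missing_or_placeholder(parse_env(env_path.read_text()))
        print("Problems:", problems or "none")
    else:
        print("No .env file found in the current directory")
```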

To evaluate the model, you can run the following:

    python scripts/eval_ckpt.py \
        --ckpt=<model_checkpoint_path> \
        --n_samples=<number_of_jets_to_generate> \
        --cond_gen_file=<file_with_the_conditioning_features>

This will store the generated jets in a subdirectory evaluated_checkpoints of the checkpoint directory.
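To locate the evaluation output afterwards, a small helper like the following may be convenient. It is only a sketch: it assumes that "checkpoint directory" means the directory containing the checkpoint file, and it makes no assumption about the names or formats of the files written there. `evaluated_dir_for` and `list_outputs` are hypothetical names, not functions from this repository.

```python
from pathlib import Path


def evaluated_dir_for(ckpt_path):
    """Return <directory of the checkpoint>/evaluated_checkpoints.

    Assumption: the output subdirectory sits next to the checkpoint file.
    """
    return Path(ckpt_path).parent / "evaluated_checkpoints"


def list_outputs(ckpt_path):
    """List all files below the evaluated_checkpoints directory, sorted by path."""
    out_dir = evaluated_dir_for(ckpt_path)
    if not out_dir.is_dir():
        return []
    return sorted(p for p in out_dir.rglob("*") if p.is_file())
```

Calling `list_outputs("<model_checkpoint_path>")` with the same path passed to `--ckpt` returns an empty list if the evaluation has not been run yet.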

After evaluating the model, you can run the classifier test. For that, set the path to the directory containing the generated jets in configs/experiment/jetclass_classifier.yaml. If you want to load the pre-trained version of ParT, you will also have to adjust the corresponding checkpoint paths (the checkpoints can be found in the jet-universe/particle_transformer repository).

After you've done all that, you can run the classifier training with

    python src/train.py experiment=jetclass_classifier

If you use this code in your research, please cite our paper:

    @misc{birk2023flow,
        title={Flow Matching Beyond Kinematics: Generating Jets with Particle-ID and Trajectory Displacement Information},
        author={Joschka Birk and Erik Buhmann and Cedric Ewen and Gregor Kasieczka and David Shih},
        year={2023},
        eprint={2312.00123},
        archivePrefix={arXiv},
        primaryClass={hep-ph}
    }