Golang API to track and account for tasks performed

- Postman

- Golang: Echo web framework

- Testify, Mockery, GORM

- Redis

- MySQL

- Docker and Kubernetes

- Clone the project: `git clone https://github.com/uiansol/task-accounter.git`

- Create the `.env` file (skip this step, see below).

To make the demonstration easier, I committed an already-filled `.env` file to the repo. Don't do this at home.

- Build and run with Docker Compose: `docker compose build`, then `docker compose up`. Depending on your setup, you may need `docker-compose` instead.

Wait until the server is fully running. The first start can be slow because MySQL has to be set up.

- To deploy on a Kubernetes infrastructure, apply each file in the deploy folder with `kubectl apply -f <filename>`.

To make testing easier, I set up initial migrations and added some users by hand. They are also preloaded in the Postman collection with their usernames and passwords for easy login.

| Field | User 1 | User 2 | User 3 |
|---|---|---|---|
| username | tech-1 | tech-2 | manager-1 |
| password | tech-1 | tech-2 | manager-1 |
| role | technician | technician | manager |

- In Postman, import the collection from the file `task-accounter.postman_collection.json`.

- Log in with every user first so their tokens are easy to switch between. When making any other call (CREATE, READ, READ ALL, UPDATE, DELETE), you must use the token of the user performing the operation. Results differ depending on which user makes the call, according to the business rules.

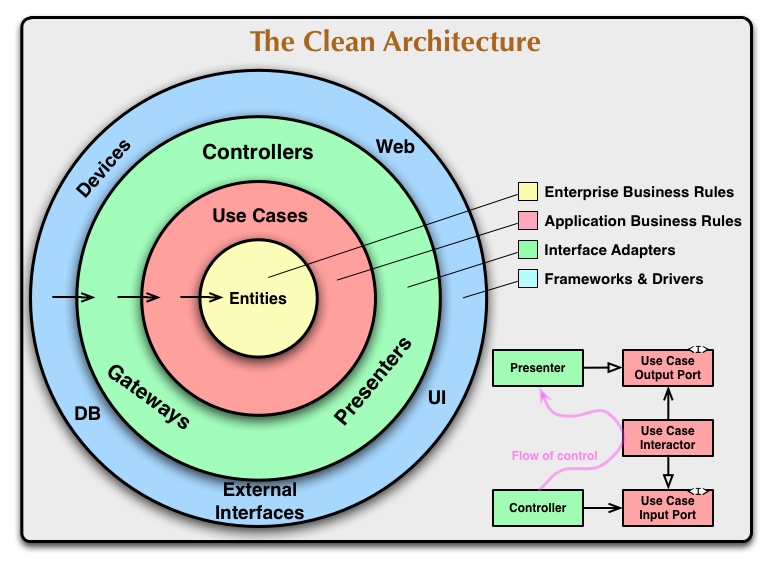

- Pure clean architecture and domain-driven design, which suit Go microservices well.

- SOLID principles, notably single responsibility and dependency inversion.

- RESTful conventions, both in the HTTP methods used and in the status codes returned.

- API versioning. It isn't necessary for this project; I included it to demonstrate the technique.

- Unit tests with mocks to isolate the code under test.

- Standard folder structure of the Go ecosystem, as described in Standard Go Project Layout.

- Encryption is handled in the application code, not in the database. This isolates the business rules from external technologies, which this architecture makes easy to replace.

- I used Redis as a message broker, although only the publisher, not the receiver, is implemented.

- I used git-flow with branches and merges. For simplicity, I avoided having a `development` branch in this project.

- Conventional commits to signal features, fixes, and chores.
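The README doesn't say which cipher the project uses for encryption in code. As one possible approach, here is a minimal Go sketch using authenticated AES-GCM from the standard library. `Encrypt`, `Decrypt`, `newGCM` and the demo key are hypothetical; a real deployment would load the key from configuration rather than hard-coding it.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/rand"
	"errors"
	"fmt"
	"io"
)

// newGCM wraps an AES block cipher in GCM mode. key must be 16, 24 or 32 bytes.
func newGCM(key []byte) (cipher.AEAD, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	return cipher.NewGCM(block)
}

// Encrypt seals plaintext and returns nonce || ciphertext || tag.
func Encrypt(key, plaintext []byte) ([]byte, error) {
	gcm, err := newGCM(key)
	if err != nil {
		return nil, err
	}
	nonce := make([]byte, gcm.NonceSize())
	if _, err := io.ReadFull(rand.Reader, nonce); err != nil {
		return nil, err
	}
	// Prepend the random nonce so Decrypt can recover it; Seal appends the auth tag.
	sealed := gcm.Seal(nil, nonce, plaintext, nil)
	return append(nonce, sealed...), nil
}

// Decrypt reverses Encrypt and fails if the data was altered.
func Decrypt(key, data []byte) ([]byte, error) {
	gcm, err := newGCM(key)
	if err != nil {
		return nil, err
	}
	n := gcm.NonceSize()
	if len(data) < n {
		return nil, errors.New("ciphertext too short")
	}
	// Open verifies the auth tag before returning the plaintext.
	return gcm.Open(nil, data[:n], data[n:], nil)
}

func main() {
	// 32-byte demo key selects AES-256; never hard-code real keys.
	key := []byte("0123456789abcdef0123456789abcdef")
	sealed, err := Encrypt(key, []byte("task summary"))
	if err != nil {
		panic(err)
	}
	plain, err := Decrypt(key, sealed)
	fmt.Println(string(plain), err)
}
```

Since this runs in the application layer, the database only stores ciphertext, and swapping the database engine doesn't change how sensitive fields are protected.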

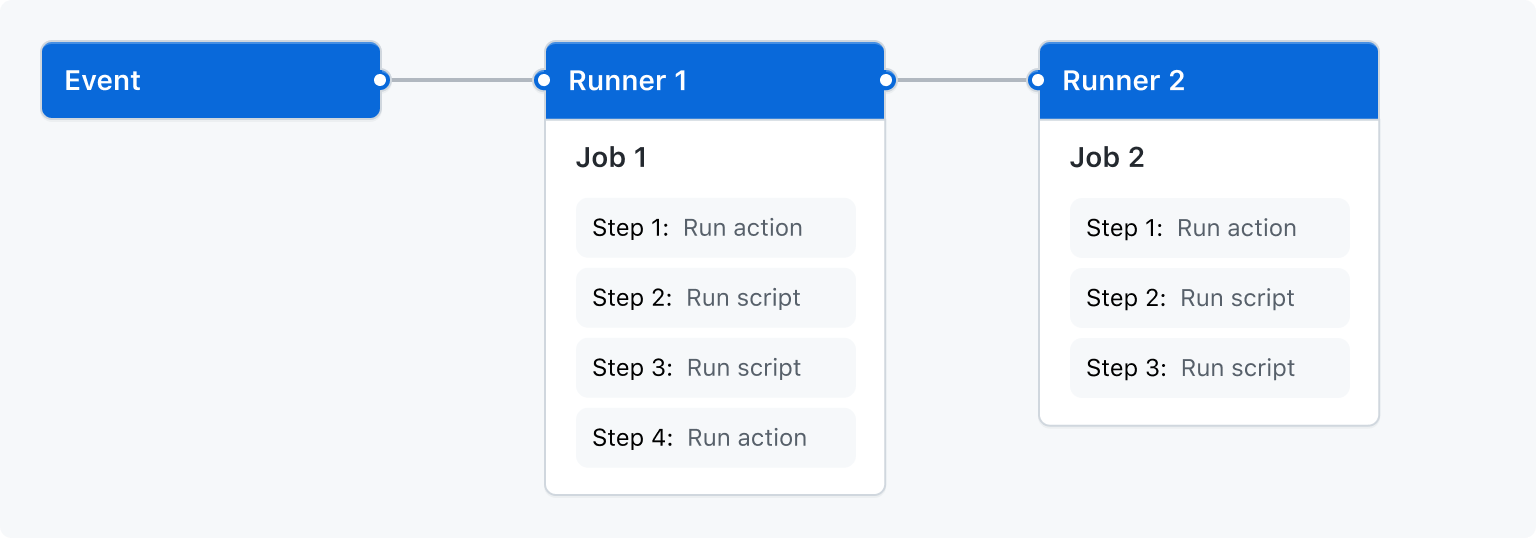

- CI with GitHub Actions builds the project and runs the automated tests whenever a pull request is opened against the main branch.

- Environment variables are loaded from the `.env` file directly into the containers.

- A multi-stage build for the API.

- Startup dependencies between containers: the database must start first.

- Improve the database model. I didn't normalize the tables so that they are easy to inspect through Docker Desktop during the demonstration.

- Prioritize adding more user management routes. I initially deferred them to deliver the other features with better quality, but they matter for the system's functionality and user experience.

- A deploy workflow with Kubernetes to complete the CI/CD pipeline.