📖 Paper: arXiv

-

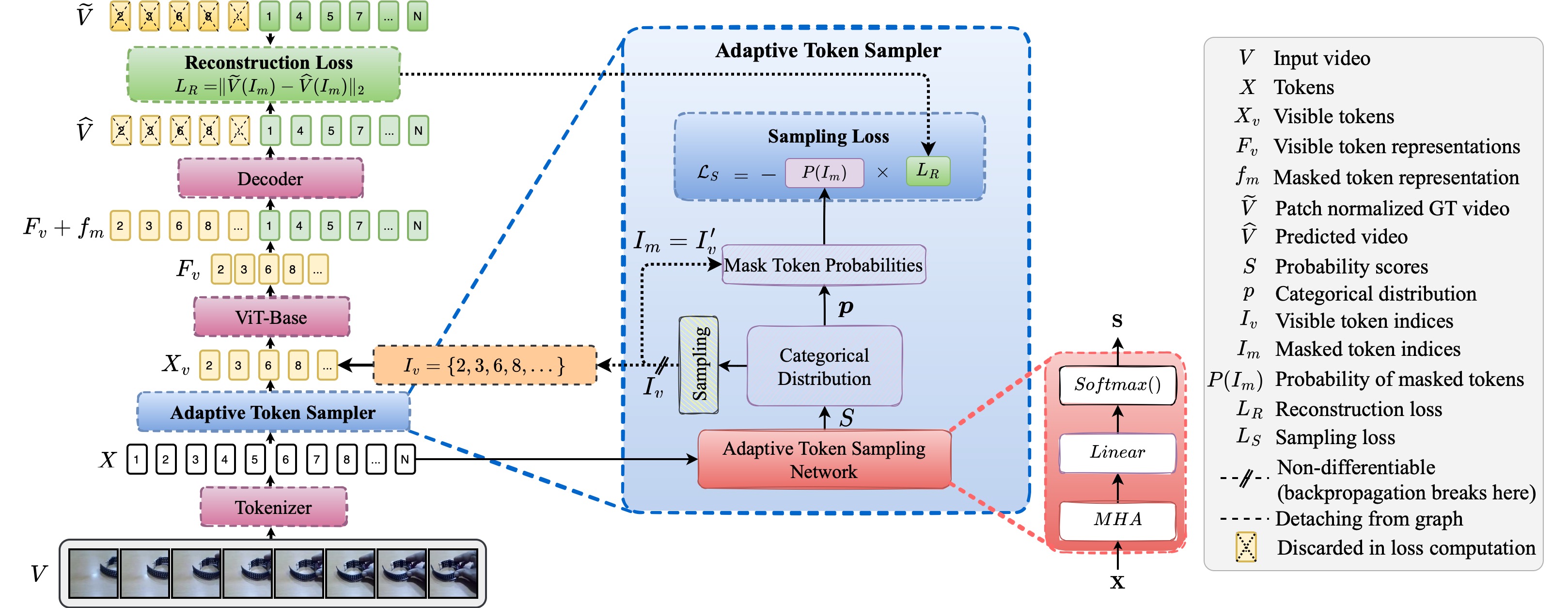

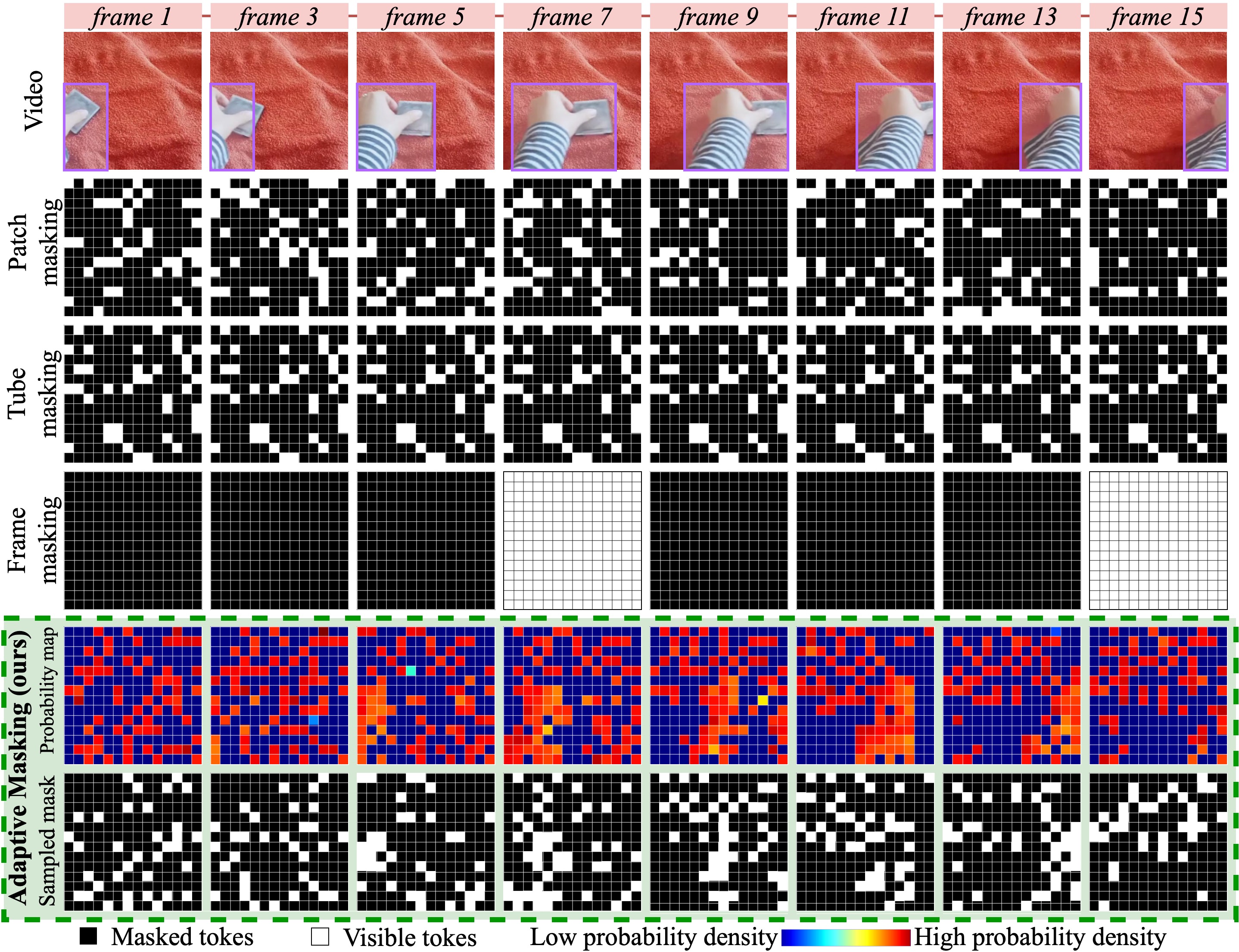

We propose AdaMAE, a novel, adaptive, and end-to-end trainable token sampling strategy for MAEs that takes into account the spatiotemporal properties of all input tokens to sample fewer but informative tokens.

- We empirically show that AdaMAE samples more tokens from regions of the input with high spatiotemporal information, which leads to meaningful representations for downstream tasks.

-

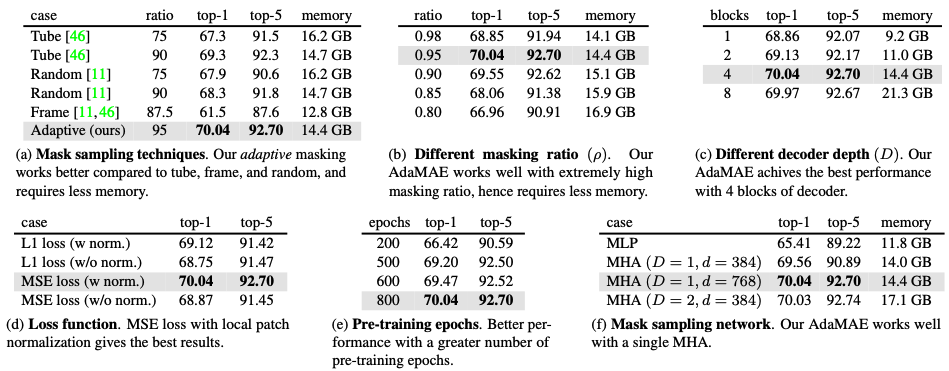

We demonstrate the efficiency of AdaMAE in terms of performance and GPU memory against random patch, tube, and frame sampling by conducting a thorough ablation study on the SSv2 dataset.

- We show that AdaMAE outperforms the state of the art (SOTA) by 0.7% and 1.1% in top-1 accuracy on SSv2 and Kinetics-400, respectively.
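The adaptive sampling idea in the contributions above can be illustrated with a small, dependency-free sketch. It is not the AdaMAE implementation: the per-token scores, the softmax step, and the function names `softmax`, `sample_visible_tokens`, and `adaptive_mask` are all assumptions made for illustration. The sketch covers only the sampling step (turn scores into a categorical distribution, then draw the visible tokens without replacement) and leaves out how the scores would be learned end to end.

```python
import math
import random


def softmax(scores):
    """Turn arbitrary real-valued scores into a probability distribution."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sample_visible_tokens(probs, num_visible, rng):
    """Draw num_visible distinct token indices, favoring high-probability tokens.

    Token i gets the key Exp(1) / probs[i] and the num_visible smallest keys
    win. This "exponential race" is equivalent to drawing from the categorical
    distribution repeatedly without replacement.
    """
    keys = [
        rng.expovariate(1.0) / p if p > 0.0 else math.inf
        for p in probs
    ]
    order = sorted(range(len(probs)), key=keys.__getitem__)
    return sorted(order[:num_visible])


def adaptive_mask(scores, mask_ratio, rng):
    """Return a boolean list where True means the token is masked."""
    probs = softmax(scores)
    num_visible = len(scores) - round(len(scores) * mask_ratio)
    visible = set(sample_visible_tokens(probs, num_visible, rng))
    return [i not in visible for i in range(len(scores))]


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical scores: the first two tokens stand in for high-motion regions.
    scores = [4.0, 4.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
    print(adaptive_mask(scores, mask_ratio=0.75, rng=rng))
```

With these hypothetical scores, the two high-scoring tokens are kept visible far more often than the background tokens, which mirrors the behavior described above. A tensor-based version would typically replace the sampling helper with a library routine for multinomial sampling without replacement.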

Visualization panels. Columns, left to right, repeated for two examples per row: Video, Pred., Error, CAT, Mask.

Comparison of our adaptive masking with existing random patch, tube, and frame masking for a masking ratio of 80%. Our adaptive masking approach selects more tokens from regions with high spatiotemporal information and only a small number of tokens from the background.
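For readers unfamiliar with the three baseline strategies named in this comparison, the following sketch generates each kind of mask over a grid of video tokens. It is an illustration under stated assumptions, not code from this repository: the function names are hypothetical, and the 10 x 10 x 10 token grid is chosen only so that an 80% ratio divides evenly.

```python
import random


def random_patch_mask(t, h, w, ratio, rng):
    """Mask tokens uniformly at random over the whole T x H x W grid."""
    n = t * h * w
    masked = set(rng.sample(range(n), round(n * ratio)))
    return [
        [[((f * h + r) * w + c) in masked for c in range(w)] for r in range(h)]
        for f in range(t)
    ]


def tube_mask(t, h, w, ratio, rng):
    """Pick one spatial mask and repeat it in every frame (a 'tube' over time)."""
    n = h * w
    masked = set(rng.sample(range(n), round(n * ratio)))
    frame = [[(r * w + c) in masked for c in range(w)] for r in range(h)]
    return [[row[:] for row in frame] for _ in range(t)]


def frame_mask(t, h, w, ratio, rng):
    """Mask whole frames: every token in a chosen frame is hidden."""
    masked_frames = set(rng.sample(range(t), round(t * ratio)))
    return [[[f in masked_frames] * w for _ in range(h)] for f in range(t)]


def masked_fraction(mask):
    """Fraction of tokens marked True (masked) in a T x H x W mask."""
    flat = [v for frame in mask for row in frame for v in row]
    return sum(flat) / len(flat)


if __name__ == "__main__":
    rng = random.Random(0)
    for make in (random_patch_mask, tube_mask, frame_mask):
        mask = make(10, 10, 10, 0.8, rng)
        print(make.__name__, masked_fraction(mask))
```

All three strategies hide the same fraction of tokens; they differ only in where the hidden tokens fall. None of them looks at the video content, which is the contrast with the adaptive approach described in the caption.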

We use ViT-Base as the backbone for all experiments. MHA

- We closely follow the VideoMAE pre-training recipe, but with our adaptive masking instead of tube masking. To pre-train AdaMAE, please follow the steps in DATASET.md and PRETRAIN.md.

- To check the performance of pre-trained AdaMAE, please follow the steps in DATASET.md and FINETUNE.md.

- To set up the conda environment, please refer to FINETUNE.md.

Our AdaMAE codebase is based on the implementation of the VideoMAE paper. We thank the authors of VideoMAE for making their code publicly available.

@misc{https://doi.org/10.48550/arxiv.2211.09120,

doi = {10.48550/ARXIV.2211.09120},

url = {https://arxiv.org/abs/2211.09120},

author = {Bandara, Wele Gedara Chaminda and Patel, Naman and Gholami, Ali and Nikkhah, Mehdi and Agrawal, Motilal and Patel, Vishal M.},

keywords = {Computer Vision and Pattern Recognition (cs.CV), Artificial Intelligence (cs.AI), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {AdaMAE: Adaptive Masking for Efficient Spatiotemporal Learning with Masked Autoencoders},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}

}