Note: This assumes that you have an AWS account, an IAM user with the required permissions whose credentials are set up as an aws-cli profile, and kubectl installed.
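
Before starting, a small script can confirm these prerequisites. This is a minimal sketch and not part of the repository: the tool list (which also includes `terraform`, since the steps below run Terraform), the `missing_tools` helper and the `run` argument guard are assumptions. `aws sts get-caller-identity` is the standard aws-cli call that shows which IAM identity the profile's credentials belong to.

```shell
#!/usr/bin/env bash
# Sketch: verify the prerequisites before starting.
# Assumption: aws, terraform and kubectl are the tools needed on PATH.
set -euo pipefail

# Print each command name from the arguments that is not found on PATH.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Only run the checks when explicitly asked, e.g. ./check-prereqs.sh run
if [ "${1:-}" = "run" ]; then
  missing=$(missing_tools aws terraform kubectl)
  if [ -n "$missing" ]; then
    echo "missing tools:" >&2
    echo "$missing" >&2
    exit 1
  fi
  # Fails if the profile's credentials are invalid; otherwise prints the IAM identity.
  aws sts get-caller-identity --profile "${AWS_PROFILE:?export AWS_PROFILE first}"
fi
```

Without the `run` argument the script only defines `missing_tools`, so it is safe to source.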

Be sure to set the correct values for the required fields first, then follow the rest of the steps:

1. `backend.tf`: set the remote state bucket and prefix (you'll need to create the bucket beforehand).

2. `main.tf`: some lines also need to be modified according to the region and AZs you'll be using.

3. Again, `main.tf` should match the configuration in `backend.tf`.

4. Before running the Terraform commands, export your AWS profile, e.g. `export AWS_PROFILE=your_profile_name`.

5. Go to the root path and run `terraform init`.

6. If step 5 is successful, run `terraform plan`. It should plan to add about 37 resources. Inspect the output, and if it lists all the intended resources, carry on with the next step.

7. Run `terraform apply` and wait for it to finish. Inspect the outputs: they should include `natgw_outbound_ips`, listing the outbound IP addresses of the EKS cluster. These IP addresses can be used for any kind of IP allowlisting.

8. To interact with your EKS cluster, set up your kubeconfig by running `aws eks --region eu-west-1 update-kubeconfig --name example` (be sure to set the region and name to match your own setup).

9. Once it's set up, check cluster connectivity by running `kubectl cluster-info`. The `Kubernetes control plane` line in the output must list an endpoint matching the `terraform apply` output named `cluster_endpoint`. You can also run `kubectl get nodes -o wide` to list node details.

10. Go to the workload directory and run `kubectl apply` for each manifest:

    - `kubectl apply -f backend-deployment.yaml`
    - `kubectl apply -f backend-service.yaml`
    - `kubectl apply -f frontend-deployment.yaml`
    - `kubectl apply -f frontend-service.yaml`

11. If the above commands succeed, run `kubectl get svc frontend` and take the value in the `EXTERNAL-IP` column as the publicly exposed service URL (for an AWS load balancer this column shows a DNS name rather than an IP address). Assuming the URL is `a89e305127e4a4f54b0d3fe1e12ac29e-674045712.eu-west-1.elb.amazonaws.com`, check its availability by running `curl http://a89e305127e4a4f54b0d3fe1e12ac29e-674045712.eu-west-1.elb.amazonaws.com`. It should print `{"message":"Hello"}`.
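
Once the files are configured, the Terraform and workload steps above can be chained into one script. This is a minimal sketch, not the repository's own tooling: the `REGION` and `CLUSTER_NAME` defaults are copied from the example values above, `WORKLOAD_DIR` (`workload`) is a guessed directory name, and the helper names and `run` argument guard are hypothetical. It saves the plan with `terraform plan -out` and pauses for review, so `terraform apply` applies exactly the plan you inspected, then polls the `frontend` Service until the load balancer hostname appears.

```shell
#!/usr/bin/env bash
# Sketch: the Terraform and workload steps chained together.
# Assumptions: REGION/CLUSTER_NAME defaults copied from the example above,
# WORKLOAD_DIR is a guessed directory name, and the "run" guard is hypothetical.
set -euo pipefail

REGION="${REGION:-eu-west-1}"
CLUSTER_NAME="${CLUSTER_NAME:-example}"
WORKLOAD_DIR="${WORKLOAD_DIR:-workload}"
EXPECTED_BODY='{"message":"Hello"}'

# Build the public URL from the load balancer hostname.
frontend_url() {
  printf 'http://%s\n' "$1"
}

# Succeed only if the response body is exactly the expected greeting.
is_expected_body() {
  [ "$1" = "$EXPECTED_BODY" ]
}

provision() {
  local answer
  terraform init
  terraform plan -out=tfplan
  # Pause so the planned resources (~37) can be inspected before applying.
  read -r -p "Apply this plan? [y/N] " answer
  [ "$answer" = "y" ] || { echo "aborted" >&2; return 1; }
  terraform apply tfplan        # applies exactly the plan reviewed above
  terraform output natgw_outbound_ips
}

configure_kubectl() {
  aws eks --region "$REGION" update-kubeconfig --name "$CLUSTER_NAME"
  kubectl cluster-info          # endpoint should match the cluster_endpoint output
  kubectl get nodes -o wide
}

deploy_workload() {
  local manifest
  for manifest in backend-deployment.yaml backend-service.yaml \
                  frontend-deployment.yaml frontend-service.yaml; do
    kubectl apply -f "${WORKLOAD_DIR}/${manifest}"
  done
}

smoke_test() {
  local host="" body="" url
  # EXTERNAL-IP stays empty until AWS finishes creating the load balancer.
  for _ in {1..30}; do
    host=$(kubectl get svc frontend \
      -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' || true)
    [ -n "$host" ] && break
    sleep 10
  done
  [ -n "$host" ] || { echo "svc/frontend has no external hostname yet" >&2; return 1; }
  url=$(frontend_url "$host")
  # DNS for a new load balancer can lag behind the hostname, so retry.
  for _ in {1..10}; do
    body=$(curl -fsS "$url" || true)
    if is_expected_body "$body"; then
      echo "frontend OK: $url"
      return 0
    fi
    sleep 15
  done
  echo "unexpected response from $url: $body" >&2
  return 1
}

# Only touch AWS and the cluster when explicitly asked, e.g. ./bootstrap.sh run
if [ "${1:-}" = "run" ]; then
  : "${AWS_PROFILE:?export AWS_PROFILE first}"
  provision
  configure_kubectl
  deploy_workload
  smoke_test
fi
```

Run it from the repository root as `./bootstrap.sh run` after exporting `AWS_PROFILE` (the script name is hypothetical). Without the `run` argument it only defines the functions.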

Here's a summary of what we've created with the steps above:

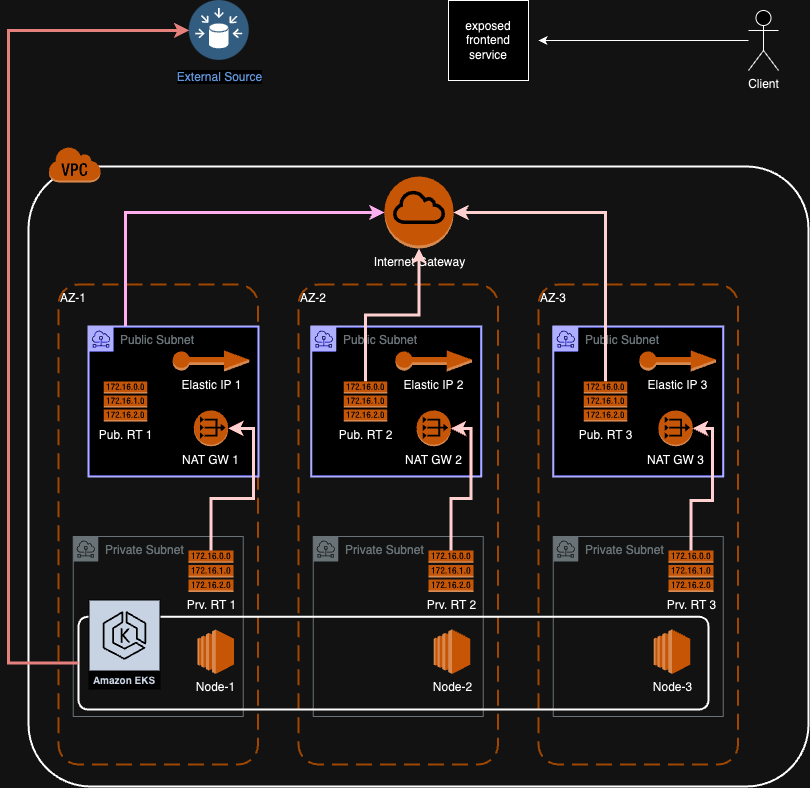

- A VPC

- Private and public subnets in 3 different AZs

- An EKS cluster with 3 nodes spread across these private subnets

- 3 NAT gateways in 3 different public subnets

- An internet gateway attached to the VPC

- Route tables for the private subnets, whose default route points to the NAT gateways

- Route tables for the public subnets, whose default route points to the internet gateway

- Route table associations for each subnet / route table pair

- Private EKS nodes can reach the internet through the 3 NAT gateway IP addresses, one per AZ

- The backend service is not exposed publicly and is not reachable from the internet

- The frontend service is exposed publicly and is reachable

- Both deployments are scalable (the replica count can be tuned) and highly available (multi-AZ setup)
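
To check the multi-AZ part of this summary, you can count the distinct availability zones your nodes run in. A sketch, assuming kubectl already targets the cluster and the nodes carry the standard `topology.kubernetes.io/zone` label; `EXPECTED_ZONES`, `count_distinct`, `node_zones` and the `run` guard are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: check that the worker nodes span the expected number of AZs.
# Assumes kubectl already targets the EKS cluster and the nodes carry the
# standard topology.kubernetes.io/zone label.
set -euo pipefail

# Hypothetical knob: one AZ per private subnet in this setup.
EXPECTED_ZONES="${EXPECTED_ZONES:-3}"

# Count distinct, non-empty lines on stdin (one zone name per line).
count_distinct() {
  awk 'NF && !seen[$0]++ { n++ } END { print n + 0 }'
}

# Print the zone of every node, one per line.
node_zones() {
  # -L appends the label as the last column; --no-headers drops the title row.
  kubectl get nodes -L topology.kubernetes.io/zone --no-headers | awk '{ print $NF }'
}

# Only query the cluster when explicitly asked, e.g. ./check-zones.sh run
if [ "${1:-}" = "run" ]; then
  zones=$(node_zones | count_distinct)
  echo "nodes span ${zones} availability zone(s)"
  if [ "$zones" -lt "$EXPECTED_ZONES" ]; then
    echo "expected at least ${EXPECTED_ZONES} zones" >&2
    exit 1
  fi
fi
```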

What can be improved?

- We can add pod anti-affinity rules to the deployment manifests to guarantee that each replica is scheduled on a separate node

- We can improve how `main.tf` references `subnet.ids` from the module, by using the module's output directly instead of reading it from the remote state.
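
The anti-affinity improvement could look like the strategic-merge patch below, which forbids two pods with the same `app` label from landing on the same node (`topologyKey: kubernetes.io/hostname`). This is a sketch under assumptions: the Deployment names and the `app=<name>` pod label are guesses at what the manifests use, and `anti_affinity_patch` is a hypothetical helper. The same `affinity` block can instead be added directly to each manifest under `spec.template.spec`.

```shell
#!/usr/bin/env bash
# Sketch: required pod anti-affinity applied as a strategic-merge patch.
# Assumption: the Deployment name equals its pods' "app" label value.
set -euo pipefail

# Print a patch that forbids two pods labelled app=<name> on the same node.
anti_affinity_patch() {
  local app="$1"
  cat <<EOF
spec:
  strategy:
    # One replica per node leaves no room for a surge pod, so replace
    # pods one at a time instead (otherwise the rollout can stall).
    rollingUpdate:
      maxSurge: 0
      maxUnavailable: 1
  template:
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchLabels:
                  app: ${app}
              topologyKey: kubernetes.io/hostname
EOF
}

# Only patch the cluster when explicitly asked, e.g. ./anti-affinity.sh run backend
if [ "${1:-}" = "run" ]; then
  app="${2:-backend}"
  kubectl patch deployment "$app" --patch "$(anti_affinity_patch "$app")"
  kubectl rollout status "deployment/${app}"
fi
```

Because the rule is required rather than preferred, any replicas beyond the number of nodes (3 here) stay `Pending`. The `maxSurge: 0` / `maxUnavailable: 1` strategy matters because, with default rolling-update settings and 3 replicas on 3 nodes, the surge pod has no eligible node and no old pod is removed, so the rollout would not progress.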