A workshop on how to prototype and deploy a visual search DL model based on Siamese Mask R-CNN

DISCLAIMER: this is a prototype, so the code base is not optimized for production.

Audience level: Beginner - Intermediate

Task: Prototype a visual search application with human-like flexibility

Limitations: large annotated datasets are prohibitively expensive, so only small data is available.

Solution: One-shot instance segmentation with Siamese Mask R-CNN

During the workshop, we will learn more about one-shot instance segmentation and cover the building blocks of the Siamese Mask R-CNN model. Next, we will walk through a single deployment. Lastly, we will discuss possible limitations and improvements. By the end of the workshop, participants will have a basic understanding of how to prototype and deploy human-like visual search DL models.

One-Shot Instance Segmentation can be summed up as follows: given a query image and a reference image showing an object of a novel category, detect and segment all instances of that category in the query image. Note that no ground-truth annotations of the reference categories are used during training.
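To make "no ground-truth annotations of the reference categories are used during training" concrete, here is a minimal sketch of a category split. It assumes the 80 COCO categories are indexed 0..79 and divided into four splits, each holding out every fourth category for one-shot evaluation. Treat the exact split as an assumption and check it against the paper and the repository config; `one_shot_split` is a hypothetical helper, not part of this repo.

```python
"""Sketch: hold out every 4th COCO category for one-shot evaluation.

Assumption (not taken from this repo): categories are 0-based indices 0..79
and split `s` (0-3) holds out the indices where `index % 4 == s`.
"""

NUM_COCO_CLASSES = 80  # COCO detection has 80 object categories


def one_shot_split(split: int, num_classes: int = NUM_COCO_CLASSES):
    """Return (train_classes, test_classes) as lists of 0-based class indices."""
    if not 0 <= split < 4:
        raise ValueError("split must be in 0..3")
    # Held-out (novel) categories: never annotated during training.
    test_classes = [c for c in range(num_classes) if c % 4 == split]
    # Seen categories: the only ones whose annotations the model trains on.
    train_classes = [c for c in range(num_classes) if c % 4 != split]
    return train_classes, test_classes


if __name__ == "__main__":
    train, test = one_shot_split(0)
    print(len(train), len(test))  # 60 20
    assert set(train).isdisjoint(test)
```

At test time, the reference image would always come from `test_classes`, so the model must match a category it has never seen labels for.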

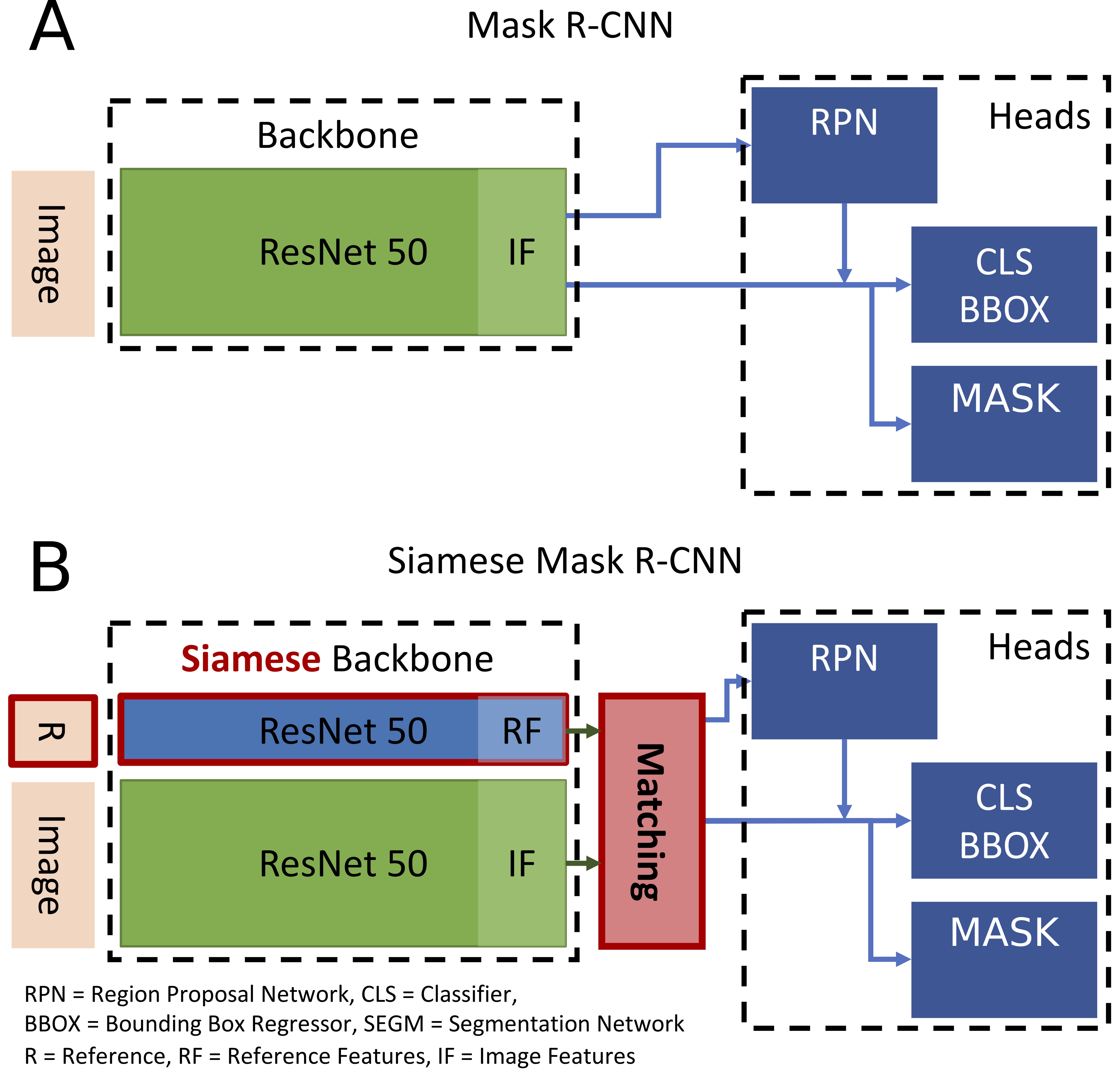

Siamese Mask R-CNN extends Mask R-CNN (a state-of-the-art object detection and segmentation system) with a Siamese backbone and a matching procedure to perform this type of visual search. For more details, please read the original paper.
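The matching procedure can be illustrated with a small NumPy sketch, based on our reading of the paper: the reference features are pooled into one embedding, the absolute (L1) difference to every scene location is computed, the result is concatenated with the scene features, and a 1x1 convolution mixes the channels. This is not code from this repository; the real model does this inside the network with learned weights, and the function name, shapes and random weights below are illustrative assumptions.

```python
import numpy as np


def l1_match(scene_feats: np.ndarray, ref_feats: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Sketch of an L1-difference matching step (illustrative, not the repo's code).

    scene_feats: (H, W, C) feature map of the query (scene) image.
    ref_feats:   (h, w, C) feature map of the reference image.
    weights:     (2C, C_out) weights of a 1x1 convolution (learned in a real model).
    Returns an (H, W, C_out) map that downstream detection heads could consume.
    """
    # Global average pooling reduces the reference to a single C-dim embedding.
    ref_embedding = ref_feats.mean(axis=(0, 1))  # (C,)
    # Absolute difference between every scene location and the embedding.
    l1_diff = np.abs(scene_feats - ref_embedding)  # broadcasts to (H, W, C)
    # Concatenate scene features with the difference along the channel axis.
    combined = np.concatenate([scene_feats, l1_diff], axis=-1)  # (H, W, 2C)
    # A 1x1 convolution is a per-pixel matrix multiply over channels.
    return combined @ weights  # (H, W, C_out)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    scene = rng.standard_normal((32, 32, 256))
    ref = rng.standard_normal((8, 8, 256))
    w = rng.standard_normal((512, 256)) * 0.01
    print(l1_match(scene, ref, w).shape)  # (32, 32, 256)
```

Locations whose features resemble the reference embedding produce small difference channels, which is the signal the detection heads can learn to use.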

- miniconda and the other packages listed in `environment.yml`.

Anyone with a Unix machine (Mac or any Linux distro) can download the repo and run it.

- Clone this repository
- Install dependencies

```bash
cd Small_data_visual_search_app
conda env create -f environment.yml
conda activate updated-app
```

- Create folders

```bash
mkdir -p checkpoints data/coco
```

- Download the pretrained weights from the releases menu and place them in the `checkpoints` folder.
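If you prefer to script the folder setup, the sketch below does the same as `mkdir -p checkpoints data/coco` and then lists weight files in `checkpoints`. It assumes the released weights are Keras `.h5` files (typical for Keras-based Mask R-CNN code, but not confirmed here); adjust the glob pattern if the release uses another format. `prepare_folders` is a hypothetical helper.

```python
from pathlib import Path


def prepare_folders(root: Path = Path(".")) -> list:
    """Create checkpoints/ and data/coco/ (like `mkdir -p`) and list weight files found."""
    checkpoints = root / "checkpoints"
    for folder in (checkpoints, root / "data" / "coco"):
        folder.mkdir(parents=True, exist_ok=True)  # no error if it already exists
    # Assumption: weights are .h5 files; change the pattern if the release differs.
    weights = sorted(p.name for p in checkpoints.glob("*.h5"))
    if not weights:
        print("No .h5 files in checkpoints/ yet: download them from the releases menu.")
    return weights


if __name__ == "__main__":
    print(prepare_folders())
```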

- Prepare the MS COCO dataset

The inference part requires the COCO API (pycocotools), the MS COCO val2017 and test2017 images, and the train/val2017 annotations, all placed in the `data/coco` folder.

- First, install pycocotools:

```bash
cd data/coco
git clone https://github.com/waleedka/coco
cd coco/PythonAPI
make install
# return to the workshop root folder
cd ../../../..
```
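Before running the download script, you can check that the disk holding the workshop folder has the 8 GB of free space it needs. This sketch uses the standard-library `shutil.disk_usage`; it treats 1 GB as 10^9 bytes (an assumption, the README does not say GB or GiB), and `has_enough_space` is a hypothetical helper.

```python
import shutil

REQUIRED_GB = 8  # free space the README asks for before downloading COCO


def has_enough_space(path: str = ".", required_gb: float = REQUIRED_GB) -> bool:
    """Return True if the filesystem holding `path` has at least `required_gb` GB free."""
    free_gb = shutil.disk_usage(path).free / 1e9  # decimal gigabytes
    print(f"{free_gb:.1f} GB free at {path!r}")
    return free_gb >= required_gb


if __name__ == "__main__":
    # Run from the workshop root folder.
    if not has_enough_space("."):
        raise SystemExit("Free up disk space before running coco_loader.py")
```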

- Second, from the workshop root folder, run the Python script to download the 2017 val and test images and the train/val 2017 annotations. At least 8 GB of free disk space is required.

```bash
python data_utilities/coco_loader.py --dataset=data/coco/ --year=2017 --download=True
```
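After the download finishes, a quick sanity check can confirm that pycocotools is importable and the expected files are in place. The folder layout below is an assumption modelled on common Mask R-CNN COCO loaders (`val2017/`, `test2017/`, `annotations/instances_*.json`); compare it with what `coco_loader.py` actually writes before relying on it. Both helpers are hypothetical.

```python
import importlib.util
from pathlib import Path

# Assumed layout under data/coco; verify against coco_loader.py's output.
EXPECTED = [
    "val2017",
    "test2017",
    "annotations/instances_train2017.json",
    "annotations/instances_val2017.json",
]


def missing_coco_files(coco_dir: str = "data/coco") -> list:
    """Return the expected COCO paths that do not exist under `coco_dir`."""
    root = Path(coco_dir)
    return [rel for rel in EXPECTED if not (root / rel).exists()]


def pycocotools_installed() -> bool:
    """True if `pycocotools` can be imported in the active environment."""
    return importlib.util.find_spec("pycocotools") is not None


if __name__ == "__main__":
    print("pycocotools installed:", pycocotools_installed())
    print("missing:", missing_coco_files() or "nothing")
```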

- Follow the instructions in the workshop slides.

If you have questions or recommendations, or need help with troubleshooting, ping me on LinkedIn.