Table of contents:

| Preparation | Intro | Poking pods |
|---|---|---|
| Storage | Network | Security |
| Observability | Vaccination | References |

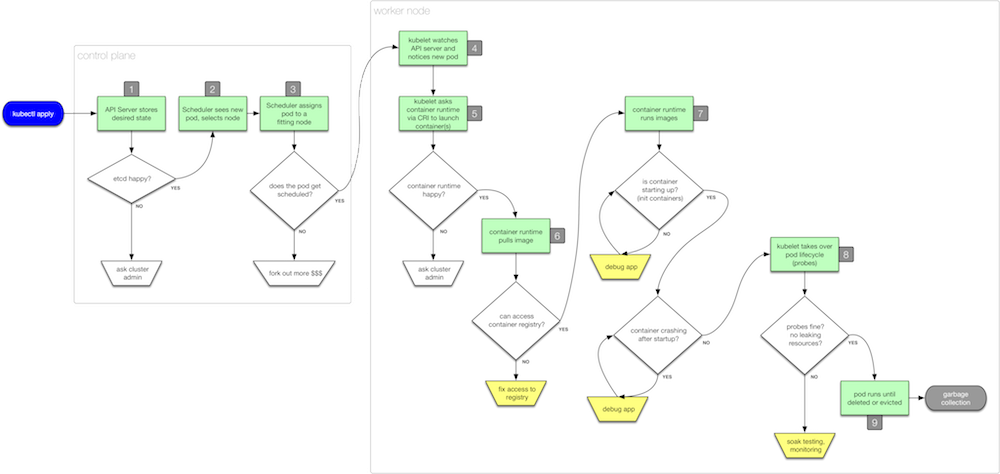

To demonstrate common issues and failures, and how to fix them, I use the commands and resources shown below.

NOTE: whenever you see a 📄 icon, it means this is a reference to the official Kubernetes docs.

- Kubernetes 1.16 or higher

Before starting, set up:

# create the namespace we'll be operating in:

kubectl create ns vnyc

# in a different tmux pane, keep an eye on the resources:

watch kubectl -n vnyc get all

Using 00_intro.yaml:

kubectl -n vnyc apply -f 00_intro.yaml

kubectl -n vnyc describe deploy/unhappy-camper

THEPOD=$(kubectl -n vnyc get po -l=app=whatever --output=jsonpath='{.items[*].metadata.name}')

kubectl -n vnyc describe po/$THEPOD

kubectl -n vnyc logs $THEPOD

kubectl -n vnyc exec -it $THEPOD -- sh

kubectl -n vnyc delete deploy/unhappy-camper
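The "look up the pod by label, then inspect it" pattern above recurs throughout this walkthrough. Here is a minimal sketch of a wrapper for it; the function name `pods_for` is my own invention and not part of the original resources.

```shell
# Hypothetical helper: print the names of all pods in a namespace
# that match a label selector.
pods_for() {
  local ns="$1" selector="$2"
  # Quoting the JSONPath keeps the shell from treating [*] as a glob pattern.
  kubectl -n "$ns" get po -l "$selector" \
    --output=jsonpath='{.items[*].metadata.name}'
}

# Usage, assuming the intro deployment is running:
#   THEPOD=$(pods_for vnyc app=whatever)
#   kubectl -n vnyc describe "po/$THEPOD"
```

Note that the selector can match more than one pod, in which case the output is a space-separated list of names.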


Using 01_pp_image.yaml:

# let's deploy a confused image and look for the error:

kubectl -n vnyc apply -f 01_pp_image.yaml

kubectl -n vnyc get events | grep confused | grep Error

# fix it by specifying the correct image:

kubectl -n vnyc patch deployment confused-imager \

--patch '{ "spec" : { "template" : { "spec" : { "containers" : [ { "name" : "something" , "image" : "mhausenblas/simpleservice:0.5.0" } ] } } } }'

kubectl -n vnyc delete deploy/confused-imager
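Besides grepping events, the reason a container is stuck can be read from the pod status, and the image can be corrected without hand-writing a JSON patch. The following is a sketch under assumptions: the function names are made up, and the container name `something` is taken from the patch above.

```shell
# Hypothetical helper: list each pod with the reason its containers are waiting
# (for example ErrImagePull or ImagePullBackOff). The reason column stays empty
# for pods whose containers are not waiting.
waiting_reasons() {
  local ns="$1"
  kubectl -n "$ns" get po \
    --output=jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.containerStatuses[*].state.waiting.reason}{"\n"}{end}'
}

# Same fix as the patch above, expressed with `kubectl set image`,
# which takes <container>=<image> pairs.
fix_image() {
  kubectl -n vnyc set image deployment/confused-imager \
    something=mhausenblas/simpleservice:0.5.0
}

# Usage:
#   waiting_reasons vnyc
#   fix_image
```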

Relevant real-world examples on StackOverflow:

- Kubernetes how to debug CrashLoopBackoff

- Kubernetes imagePullSecrets not working; getting “image not found”

- Trying to create a Kubernetes deployment but it shows 0 pods available

Using 02_pp_oomer.yaml and 02_pp_oomer-fixed.yaml:

# prepare a greedy fellow that will OOM:

kubectl -n vnyc apply -f 02_pp_oomer.yaml

# wait > 5s and then check mem in container:

kubectl -n vnyc exec -it $(kubectl -n vnyc get po -l=app=oomer --output=jsonpath='{.items[*].metadata.name}') -c greedymuch -- cat /sys/fs/cgroup/memory/memory.limit_in_bytes /sys/fs/cgroup/memory/memory.usage_in_bytes

kubectl -n vnyc describe po $(kubectl -n vnyc get po -l=app=oomer --output=jsonpath='{.items[*].metadata.name}')

# fix the issue:

kubectl -n vnyc apply -f 02_pp_oomer-fixed.yaml

# wait > 20s

kubectl -n vnyc exec -it $(kubectl -n vnyc get po -l=app=oomer --output=jsonpath='{.items[*].metadata.name}') -c greedymuch -- cat /sys/fs/cgroup/memory/memory.limit_in_bytes /sys/fs/cgroup/memory/memory.usage_in_bytes

kubectl -n vnyc delete deploy wegotan-oomer
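The two `cat` calls above print raw byte counts, limit first and usage second, which are hard to compare at a glance. A small sketch that turns them into a summary follows; the function name and the sample numbers are illustrative only. On nodes running cgroup v2 the equivalent files are, as far as I know, `/sys/fs/cgroup/memory.max` and `/sys/fs/cgroup/memory.current`.

```shell
# Hypothetical helper: summarize a memory limit and current usage (both in bytes).
# Integer arithmetic only, so all values are rounded down.
mem_summary() {
  local limit="$1" usage="$2"
  local mib=$((1024 * 1024))
  echo "$((usage / mib)) MiB used of $((limit / mib)) MiB ($((usage * 100 / limit))%)"
}

# Made-up sample values: a 200 MiB limit with 150 MiB in use.
mem_summary 209715200 157286400
# prints: 150 MiB used of 200 MiB (75%)
```

A percentage that keeps climbing towards 100 between two runs is a strong hint that the container is about to be OOM-killed.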

Relevant real-world examples on StackOverflow:

- InfluxDB container dies over time, and can't restart

- AWS deployment with kubernetes 1.7.2 continuously running in pod getting killed and restarted

Using 03_pp_logs.yaml:

kubectl -n vnyc apply -f 03_pp_logs.yaml

# nothing to see here:

kubectl -n vnyc describe deploy/hiccup

# but I see it in the logs:

kubectl -n vnyc logs --follow $(kubectl -n vnyc get po -l=app=hiccup --output=jsonpath='{.items[*].metadata.name}')

kubectl -n vnyc delete deploy hiccup
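When a container has already restarted, the interesting output is often in the previous instance's logs rather than the current one's. Here is a sketch that prints both for every pod matching a label; the function name is hypothetical and it assumes single-container pods (add `-c <container>` otherwise).

```shell
# Hypothetical helper: show recent logs for every pod matching a label,
# followed by the logs of the previous container instance, if one exists.
recent_logs() {
  local ns="$1" selector="$2" pod
  for pod in $(kubectl -n "$ns" get po -l "$selector" \
      --output=jsonpath='{.items[*].metadata.name}'); do
    echo "== $pod (current) =="
    kubectl -n "$ns" logs "$pod" --timestamps --since=10m
    echo "== $pod (previous, if any) =="
    # --previous fails when the container has never restarted; ignore that.
    kubectl -n "$ns" logs "$pod" --previous --timestamps || true
  done
}

# Usage:
#   recent_logs vnyc app=hiccup
```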

Relevant real-world examples on StackOverflow:

- My kubernetes pods keep crashing with “CrashLoopBackOff” but I can't find any log

- Kubernetes Readiness probe failed error

- Kubernetes error: validating data found invalid field env for v1 PodSpec

References:

- Debugging microservices - Squash vs. Telepresence

- Debugging and Troubleshooting Microservices in Kubernetes with Ray Tsang (Google)

- Troubleshooting Kubernetes Using Logs

Using 04_storage-failedmount.yaml and 04_storage-failedmount-fixed.yaml:

kubectl -n vnyc apply -f 04_storage-failedmount.yaml

# has the data been written?

kubectl -n vnyc exec -it $(kubectl -n vnyc get po -l=app=wheresmyvolume --output=jsonpath='{.items[*].metadata.name}') -c writer -- cat /tmp/out/data

# has the data been read in?

kubectl -n vnyc exec -it $(kubectl -n vnyc get po -l=app=wheresmyvolume --output=jsonpath='{.items[*].metadata.name}') -c reader -- cat /tmp/in/data

kubectl -n vnyc describe po $(kubectl -n vnyc get po -l=app=wheresmyvolume --output=jsonpath='{.items[*].metadata.name}')

kubectl -n vnyc apply -f 04_storage-failedmount-fixed.yaml

kubectl -n vnyc delete deploy wheresmyvolume
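When one container writes data that another cannot read, it helps to put each container's volume mounts next to the volumes the pod actually defines. This is a sketch only: the function name is made up, and I am not assuming anything about what exactly is wrong in `04_storage-failedmount.yaml`.

```shell
# Hypothetical helper: for one pod, print a line per container with
# "container<TAB>mounted volume names<TAB>mount paths",
# followed by a line with the names of the volumes defined on the pod.
show_mounts() {
  local ns="$1" pod="$2"
  kubectl -n "$ns" get po "$pod" \
    --output=jsonpath='{range .spec.containers[*]}{.name}{"\t"}{.volumeMounts[*].name}{"\t"}{.volumeMounts[*].mountPath}{"\n"}{end}'
  kubectl -n "$ns" get po "$pod" \
    --output=jsonpath='{.spec.volumes[*].name}{"\n"}'
}

# Usage (pod name looked up via the app=wheresmyvolume label first):
#   show_mounts vnyc "$THEPOD"
```

Two containers that are meant to share data should list the same volume name; differing names or a missing mount are worth a closer look.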

Relevant real-world examples on StackOverflow:

- How to find out why mounting an emptyDir volume fails in Kubernetes?

- Kubernetes NFS volume mount fail with exit status 32

References:

- Debugging Kubernetes PVCs

- For further references, see Stateful Kubernetes

Using 05_network-wrongsel.yaml and 05_network-wrongsel-fixed.yaml:

kubectl -n vnyc run webserver --image nginx --port 80

kubectl -n vnyc apply -f 05_network-wrongsel.yaml

kubectl -n vnyc run -it --rm debugpod --restart=Never --image=centos:7 -- curl webserver.vnyc

kubectl -n vnyc run -it --rm debugpod --restart=Never --image=centos:7 -- ping webserver.vnyc

kubectl -n vnyc run -it --rm debugpod --restart=Never --image=centos:7 -- ping $(kubectl -n vnyc get po -l=run=webserver --output=jsonpath='{.items[*].status.podIP}')

kubectl -n vnyc apply -f 05_network-wrongsel-fixed.yaml

kubectl -n vnyc delete deploy webserver
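A service with a wrong selector typically shows up as a service without endpoints. The sketch below prints the selector, the endpoint IPs, and the pod labels side by side so a mismatch is easy to spot; the function name is hypothetical.

```shell
# Hypothetical helper: compare what a service selects with what the pods offer.
check_service() {
  local ns="$1" svc="$2"
  echo "selector:"
  kubectl -n "$ns" get svc "$svc" --output=jsonpath='{.spec.selector}{"\n"}'
  echo "endpoints (empty means the selector matches no ready pod):"
  kubectl -n "$ns" get endpoints "$svc" \
    --output=jsonpath='{.subsets[*].addresses[*].ip}{"\n"}'
  echo "pod labels:"
  kubectl -n "$ns" get po --show-labels
}

# Usage:
#   check_service vnyc webserver
```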

Other scenarios often found:

- See an error message that says something like `connection refused`? You could be hitting the `127.0.0.1` issue; the solution is to make the app listen on `0.0.0.0` rather than on localhost. Further, see also some discussion here.

- Missing firewall rules, from cluster-internal open ports to communication between clusters, can cause all kinds of issues. How exactly you go about it very much depends on the environment (AWS, Azure, GCP, on-premises, etc.), and it is most certainly an infra admin task rather than an appops task.

- Taking a pod offline for debugging: on the pod, simply remove the relevant label(s) the service uses in its `selector`. That removes the pod from the pool of endpoints the service sends traffic to while leaving the pod running, ready for you to `kubectl exec -it` into it.
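Taking a pod offline by removing a label can be sketched as follows. The function name is my own, and the label key `run` in the usage line is an assumption based on the `-l=run=webserver` selector used earlier. Keep in mind that if a ReplicaSet selects its pods by the same label, it will start a replacement pod once the label is gone.

```shell
# Hypothetical helper: remove one label from a pod so that services
# selecting on that label stop routing traffic to it.
take_offline() {
  local ns="$1" pod="$2" key="$3"
  # A trailing dash after the label key tells kubectl to remove that label.
  kubectl -n "$ns" label po "$pod" "${key}-"
}

# Usage:
#   take_offline vnyc "$THEPOD" run
#   kubectl -n vnyc exec -it "$THEPOD" -- sh
```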

Relevant real-world examples on StackOverflow:

- Connection Refused error when connecting to Kubernetes Redis Service

- “kubectl get pods” showing STATUS - ImagePullbackOff

- Service not exposing in kubernetes

- Kubernetes: Can not curl minikube pod

References:

- Debug Services 📄

- Troubleshooting Kubernetes Networking Issues

- For further references, see Container Networking

kubectl -n vnyc create sa prober

kubectl -n vnyc run -it --rm probepod --serviceaccount=prober --restart=Never --image=centos:7 -- sh

# in the container; will result in a 403, b/c we don't have the necessary permissions:

export CURL_CA_BUNDLE=/var/run/secrets/kubernetes.io/serviceaccount/ca.crt

APISERVERTOKEN=$(cat /var/run/secrets/kubernetes.io/serviceaccount/token)

curl -H "Authorization: Bearer $APISERVERTOKEN" https://kubernetes.default/api/v1/namespaces/vnyc/pods

# in a different tmux pane, verify whether the SA is actually allowed to list pods:

kubectl -n vnyc auth can-i list pods --as=system:serviceaccount:vnyc:prober

# … seems not to be the case, so give sufficient permissions:

kubectl create clusterrole podreader \

--verb=get --verb=list \

--resource=pods

kubectl -n vnyc create rolebinding allowpodprobes \

--clusterrole=podreader \

--serviceaccount=vnyc:prober \

--namespace=vnyc
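After creating the binding it is worth repeating the permission check. The sketch below wraps `kubectl auth can-i` so the impersonated user name is built consistently; both function names are hypothetical.

```shell
# Service accounts authenticate as system:serviceaccount:<namespace>:<name>.
sa_user() {
  echo "system:serviceaccount:$1:$2"
}

# Hypothetical helper: ask the API server whether a service account
# may perform a verb on a resource in its own namespace.
can_sa() {
  local ns="$1" sa="$2" verb="$3" resource="$4"
  kubectl -n "$ns" auth can-i "$verb" "$resource" --as="$(sa_user "$ns" "$sa")"
}

# Usage; should answer "yes" once the role binding above exists:
#   can_sa vnyc prober list pods
```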

# clean up

kubectl delete clusterrole podreader && kubectl delete ns vnyc

Relevant real-world examples on StackOverflow:

- Accessing Kubernetes API from pod fails although roles are configured is configured

- How to deploy a deployment in another namespace in Kubernetes?

- Unable to read my newly created Kubernetes secret

For references, see kubernetes-security.info.

Observability ranges from metrics (Prometheus and Grafana) to logs (EFK/ELK stack) to tracing (OpenCensus and OpenTracing).

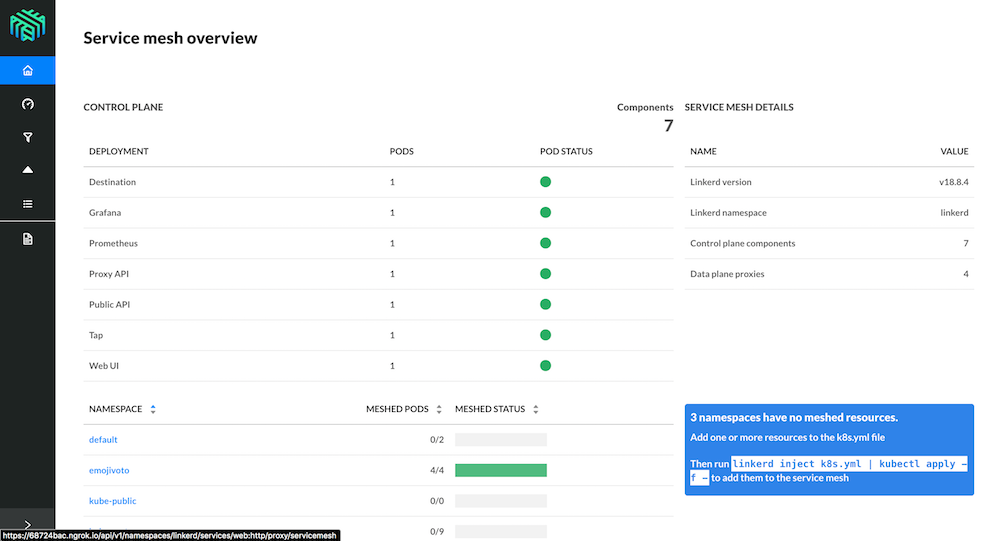

Show Linkerd 2.0 in action using this Katacoda scenario as a starting point.

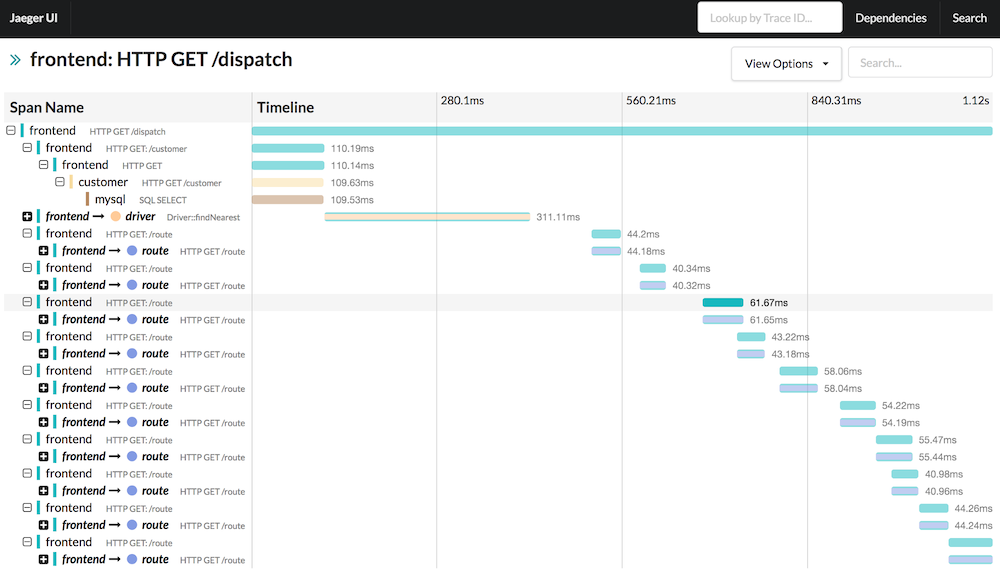

Show Jaeger 1.6 in action using this Katacoda scenario.

References:

- Logs and Metrics

- Evolution of Monitoring and Prometheus

- The life of a span

- Distributed Tracing with Jaeger & Prometheus on Kubernetes

- Debugging: KubeSquash

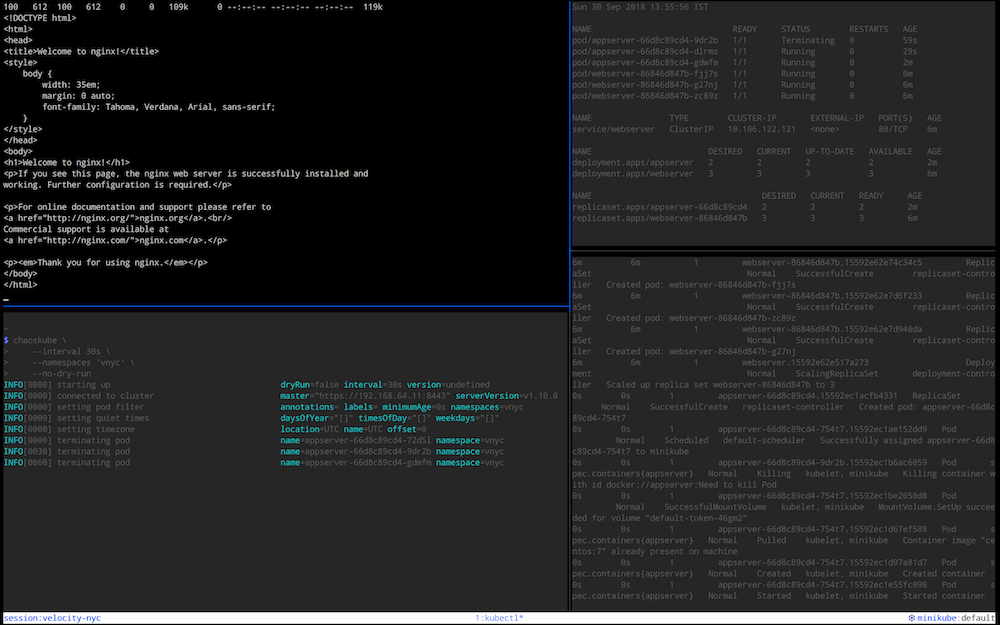

Show chaoskube in action, killing off random pods in the vnyc namespace.

We have the following setup:

                                                              +----------------+
                                                              |                |
                                                      +-----> | webserver/pod1 |
                                                      |       |                |
+----------------+                                    |       +----------------+
|                |                                    |       +----------------+
| appserver/pod1 +--------+        +-------------+    |       |                |
|                |        |     +--+             |    +-----> | webserver/pod2 |
+----------------+        |     X                |    |       |                |
                          |     X                |    |       +----------------+
                          |     X                |    |       +----------------+
                          v     X                |    |       |                |
                                X svc/webserver  +----+-----> | webserver/pod3 |
                          ^     X                |    |       |                |
+----------------+        |     X                |    |       +----------------+
|                |        |     X                |    |       +----------------+
| appserver/pod2 +--------+     X                |    |       |                |
|                |              +--+             |    +-----> | webserver/pod4 |
+----------------+                 +-------------+    |       |                |
                                                      |       +----------------+
                                                      |       +----------------+
                                                      |       |                |
                                                      +-----> | webserver/pod5 |
                                                              |                |
                                                              +----------------+

That is, a webserver running with five replicas along with a service as well as an appserver running with two replicas that queries said service.

# let's create our victims, that is webservers and appservers:

kubectl create ns vnyc

kubectl -n vnyc run webserver --image nginx --port 80 --replicas 5

kubectl -n vnyc expose deploy/webserver

kubectl -n vnyc run appserver --image centos:7 --replicas 2 -- sh -c "while true; do curl webserver ; sleep 10 ; done"

kubectl -n vnyc logs deploy/appserver --follow

# also keep an eye on the events generated:

kubectl -n vnyc get events --watch

# now release the chaos monkey:

chaoskube \

--interval 30s \

--namespaces 'vnyc' \

--no-dry-run

kubectl delete ns vnyc
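A caveat on the setup commands above: in newer kubectl releases (1.18 onward, as far as I'm aware) `kubectl run` creates a single pod rather than a deployment and no longer honours `--replicas`. A rough equivalent using `kubectl create deployment` might look like the sketch below. It assumes a kubectl recent enough to support `--replicas` and `--port` on that command, and the function name is made up. Deployments created this way are labelled `app=<name>` rather than `run=<name>`, so label selectors need adjusting.

```shell
# Hypothetical helper: create the webserver (5 replicas, exposed as a service)
# and the appserver (2 replicas polling the service) as deployments.
create_victims() {
  local ns="$1"
  kubectl -n "$ns" create deployment webserver --image=nginx --port=80 --replicas=5
  kubectl -n "$ns" expose deploy/webserver
  kubectl -n "$ns" create deployment appserver --image=centos:7 --replicas=2 \
    -- sh -c "while true; do curl webserver ; sleep 10 ; done"
}

# Usage:
#   kubectl create ns vnyc
#   create_victims vnyc
```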

And here's a screenshot of chaoskube in action, with all the above commands applied:

References:

- Kubernetes: five steps to well-behaved apps

- Kubernetes Best Practices

- Developing on Kubernetes

- Kubernetes Application Operator Basics

- Using chaoskube with OpenEBS

- Tooling:

- Troubleshoot Applications 📄

- Troubleshoot Clusters 📄

- A site dedicated to Kubernetes Troubleshooting

- Debug a Go Application in Kubernetes from IDE

- CrashLoopBackoff, Pending, FailedMount and Friends: Debugging Common Kubernetes Cluster (KubeCon NA 2017): video and slide deck

- 10 Most Common Reasons Kubernetes Deployments Fail: Part 1 and Part 2