Do this first: in /usr/include/pcl-1.10/pcl/filters/voxel_grid.h, change the loop header on line 340 and on line 669.

old: for (Eigen::Index ni = 0; ni < relative_coordinates.cols (); ni++)

new: for (int ni = 0; ni < relative_coordinates.cols (); ni++)

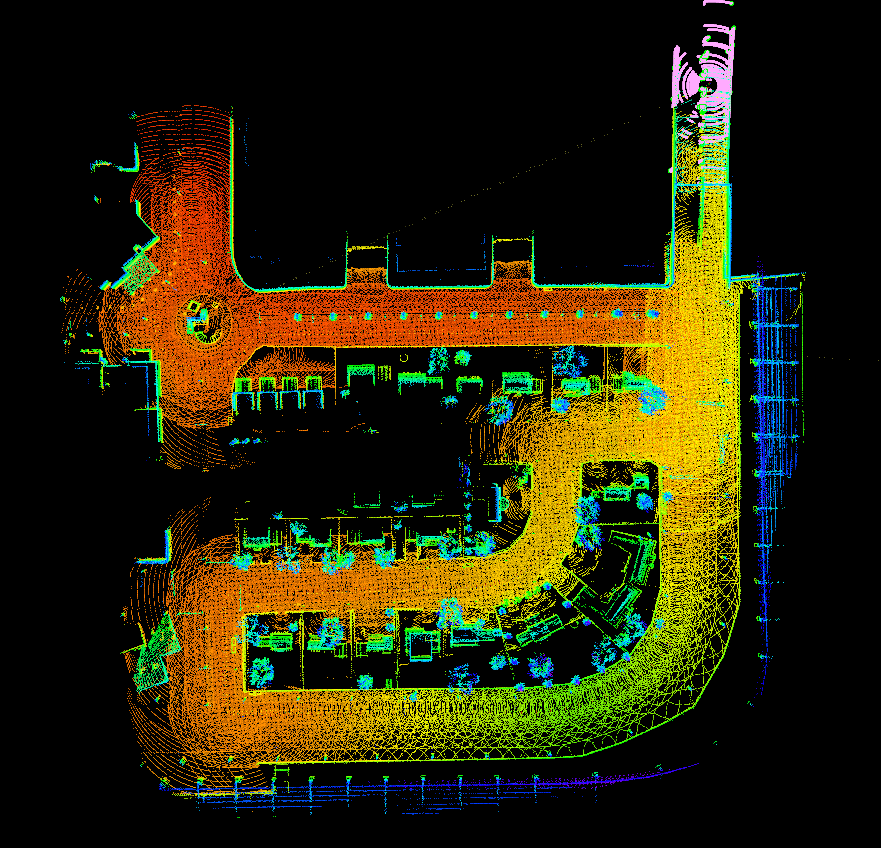

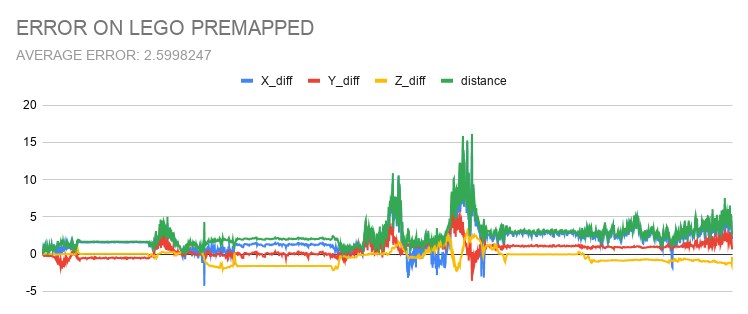

This is a customised LeGO-LOAM that saves the mapping result during the first run and then localises in the premapped environment on later runs. The map is stored in the /tmp/dump folder, in the dump format readable by interactive slam, and is read back from the same folder when localising.

For the other functionalities, see the original LeGO-LOAM and facontidavide's fork.

This code uses the LeGO-LOAM-BOR repository as its base because of its speed improvements over the original LeGO-LOAM and its map-saving code.

- ROS (tested with indigo and kinetic)

- gtsam (Georgia Tech Smoothing and Mapping library, 4.0.0-alpha2)

wget -O ~/Downloads/gtsam.zip https://github.com/borglab/gtsam/archive/4.0.0-alpha2.zip

cd ~/Downloads/ && unzip gtsam.zip -d ~/Downloads/

cd ~/Downloads/gtsam-4.0.0-alpha2/

mkdir build && cd build

cmake ..

sudo make install

You can use the following commands to download and compile the package.

cd ~/catkin_ws/src

git clone https://github.com/Nishantgoyal918/LeGO-LOAM-BOR.git

cd ..

catkin_make

To save the map, run:

roslaunch lego_loam_bor createMap.launch rosbag:=/path/to/your/rosbag lidar_topic:=/velodyne_points

To localise using the saved map, run:

roslaunch lego_loam_bor localization.launch rosbag:=/path/to/your/rosbag lidar_topic:=/velodyne_points

Change the rosbag and lidar_topic parameters as needed.

The initial position of the vehicle can also be defined in /LeGO-LOAM-BOR/LeGO-LOAM/initalRobotPose.txt (6 space-separated values in the order x, y, z, roll, pitch, yaw).
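The six-value format described above can be read with a few lines of C++. The following is only a minimal sketch, not the code this repository uses: the `InitialPose` struct and the `parseInitialPose` function are hypothetical names introduced for illustration. The units of the angles are not stated here, so the values are passed through unchanged.

```cpp
#include <array>
#include <cassert>
#include <sstream>
#include <string>

// Hypothetical container for the six values, in the order x, y, z, roll, pitch, yaw.
struct InitialPose {
  double x, y, z, roll, pitch, yaw;
};

// Parses "x y z roll pitch yaw" from a whitespace-separated string.
// Returns false if fewer than six numbers are present or if extra tokens follow.
bool parseInitialPose(const std::string& text, InitialPose& out) {
  std::istringstream in(text);
  std::array<double, 6> v{};
  for (double& value : v) {
    if (!(in >> value)) return false;  // missing or non-numeric value
  }
  std::string extra;
  if (in >> extra) return false;  // reject a seventh token
  out = InitialPose{v[0], v[1], v[2], v[3], v[4], v[5]};
  return true;
}
```

Under this sketch, a file containing `1.0 2.0 0.5 0 0 1.5` would give a pose at x = 1.0, y = 2.0, z = 0.5 with a yaw of 1.5, while a line with too few or too many values is rejected instead of being silently truncated.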

Some sample bags can be downloaded from here.

This is a fork of the original LeGO-LOAM.

The purpose of this fork is:

- To improve the quality of the code, making it more readable, consistent and easier to understand and modify.

- To remove hard-coded values and use proper configuration files to describe the hardware.

- To improve performance, in terms of amount of CPU used to calculate the same result.

- To convert a multi-process application into a single-process / multi-threading one; this makes the algorithm more deterministic and slightly faster.

- To make it easier and faster to work with rosbags: processing a rosbag should be done at maximum speed allowed by the CPU and in a deterministic way (usual speed improvement in the order of 5X-10X).

- As a consequence of the previous point, creating unit and regression tests will be easier.

This repository contains code for a lightweight and ground-optimized lidar odometry and mapping (LeGO-LOAM) system for ROS-compatible UGVs. The system takes in point clouds from a Velodyne VLP-16 lidar (placed horizontally) and optional IMU data as inputs. It outputs a 6D pose estimate in real time. A demonstration of the system can be found here: https://www.youtube.com/watch?v=O3tz_ftHV48

LeGO-LOAM is specifically optimized for a horizontally placed lidar on a ground vehicle. It assumes there is always a ground plane in the scan. The UGV we are using is a Clearpath Jackal.

The package performs segmentation before feature extraction.

Lidar odometry performs a two-step Levenberg-Marquardt optimization to get the 6D transformation.

To customize the behavior of the algorithm or to use a lidar different from VLP-16, edit the file config/loam_config.yaml.

One important thing to keep in mind is that our current implementation for range image projection is only suitable for sensors that have evenly distributed channels. If you want to use our algorithm with Velodyne VLP-32c or HDL-64e, you need to write your own implementation for such projection.

If the point cloud is not projected properly, you will lose many points and performance will suffer.
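The requirement for evenly distributed channels follows from how a range image row is typically computed: the elevation angle of a point is divided by one constant vertical spacing. The sketch below illustrates that idea and is not the repository's exact projection code. The `LidarModel` and `Pixel` structs, the `projectPoint` function, and the field names are hypothetical and do not necessarily match the keys in config/loam_config.yaml. The default values assume a VLP-16 (16 channels spaced 2 degrees apart from -15 to +15 degrees) spinning at 10 Hz, and the column convention is a simple one chosen for illustration.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical sensor description; defaults assume a VLP-16 at 10 Hz.
struct LidarModel {
  int n_scan = 16;           // number of vertical channels (image rows)
  int horizon_scan = 1800;   // columns per revolution (360 / ang_res_x)
  double ang_res_x = 0.2;    // horizontal resolution, degrees
  double ang_res_y = 2.0;    // constant spacing between channels, degrees
  double ang_bottom = 15.0;  // magnitude of the lowest channel's elevation, degrees
};

struct Pixel {
  int row;
  int col;
  bool valid;
};

// Maps a point (x, y, z) in the sensor frame to a range image cell.
Pixel projectPoint(const LidarModel& m, double x, double y, double z) {
  assert(m.ang_res_x > 0.0 && m.ang_res_y > 0.0);
  const double rad_to_deg = 180.0 / std::acos(-1.0);
  Pixel p{-1, -1, false};

  // Row: elevation above the lowest channel divided by a constant spacing.
  // This linear mapping is what breaks for unevenly spaced channels.
  const double elevation = std::atan2(z, std::sqrt(x * x + y * y)) * rad_to_deg;
  const long row = std::lround((elevation + m.ang_bottom) / m.ang_res_y);
  if (row < 0 || row >= m.n_scan) return p;  // outside the vertical field of view

  // Column: azimuth shifted to [0, 360] degrees, then wrapped to [0, horizon_scan).
  const double azimuth = std::atan2(y, x) * rad_to_deg;
  const long col = std::lround((azimuth + 180.0) / m.ang_res_x) % m.horizon_scan;

  p.row = static_cast<int>(row);
  p.col = static_cast<int>(col);
  p.valid = true;
  return p;
}
```

With a sensor whose channels are not evenly spaced, the division by `ang_res_y` would assign points to the wrong rows or discard them. A custom projection for such a sensor would need a per-channel lookup of elevation angles instead.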

The IMU has been removed from the original code. Deal with it.

This dataset, the Stevens dataset, was captured on the Stevens Institute of Technology campus using a Velodyne VLP-16 mounted on a UGV (Clearpath Jackal). The VLP-16 rotation rate is set to 10 Hz. The dataset features over 20K scans and many loop closures.