Developers: Deekshita Saikia, Jaya Khan, Satvik Kishore

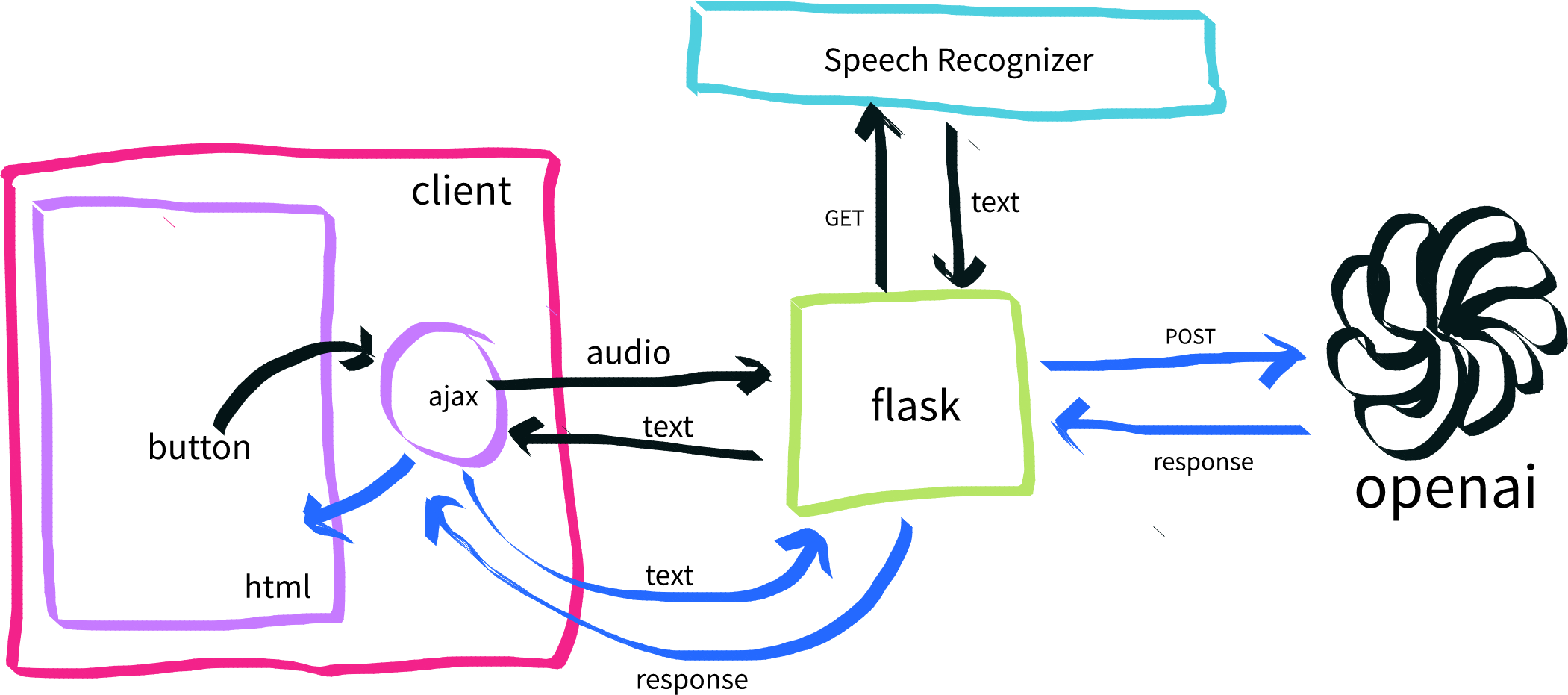

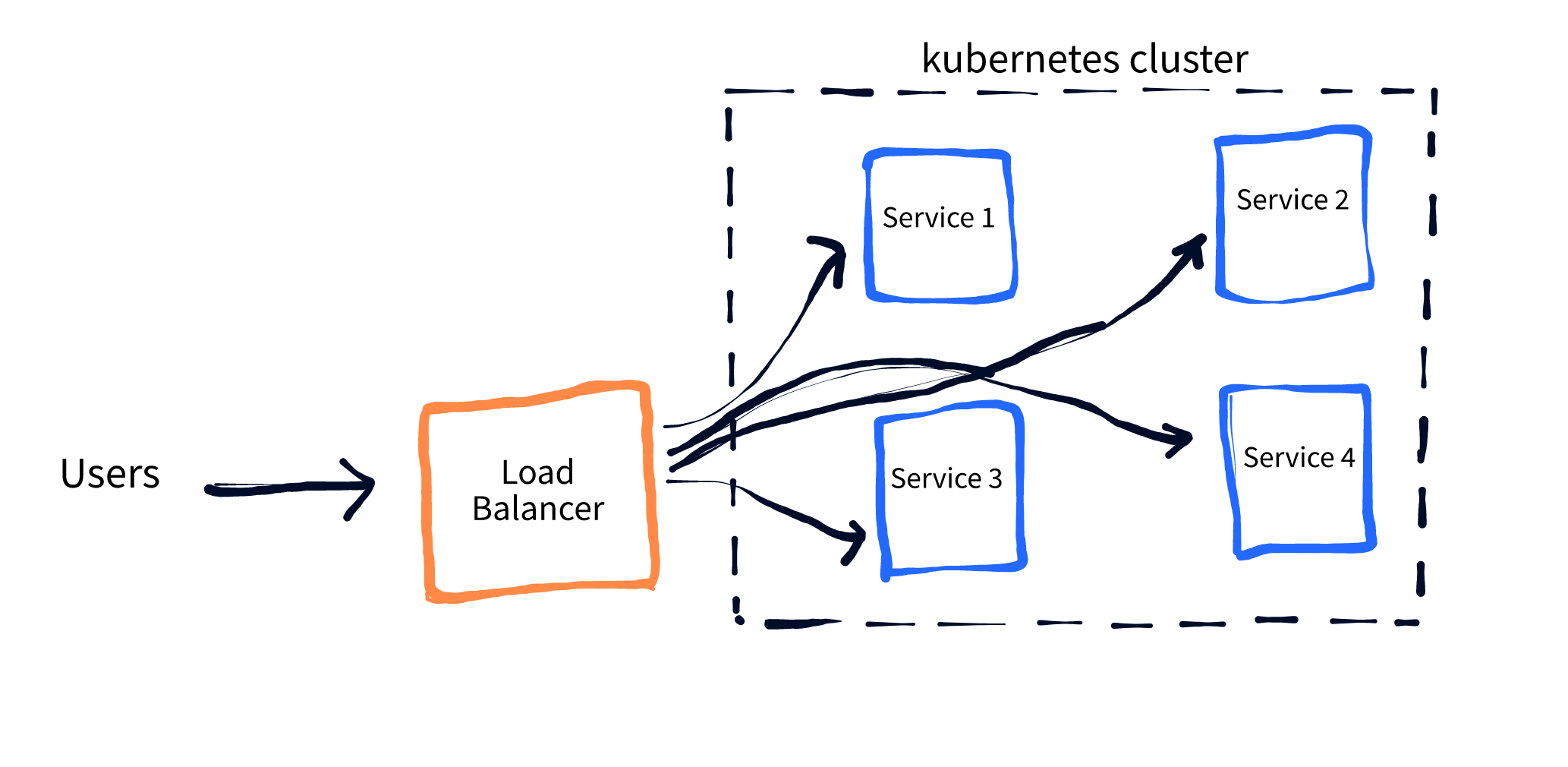

This project is a Flask application that uses SpeechRecognizer and the OpenAI API to build a voice chatbot. The chatbot responds to user input in natural language. It is designed to scale on Azure Kubernetes Service (AKS), and its scalability is load tested with Locust.

Before you start, make sure you have:

- Python 3.6 or higher

- OpenAI API key

- Docker

- Kubernetes command-line tool (kubectl)

- Azure command-line tool (Azure CLI)

To run the chatbot on your local machine, follow these steps:

- Clone the repository:

```bash
git clone https://github.com/YOUR_USERNAME/YOUR_REPO.git
cd YOUR_REPO
```

- Create a virtual environment and activate it:

```bash
python -m venv venv
source venv/bin/activate
```

- Install the required dependencies:

```bash
pip install -r requirements.txt
```

- Set your OpenAI API key as an environment variable:

```bash
export OPENAI_API_KEY=YOUR_API_KEY
```

Replace `YOUR_API_KEY` with your OpenAI API key.

- Run the app locally:

```bash
export FLASK_APP=app.py
flask run
```

By default, `flask run` serves the app at http://localhost:5000/. To use a different port, pass `--port` (for example, `flask run --port 8080`).
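The local setup passes the OpenAI key to the app through the `OPENAI_API_KEY` environment variable. As a minimal sketch (a hypothetical helper, not code from this repository; the project's `app.py` may read the key differently), the app could check for the variable at startup so that a missing key produces a clear error:

```python
import os


def require_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in `var_name`, or raise a descriptive error.

    Hypothetical helper for illustration only.
    """
    # Treat an unset variable and a whitespace-only value the same way.
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; run `export {var_name}=YOUR_API_KEY` before `flask run`."
        )
    return key
```

Calling `require_api_key()` once near the top of the app would surface the problem before the first request is served.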

To deploy the chatbot to an AKS cluster, follow these steps:

- Build the Docker image:

```bash
docker build -t YOUR_IMAGE_NAME .
```

Replace `YOUR_IMAGE_NAME` with a name for your Docker image.

- Push the Docker image to a registry:

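```bash
docker tag YOUR_IMAGE_NAME YOUR_REGISTRY_URL/YOUR_IMAGE_NAME
docker push YOUR_REGISTRY_URL/YOUR_IMAGE_NAME
```

Replace `YOUR_REGISTRY_URL` with the URL of your Docker registry. If you have not logged in to the registry yet, run `docker login YOUR_REGISTRY_URL` first.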

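- Create an AKS cluster in your Azure account (for example, with `az aks create --resource-group YOUR_RESOURCE_GROUP --name YOUR_CLUSTER_NAME`), then run `az aks get-credentials --resource-group YOUR_RESOURCE_GROUP --name YOUR_CLUSTER_NAME` so that kubectl can connect to it. If your registry is private, the cluster also needs permission to pull the image.

- Deploy the app to AKS, after checking that the container image referenced in `azdeploy.yaml` is the one you pushed:

```bash
kubectl apply -f azdeploy.yaml
```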

- Access the app:

```bash
kubectl get service chatapp-front2 --watch
```

This prints the service's details and keeps watching for changes. Once the `EXTERNAL-IP` column shows an address instead of `<pending>`, press Ctrl+C and open that address in your web browser.
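To look up the address from a script instead of watching the output by hand, one option is to read the JSON form of `kubectl get service`. This is a sketch under the assumption that `chatapp-front2` is a `LoadBalancer` Service, whose assigned address appears under `status.loadBalancer.ingress`; `external_ip` and `fetch_external_ip` are hypothetical helpers, not part of this repository.

```python
import json
import subprocess
from typing import Optional


def external_ip(service_json: str) -> Optional[str]:
    """Return the LoadBalancer address from `kubectl get service -o json` output.

    Returns None while the cloud provider is still assigning an address.
    """
    service = json.loads(service_json)
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    if not ingress:
        return None
    # AKS reports an IP address; some other providers report a hostname instead.
    return ingress[0].get("ip") or ingress[0].get("hostname")


def fetch_external_ip(name: str = "chatapp-front2") -> Optional[str]:
    """Ask kubectl for the Service as JSON and extract its external address."""
    output = subprocess.check_output(
        ["kubectl", "get", "service", name, "-o", "json"],
        universal_newlines=True,  # return str rather than bytes (works on Python 3.6)
    )
    return external_ip(output)
```

For a one-off lookup, `kubectl get service chatapp-front2 -o jsonpath='{.status.loadBalancer.ingress[0].ip}'` prints the same field directly.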

To test the scalability of the chatbot, we use Locust, an open-source load testing tool. Follow these steps to run a load test:

- Install Locust:

```bash
pip install locust
```

- Run Locust:

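```bash
locust --host=http://<external-ip-address>
```

Replace `<external-ip-address>` with the external IP address of the chatbot service. By default, Locust reads its test scenarios from `locustfile.py` in the current directory, so run this command from the folder that contains it (or pass `-f` with the path to another file).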

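- Open the Locust web interface in your browser at http://localhost:8089/.

There you can set the number of simulated users and how quickly they are started, start the load test, and follow response times and failures as it runs.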

Contributions are welcome. Please open a pull request, and we will respond as soon as possible.